3D Mapping with Lidar + Camera

|

This post was updated on .

Hi, I was reading over this post:

http://official-rtab-map-forum.206.s1.nabble.com/RTAB-setup-with-a-3D-LiDAR-td1080.html#a1546 and thought it was perfect to try mapping with simply a lidar and a monocular camera. I'm coincidentally using the same Lidar as well, the Velodyne VLP-16. Since that post I see there is in fact a node now for making a depth topic from the camera and point cloud, the "rtabmap_ros/pointcloud_to_depthimage" node. I tried editing the minimalist launch file example at the very end of the older post to fit with my topics: Camera: RGB image -> /usb_cam/image_raw Camera: RGB Camera info -> /usb_cam/camera_info Lidar: Point cloud -> /velodyne_points (pointcloud2 message) But I'm coming across all kinds of issues if I try using the pointcloud_to_depthimage node by simply launching it in a different shell separate from the launch file mainly because I'm not very experienced with RTAB-Map. How can I incorporate the pointcloud_to_depthimage node into the launch file? I would really appreciate the help |

|

Administrator

|

This post was updated on .

Hi,

Try to generate a depth image alone. Similarly to Tango ROS Streamer tutorial, the node could be launched like this: $ rosrun rtabmap_ros pointcloud_to_depthimage \ cloud:=/velodyne_points \ camera_info:=/usb_cam/camera_info \ _decimation:=2 \ _fill_holes_size:=30You may have to play with decimation and fill_holes_size parameters to fill holes in the depth image. Note that this code is slightly different from the code that generated the image from this post which used this, while pointcloud_to_depthimage is using this (more general). The requirement you need is to have a static_transform_publisher publishing the transform between the lidar and the camera. For example if the camera is just over the lidar looking forward: $ rosrun tf static_transform_publisher 0 0 0.05 -1.57079 0 -1.57079 velodyne camera 100 cheers, Mathieu |

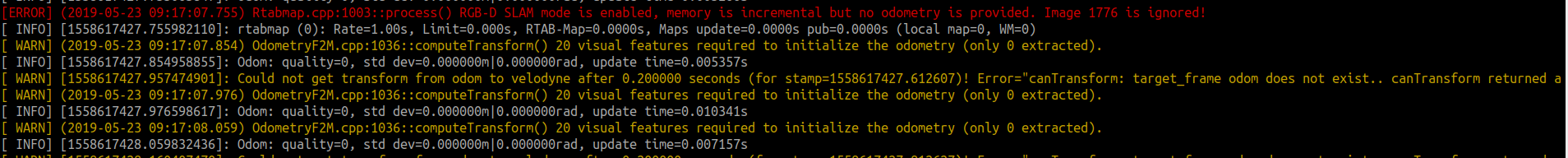

Thank you for the response! That's what I was looking for, some answer to defining the TF which turned out pretty simple. The image above shows a new warning I get when I launch the launch file. I don't have a base_link existing so I'm going over the TF course in robot ignite academy now. I should be able to get this working soon |

|

Administrator

|

When using approx=true (default), the fixed_frame_id should be set. In the example above, set fixed_frame_id to "velodyne":

$ rosrun rtabmap_ros pointcloud_to_depthimage \ cloud:=/velodyne_points \ camera_info:=/usb_cam/camera_info \ _decimation:=2 \ _fill_holes_size:=30 \ _fixed_frame_id:=velodyne cheers, Mathieu |

|

This post was updated on .

I'm now getting this on repeat when I launch. My TF tree is simply /velodyne and /usb_cam. Shouldn't there also be an /odom frame? I realize Could not get transform from odom to velodyne after 0.200000 seconds (for stamp=1558621071.290520)! Error="canTransform: target_frame odom does not exist.. canTransform returned after 0.200338 timeout was 0.2.". there is a line cut off in the photo: <launch> <include file="$(find rtabmap_ros)/launch/rtabmap.launch"> <arg name="frame_id" value="velodyne"/> <arg name="subscribe_scan_cloud" value="true"/> <arg name="rgb_topic" value="/usb_cam/image_raw" /> <arg name="depth_topic" value="/image_raw" /> <arg name="camera_info_topic" value="/usb_cam/camera_info" /> <arg name="scan_cloud_topic" value="/velodyne_points"/> <!-- Icp parameters based on KITTI dataset --> <arg name="rtabmap_args" value="--delete_db_on_start --Reg/Strategy 1 --Icp/MaxTranslation 0 --Icp/VoxelSize 0.3 --Icp/DownsamplingStep 10 --Icp/MaxCorrespondenceDistance 0.3 --Icp/Iterations 10 Icp/Epsilon 0.01 --Icp/CorrespondenceRatio 0.1 --Icp/PointToPlane true"/> <!-- Default visual-only odometry, Icp ROS interface is not yet implemented--> <arg name="odom_args" value="--Reg/Strategy 0"/> </include> </launch> Here is the launch file in case it helps. Edit: Okay so I found out the /image_raw topic from the pointcloud_to_depthimage node is publishing but its just publishing a blank image, no depth data, I'm trying to figure out why I'm not getting a good depth image. Lidar should be shooting out 300,000 points and my camera pixels total 307200 (640x480) so I assume my decimation should be 0? Thank you, ~Joel |

|

I got it working with a RealSense 435i camera so that I can just be given a depth image and its sweet! But I still want to do this with the monocular camera.

|

|

Administrator

|

Hi,

What does "/image_raw" look like in rqt_image_view? Can you record a small ros bag and share the link (dropbox, google drive...): $ rosbag record /usb_cam/image_raw /usb_cam/camera_info /velodyne_points While the velodyne gives 300 000 points, they are not all in the field of view of the camera. With a VLP-16 and not using hole filling parameters of pointcloud_to_depthimage, you would get only 16 lines in the image. cheers, Mathieu |

|

This post was updated on .

On rqt_image_view the /image_raw is just a black screen. In rostopic echo its just a whole bunch of zeros. Below is the rosbag, not for /image_raw but for the USB cam and velodyne topics

https://drive.google.com/file/d/1LHtwsXocaTcIvl2ynGuIitL0kmzj9CIa/view?usp=sharing Edit: I realize I didn't have a calibration file for my camera. I calibrated it, but and the situation is exactly the same. Still black image for /image_raw Thank you, Joel |

|

Hey sorry if google drive was blocking you from accessing the bag. I changed it to allow anyone on the web to access it.

|

|

Administrator

|

Hi,

the camera_info is not valid:

header:

seq: 724

stamp:

secs: 1558707652

nsecs: 722737664

frame_id: "usb_cam"

height: 480

width: 640

distortion_model: ''

D: []

K: [0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0]

R: [0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0]

P: [0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0]

binning_x: 0

binning_y: 0

roi:

x_offset: 0

y_offset: 0

height: 0

width: 0

do_rectify: False

---

At least K and P should be valid.

cheers, Mathieu |

«

Return to Official RTAB-Map Forum

|

1 view|%1 views

| Free forum by Nabble | Edit this page |