3D map based on laser + RGB

|

This post was updated on .

Dear People.

I do have a 3D point cloud based on a laser plus a RGB image. I am just wondering whether it might possible to construct a 3D map based on rtabmap_ros. If that is possible would you mind telling me or giving me an illustrative example with some code. Just to say that I found this link where they suggest to create a node depth image from rgb camera_info and Lidar data as follows: <node type="cloud_projection" ... <!-- inputs --> <--param name="camera_info_topic" value="/camera/rgb/camera_info" /> <--param name="cloud_topic" value="/lidar/cloud"/> <--param name="depth_topic" value="/camera/depth_registered/image_raw" /> </node> To be sincere I have no clue how to create this node :) maybe that node is already created but I cannt find it :) As far I can see in the code I may also need an odometry, shall be computed using ICP? Thank you

|

|

Administrator

|

This post was updated on .

Hi,

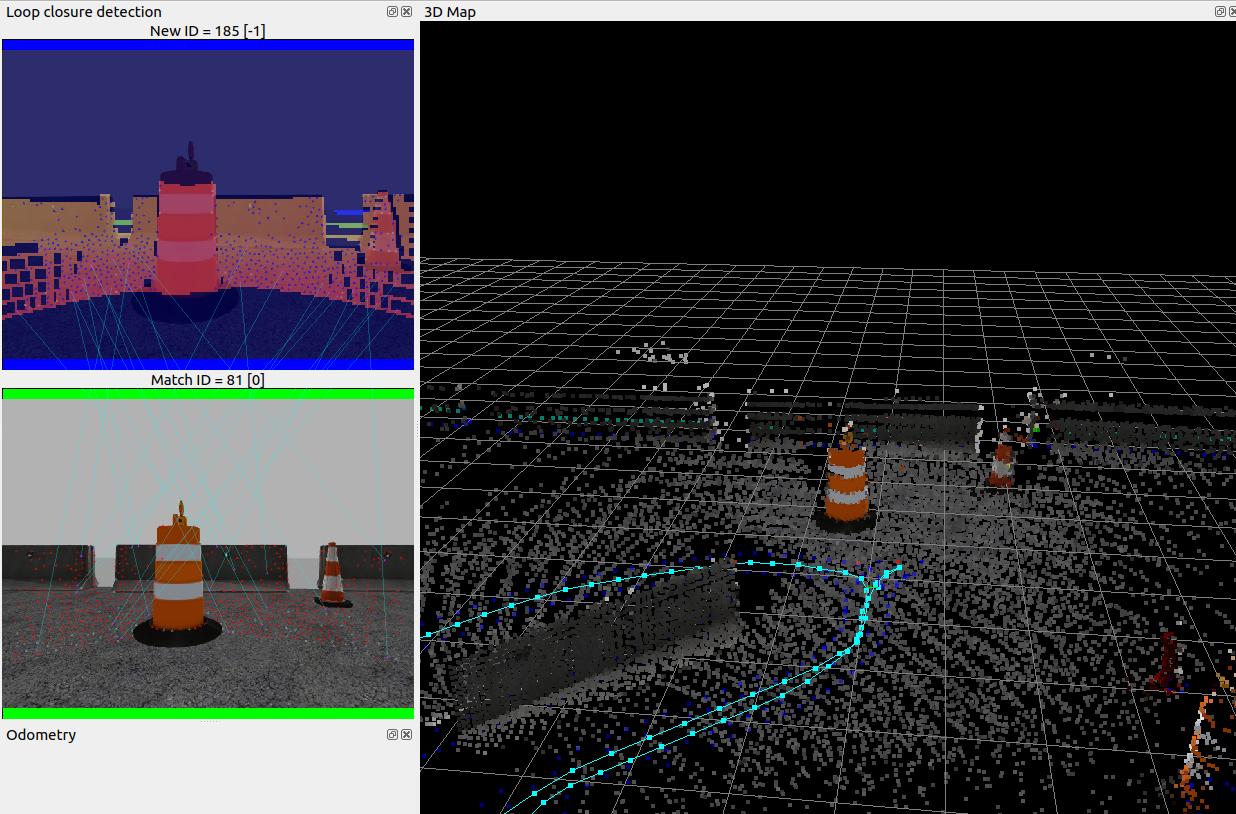

rtabmap has parameters to generate the depth image for you, taking care of the displacement of the RGB frame accordingly to odometry and lidar frames. I updated also the doc on ros wiki with those parameters. An external node for lidar registration to rgb camera is called "pointcloud_to_depthimage". If you want to reproduce the "fill to border" approach that you saw in the post you referred, you would need to use replace fillDepthHoles by fillProjectedCloudHoles. For pointcloud_to_depthimage node, fillDepthHoles is there. To compute odometry from 3D lidar, there is a demo_husky.launch file for reference. You can remove guess_frame_id if you don't have wheel odometry. Add subsribe_depth:=false to subscribe only to rgb if you use the first approach above. For convenience, I just added a new example to that demo_husky.launch file, here to generate depth image from 3D lidar. Here the RGB-D point cloud is actually created from lidar projection in RGB frame (we can see the generated depth image on top left):  cheers, Mathieu |

|

This post was updated on .

Hi Mathieu

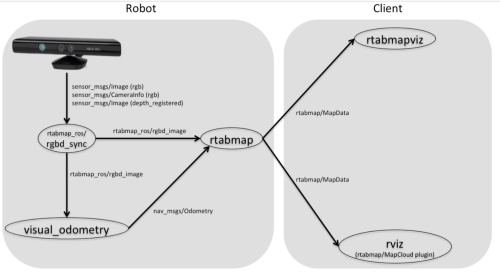

In advance thank you for your replay, a roslaunch implementation was implemented based on kinect as shown in the picture:  The following topics have been created: sensor_msgs/Image (rgb) sensor_msgs/CameraInfo (rgb) sensor_msgs/Image (depth_registered) Then the node depth_transforms_manager is used to create the scan_cloud to be feed to the rtabmap_ros node. I put the launch file:

<launch>

<node pkg="tf" type="static_transform_publisher" name="link1_broadcaster" args="0 0 0 0 0 0 camera_link camera_rgb_optical_frame 100" />

<arg name="rtabmapviz" default="true" />

<node pkg="img_rect_depth" type="img_rect_depth" name="img_rect_depth" output="screen"/>

<include file="$(find pcl_rgb_rtabmap)/launch/depth2depthcloud.launch"/>

<group ns="rtabmap">

<node pkg="nodelet" type="nodelet" name="rgbd_sync" args="standalone rtabmap_ros/rgbd_sync" output="screen">

<--param name="queue_size" type="string" value="30"/>

<remap from="rgb/image" to="/camera/rgb/image_rect_color"/>

<remap from="depth/image" to="/camera/depth_registered/image_raw"/>

<remap from="rgb/camera_info" to="/camera/rgb/camera_info"/>

<remap from="rgbd_image" to="rgbd_image"/> <!-- output -->

<!-- Should be true for not synchronized camera topics

(e.g., false for kinectv2, zed, realsense, true for xtion, kinect360)-->

<param name="approx_sync" value="true"/>

</node>

<!-- Odometry -->

<node pkg="rtabmap_ros" type="rgbd_odometry" name="rgbd_odometry" output="screen">

<--param name="Odom/ResetCountdown" type="string" value="20"/>

<--param name="subscribe_rgbd" type="bool" value="true"/>

<--param name="frame_id" type="string" value="camera_link"/>

<remap from="rgbd_image" to="rgbd_image"/>

</node>

<node pkg="rtabmap_ros" type="rtabmap" name="rtabmap" output="screen" args="-d">

<--param name="frame_id" type="string" value="camera_link"/>

<--param name="subscribe_depth" type="bool" value="false"/>

<--param name="subscribe_rgb" type="bool" value="false"/>

<--param name="subscribe_rgbd" type="bool" value="true"/>

<--param name="subscribe_scan_cloud" type="bool" value="true"/>

<param name="approx_sync" type="bool" value="true"/>

<!--remap from="scan_cloud" to="/camera/depth_registered/points"/-->

<remap from="scan_cloud" to="/pointcloud2/points"/>

<remap from="rgbd_image" to="rgbd_image"/>

<remap from="odom" to="odom"/>

<!-- RTAB-Map's parameters -->

<--param name="Grid/DepthMax" type="double" value="0"/>

<--param name="Grid/FromDepth" type="string" value="true"/>

<--param name="Grid/MaxObstacleHeight" type="string" value="5.0" />

<--param name="Odom/ResetCountdown" value="1" /> <!-- 1 reset the odometry -->

<--param name="RGBD/NeighborLinkRefining" type="string" value="true"/>

<--param name="RGBD/ProximityBySpace" type="string" value="true"/>

<--param name="RGBD/AngularUpdate" type="string" value="0.01"/>

<--param name="RGBD/LinearUpdate" type="string" value="0.01"/>

<--param name="RGBD/OptimizeFromGraphEnd" type="string" value="false"/>

<--param name="Grid/FromDepth" type="string" value="false"/>

<--param name="Reg/Force3DoF" type="string" value="true"/>

<--param name="Reg/Strategy" type="string" value="0"/> <!-- 1=ICP -->

<--param name="RGBD/OptimizeMaxError" type="string" value="0"/><!-- accept all loopclosures -->

<param name="odom_frame_id" type="string" value="odom"/>

<--param name="odom_tf_angular_variance" type="double" value="0.001"/>

<--param name="odom_tf_linear_variance" type="double" value="0.001"/>

</node>

</group>

</launch>

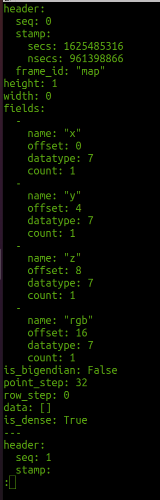

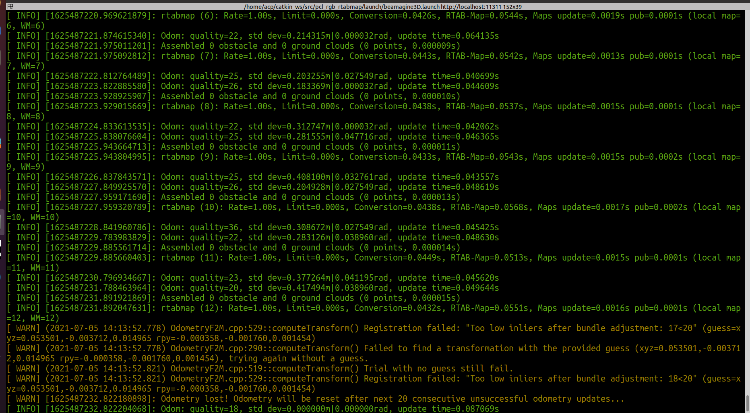

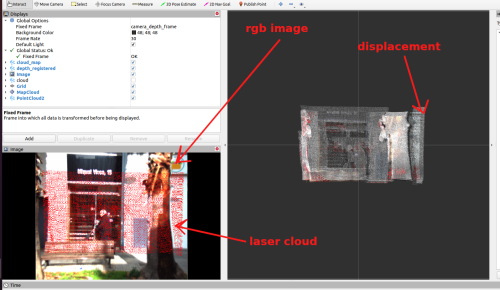

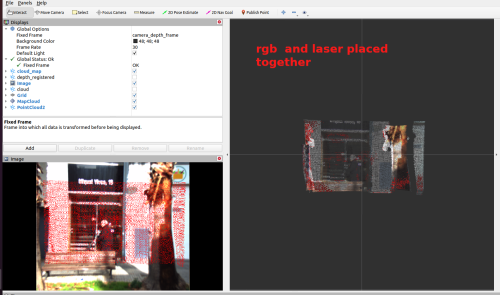

When the previous roslaunch file is launched the following topics are created. rtabmap_ros/rgbd_image nav_msgs/Odometry rtabmap/Mapcloud rtabmap/cloud_map However I do not see any data on rviz, and taking a look to the topic rtabmap/cloud_map the data field is empty.  Also, the output of the terminal is shown in the next figure.  I just want to mention that the rgb and the laser are of different sizes, the rgb is the size of (1224, 1024), however a zeros matrix have been created and stored the values of the laser, so bot images are the same size. In this context the pixel values are aligned with the laser point as shown in the picture, but when the rgb image is placed together with the laser topic from the depth_transforms_manager, there is a bit displacement, they are not 100% aligned. The following picture shows the previous:  And the following picture shows the following topics placed together. sensor_msgs/Image (rgb) sensor_msgs/Image (depth_registered)  The question arises; is it possible to integrate to certain level your solution to what I have mentioned above?. And, how to do it?

|

|

In reply to this post by matlabbe

Hi Mathieu I have tried to run the demo_husky with the following command and the following topics roslaunch pcl_rgb_rtabmap demo_husky.launch lidar3d:=true slam2d:=false camera:=true icp_odometry:=true depth_from_lidar:=true rtabmapviz:=true <arg name="rgb_topic" value="/camera/rgb/image_rect_color" /> <arg name="camera_info_topic" value="/camera/rgb/camera_info" /> <arg name="depth_topic" value="/camera/depth_registered/image_raw" /> I get the following warning and the algorithm does not run as it should be. odometry: waiting imu (/imu/data) to initialize orientation (wait_imu_to_init=true) /rtabmap/rtabmap subscribed to (approx sync): /rtabmap/odom \ /rtabmap/rgbd_image \ rtabmap/rtabmapviz subscribed to (approx sync): /rtabmap/odom \ /rtabmap/rgbd_image \ /rtabmap/odom_filtered_input_scan I have no clue how to solve it, looking forward to your replay Thanks |

|

Administrator

|

Hi,

are you using rtabmap binaries or from source? Just tried from source and this should work: export HUSKY_URDF_EXTRAS=$(rospack find rtabmap_ros)/launch/config/husky_velodyne_extra.urdf.xacro roslaunch husky_gazebo husky_playpen.launch realsense_enabled:=true roslaunch rtabmap_ros demo_husky.launch lidar3d:=true slam2d:=false camera:=true icp_odometry:=true depth_from_lidar:=true rtabmapviz:=true Not sure what does depth_transforms_manager do? The laser is created from what kind of sensor? Is it a 3D LiDAR? When you have a registered depth image to RGB, you don't need to subscribe to scan_cloud, unless you want to keep the raw scan for comparison. |

«

Return to Official RTAB-Map Forum

|

1 view|%1 views

| Free forum by Nabble | Edit this page |