About Visual slam with lidar

|

http://wiki.ros.org/rtabmap_ros/Tutorials/SetupOnYourRobot I have a question about the combination at the top of this page. Does this combine visual SLAM with lidar's sensor information? Or is it Visual SLAM combination of a and Lidar SLAM?

Sorry for the confusing explanation, but the point is that I want to know how Lidar is handled. Is it being used as a sensor, or as SLAM? Please let me know. |

|

Administrator

|

Hi,

On the top configuration of that page, we use 1) wheel odometry for odometry, 2) then feed RGB-D images for loop closure detection and 3) 2D lidar for odometry and loop closure refining (ICP) and 2d occupancy grid. |

|

This post was updated on .

Thank you. Am I correct in understanding that this is creating a 2d occupancy grid with Lidar only?

It is not possible to create a 2d occupancy grid from both camera and Lidar information? |

|

Administrator

|

Hi,

First question yes, in that configuration. For the second question, it is one or the other, you can set Grid/FromDepth to switch between lidar and camera. Note that using the lidar-only for occupancy grid, it is still possible to avoid 3D obstacles during navigation by feeding camera cloud to local costmap of move_base. Global plans are done in the clean lidar map, and navigation is using both, which is actually my favorite config. Actually, in latest rtabmap version, Grid/FromDepth parameter is now called Grid/Sensor and camera-lidar config is supported. If * Grid/Sensor=0, map is created from lidar, * Grid/Sensor=1, map is created from depth image * Grid/Sensor=2, map is created from both lidar and depth image cheers, Mathieu |

|

Thanks for the reply!

I see that the one I am using has Grid/FromDepth, which means that I can only select from either lidar or camera. So if I use the latest version, I can create a map that reflects the sensor information from both? Where can I get that?" If I install it from "sudo apt install ros-melodic-rtabmap-ros", can I get the latest version and use Grid/Sensor? |

|

Administrator

|

Hi,

yes, just update the binaries, 0.20.16 has the new parameter. |

|

Is there any sample code somewhere that actually uses Grid/Sensor? I'd like to see an example of its use if there is one. Thanks in advance.

|

|

Administrator

|

Hi,

Grid/FromDepth=false is Grid/Sensor=0 Grid/FromDepth=true is Grid/Sensor=1 For Grid/Sensor=2, you can change Grid/FromDepth from this demo launch file to Grid/Sensor=2. Then if we launch like this (with demo_mapping.bag):

roslaunch rtabmap_ros demo_robot_mapping.launch \

rviz:=true \

rtabmapviz:=false \

rtabmap_args:="-d \

--Grid/MaxObstacleHeight 1.5 \

--Grid/RayTracing true \

--Grid/3D false"

rosbag play --clock demo_mapping.bag

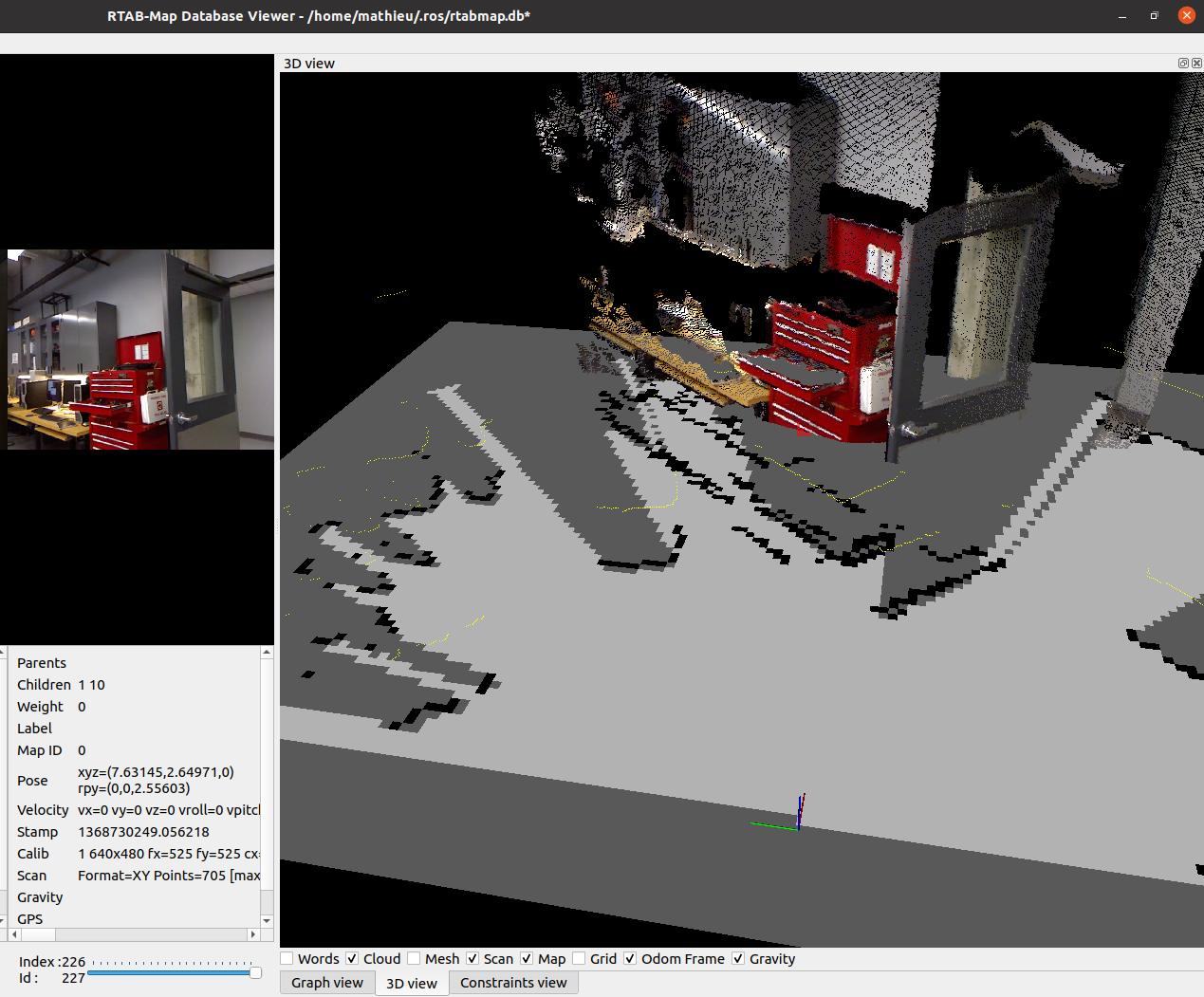

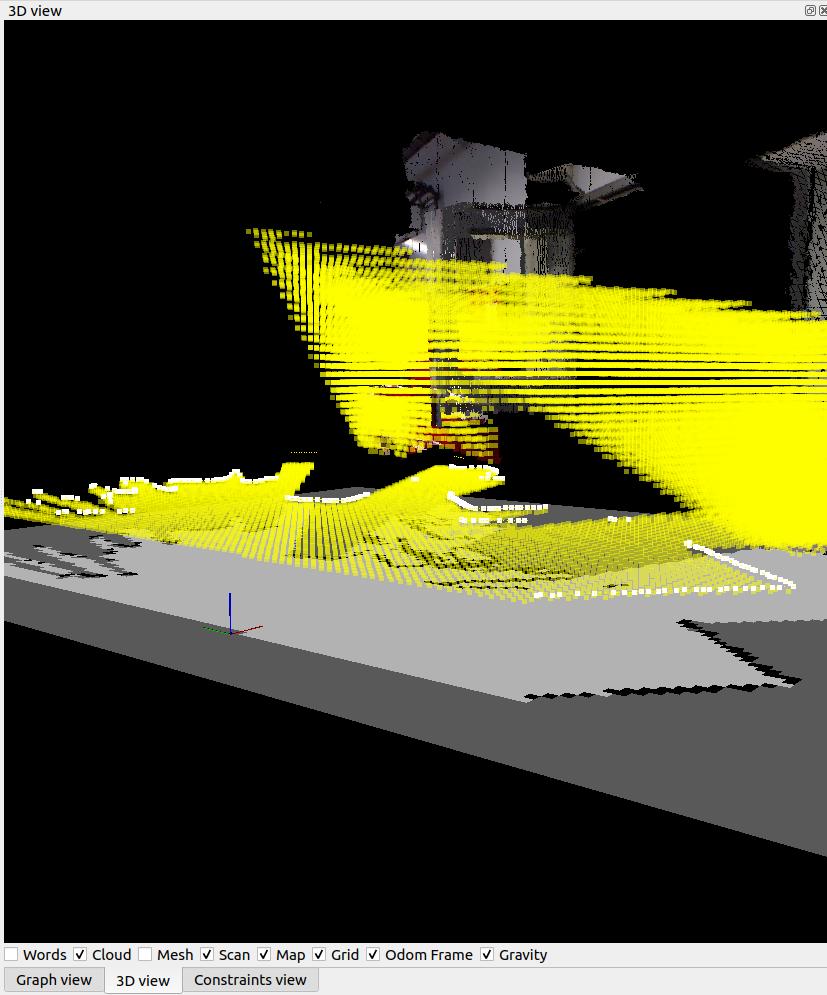

We can inspect the database afterwards in rtabmap-databaseViewer to see how the local grids are created. Here we can see the combination of lidar (yellow) and camera depth used to create obstacle cells.  In particular, the table is not seen by the lidar, only the edge by the camera, and a line of obstacles is added. To make sure that lidar doesn't clear obstacles added by the camera, we would have to use Grid/3D (at cost of more computation) and make sure rtabmap is built with OctoMap, then as ray tracing will be done in 3D, normally the 2d lidar won't be able to clear 3d obstacles of the camera not seen by lidar. With Grid/3D=true, we can see ray tracing from both sensors:  cheers, Mathieu |

|

Thank you. I'll give it a try.

|

|

This post was updated on .

In reply to this post by matlabbe

I have updated to the latest version of rtabmap. Then I set Grid/Sensor=2 and it throws up an error and I cannot create the map. I was able to do it in the demo as you taught above, but when I did Grid/Sensor = 2 in the simulation with my robot, the map was not generated.

I also get the following error (this was also in earlier versions), is it safe to ignore this? " [pcl::IterativeClosestPoint::computeTransformation] Not enough correspondences found. relax your threshold parameters. " |

|

Administrator

|

what error? For the pcl error, it cannot do scan matching (it is not related to Grid/Sensor, but on Reg/Strategy>=1 or if you use icp_odometry), not enough correspondences. You could increase Icp/MaxCorrespondenceDistance. |

|

(1)When Grid/Sensor=0,1, the map can be created without any problem, but only when Grid/Sensor=2, the following error occurs and the map is not created.

[ WARN]util3d.cpp:605::cloudFromDepthRGB() Cloud with only NaN values created! OpenCV Error: Assertion failed (src[i].dims <= 2 && src[i].rows == src[0].rows && src[i].type() == src[0].type()) in hconcat, file /build/opencv-L2vuMj/opencv-3.2.0+dfsg/modules/core/src/matrix.cpp, line 2865 terminate called after throwing an instance of 'cv::Exception' what(): /build/opencv-L2vuMj/opencv-3.2.0+dfsg/modules/core/src/matrix.cpp:2865: error: (-215) src[i].dims <= 2 && src[i].rows == src[0].rows && src[i].type() == src[0].type() in function hconcat (2)Can I add together a map created by rtabmap and a map created by gmapping? If it is possible, I would like to do something like rtabmap(visual slam)+gmapping(lidar slam). Is there a way to do this? |

|

Administrator

|

Hi,

1) Can you rerun rtabmap node with --udebug argument? I suspect it is creahsing in the cv::hconcat here. However, based on the check if they are empty, it should not happen. The debug log may help to understand which line is problematic. 2) It is not possible. For more info, you are dealing with two different SLAM approaches, if they don't detect and close loop closures exactly the same way, the two maps will drift from each other. |

|

This post was updated on .

(1) Does that mean you want to run this command?

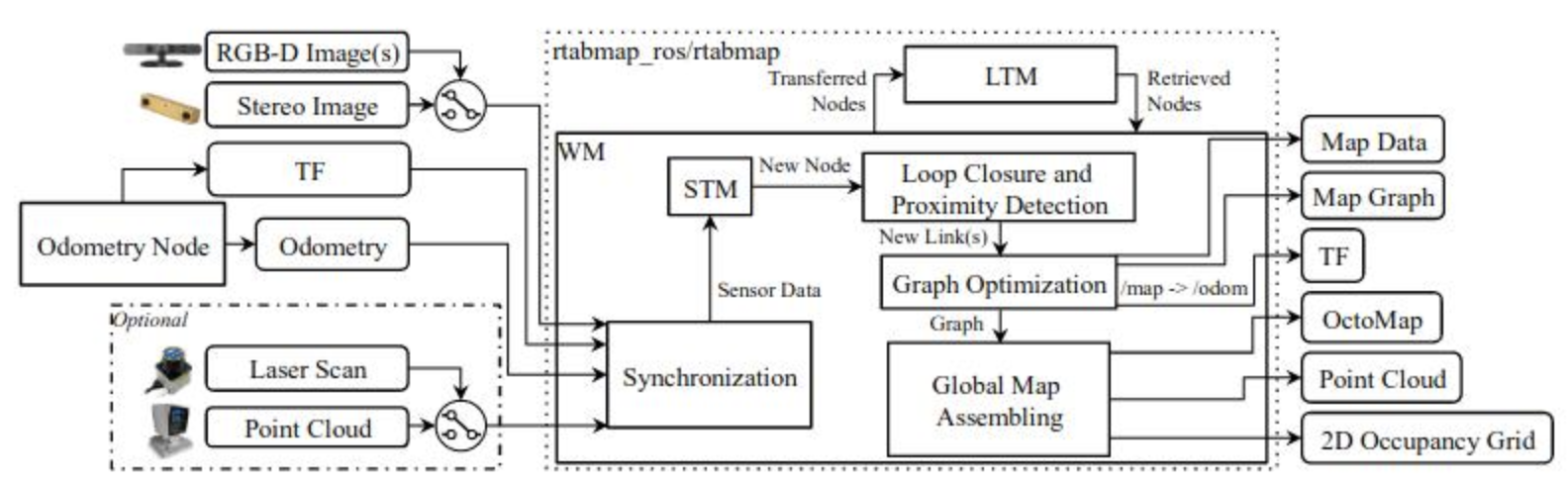

"roslaunch () () --rtabmap_args:=--udebag " (2) Thanks Is there a more detailed flowchart etc. than this picture? This is the input of the sensor used for mapping, the input of the sensor used for loop closer, etc.

|

|

Administrator

|

1. yes you can add "--udebug" in rtabmap_args, or call "rosservice call /rtabmap/rtabmap/log_debug" to enable debug log.

2. You may check this powerpoint too, but there are no detailed schemas of every inputs/outputs of each module. You would have to read the corresponding paragraphs of the modules shown in the Figure 1 of the related paper. If you are interested on which exact topic used under ros, you can look at the schemas on this page, or even launch rqt_graph when launching rtabmap. cheers, Mathieu |

«

Return to Official RTAB-Map Forum

|

1 view|%1 views

| Free forum by Nabble | Edit this page |