(Bad 3D map) robot_localization sensor fusion of IMU and odometry

|

Dear all,

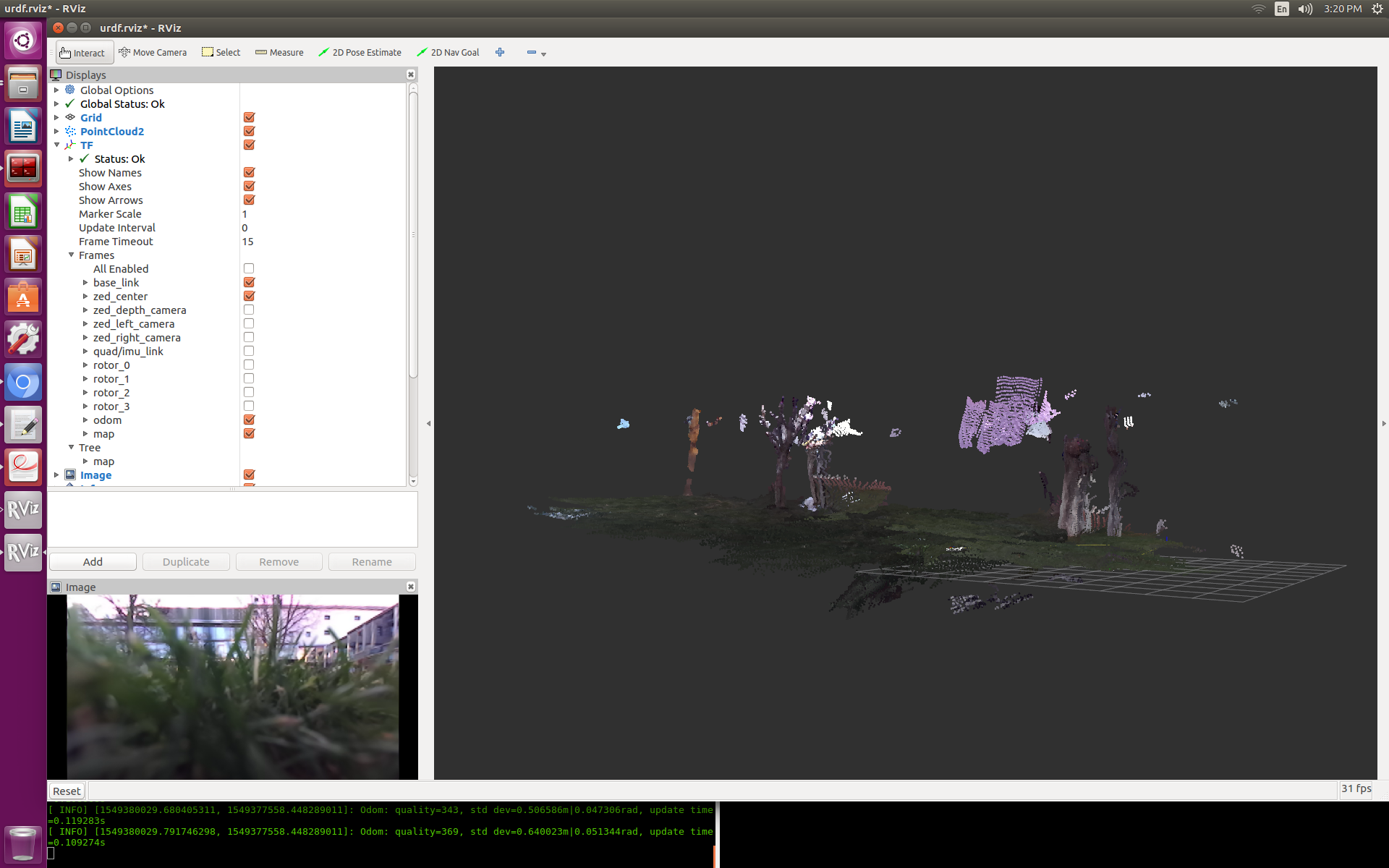

I am using the following sensor_fusion launch file, https://github.com/introlab/rtabmap_ros/blob/master/launch/tests/sensor_fusion.launch, to fuse the IMU data from /mavros/imu/data with the odometry from rtabmap's RGB odometry. I have adapted the launch file to the topic names I get from the ZED camera, and I have recorded a rosbag. When I play the rosbag and run sensor_fusion.launch, I get a map that is not good, as you can see in the picture.

When I run it, I get two warnings:

publishMaps() Graph has changed! The whole cloud is regenerated
Registration failed: "Not enough inliers 0/20 (matches=15) between -1 and 73"

Maybe these warnings are related to the bad map. I can post my code if it helps. Thank you.

|

Administrator

|

Hi,

If you can share the resulting rtabmap database, it would be easier to see whether it is an odometry problem or a mapping problem.

Cheers, Mathieu

|

Hi Mathieu

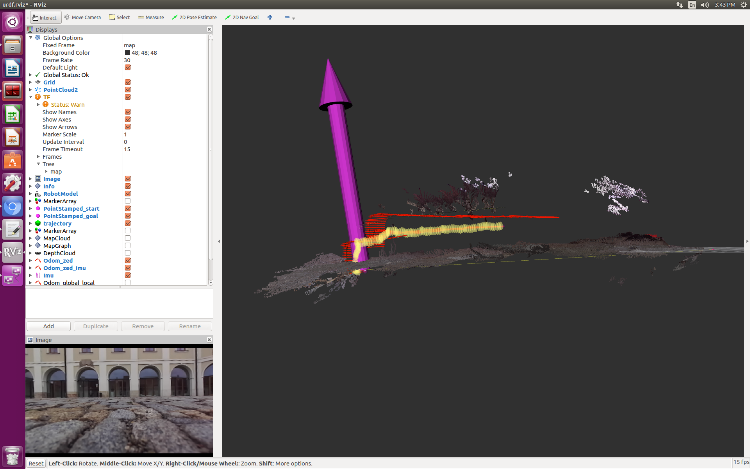

I have now fused the IMU with VO to get /odometry/filtered. I am sharing the picture as well as the rtabmap database; I think it is the one located at ~/.ros/rtabmap.db, right? link to the rtabmap.db

The yellow path, together with the arrows, is the IMU, and the red one, above the yellow path, is /stereo_camera/odom. These two paths are shifted.

I also have a question: how can I convert NED to ENU in mavros? When I plot the ROS and Pixhawk coordinates, they are rotated and shifted. Thank you :)

|

Administrator

|

Hi,

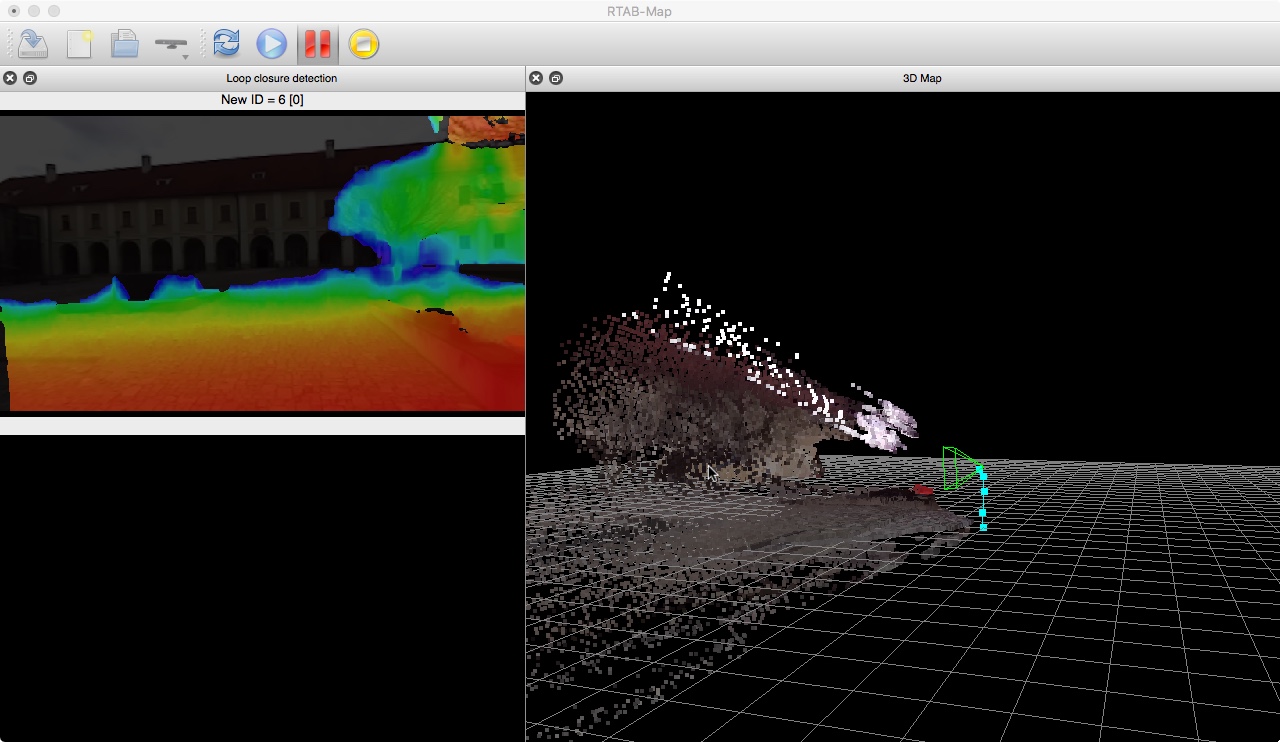

The depth image seems strange to me: it looks like there is a maximum depth. The depth image also looks quite noisy in the generated 3D point cloud. Can you try the stereo version of rtabmap with the left/right images (subscribe_stereo:=true)? For /odometry/filtered, I cannot really comment, as it is independent of rtabmap. For the NED->ENU conversion, you may look at this post: https://github.com/mavlink/mavros/issues/49

Cheers, Mathieu

|

Hi

As soon as I have results from the stereo version of rtabmap using the left/right images (subscribe_stereo:=true), I will post them. Concerning the NED->ENU conversion and this post, https://github.com/mavlink/mavros/issues/49: I have taken a look, but it is not clear to me how to set it up in a mavros launch file. Do you have an example or a snippet of a mavros launch file? Thank you.
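As a rough illustration of what a NED->ENU conversion involves (this is not a mavros launch option), the sketch below shows the axis swap for a position vector and the matching heading change. As far as I know, mavros already applies this kind of conversion internally for its ROS topics, so a sketch like this is mainly useful when comparing data logged in the Pixhawk's native NED frame against ROS ENU data. The function names are hypothetical.

```python
import math


def ned_to_enu_vector(north: float, east: float, down: float) -> tuple:
    """Map a NED (north, east, down) vector to ENU (east, north, up).

    ENU x is NED y, ENU y is NED x, and ENU z is the negated NED z.
    """
    return (east, north, -down)


def ned_yaw_to_enu_yaw(yaw_ned: float) -> float:
    """Convert a heading in radians from the NED to the ENU convention.

    NED yaw is measured from north, clockwise (positive towards east);
    ENU yaw is measured from east, counter-clockwise (positive towards north).
    The result is wrapped to [-pi, pi].
    """
    yaw_enu = math.pi / 2.0 - yaw_ned
    # Wrap the angle so headings stay in a single canonical range.
    return math.atan2(math.sin(yaw_enu), math.cos(yaw_enu))


if __name__ == "__main__":
    # A point 10 m north, 2 m east and 3 m below the origin in NED.
    print(ned_to_enu_vector(10.0, 2.0, 3.0))
    # Facing east in NED (yaw = pi/2) corresponds to yaw = 0 in ENU.
    print(ned_yaw_to_enu_yaw(math.pi / 2.0))
```

Orientation quaternions need more care than this (the body axes also change from forward-right-down to forward-left-up), which is why relying on the conversion mavros already performs is usually simpler.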

|

Hi Mathieu

I have tried running the stereo version of rtabmap with the left/right images (subscribe_stereo:=true), but I do not get any cloud. Sometimes I get the following warnings while playing the bag and running the launch file:

[ WARN] (2019-02-13 10:47:51.015) OdometryF2M.cpp:469::computeTransform() Registration failed: "Not enough inliers 10/20 (matches=35) between -1 and 231"
[ WARN] (2019-02-13 10:47:51.017) OdometryF2M.cpp:258::computeTransform() Failed to find a transformation with the provided guess (xyz=0.177359,-0.015194,0.035369 rpy=0.012964,-0.060532,-0.031622), trying again without a guess.
Transform from fcu to base_link was unavailable for the time requested. Using latest instead.

Bag file: http://www.fit.vutbr.cz/~plascencia/left_right_ned_enu.bag
Frames tree: http://www.fit.vutbr.cz/~plascencia/frames_fcu.pdf

1. Launch file for the rosbag: zed_stereoB_fusion_bag_play.launch
2. With this launch file, the rosbag works: zed_visual_odom_true_bag_play.launch. Here is the map, which is not so bad.

Thank you, and looking forward to your reply.

|

Administrator

|

Hi,

I cannot test the IMU/fusion part, as some launch files are missing. I could test the rtabmap VO part: it seems to work correctly, except for a huge drift on take-off, which makes the whole map tilt. I tried the stereo mode of rtabmap, but the right camera_info of the ZED seems incorrect (which makes the clouds and the VO poses very small). The Tx (baseline) is wrong (note the -0.04010362923145294):
header:

  seq: 114
  stamp:
    secs: 1550050594
    nsecs: 135220352
  frame_id: "zed_left_camera"
height: 376
width: 672
distortion_model: "plumb_bob"
D: [0.0, 0.0, 0.0, 0.0, 0.0]
K: [334.4449462890625, 0.0, 350.9258728027344, 0.0, 334.4449462890625, 190.33578491210938, 0.0, 0.0, 1.0]
R: [1.0, 0.0, 0.0, 0.0, 1.0, 0.0, 0.0, 0.0, 1.0]
P: [334.4449462890625, 0.0, 350.9258728027344, -0.04010362923145294, 0.0, 334.4449462890625, 190.33578491210938, 0.0, 0.0, 0.0, 1.0, 0.0]
binning_x: 0
binning_y: 0
roi:
  x_offset: 0
  y_offset: 0
  height: 0
  width: 0
  do_rectify: False

Here is an example with my ZED:
header:
  seq: 238
  stamp:
    secs: 1551112002
    nsecs: 133999296
  frame_id: "zed_right_camera_optical_frame"
height: 376
width: 672
distortion_model: "plumb_bob"
D: [0.0, 0.0, 0.0, 0.0, 0.0]
K: [331.7145690917969, 0.0, 352.4257507324219, 0.0, 331.7145690917969, 189.7296142578125, 0.0, 0.0, 1.0]
R: [1.0, 0.0, 0.0, 0.0, 1.0, 0.0, 0.0, 0.0, 1.0]
P: [331.7145690917969, 0.0, 352.4257507324219, -39.805747985839844, 0.0, 331.7145690917969, 189.7296142578125, 0.0, 0.0, 0.0, 1.0, 0.0]
binning_x: 0
binning_y: 0
roi:
  x_offset: 0
  y_offset: 0
  height: 0
  width: 0

  do_rectify: False

There may be a TF missing to rotate the camera into ROS coordinates in stereo mode. Look at this example:

$ export ROS_NAMESPACE=stereo_camera
$ roslaunch zed_wrapper zed_camera.launch publish_tf:=false resolution:=3
$ rosrun tf static_transform_publisher 0 0 0 -1.5707963267948966 0 -1.5707963267948966 camera_link zed_left_camera_optical_frame 100
$ roslaunch rtabmap_ros rtabmap.launch rtabmap_args:="--delete_db_on_start --Vis/CorFlowMaxLevel 5 --Stereo/MaxDisparity 200" right_image_topic:=/stereo_camera/right/image_rect_color stereo:=true

Also, when using rtabmap.launch in a launch file, set the parameters in the rtabmap_args argument as in the example above.

Cheers, Mathieu
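To make the Tx issue concrete: in the ROS sensor_msgs/CameraInfo convention, the left (first) camera has Tx = 0 and, for the right camera of a horizontal stereo pair, P[3] = Tx = -fx * baseline. The baseline in meters can therefore be recovered as -P[3] / P[0]. A small sketch using the two right-camera P matrices posted in this thread (the helper name is hypothetical):

```python
def stereo_baseline_m(P: list) -> float:
    """Return the stereo baseline in meters from a CameraInfo P matrix.

    P is the row-major 12-element list from sensor_msgs/CameraInfo.
    For the right camera, P[3] = Tx = -fx * baseline and P[0] = fx.
    """
    fx = P[0]
    tx = P[3]
    if fx == 0.0:
        raise ValueError("fx (P[0]) must be non-zero")
    return -tx / fx


# Right camera_info from the bag (suspicious Tx).
P_bag = [334.4449462890625, 0.0, 350.9258728027344, -0.04010362923145294,
         0.0, 334.4449462890625, 190.33578491210938, 0.0,
         0.0, 0.0, 1.0, 0.0]
# Right camera_info from the reference ZED.
P_ref = [331.7145690917969, 0.0, 352.4257507324219, -39.805747985839844,
         0.0, 331.7145690917969, 189.7296142578125, 0.0,
         0.0, 0.0, 1.0, 0.0]

if __name__ == "__main__":
    print("bag baseline [m]:", stereo_baseline_m(P_bag))
    print("ref baseline [m]:", stereo_baseline_m(P_ref))
```

With these numbers, the reference camera gives roughly 0.12 m, while the bag gives roughly 0.00012 m, about 1000 times smaller. Since stereo depth is proportional to the baseline, this is consistent with the very small clouds and VO poses described above.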

|

Hi Mathieu

Thank you very much for your reply. I can see that in both cameras (left/right) the Tx (baseline) is wrong. I am not using the latest driver; I use the one from ZED SDK version 2.2, and that driver comes with a ZED URDF, zed_test.xacro, which contains this transformation:

<joint name="zed_depth_camera_joint" type="fixed">
  <parent link="zed_left_camera"/>
  <child link="zed_depth_camera"/>
  <origin xyz="0 0 0" rpy="-1.5707963267948966 0 -1.5707963267948966"/>
</joint>

So I do not know where to set the transformation between the frames camera_link and zed_left_camera_optical_frame:

static_transform_publisher 0 0 0 -1.5707963267948966 0 -1.5707963267948966 camera_link zed_left_camera_optical_frame 100

I have:

/stereo_camera/right/camera_info
header:

  seq: 213
  stamp:
    secs: 1551095985
    nsecs: 672814496
  frame_id: "zed_left_camera"
height: 376
width: 672
distortion_model: "plumb_bob"
D: [0.0, 0.0, 0.0, 0.0, 0.0]
K: [334.4773864746094, 0.0, 350.92645263671875, 0.0, 334.4773864746094, 190.33334350585938, 0.0, 0.0, 1.0]
R: [1.0, 0.0, 0.0, 0.0, 1.0, 0.0, 0.0, 0.0, 1.0]
P: [334.4773864746094, 0.0, 350.92645263671875, -0.040107518434524536, 0.0, 334.4773864746094, 190.33334350585938, 0.0, 0.0, 0.0, 1.0, 0.0]
binning_x: 0
binning_y: 0
roi:
  x_offset: 0
  y_offset: 0
  height: 0
  width: 0
  do_rectify: False

rostopic echo /stereo_camera/left/camera_info
header:
  seq: 1554
  stamp:
    secs: 1551096071
    nsecs: 641753856
  frame_id: "zed_left_camera"
height: 376
width: 672
distortion_model: "plumb_bob"
D: [0.0, 0.0, 0.0, 0.0, 0.0]
K: [334.4773864746094, 0.0, 350.92645263671875, 0.0, 334.4773864746094, 190.33334350585938, 0.0, 0.0, 1.0]
R: [1.0, 0.0, 0.0, 0.0, 1.0, 0.0, 0.0, 0.0, 1.0]
P: [334.4773864746094, 0.0, 350.92645263671875, 0.0, 0.0, 334.4773864746094, 190.33334350585938, 0.0, 0.0, 0.0, 1.0, 0.0]
binning_x: 0
binning_y: 0
roi:
  x_offset: 0
  y_offset: 0
  height: 0
  width: 0
  do_rectify: False

Any suggestions?

|

Administrator

|

I am not sure I understand that joint:

<joint name="zed_depth_camera_joint" type="fixed">
  <parent link="zed_left_camera"/>
  <child link="zed_depth_camera"/>
  <origin xyz="0 0 0" rpy="-1.5707963267948966 0 -1.5707963267948966"/>
</joint>

as I would assume that both the left and depth images are in the same frame. The TF /camera_link -> /zed_left_camera_optical_frame comes from the stereo example here, which starts the ZED launch file with publish_tf:=false. I tried the two other configurations on the RGB-D example page, and they should also still work with the latest ZED ROS wrapper.

Cheers, Mathieu

|

Hi Mathieu

Well, the thing is that I am using ZED SDK 2.2, not the latest version (2.7, I guess :)), and in zed_camera.launch I only have:

<arg name="base_frame" default="zed_center" />

<arg name="camera_frame" default="zed_left_camera" />

<arg name="depth_frame" default="zed_depth_camera" />

Where can I find, or how can I set, /camera_link and /zed_left_camera_optical_frame? The URDF camera model is zed_test.xacro. I can see that in the new ZED ROS package the following frames are defined:

<arg name="base_frame" default="zed_camera_center" />

<arg name="left_camera_frame" default="zed_left_camera_frame" />

<arg name="left_camera_optical_frame" default="zed_left_camera_optical_frame" />

<arg name="right_camera_frame" default="zed_right_camera_frame" />

<arg name="right_camera_optical_frame" default="zed_right_camera_optical_frame" />

I cannot update to the new driver, because I need to keep working with ZED SDK 2.2 due to compatibility problems. Any clue?

|

Administrator

|

Record a rosbag with the ZED TF and the left/right images, and I may check whether there is something obvious. Your other bags with RGB/depth look fine, though.

|

|

Hi Mathieu

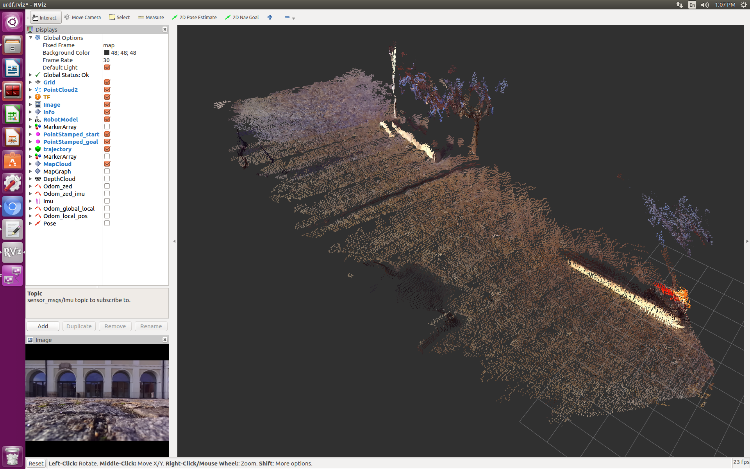

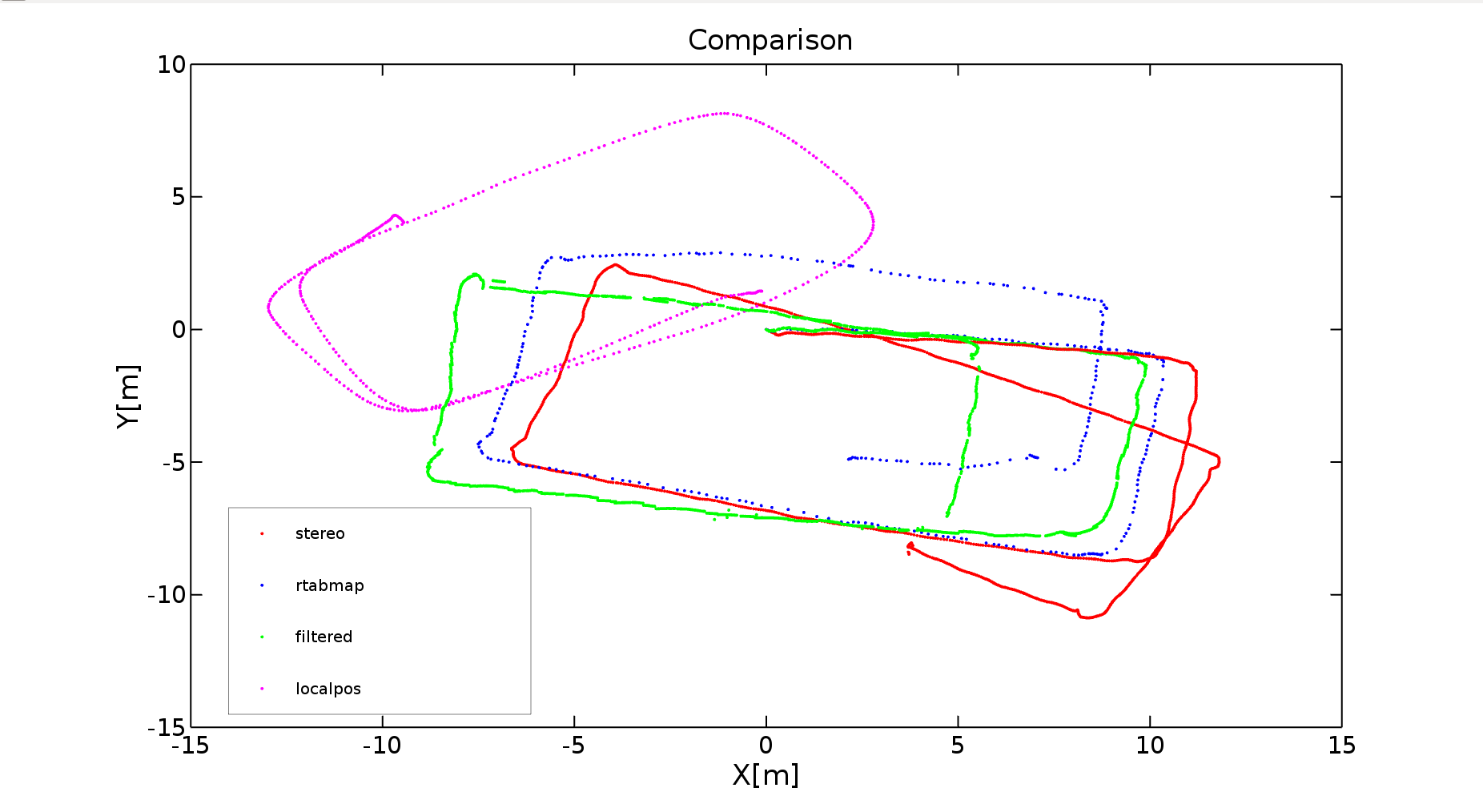

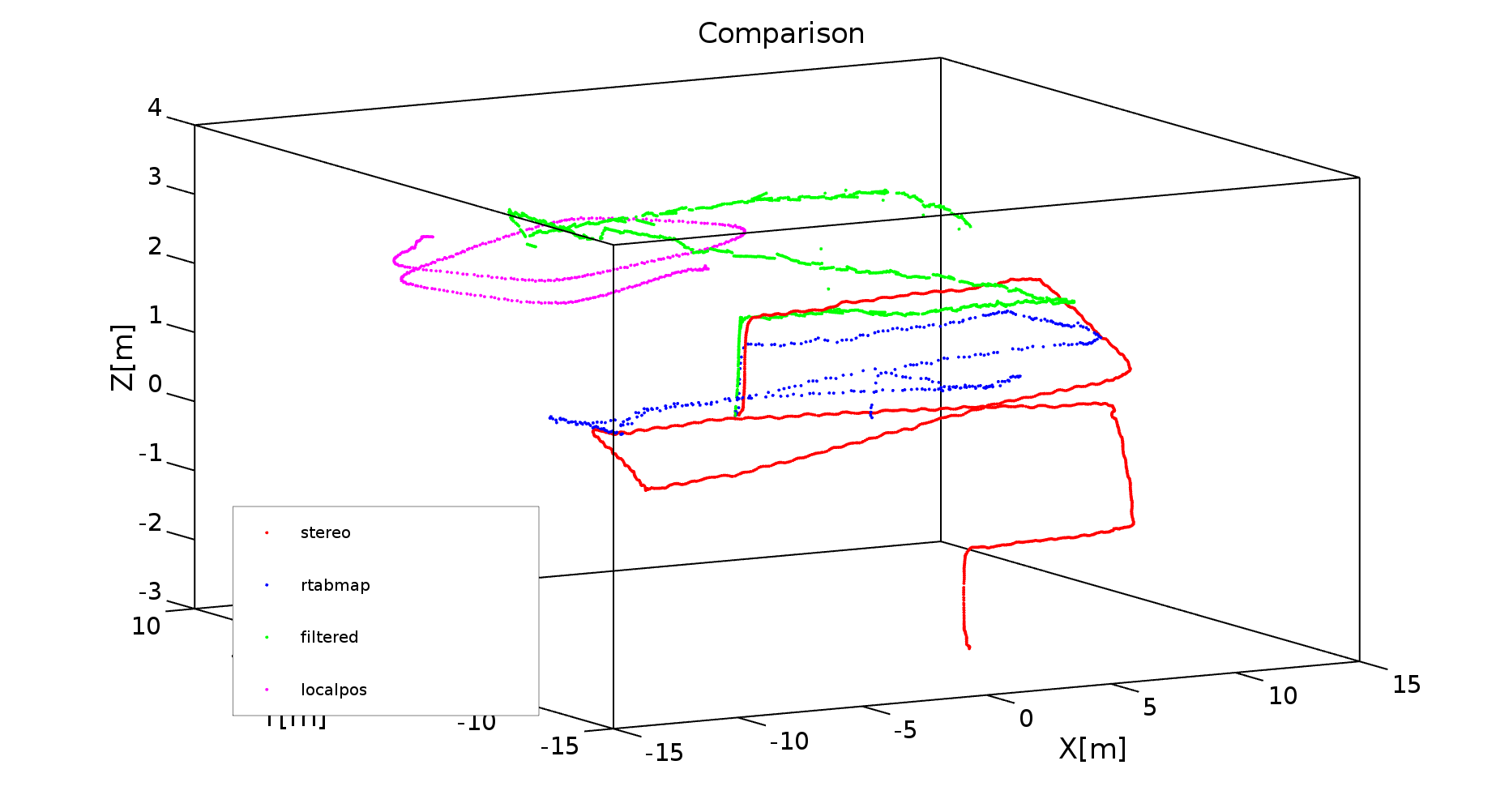

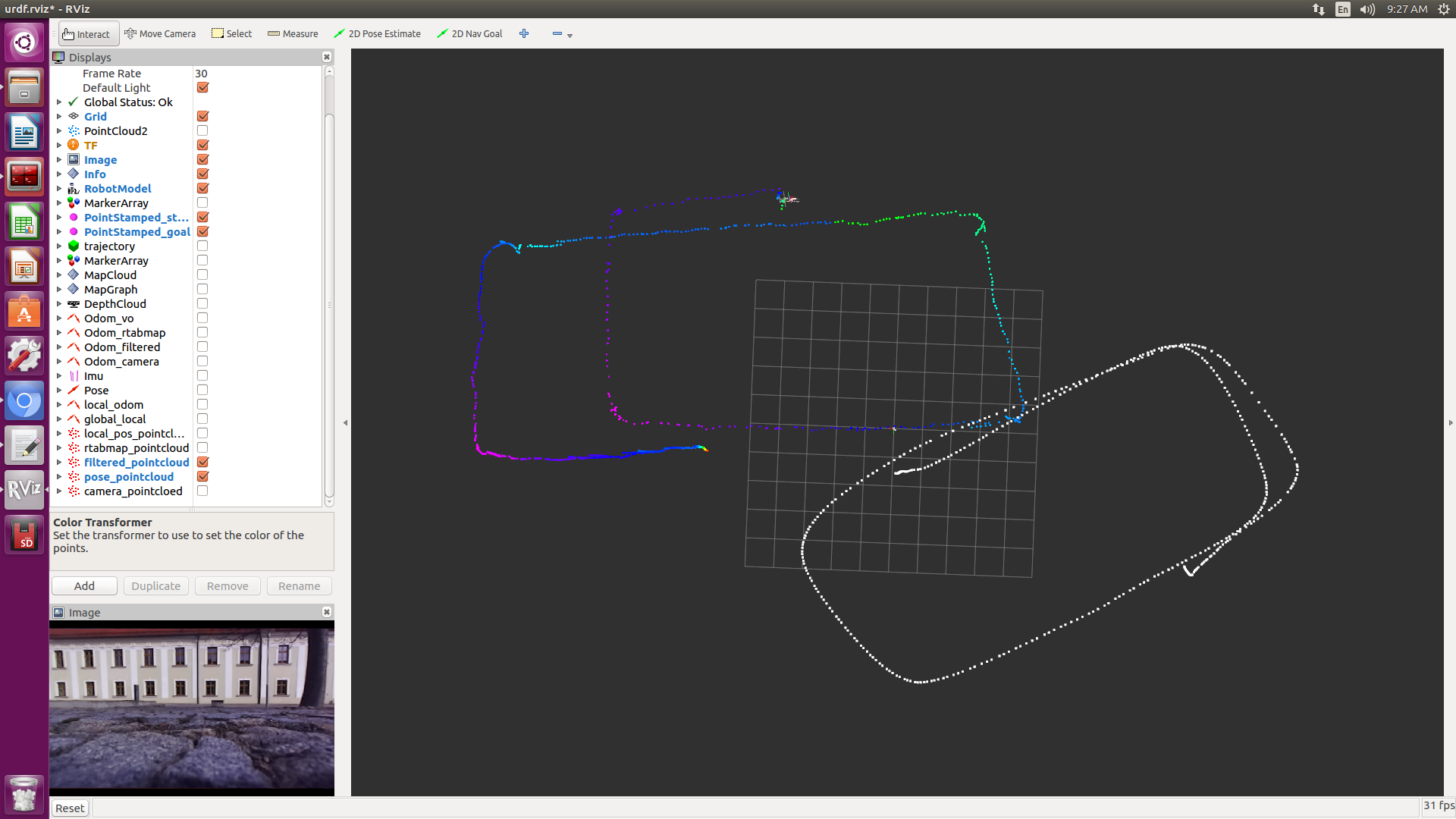

I have recorded a rosbag that contains the following topics, along with the camera TF, as you suggested :)

/stereo_camera/left/image_raw_color
/stereo_camera/left/image_rect_color
/stereo_camera/right/image_raw_color
/stereo_camera/right/image_rect_color

The bag is:

By the way, I have plotted the following topics in a single plot:

1. /stereo_camera/odom
2. /stereo_camera/rtabmap/odom
3. /odometry/filtered
4. /mavros/local_position/odom

as you can see in the first two pictures. I am wondering why they are shifted and rotated. For instance, /mavros/local_position/odom is completely rotated and shifted, and so are the others. The other pictures show, in RVIZ, the comparison between /mavros/local_position/odom and /stereo_camera/rtabmap/odom: the white trajectory is /mavros/local_position/odom, whereas the colored one is /stereo_camera/rtabmap/odom.

One more question :) I am planning to make a small mission plan using QGroundControl. Since the drone will be flying, what would be the way to record data for rtabmap? I was thinking of recording the bag file the normal way :) Could you kindly give me your opinion? Thank you very much.
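One plausible reason for the shift and rotation, offered as an assumption rather than a diagnosis, is that each odometry source starts in its own frame with its own origin and heading (for example, visual odometry typically starts at the identity, while an FCU estimate may be referenced to a compass heading). To compare trajectory shapes rather than raw coordinates, one option is to rigidly align one trajectory onto the other first. Below is a minimal 2D sketch (least-squares rotation plus translation, a 2D Kabsch/Umeyama without scale), assuming the two trajectories have already been paired by timestamp; the function names are hypothetical.

```python
import math


def align_2d(source, target):
    """Find theta, tx, ty minimizing sum |R(theta) * s + t - q|^2.

    source, target: equal-length lists of (x, y) pairs matched by index,
    for example after pairing odometry messages by timestamp.
    """
    if len(source) != len(target) or len(source) < 2:
        raise ValueError("need at least two matched points")
    n = len(source)
    # Centroids of both point sets.
    csx = sum(p[0] for p in source) / n
    csy = sum(p[1] for p in source) / n
    ctx = sum(q[0] for q in target) / n
    cty = sum(q[1] for q in target) / n
    dot = cross = 0.0
    for (sx, sy), (qx, qy) in zip(source, target):
        ax, ay = sx - csx, sy - csy
        bx, by = qx - ctx, qy - cty
        dot += ax * bx + ay * by
        cross += ax * by - ay * bx
    # The optimal angle maximizes dot * cos(theta) + cross * sin(theta).
    theta = math.atan2(cross, dot)
    c, s = math.cos(theta), math.sin(theta)
    # Translation moves the rotated source centroid onto the target centroid.
    tx = ctx - (c * csx - s * csy)
    ty = cty - (s * csx + c * csy)
    return theta, tx, ty


def transform_2d(points, theta, tx, ty):
    c, s = math.cos(theta), math.sin(theta)
    return [(c * x - s * y + tx, s * x + c * y + ty) for x, y in points]


if __name__ == "__main__":
    src = [(0.0, 0.0), (1.0, 0.0), (2.0, 1.0), (3.0, 3.0)]
    # Synthetic target: src rotated by 30 degrees and shifted by (1, 2).
    tgt = transform_2d(src, math.radians(30.0), 1.0, 2.0)
    theta, tx, ty = align_2d(src, tgt)
    print(math.degrees(theta), tx, ty)
```

If the residual after such an alignment is still large, the difference is in the trajectory shapes themselves (drift, scale, bad TF), not just in where each estimator placed its origin.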

|

Administrator

|

Hi,

It is difficult to see which trajectory is the best one. As you are flying outdoors, I think it would be nice to set up an RTK GPS to get an accurate reference trajectory to compare against. It will be a lot easier to debug afterwards. For the mavros estimation stuff, I am not familiar with it; you may ask on the mavros GitHub. It depends on what is required to fly and follow waypoints: is rtabmap required to provide a pose to mavros? Can you just do mavros IMU+GPS planning, ignoring the camera data? For rtabmap, to process the bag afterwards, you need at least the RGB+depth images or the left/right images (with the corresponding camera_info).

Cheers, Mathieu
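Following the requirement above (left/right images plus the matching camera_info), here is a sketch of how a `rosbag record` command line could be assembled for a flight. The image and camera_info topic names are taken from this thread; including /tf, /tf_static and the IMU topic is an assumption about what would also be useful for the fusion tests, and the helper and output file names are hypothetical.

```python
import shlex
import subprocess
import sys

# Topics named in this thread.
STEREO_TOPICS = [
    "/stereo_camera/left/image_rect_color",
    "/stereo_camera/left/camera_info",
    "/stereo_camera/right/image_rect_color",
    "/stereo_camera/right/camera_info",
]
# Assumed extras: TF (including the camera TF) and the IMU.
EXTRA_TOPICS = ["/tf", "/tf_static", "/mavros/imu/data"]


def build_record_cmd(output_name, topics):
    """Return the argv for `rosbag record -O <output_name> <topics...>`."""
    if not topics:
        raise ValueError("at least one topic is required")
    return ["rosbag", "record", "-O", output_name] + list(topics)


if __name__ == "__main__":
    cmd = build_record_cmd("flight_stereo.bag", STEREO_TOPICS + EXTRA_TOPICS)
    print(shlex.join(cmd))
    # Only start recording when explicitly asked (needs a sourced ROS setup).
    if "--run" in sys.argv:
        subprocess.run(cmd, check=True)
```

Recording only the rectified images keeps the bag smaller than recording both raw and rectified streams; whether that is enough depends on whether you may want to re-rectify later.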

|

Hi Mathieu,

Thank you for your answer.

1. The idea now is to build a 3D map for drone localization and navigation. Since you mentioned in one post that the robot_localization config is not good, that the IMU is poorly synchronized with the camera, and/or that the TF between the IMU and the camera is wrong, I have done a camera-IMU calibration using kalibr: https://github.com/ethz-asl/kalibr/wiki/camera-imu-calibration. I used /mavros/imu/data_raw and not /mavros/imu/data; I hope that is OK :) The calibration is here: http://official-rtab-map-forum.206.s1.nabble.com/file/n5559/camchain-imucam-homeacpcatkin_wskalibr-cdedynamicsdata.yaml. Do you know how to set the calibration in the sensor fusion launch file?
2. If I use QGroundControl in waypoint mission flight mode, I do not think I need camera data. But if I want to use the 3D rtabmap map, I will need the camera data.
3. If I want to record a bag file during a real drone flight, what do you recommend? So far I have carried the drone in my hands to simulate a flight :)
4. I am planning to use QGroundControl to run an autonomous waypoint-following mission and record data as usual.

|

Administrator

|

Hi,

1. The camera intrinsics are related to the camera_info message data. The extrinsics (IMU -> camera) would be published manually on TF, maybe with a static_transform_publisher.
2. Is the PX4 EKF2 configured with GPS+IMU? You may add visual odometry to EKF2. For the 3D map, it depends on which odometry topic the rtabmap node subscribes to. Ideally, you would want rtabmap and PX4 to subscribe to the same visual odometry topic. I am not used to that kind of hardware, so it is difficult for me to give more details; you may look at how people are using mavros with visual odometry.
3. If you navigate with GPS+IMU using QGroundControl, you may record a rosbag on the side to test SLAM offline afterwards.

Cheers, Mathieu
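For point 1, a rough sketch of turning kalibr extrinsics into static_transform_publisher arguments (`x y z qx qy qz qw frame_id child_frame_id period_in_ms`). It assumes the 4x4 `T_cam_imu` matrix from the camchain-imucam YAML has already been loaded as nested lists; per kalibr's documentation that matrix maps points from the IMU frame into the camera frame, so its inverse gives the camera pose in the IMU frame with the IMU as TF parent. kalibr's camera frame follows the pinhole convention (z forward), so the child should presumably be an optical frame. The frame names and the example matrix are hypothetical placeholders, not the calibration from this thread; check the result against your TF tree.

```python
import math


def invert_rigid(T):
    """Invert a 4x4 rigid transform [R t; 0 1] into [R^T, -R^T t; 0 1]."""
    R = [row[:3] for row in T[:3]]
    t = [row[3] for row in T[:3]]
    Rt = [[R[j][i] for j in range(3)] for i in range(3)]
    ti = [-sum(Rt[i][k] * t[k] for k in range(3)) for i in range(3)]
    return [Rt[0] + [ti[0]], Rt[1] + [ti[1]], Rt[2] + [ti[2]], [0.0, 0.0, 0.0, 1.0]]


def matrix_to_quaternion(R):
    """Rotation matrix -> quaternion (x, y, z, w), branching on the largest term."""
    m00, m11, m22 = R[0][0], R[1][1], R[2][2]
    trace = m00 + m11 + m22
    if trace > 0.0:
        s = math.sqrt(trace + 1.0) * 2.0
        w, x = 0.25 * s, (R[2][1] - R[1][2]) / s
        y, z = (R[0][2] - R[2][0]) / s, (R[1][0] - R[0][1]) / s
    elif m00 > m11 and m00 > m22:
        s = math.sqrt(1.0 + m00 - m11 - m22) * 2.0
        w, x = (R[2][1] - R[1][2]) / s, 0.25 * s
        y, z = (R[0][1] + R[1][0]) / s, (R[0][2] + R[2][0]) / s
    elif m11 > m22:
        s = math.sqrt(1.0 + m11 - m00 - m22) * 2.0
        w, x = (R[0][2] - R[2][0]) / s, (R[0][1] + R[1][0]) / s
        y, z = 0.25 * s, (R[1][2] + R[2][1]) / s
    else:
        s = math.sqrt(1.0 + m22 - m00 - m11) * 2.0
        w, x = (R[1][0] - R[0][1]) / s, (R[0][2] + R[2][0]) / s
        y, z = (R[1][2] + R[2][1]) / s, 0.25 * s
    return x, y, z, w


def static_tf_args(T_cam_imu, parent="imu_link", child="camera_optical_frame", period_ms=100):
    """Build `x y z qx qy qz qw parent child period` for the camera pose in the IMU frame."""
    T_imu_cam = invert_rigid(T_cam_imu)
    x, y, z = (T_imu_cam[i][3] for i in range(3))
    qx, qy, qz, qw = matrix_to_quaternion([row[:3] for row in T_imu_cam[:3]])
    # Adding 0.0 turns -0.0 into 0.0 for cleaner output.
    values = " ".join(f"{v + 0.0:.6f}" for v in (x, y, z, qx, qy, qz, qw))
    return f"{values} {parent} {child} {period_ms}"


if __name__ == "__main__":
    # Placeholder extrinsics (NOT the calibration from this thread): camera
    # 5 cm along the IMU x axis, no rotation. Replace with the T_cam_imu rows
    # from the kalibr camchain-imucam YAML.
    T_cam_imu = [[1.0, 0.0, 0.0, -0.05],
                 [0.0, 1.0, 0.0, 0.0],
                 [0.0, 0.0, 1.0, 0.0],
                 [0.0, 0.0, 0.0, 1.0]]
    print("rosrun tf static_transform_publisher " + static_tf_args(T_cam_imu))
```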

Return to Official RTAB-Map Forum
