Better Loop Closure for Outdoor Use

|

Hello,

The question might be a little too general, but I would appreciate any help and tips. I am trying to map a tree using only visual odometry in RTAB. I have already tuned my RealSense camera to give the best depth readings outdoors. Now I am running into the problem that RTAB cannot correctly reconstruct the map, and I suspect that adjusting the RTAB parameters could overcome it.

Here are examples of what happens. When I scan the tree vertically, the base of the tree often ends up misaligned from its initial position. In other cases, the ground level ends up above or below the level at which the scan began.

Background info: I am using a RealSense R200 camera attached to a mini PC on top of a drone. I record a bag file of the topics necessary for visual odometry in RTAB, then process the bag files with RTAB on a different computer with a strong processor.

I would highly appreciate any practical tips or general directions I could explore to increase the quality of the maps generated by RTAB.

|


Hi!

Are you sure the RealSense R200 is suited to outdoor applications? Infrared depth sensing becomes unreliable when the sun is involved, and since your camera appears to be RGB-D, that could apply here. (The specs on the Mouser link only mention indoor range, by the way.) See this rtabmap forum thread, where matlabbe says: "If you are using RGB-D visual odometry, the robot would get lost outside (particularly on a sunny day)," Hope I'm making sense!

|

Hi Blupon,

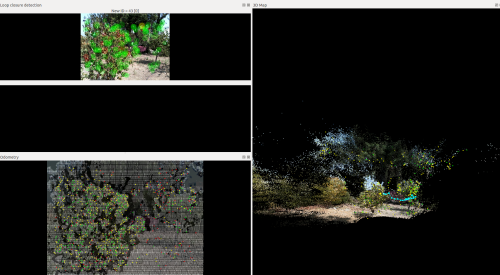

You are right, the R200 is not suited to outdoor use. However, I was able to adjust the R200 configuration so that it sustains visual odometry while mapping outside. As the image shows, I had some okay results mapping trees. The quality is poor; however, my objective is to obtain the overall shape of the tree, especially the trunk. I realize that I may have reached the limit of what is possible with the RealSense camera, so now I am trying to tune the RTAB parameters. Do you have any thoughts on which parameters could yield the best loop closure detection?

Cheers,
Daniyar

|

I don't know about "best", since it must really depend on the weather, camera, place, etc., but looking into it quickly I found this list of parameters:

in which you can find these potentially interesting lines:

    // Verify hypotheses
    RTABMAP_PARAM(VhEp, Enabled, bool, false, uFormat("Verify visual loop closure hypothesis by computing a fundamental matrix. This is done prior to transformation computation when %s is enabled.", kRGBDEnabled().c_str()));
    RTABMAP_PARAM(VhEp, MatchCountMin, int, 8, "Minimum of matching visual words pairs to accept the loop hypothesis.");

or these:

    // Local/Proximity loop closure detection
    RTABMAP_PARAM(RGBD, ProximityByTime, bool, false, "Detection over all locations in STM.");
    RTABMAP_PARAM(RGBD, ProximityBySpace, bool, true, "Detection over locations (in Working Memory) near in space.");
    RTABMAP_PARAM(RGBD, ProximityMaxGraphDepth, int, 50, "Maximum depth from the current/last loop closure location and the local loop closure hypotheses. Set 0 to ignore.");
    RTABMAP_PARAM(RGBD, ProximityMaxPaths, int, 3, "Maximum paths compared (from the most recent) for proximity detection by space. 0 means no limit.");
    RTABMAP_PARAM(RGBD, ProximityPathFilteringRadius, float, 0.5, "Path filtering radius to reduce the number of nodes to compare in a path. A path should also be inside that radius to be considered for proximity detection.");
    RTABMAP_PARAM(RGBD, ProximityPathMaxNeighbors, int, 0, "Maximum neighbor nodes compared on each path. Set to 0 to disable merging the laser scans.");
    RTABMAP_PARAM(RGBD, ProximityPathRawPosesUsed, bool, true, "When comparing to a local path, merge the scan using the odometry poses (with neighbor link optimizations) instead of the ones in the optimized local graph.");
    RTABMAP_PARAM(RGBD, ProximityAngle, float, 45, "Maximum angle (degrees) for visual proximity detection.");

Also, when you launch RTABMAP using roslaunch, the shell prints lines like:

    * /rtabmap/rtabmap/Vis/InlierDistance: 0.1

or

    * /hector_mapping/map_resolution: 0.05

Perhaps playing with those will change something... or break the node execution. Let me know if you manage to play with those parameters and influence your loop closure results IRL!
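One way to try parameters like these without editing the launch file is to pass them through `rtabmap_args` as `--Group/Name value` pairs, next to `--delete_db_on_start`. The sketch below only assembles that argument string and prints the resulting command. The helper name `build_rtabmap_args` is hypothetical, and the values are illustrative assumptions, not settings known to work for this dataset.

```python
def build_rtabmap_args(params, delete_db=True):
    """Return a string suitable for rtabmap_args:="..." on the roslaunch line."""
    parts = ["--delete_db_on_start"] if delete_db else []
    for name, value in params.items():
        # Booleans are written in lowercase, as in the parameter defaults above.
        if isinstance(value, bool):
            value = "true" if value else "false"
        parts.append(f"--{name} {value}")
    return " ".join(parts)


# Illustrative values only (assumptions, not tuned for any dataset).
params = {
    "VhEp/Enabled": True,
    "VhEp/MatchCountMin": 8,
    "RGBD/ProximityBySpace": True,
    "RGBD/ProximityMaxGraphDepth": 0,
}
args = build_rtabmap_args(params)
print(f'roslaunch rtabmap_ros rtabmap.launch rtabmap_args:="{args}"')
```

Keeping the parameters in one dictionary makes it easier to change a single value between runs of the same bag and compare the resulting maps.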

|

Administrator

|

Can you share your bag files and commands used to run them?

|

|

The bagfiles are very big, at least 4GB (how would I share one?)

Here is the command with the topics I collect:

    $ rosbag record -O bagfiles/trial1 /camera/rgb/image_rect_color /camera/rgb/camera_info /camera/depth_registered/sw_registered/image_rect_raw /tf_static /bebop/odom /tf ##This is the topic I publish myself that provides the transformation between odom and camera_link

The following is the rtabmap launch command:

    $ roslaunch rtabmap_ros rtabmapEnhanced.launch rtabmap_args:="--delete_db_on_start" depth_topic:=/camera/depth_registered/sw_registered/image_rect_raw

The launch file is the same as the original except for a few parameter changes made according to this tutorial: Advance Tuning. (Most changes were implemented, except for switching the visual odometry strategy to Frame to Frame, as it didn't give good results.)

Also, after some suggestions by Blupon, I have played with some RTAB parameters and got much better results. I load the parameters as follows:

    $ rosparam load rtabParam/rtabParam.yaml

Here is how I play the bagfile:

    $ rosbag play --clock /media/administrator/Seagate\ Expansion\ Drive/Daniyar/Rosbags/trial24OrchardOdSmallTrees_360Scan_ofOneTree.bag -r 0.2

I find that playing at a slower rate keeps RTAB from losing visual odometry and thus gives better results. If visual odometry is lost anyway, I replay the bag several times from the place where it was lost until visual odometry is found again and the mapping can proceed.

Finally, as you may have noticed, I am not using the physical odometry provided by the bebop drone. I used to run this command:

    $ roslaunch rtabmap_ros rtabmap.launch rtabmap_args:="--delete_db_on_start" depth_topic:=/camera/depth_registered/sw_registered/image_rect_raw subscribe_odometry:=true odom_topic:=/bebop/odom frame_id:=odom visual_odometry:=false

However, the results were very poor, much worse than with visual odometry alone, possibly because the /bebop/odom topic is not good enough or because I am doing something wrong. This leads to the question: is it possible to use both physical and visual odometry to correct each other?

Files: rtabmapEnhanced.launch and rtabParam.yaml. Note: in rtabParam.yaml, lines with "#" indicate the changes to the original parameters.

|

Administrator

|

You can record compressed images to reduce the size of the bag:

    rosbag record -O bagfiles/trial1 \
        /camera/rgb/image_rect_color/compressed \
        /camera/rgb/camera_info \
        /camera/depth_registered/sw_registered/image_rect_raw/compressedDepth \
        /tf_static \
        /bebop/odom \
        /tf ##This is the topic I publish myself that provides the transformation between odom and camera_link

Well, if you are going to use visual odometry, don't publish /odom -> /camera_link.

To share it, maybe Dropbox or Google Drive can be used. There are also other free file sharing services on the web now.

We can do sensor fusion with the robot_localization package to combine odometry from different sources; it is however not trivial. See their doc for more info.

cheers,
Mathieu

|

Administrator

|

Hi,

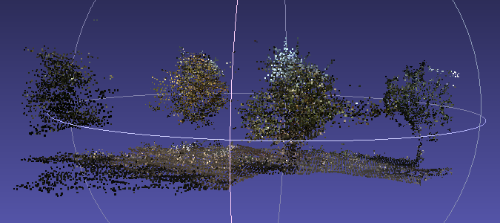

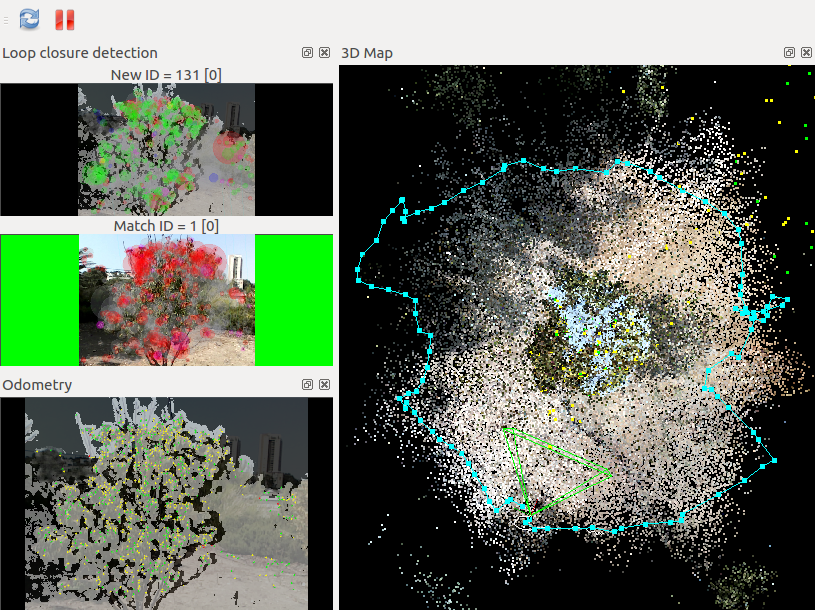

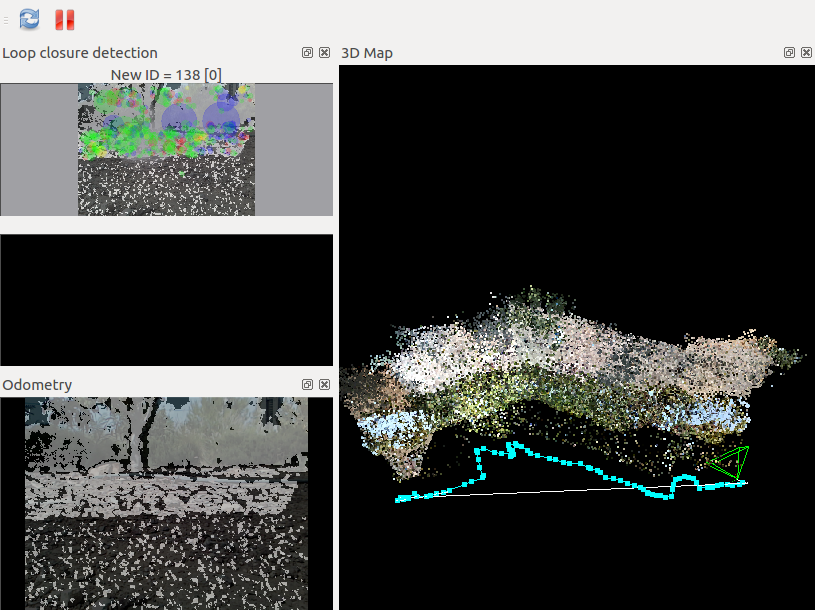

Thanks for the bags. First of all, /camera_link should have its parent set to /base_link, not /odom. To use visual odometry from rtabmap_ros, the bebop should not publish the TF /odom -> /base_link. The depth images also have empty lines, caused by registration.

I recommend using the second approach for the R200 in this tutorial to generate the depth image (you may have to use rtabmap built from source if the nodelet is missing). Here is an example with the /odom TF removed from the bag, /camera_link modified to have parent /base_link, and the second approach used to generate the depth image:

    $ roslaunch rtabmap_ros rtabmap.launch rtabmap_args:="--delete_db_on_start" depth_topic:=/camera/depth/points/image_raw
    $ rosparam set use_sim_time true
    /// ****The next line is not required without the bag, as the realsense driver should already publish "/camera/depth/points"
    $ rosrun nodelet nodelet standalone rtabmap_ros/point_cloud_xyz depth/image:=/camera/depth_registered/sw_registered/image_rect_raw depth/camera_info:=/camera/rgb/camera_info cloud:=/camera/depth/points _approx_sync:=false
    $ rosrun rtabmap_ros pointcloud_to_depthimage cloud:=/camera/depth/points camera_info:=/camera/rgb/camera_info image_raw:=/camera/depth/points/image_raw image:=/camera/depth/points/image _approx:=false _decimation:=2

See how the depth image is fuller. On the right is the top view of moving around the tree, with a loop closure detected when coming back to the beginning. The following screenshot is the same experiment but using /bebop/odom, giving much worse results.

For the second bag, the results were not as good as with the single tree. There are a lot of very "fast" camera movements, which cause odometry to be lost. Another problem I have seen is that, because the platform is shaking and the color camera of the R200 has a rolling shutter, there are a lot of visual artifacts in the RGB images. We could limit this effect by using the left IR camera of the R200, which has a global shutter, as the RGB input to rtabmap.

cheers,
Mathieu
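The TF cleanup described in this reply (dropping the bebop's /odom -> /base_link transform and giving /camera_link the parent /base_link) can be sketched on plain (parent, child) frame pairs. This is not the procedure actually used on the bag, which the thread does not show. The function name `fix_tf_edges` is hypothetical, and only frame names are handled here; on a real bag the same logic would have to be applied to the `/tf` messages, with the /base_link -> /camera_link transform set to the camera's fixed mounting offset.

```python
def fix_tf_edges(edges):
    """Drop odom -> base_link and re-parent camera_link under base_link."""
    fixed = []
    for parent, child in edges:
        # Compare frame names without a leading slash.
        p, c = parent.lstrip("/"), child.lstrip("/")
        if p == "odom" and c == "base_link":
            # Visual odometry is expected to publish this transform instead.
            continue
        if c == "camera_link":
            p = "base_link"
        fixed.append((p, c))
    return fixed


# Frame pairs as described in the thread: both transforms hang off odom.
edges = [("/odom", "/base_link"), ("/odom", "/camera_link")]
print(fix_tf_edges(edges))
```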
