Clarification on RGB and Depth image has input from Stereo camera

12

12

|

Hello All

I integrated my customize stereo camera to rtab map but i was not satisfied with the disparity genrated by rtab map tool since it was generated by block matching algorithm so i used sgbm algorithm and generated depth and rgb output outside rtab map and gave has rgb and depth in rgbd section. The output what i got was quit decent in terms of point cloud and also in icp but i felt the depth was not properly came out. i assume that it might be bcoz of calibration.i dont know how and what to give has input for the calibration file.I just gave a defult calibration file which i got from the same forum for rgbd camera, can some one suggest me any idea which will be really help full. |

|

Administrator

|

Hi,

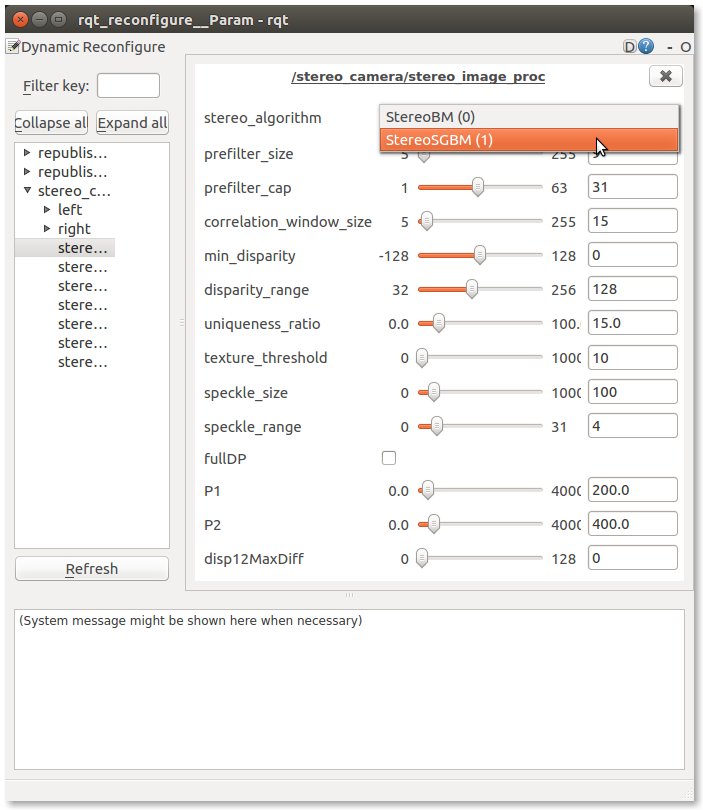

The calibration sent to rtabmap should be the one of the RGB/left image. For your disparity image with sgbm, you should have a left/right camera correctly calibrated. For convenience, I think you can select sgbm in recent stereo_image_proc, in rqt_dynamic_reconfigure you would have a combobox with BM or SGBM. To have correct calibration inputs for stereo_image_proc, see http://wiki.ros.org/camera_calibration/Tutorials/StereoCalibration For generating depth from disparity image, you can look at this nodelet: rtambap_ros/disparity_to_depth. cheers, Mathieu |

|

Hi Mathieu

Mathieu i saw your comment on using "SGBM in rqt_dynamic_reconfigure" but i am using rtab map in linux and there is two option like basic and advance and i couldn't see any option for selecting sgbm.If can you share any screen shot which will be really helpful |

|

Administrator

|

Hi,

When stereo_image_proc is started: $ rosrun rqt_reconfigure rqt_reconfigurethen select stereo_image_proc panel, you will see the stereo strategy combobox:  But yeah, this could be an option that could be added directly to rtabmap for convenience: https://github.com/introlab/rtabmap/issues/77 cheer, Mathieu |

|

In reply to this post by matlabbe

Hi Mathieu,

I have another question concerning the nodelet rtambap_ros/disparity_to_depth. Perhaps it's not the right forum, but maybe you can give me a quick answer. We have a stereo camera which publishes the disparity map with the message type sensor_msgs/Image. The nodelet rtabmap_ros/disparity_to_depth on the other hand needs the message type stereo_msgs/DisparityImage. Can you give me a hint how to deal with this in a short way? Kind regards Felix PS: We need the depth map, that's way we want to make this detour. |

|

Administrator

|

Hi,

The standard ROS way is to send the left and right images to stereo_img_proc, to get the disparity image. The disparity image can then be fed to disparity_to_depth nodelet to convert to a depth image. The message type sensor_msgs/Image is not convenient to represent disparity images (well, it could for compression purpose). To convert to depth we need the baseline and focal distance, which sensor_msgs/DisparityImage has directly. To convert disparity from a sensor_msgs/Image we would have to subscribe also to left and right camera_info to get the baseline and focal distance required to convert disparity values (pixels) to depth values (meters or mm). cheers, Mathieu |

|

Hi Mathieu,

thank you very much for your answer. With this information, I want to process a stereo image pair in the following workflow: 1. use the stereo image pair to get the disparity image via stereo_img_proc 2. use the disparity image to get the depth image via disparity_to_depth 3. use the depth image with the coressponding left image to to SLAM in the RGB-D Mapping mode How can I do this? I want to use the RGB-D Mapping mode because I want to manipulate (e.g. mask) the depth image in order to remove some objects in the 3D map. Kind regards Felix |

|

Administrator

|

When you have the left image and the corresponding depth image, this is like using rtabmap with RGB-D input. From stereo, it is similar to the "Stereo A" approach here. If you need visual odometry, the node rtabmap_ros/stereo_odometry and replace viso2_ros/stereo_odometer node for convenience.

Just to come back on your original question, if your camera can already publish a disparity image, you may modify the camera node to publish directly the depth image (as the camera would have already the calibration info to convert disparity to depth). cheers, Mathieu |

|

Thanks for your answer. I tried to implement a python script in order to convert the disparity from sensor_msgs/Image to stereo/DisparityImage:

#!/usr/bin/env python

# This script converts an disparity image from "sensor_msgs/Image" to "stereo_msgs/DisparityImage"

import rospy

import message_filters

from nerian_stereo.msg import StereoCameraInfo

from sensor_msgs.msg import Image

from stereo_msgs.msg import DisparityImage

from cv_bridge import CvBridge

from sensor_msgs.msg import CameraInfo

disparity = 0

cam_info_left = 0

def callback(disparity_map, stereo_camera_info):

global disparity;

global cam_info_left;

rospy.loginfo(rospy.get_caller_id() + ": Disparity image and camera info received")

disparity_map = CvBridge().imgmsg_to_cv2(disparity_map, "32FC1")

disparity_map = CvBridge().cv2_to_imgmsg(disparity_map,"passthrough")

disparity.publish(header = disparity_map.header, image = disparity_map, f = stereo_camera_info.Q[11], T = stereo_camera_info.T_left_right[0], min_disparity = 0, max_disparity = 127, delta_d = 0.0625)

cam_info_left.publish(stereo_camera_info.left_info)

def listener():

global disparity;

global cam_info_left;

rospy.init_node('disp2dispImage')

image_sub = message_filters.Subscriber('/nerian_stereo/disparity_map', Image)

info_sub = message_filters.Subscriber('/nerian_stereo/stereo_camera_info', StereoCameraInfo)

ts = message_filters.TimeSynchronizer([image_sub, info_sub], 10)

ts.registerCallback(callback)

disparity = rospy.Publisher('/disparity', DisparityImage, queue_size=10)

cam_info_left = rospy.Publisher('/stereo_camera_info', CameraInfo, queue_size=10)

rospy.spin()

if __name__ == '__main__':

listener() Then I tried to use the nodelet rtabmap_ros/disparity_to_depth. But I didn't manage it to work. Can you give me a hint in order to find my mistake? Maybe there is something wrong with the conversion "32FC1"...? Kind regards Felix |

|

Administrator

|

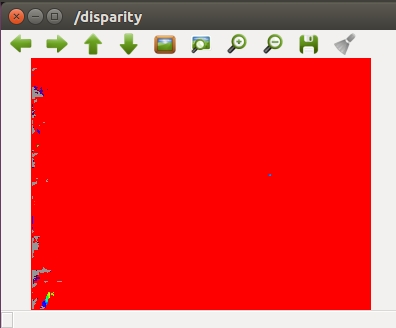

What does the disparity look like before trying to convert it to depth? Can you run the following and show the resulting image:

$ rosrun image_view disparity_view image:=/disparity |

|

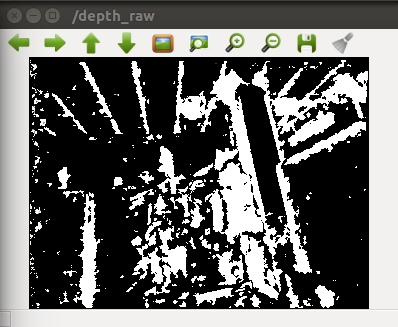

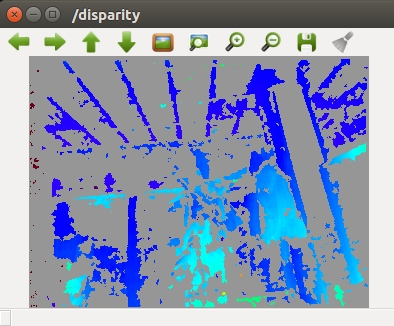

That's the resulting image:

... seems to be wrong!? |

|

Administrator

|

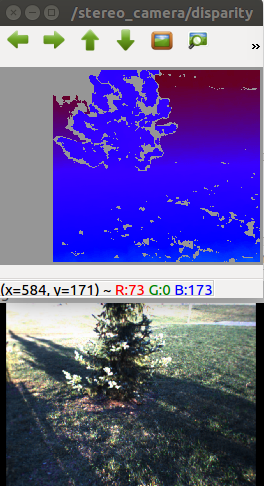

It looks saturated, what are the range of the original values in each pixel of the input image?

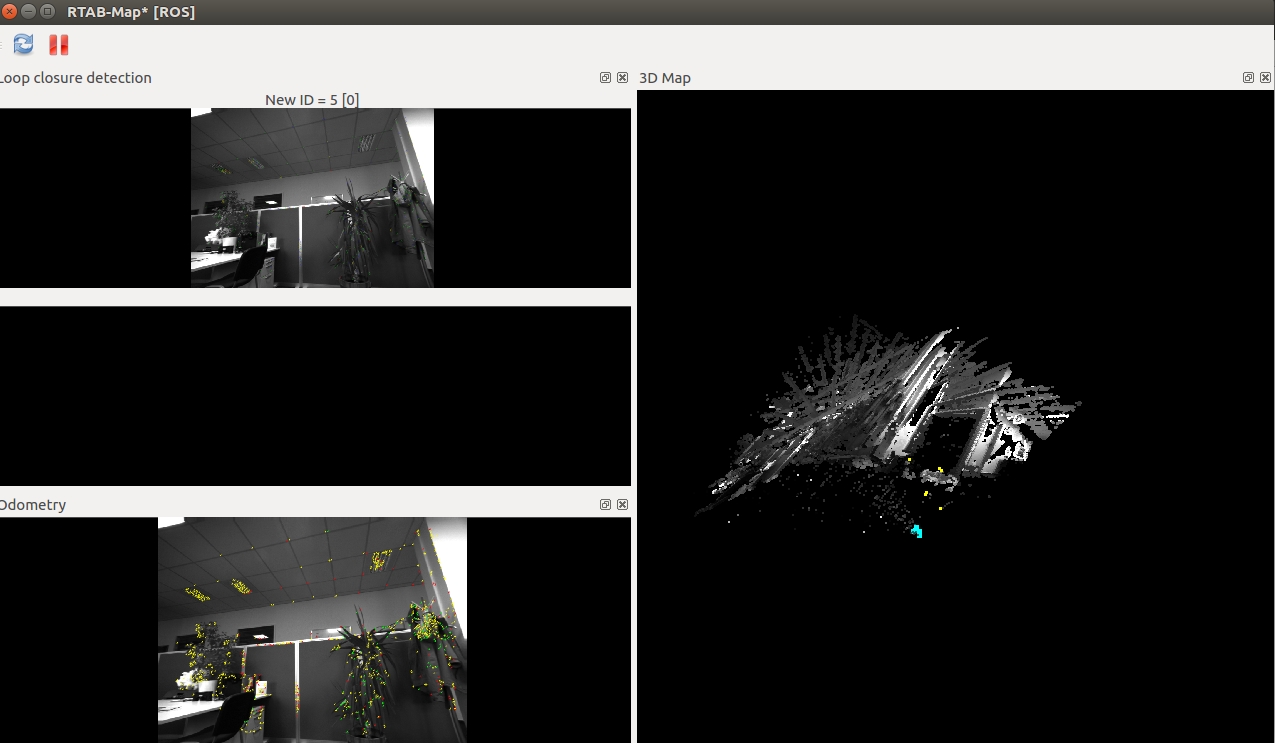

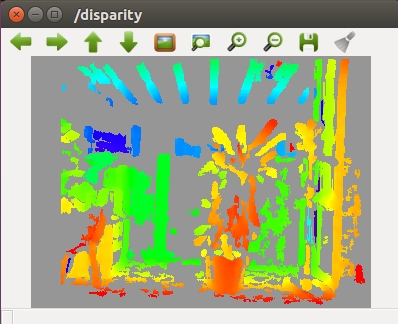

The stereo demo gives this:  |

|

Thanks for your answer.

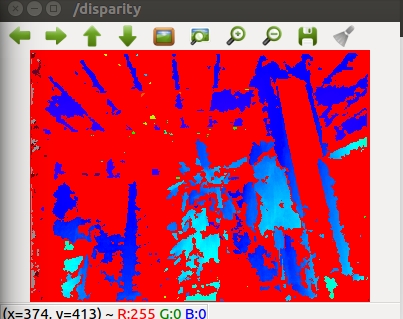

Maybe it's the best if I give you again a brief overview about our setup: - we have a greyscale stereo camera which also can publish the disparity image via type sensor_msgs/Image - we convert this disparity image into the type stereo_msgs/DisparityImage (with the now working python script, see appendix) - than we use the rtabmap_ros/disparity_to_depth nodelet in order to use the RGBD mapping mode in RTAB-Map Here are some outputs of the above steps: 1) The disparity map as type sensor_msgs/Image:  2) The depth map after using rtabmap_ros/disparity_to_depth:  3) Screenshot of RTAB-Map during the run (the 3D point cloud is wrong):  I think there's something wrong with the depth map. We get the following warning during the run: [ WARN] (2018-10-05 12:28:10.157) OdometryF2M.cpp:472::computeTransform() Registration failed: "Not enough inliers 7/20 (matches=46) between -1 and 3" [ WARN] (2018-10-05 12:28:10.158) OdometryF2M.cpp:261::computeTransform() Failed to find a transformation with the provided guess (xyz=-0.035462,0.369827,-1.531558 rpy=-0.010178,-0.183696,0.056159), trying again without a guess. [ WARN] (2018-10-05 12:28:10.251) OdometryF2M.cpp:481::computeTransform() Trial with no guess succeeded! By the way, the ROS node of our camera says the following: "The stereo disparity map that is computed by SceneScan. The disparity map is transmitted as an image message with mono16 encoding. Please note that the disparity map has a 4-bit subpixel resolution. This means that its values have to be divided by 16 in order to receive the true pixel disparity" Thats why we devide the disparity map by 16 in order to convert it into stereo_msgs/DisparityImage. Can you again give us some hints in order to find our mistake? Kind regards, Felix PS: Here's the obove mentioned python script: #!/usr/bin/env python

# This script converts an disparity image from "sensor_msgs/Image" to "stereo_msgs/DisparityImage"

import rospy

import message_filters

from nerian_stereo.msg import StereoCameraInfo

from sensor_msgs.msg import Image

from stereo_msgs.msg import DisparityImage

from cv_bridge import CvBridge

from sensor_msgs.msg import CameraInfo

left_img = 0

disparity = 0

cam_info_left = 0

Q11 = 407.122375488

Tlr0 = -0.249977806853

def callback(left_image, disparity_map):

global left_img;

global disparity;

global Q11;

global Tlr0;

rospy.loginfo(rospy.get_caller_id() + ": Left image and disparity image received")

left_img.publish(left_image)

disparity_map = CvBridge().imgmsg_to_cv2(disparity_map, "32FC1")/16

disparity_map = CvBridge().cv2_to_imgmsg(disparity_map,"passthrough")

disparity.publish(header = left_image.header,image = disparity_map, f = Q11, T = Tlr0, min_disparity = 0, max_disparity = 127, delta_d = 0.0625)

def callback_cam_info(data):

global cam_info_left;

global Q11;

global Tlr0;

rospy.loginfo(rospy.get_caller_id() + ": Camera info received")

Q11 = data.Q[11]

Tlr0 = data.T_left_right[0]

cam_info_left.publish(data.left_info)

def listener():

global left_img;

global disparity;

global cam_info_left;

rospy.init_node('disp2dispImage')

left_sub = message_filters.Subscriber('/nerian_stereo/left_image', Image)

disp_sub = message_filters.Subscriber('/nerian_stereo/disparity_map', Image)

rospy.Subscriber("/nerian_stereo/stereo_camera_info", StereoCameraInfo, callback_cam_info)

ts = message_filters.TimeSynchronizer([left_sub, disp_sub], 10)

ts.registerCallback(callback)

left_img = rospy.Publisher('/left_image', Image, queue_size=10)

disparity = rospy.Publisher('/disparity', DisparityImage, queue_size=10)

cam_info_left = rospy.Publisher('/stereo_camera_info', CameraInfo, queue_size=10)

rospy.spin()

if __name__ == '__main__':

listener() Here we use two callback functions because our camera doesn't publish the camera info fast enough. |

|

Administrator

|

Hi,

Following the documentation, I think the following is wrong: disparity_map = CvBridge().imgmsg_to_cv2(disparity_map, "32FC1")/16I am not sure how imgmsg_to_cv2 works for the conversion in python, but as the input is 16SC1 type, would it be "16SC1" type, then convert to float, then divide by 16? If you want to convert directly the input disparity image to depth, look at the following code (follow disparity.type() == CV_16SC1 type):

cv::Mat depthFromDisparity(const cv::Mat & disparity,

float fx, float baseline,

int type)

{

UASSERT(!disparity.empty() && (disparity.type() == CV_32FC1 || disparity.type() == CV_16SC1));

UASSERT(type == CV_32FC1 || type == CV_16UC1);

cv::Mat depth = cv::Mat::zeros(disparity.rows, disparity.cols, type);

int countOverMax = 0;

for (int i = 0; i < disparity.rows; i++)

{

for (int j = 0; j < disparity.cols; j++)

{

float disparity_value = disparity.type() == CV_16SC1?float(disparity.at<short>(i,j))/16.0f:disparity.at<float>(i,j);

if (disparity_value > 0.0f)

{

// baseline * focal / disparity

float d = baseline * fx / disparity_value;

if(d>0)

{

if(depth.type() == CV_32FC1)

{

depth.at<float>(i,j) = d;

}

else

{

if(d*1000.0f <= (float)USHRT_MAX)

{

depth.at<unsigned short>(i,j) = (unsigned short)(d*1000.0f);

}

else

{

++countOverMax;

}

}

}

}

}

}

if(countOverMax)

{

UWARN("Depth conversion error, %d depth values ignored because they are over the maximum depth allowed (65535 mm).", countOverMax);

}

return depth;

}

Looking at the camera code, they should be published at the same rate and with exact same time. Rtabmap assumes also that camera_info and images are published at exactly the same rate. cheers, Mathieu |

|

Hi Mathieu,

thank you for your help. Finally, we managed it to convert the disparity into depth via the following code lines:

for (int i = 0; i < cv_ptr->image.rows; i++)

{

for (int j = 0; j < cv_ptr->image.cols; j++)

{

if (cv_ptr->image.at<float>(i,j) >= 0x0fff)

{

cv_ptr->image.at<float>(i,j) = -1;

}

else

// depth = focal * baseline / (disparity/16) (from http://wiki.ros.org/nerian_stereo: "The disparity values have to be divided by 16 in order to receive the true pixel disparity.")

// we use the absolute value of the baseline (because here it is negative)

cv_ptr->image.at<float>(i,j) = cam_info->Q[11]*std::abs(cam_info->T_left_right[0])*16./cv_ptr->image.at<float>(i,j);

}

}

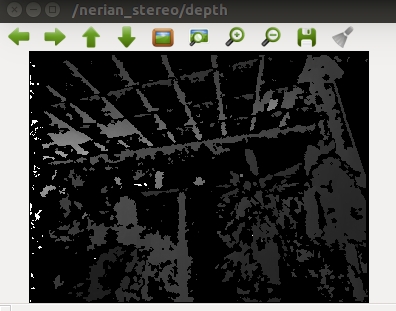

Here is an example of the resulting depth:  Kind regards, Felix PS: By the way, originally the camera only publishs the camera info every second (see https://github.com/nerian-vision/nerian_stereo/blob/88b99b84a5c8d1a8be841968c42ab681d309aa50/src/nerian_stereo_node.cpp#L625 ); that's why we had to adjust the code a little bit. |

|

Administrator

|

Indeed, I didn't look inside the publishCameraInfo function, I assumed it just converted the calibration to camera_info and publish. All standard ros packages like image_proc or stereo_image_proc assumes that camera_info are published at the same rate than the images. I would have patched the line you linked by removing the "if(dt > 1.0)".

cheers, Mathieu |

|

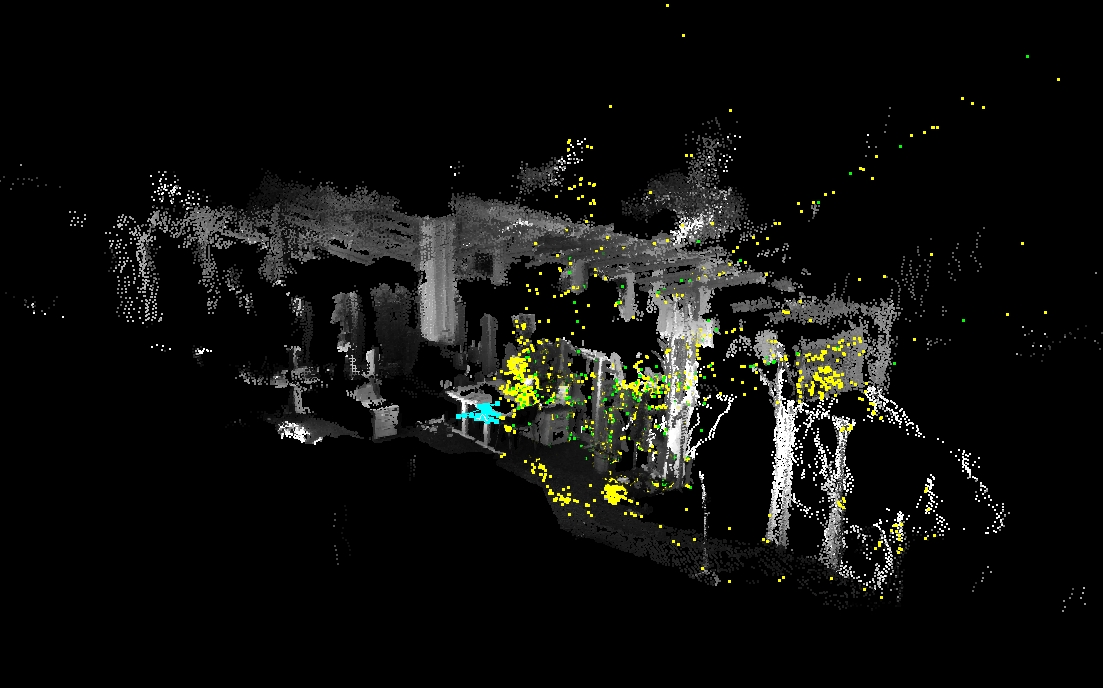

Hi Mathieu,

sorry for asking again about the depth topic. But maybe our above algorithm is not fully correct... The 3D-Map seems not to be correct. By zooming in or out, there are a lot of black areas in the 3D-Map... it is quite annoying. In order to find out where the problem could be, I tried the stereo output of the Nerian camera in the following way: 1. output nerian camera: left and right images 2. use stereo_image_proc/disparity to get the disparity in the "normal" type (stereo_msgs/DisparityImage) 3. use rtabmap_ros/disparity_to_depth to get the depth map To compare this depth map with our depth-algorithm above, I attached two videos of the depth map: depth_rtabmap.mkv depth_our.mkv The first video is about the depth coming from rtabmap_ros/disparity_to_depth; the second video is about our depth-algorithm. Maybe this could help you to find the problem. Here, we have an output of the correct 3D-Map (it is an office scene) with the RGBD mapping using the stereo images with rtabmap_ros/disparity_to_depth:  Kind regards, Felix |

|

Administrator

|

Looking at the video, it looks like that the disparity approach is different from Nerian than from stereo_image_proc. stereo_image_proc uses cv::StereoBM under the hood with some default parameters. To better compare, you may republish the Nerian disparity image in stereo_msgs/DisparityImage format and compare with the disparity image from stereo_image_proc. I don't think it is the "depth conversion" code the difference.

cheers, Mathieu |

|

Hi Mathieu,

thanks again for your reply. I have done a look at the corresponding disparity maps, see 1) The one which comes from the Nerian camera (converted as als stereo_msgs/DisparityImage):  2) The one which we get via stereo_image_proc/disparity from a stereo pair of the Nerian camera (also as stereo_msgs/DisparityImage):  The vizualisation is done with image_view. Can you see any problems with the first disparity map? Could it be that the disparity map which comes directly from the Nerian camera is too dense? Kind regards, Felix |

|

Administrator

|

Hi Felix,

It was easier to see in the videos, but the nerian disparity approach seems to give more dense disparity images. For localization/navigation, dense disparity images (in which textureless surfaces are interpolated) are not too much a problem, and can actually help to clear obstacles. If you are looking for accurate 3D reconstruction, you may want a disparity approach giving only disparity on pixels with a lot of texture (e.g., edges), for which depth can be accurately computed. If you are looking for 3D indoor reconstruction, consider using a RGB-D camera instead. cheers, Mathieu |

«

Return to Official RTAB-Map Forum

|

1 view|%1 views

| Free forum by Nabble | Edit this page |