Combine 3D Lidar and RGB-D Camera to create a single 3D point cloud (sensor fusion)

Combine 3D Lidar and RGB-D Camera to create a single 3D point cloud (sensor fusion)

|

This post was updated on .

Hi!

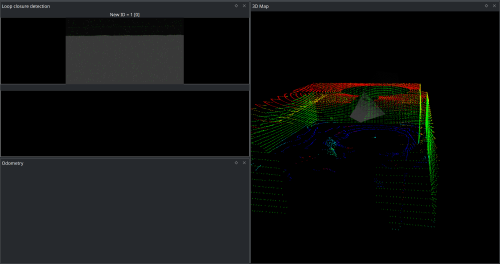

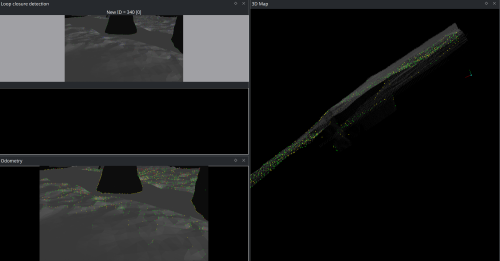

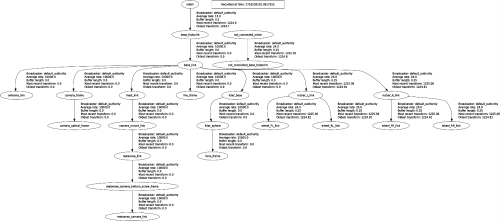

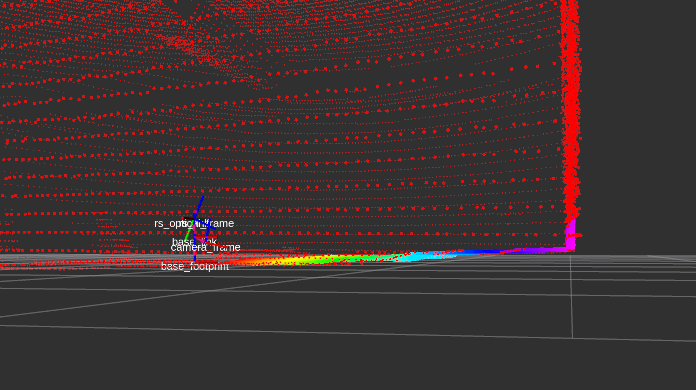

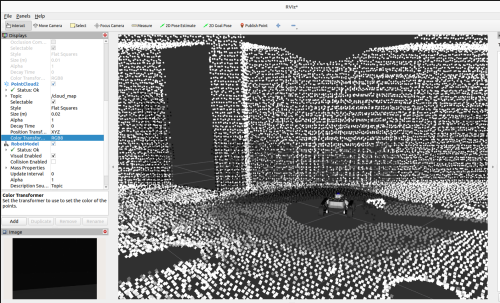

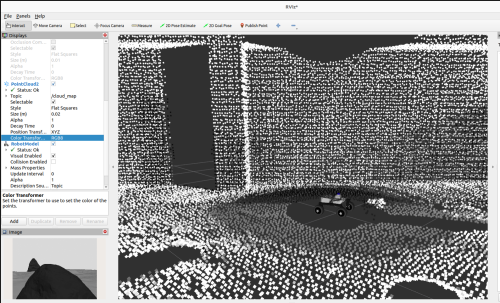

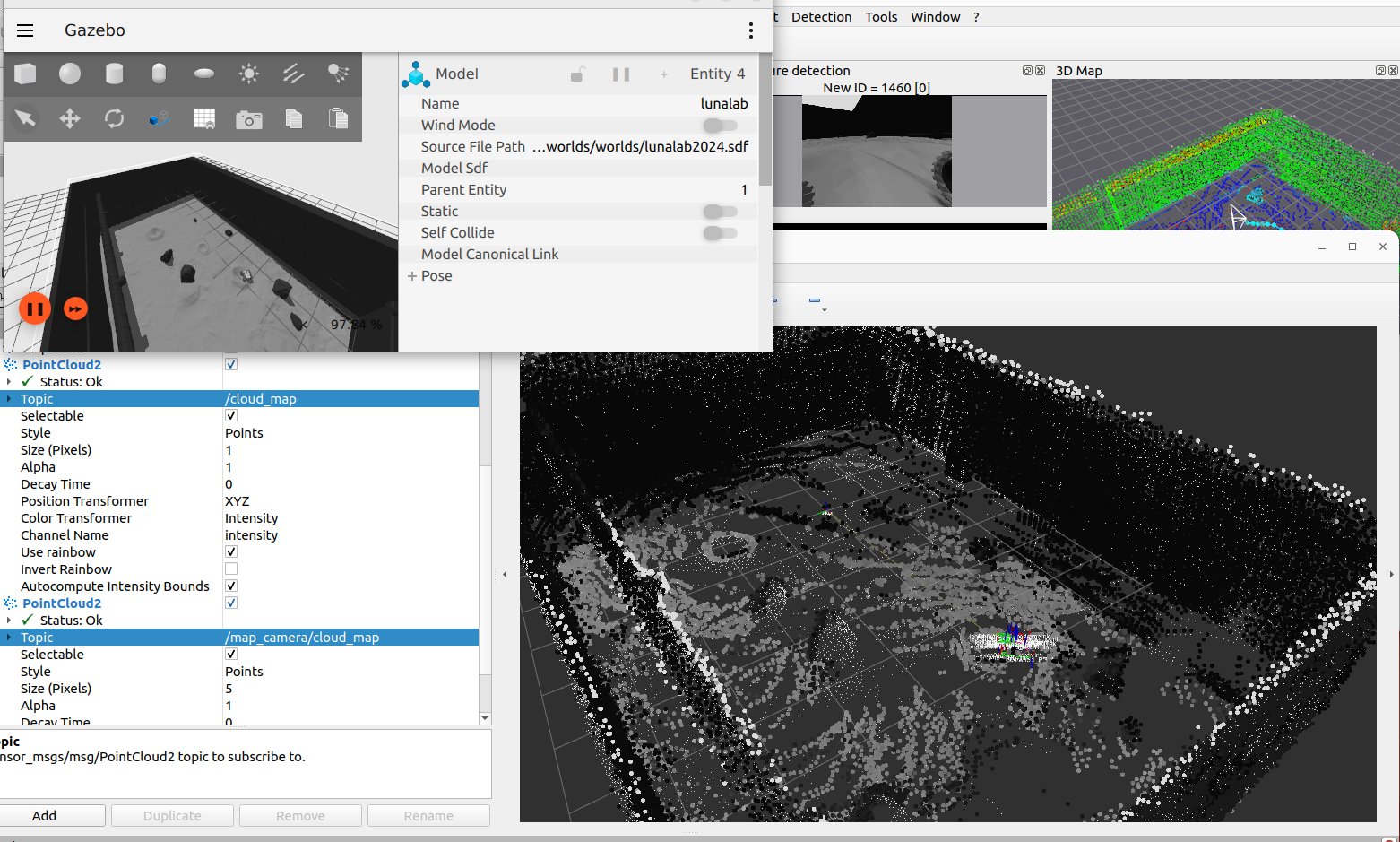

I am quite new to RTAB-MAP for ROS2 and I have used RTAB-MAP in ROS1 for simple map/3D point cloud creation using an RGB-D camera (Realsense D455) in the past. Currently, I am running a ROS2 Gazebo simulation with a virtual robot. The robot is equipped with a 3D LiDAR (Livox), and an RGB-D camera (Realsense D455). I am trying to use RTAB-MAP with ICP odometry (using only the 3D LiDAR), and map the environment using both the point cloud generated from the LiDAR and the RGB-D camera. My goal is to have a combined point cloud from both sensors. So far, I have RTAB-MAP mapping properly using only the LiDAR and ICP Odometry. A 3D point cloud is created as expected, and projected properly on the map frame. I used the velodyne example from the ROS2 branch of RTAB-MAP on github as a reference. However, when I try to add the RGB-D Camera I am facing a few issues. When I try to use both the camera and the LiDAR, it seems that the resulting point clouds are not merged (I have tried using the Point Cloud Assembler, and Point Cloud Aggregator, nodes but my approach does not seem to work). To clarify, I am using: - ICP-Odometry node using LiDAR input - Base RTAB-MAP node (for SLAM) which uses both the LiDAR input and RGB-D input (rgb and depth data from the camera). - Point Cloud assembler node using the data from 'odom_filtered_input_scan' topic, and RGB-D topics from the camera The result of this are two point clouds, which are not combined to produce a single one. The RGB-D point cloud is not even overlaid on the point cloud produced by the LiDAR. See bellow  I tried troubleshooting the problem in the following way: 1. Launch RTAB-MAP using only RGB-D odometry and with the Camera as the main sensor. 2. Check the TF-Tree, and the transformations from the Camera frame to the base_footprint frame. 3. Check the robot URDF model. My conclusions are the following: - When launching RTAB-MAP using only RGB-D odometry, the point cloud seems to be transformed in a weird way (see bellow). As I drive around, the point cloud get's worse and worse.. as expected since I suspect a frame transformation not being properly computed.  - When checking the TF-Tree and transformations everything seems to be correct (see attached image)  - The robot URDF seems fine too. I am quite perplexed because everything works fine with the 3D LiDAR but all problems come from using the camera (even in single RGB-D camera mapping..) If anyone can help me out that would be very nice, and if you want more explanations or clarifications I am happy to provide them. Sorry if I am misusing some terms, I am quite new to this.. To run the simulation, you can have a look out this repo I am using (https://github.com/Althyrios/leo_simulator-ros2). I am also attaching the rtabmap launch file, I am using to combine the two sensors (RGB-D Camera and 3D Lidar). rtabmap_livox-rgbd.py Thanks! - Alex --- Edit: added a missing figure |

|

Administrator

|

Hi,

It seems that the rgbd_camera config in the URDF is wrong, or it is not compatible with the gazebo version I am using. To make the camera work correctly, I had to hard-code these values (based on that example):

diff --git a/leo_description/urdf/macros.xacro b/leo_description/urdf/macros.xacro

index 98cdf40..b440345 100644

--- a/leo_description/urdf/macros.xacro

+++ b/leo_description/urdf/macros.xacro

@@ -750,11 +750,10 @@

<camera>

<image>

<format>R8G8B8</format>

- <width>${gazebo_camera_width}</width>

- <height>${gazebo_camera_height}</height>

+ <width>320</width>

+ <height>240</height>

</image>

- <horizontal_fov>${gazebo_camera_horizontal_fov}</horizontal_fov>

- <vertical_fov>${gazebo_camera_vertical_fov}</vertical_fov>

+ <horizontal_fov>1.047</horizontal_fov>

<clip>

<near>${gazebo_camera_color_clip_near}</near>

<far>${gazebo_camera_color_clip_far}</far>

It is because the cx/cy seem stuck at 160/120, which only work if resolution is 320/240. For the FOV, if I set something else, the cloud doesn't match anymore the lidar cloud.

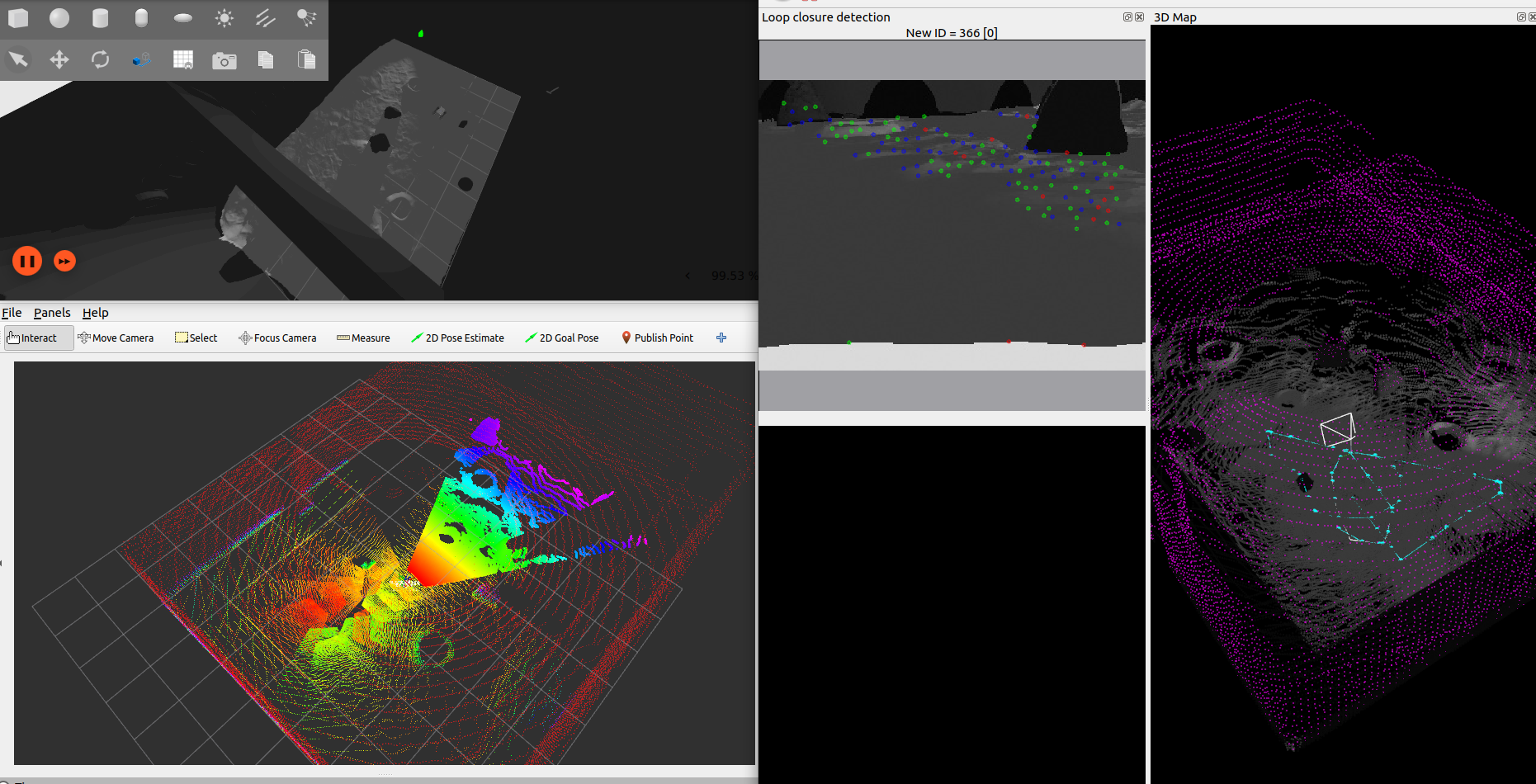

Before  After  For rtabmap config using lidar and camera at the same time, here an updated version of your launch file rtabmap_livox-rgbd_ML.py  cheers, Mathieu |

Re: Combine 3D Lidar and RGB-D Camera to create a single 3D point cloud (sensor fusion)

|

Hi again,

Sorry for the late reply on this thread. Thanks a lot for taking the time to reply to my questions! I have tried to incorporate your proposed solution and here are my findings: 1. The camera's intrinsic parameters were indeed wrong. I too cannot use any other values than the one you suggested. Unsure as to why this is the case currently... The camera's depth cloud is not properly aligned with the floor. 2. Regarding the launch config for rtabmap to produce a point cloud which merges inputs from both the RGBD camera and the 3D LiDAR, I have run into some issues. When I use the code you provided, the point cloud generated only seems to use the LiDAR data and ignores RGBD input. However, I noticed that you had set the parameter: RGBD/CreateOccupancyGrid : False. I experimented and set this one to true, and at the same time added another parameter: Grid/Sensor : 2 , which to my understanding, allows RTABMAP to generate a point cloud using both 3D LiDAR and Depth data. With these two changes, I have been able to get what I need. Yet, I am not sure if this is the correct approach or if I missed something in your response to get the results I desire. Would be possible to confirm or correct my approach please? Cheers, Alex |

|

Administrator

|

Hi Alex,

If you need a single point cloud combining lidar and RGB-D cloud, yes, using "Grid/Sensor: 2" can do the job. However, if you don't need the combined cloud online, it is possible to export both point clouds afterwards separately and combine them in CloudCompare/MeshLab. cheers, Mathieu |

Re: Combine 3D Lidar and RGB-D Camera to create a single 3D point cloud (sensor fusion)

|

Hi Mathieu,

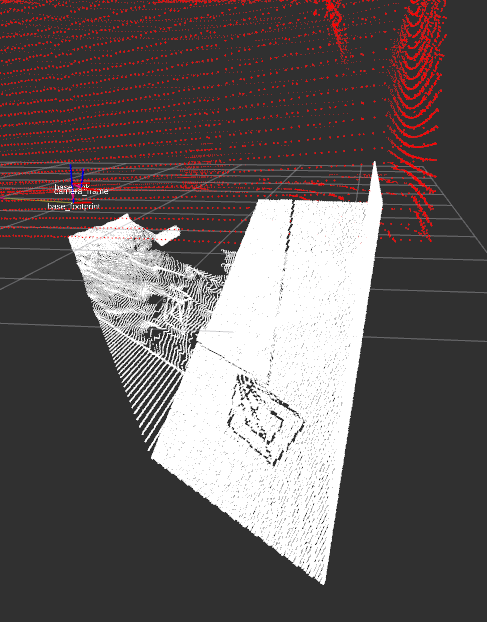

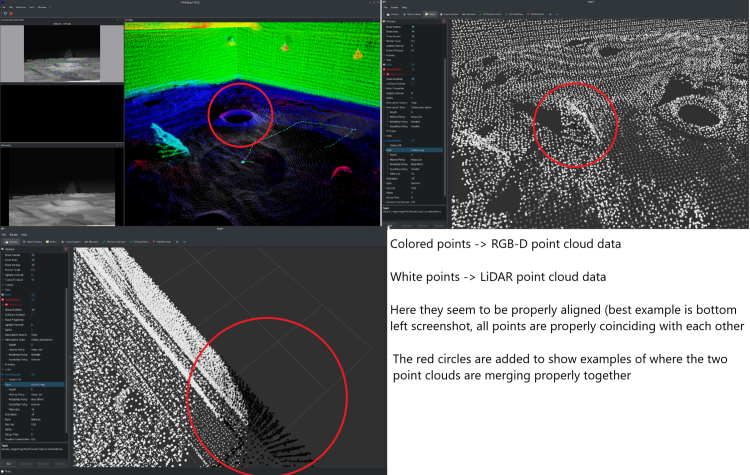

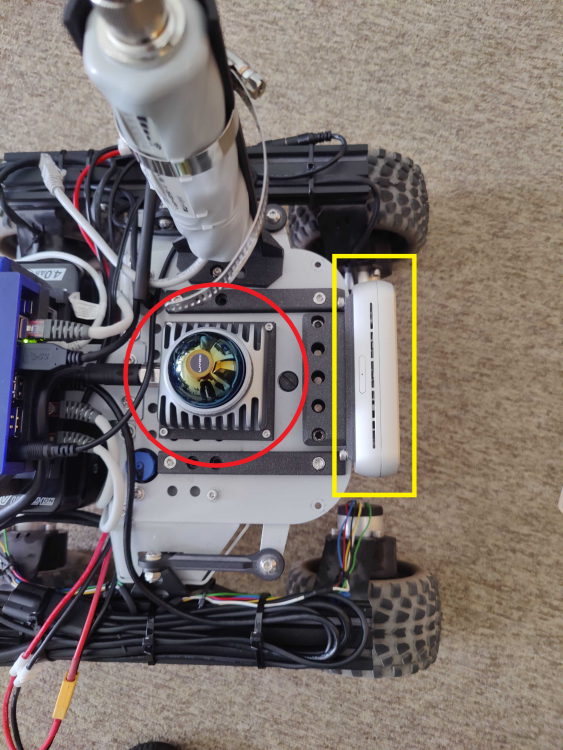

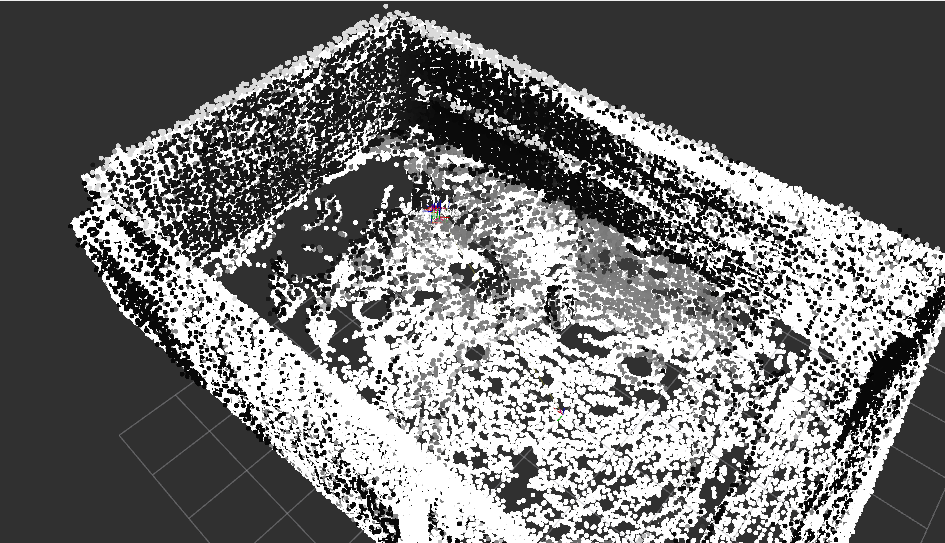

Thanks for the speedy reply! Okay, this clarifies things now. Indeed I need a merged point cloud online, so this solution works well for me :) In parallel, I have been transitioning from the Gazebo simulation to a real robot, using the same configuration (RTABMAP, robot config, etc...). I ran however into a new "problem". When running the launch configuration for RTABMAP on the real robot, (with Grid/Sensor:2), the final point cloud (the merge between RGB-D camera and LiDAR) seems to not be aligned properly. In fact, I notice a considerable displacement between the two (see images). What's more, is that in the Gazebo simulation this displacement is completely absent, which has lead to some confusion on my end. At first I thought that perhaps RTABMAP does not look at the transforms from Camera -> Base_Footprint and LiDAR -> Base_Footprint when merging both point clouds (i.e. simply adds all the points into a single PointCloud2 message without checking whether they should be aligned further), but this does not seem to make sense because in the simulation, RTABMAP correctly merges them and aligns them as expected (no shift in the two point clouds). Another point to add is that both robot descriptions, real and in simulation, are identical. Here are some screenshots to give you a visual example: Simulation:  Real Example:  In the real example, there is a shift between white and colored points, where in fact they should be one on top of another (i.e. part of the same object). This does not happen in the simulation. ---- The RTABMAP launch file remains the same as the one you have provided in the above solution (same thread), just with two parameters changed: 1. "RGBD/CreateOccupancyGrid: true" 2. "Grid/Sensor: 2" I am subscribing to the "/cloud_map" topic which is visualized in the screenshots, and the reference frame for RVIZ is "/map". In terms of the sensor placement on the real/simulated robot, I am also attaching a picture of the robot here:  Red circle ---> LiDAR Livox MID360 Yellow Rectangle ---> RealSense RGB-D Camera D455 It might be naive to suggest, but the displacement noted in the merged pointcloud seems to be more or less the same as the distance between the RGB-D camera and the Lidar.. could this be linked to cause of this issue? My main concern is to figure out a way to properly merge the point clouds in the real-life scenario. Any ideas? Thanks a lot and I look forward to your reply! Please let me know if you need more details, I am happy to provide them! Cheers, Alex |

|

Administrator

|

If the clouds are not aligned when the robot is not moving:

* The transform between the camera and the lidar may not be accurate (sometimes rotation error can cause bigger issue at longer distance), so a lidar/camera calibration may be required. * The scale of the camera point cloud is maybe off (I would expect the lidar scale should be close to reality), because of some bad calibration. A small experiment to verify this is to measure with a tape the distance between the camera and a wall, and compare with the values published by the depth image. If the clouds are not aligned when the robot is moving: * Check if the camera and lidar are using timestamps synced with same computer's clock. * You can try with to enable "odom_sensor_sync:=true" for rtabmap node, this will adjust camera point cloud/lidar based on their stamp and odometry received. Unless the camera can see stuff that the lidar cannot see, you may consider just using the lidar for the occupancy grid. cheers, Mathieu |

Re: Combine 3D Lidar and RGB-D Camera to create a single 3D point cloud (sensor fusion)

|

This post was updated on .

Hi again,

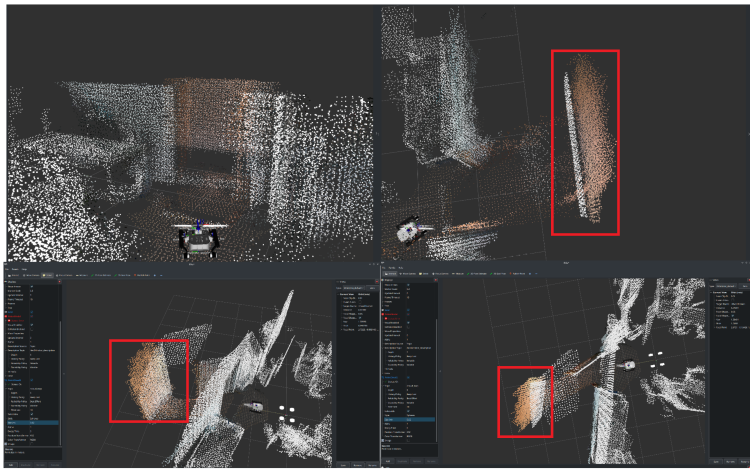

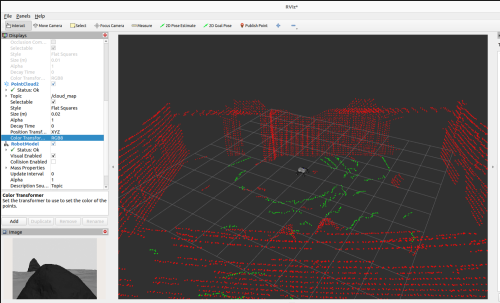

Thanks a lot for the input, indeed the transforms between the camera and LiDAR were not accurate. I remounted the sensors on the robot, checked with the CAD model and redefined the transforms in the URDF. The point clouds are now aligned much better, yet not perfect. As you suggested, camera LiDAR calibration is required which will be one of my next steps! :D That said, I am facing a new issue/concern and would like your input on this if possible please. In this thread, we previously discussed how to combine both point clouds (generated from RGB-D and LiDAR) to generate a single colored point cloud. I transferred this into a simulation to get perfect results for testing purposes. I discovered however that I am not getting the desired result. Perhaps I did not previously explain what I needed well, so I will try to be as detailed and concise as possible. Sorry for the added confusion.. My goal is to use RTABMAP to generate a single point cloud, which is colored incrementally by the RGB-D camera online. That is, as I am moving my robot inside an environment, I want the points that have already been scanned by the LiDAR to be colored based on the RGB data from the camera. If there are points that are only visible from the LiDAR, I want them to also remain in the generated point cloud, and updated with color if the camera sees them. I know that is possible to do in post-processing via the database viewer and exporting the colored LiDAR point cloud, but I would like this done online instead. I did some research and stumbled upon these threads in the forum: 1. http://official-rtab-map-forum.206.s1.nabble.com/Livox-Avia-Depth-cameras-and-organized-point-clouds-td9200.html#a9220 | Possible online coloring of the LiDAR point cloud 2. http://official-rtab-map-forum.206.s1.nabble.com/How-to-utilize-D455-sensor-to-colorize-3d-point-cloud-map-created-using-lidar-td10438.html | Coloring the point cloud in post-processing 3. http://official-rtab-map-forum.206.s1.nabble.com/Painting-a-pointcloud-td8130.html | Looks like this person was able to color the point cloud online but I cannot reproduce this Based on these findings, I updated the launch file in an attempt to achieve the desired results but I have not been successful. What I noticed is that while the initial scan of the environment seems to properly color the LiDAR point cloud with the RGB information, the moment the camera is looking somewhere else, the colored points are immediately replaced by the LiDAR scan, that is, they lose their color. If the camera passes again from a previously scanned location, the points without color (I assume generated from the LiDAR) are replaced with colored ones (I guess generated from the RGB-D camera), and once the camera looks somewhere else, the colored ones are replaced once again with non-color values. Here is a visual example of what I tried explaining so far:   Notice how in the left image, the points in the FoV of the camera are colored, but once the robot rotates to the right (right-hand image) and the camera FoV changes, these previously colored points are then overwritten by the LiDAR scan values. Similarly, the previously scanned points from LiDAR scans, are replaced with colored ones generated from the camera. I suspect that the nodes in charge of mapping are overwriting themselves in the current setup. To generate this results I am using the following RTABMAP launch file rtabmap_livox_rgbd_lidar.py. I saw some examples were they set the following parameters: RGBD/CreateOccupancyGrid : False and Grid/Sensor : 1 (default), and instead add: 'gen_depth': True, 'gen_depth_decimation': 8, 'gen_depth_fill_holes_size':1, But this simply results in a uncolored point cloud generated only from LiDAR in RVIZ. See below:  The only way that I can somehow incorporate color information in the LiDAR point cloud via the RGB-D camera, is if I set : 'RGBD/CreateOccupancyGrid': 'true', 'Grid/Sensor': '2', I know this has been quite a long post, but I tried including as much information as possible. To summarize, my question is whether it is possible to configure RTABMAP to color the LiDAR point cloud online using RGB-D data, and display this in RVIZ by subscribing to the /cloud_map topic. The whole goal is to use the 3D LiDAR to map the entire environment and complement it with RGB-D data once the camera sees an already scanned area online. The repository I am using for the simulation environment is the following: https://github.com/Alexandre-Frantz/leo_simulator-ros2 Also, perhaps this is a long shot but I would be also open to schedule an online meeting with you to perhaps better explain or show you live the results I am getting. I understand however if this is not possible, but at this point I am going around in circles trying to figure this out. I am happy to also provide you with more code should you need it for testing reasons! Thanks a lot for your time and for all your help so far, I am looking forward to your inputs! Best, Alex |

|

Administrator

|

Hi Alex,

When using Grid/Sensor=2 (with Grid/RangeMax=0, Grid/ClusterRadius=1 and Grid/DepthDecimation=1), as soon as the points seen by the camera exit the field of view, the lidar will override the points over time. It is not currently possible to do what you want just by changing some parameters. A workaround would be to use Grid/Sensor=0 in rtabmap node, and enabling gen_depth if you are using a single RGB camera, then use map_assembler node to generate the map cloud from the camera. That way you will have a RGB cloud and a lidar cloud of the map. With an external node, you could subscribe to these 2 clouds, then merge them so that lidar points close to RGB points are filtered (using something like this cloud subtraction function). You will end up with a unique point cloud with RGB and lidar points not overlapping. Or make the lidar points look small and RGB points look bigger in rviz, like this:  Here is the command I used to spawn a map_assembler after launching your launch file: ros2 run rtabmap_util map_assembler --ros-args \ -p Grid/Sensor:="'1'" \ -p Grid/RangeMax:="'0'" \ -p regenerate_local_grids:=true \ -r __ns:=/map_camera \ -p rtabmap:=/rtabmap \ -r mapData:=/mapData \ -p use_sim_time:=true \ -p Grid/ClusterRadius:="'1'" \ -p Grid/DepthDecimation:="'1'"Note that map_assembler has been ported to ros2 recently, and available only if rtabmap_util is rebuilt from source. UPDATE: well I think there is another option not involving any other nodes. You can enable "cloud_subtract_filtering" parameter for rtabmap node. However, some RGB points may be not added if some lidar points were added first at some place.  Regards, Mathieu |

Re: Combine 3D Lidar and RGB-D Camera to create a single 3D point cloud (sensor fusion)

|

This post was updated on .

Hi Mathieu,

Thanks a lot for your input and for your time :) I was able to re-create your proposed solutions successfully, although they do not directly solve my problem, they do allow me to start thinking about other ways to reach my goal. I will be considering the option of involving an external node to filter out the Lidar points with the RGB ones created by the map_assembler. While experimenting with all this, I came across a different approach. I decided to try the following: - Before starting RTAB-MAP. I tried to first colorize the lidar point cloud using the RGB information from the camera. Basically, project the lidar points into the RGB image, extract the color, and assign it to the points from the Lidar point cloud that are currently in the FoV of the camera. An example of what I am describing can be found in this repo: https://github.com/leo-drive/color-point-cloud. - Once the lidar point cloud is colorized, I tried launching RTAB-MAP by providing the colorized point cloud topic to the rtabmap node (/scan_cloud remaped to colorized point cloud topic). I also stopped using the rgbd_sync node since I don´t need the RGB data anymore, assuming that RTABMAP can extract the RGB fields from the colorized point cloud directly and build the map incrementally. Grid/Sensor is set to 0 to use the Lidar for the occupancy grid. Unfortunately, the /cloud_map topic is still giving a segmented map. That is, ground and obstacles color coded green/red respectively. Looks like RGB information assigned to the points after the colorization step is lost, and replaced with values representing ground or obstacle. I was wondering if my approach makes sense, or if RTABMAP is currently capable of adapting to my approach. If not, I will go ahead and try your other proposal (involving an external node). Thanks and looking forward to your reply! Cheers, Alex |

|

Administrator

|

It seems color-point-cloud package returns a point cloud with RGB and Intensity. Based on rtabmap's conversion function, if they have both fields, intensity is used and rgb ignored. Then when generating cloud_map, the green/red color is added by default as the internal cloud doesn't have RGB (intensity instead).

To support that kind of point cloud, we would need to add new types (e.g., "kXYZRGBI", "kXYZRGBIT") here. I created a feature-request on github. However, even if we implement this, the cloud_map would mix RGB and no-RGB points together, which would look like the mixed lidar/RGB points as above. What could be nice is that during Voxel Filtering, if we could tell to mix color from only points having color set (ignoring 0,0,0 values). How the color is mixed right now boils down do these accumulators. So in the RGBA accumulator, that logic would have to be added (instead of average all colors, only average valid colors). So, looks like generating two cloud_map and assembling in external node would be the simplest way to do it for now. Small last note, beware that color-point-cloud's returned cloud doesn't have timestamp field anymore, so that cloud cannot be deskewed. I guess for the coloring to work properly, the lidar should have been already deskewed (so it is why they may don't bother republishing the timestamps), otherwise color will be added to wrong points. cheers, Mathieu |

«

Return to Official RTAB-Map Forum

|

1 view|%1 views

| Free forum by Nabble | Edit this page |