Hello,

I am unsure about the best way to proceed with our two-camera setup. We are using ROS for this.

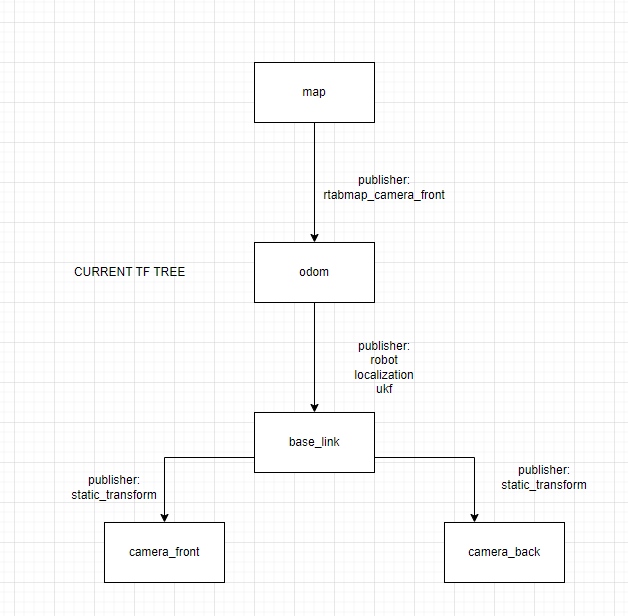

Currently, I am flying a drone that has one d435i camera facing forward feeding into an RTABMAP instance running stereo slam and another d435i facing backwards feeding into a different RTABMAP instance running rgbd slam. Right now, we are using the front facing camera for its odometry and the back facing camera to perform segmentation. our TF tree looks something like this:

The UKF is currently only taking in the flight controller's IMU and the front camera's odometry to estimate the odom->base_link transform.

Right now, if I want to use both point clouds in the map space, I just take the cloud_map from each instance, add them together, and display the result. However, the back camera's cloud ends up much more muddled than the front camera's. I suspect this is because only the front camera is being used to estimate the current odometry.
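
To make the merge step concrete, here is a minimal sketch of what adding the two clouds together amounts to conceptually. It is not our actual code. It assumes each cloud is a list of (x, y, z) points in its own camera frame and that a 4x4 transform into the map frame is available for each camera. The names `transform_point`, `merge_clouds`, `T_map_front`, and `T_map_back` are hypothetical.

```python
def transform_point(T, p):
    """Apply a 4x4 homogeneous transform T (nested lists) to a 3D point p."""
    x, y, z = p
    return tuple(T[r][0] * x + T[r][1] * y + T[r][2] * z + T[r][3] for r in range(3))


def merge_clouds(front_points, T_map_front, back_points, T_map_back):
    """Express both clouds in the map frame and concatenate them.

    T_map_front and T_map_back are assumed camera-to-map transforms.
    """
    merged = [transform_point(T_map_front, p) for p in front_points]
    merged += [transform_point(T_map_back, p) for p in back_points]
    return merged


# Hypothetical example: the front camera sits at the map origin, the back
# camera is yawed 180 degrees and offset by -1 along x.
IDENTITY = [[1, 0, 0, 0], [0, 1, 0, 0], [0, 0, 1, 0], [0, 0, 0, 1]]
T_BACK = [[-1, 0, 0, -1], [0, -1, 0, 0], [0, 0, 1, 0], [0, 0, 0, 1]]

combined = merge_clouds([(1, 0, 0)], IDENTITY, [(1, 2, 3)], T_BACK)
```

In this picture, any error in the back camera's transform shifts every back-camera point in the merged cloud, while the front camera's points are unaffected.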

I was wondering if there is a better way to combine the two RTABMAP instances. I'm not attached to our current approach, so if anyone has a suggestion that involves completely redoing how it's set up, I'm all ears!

I can provide ROS launch files if needed.