Detected Object Location Mark on RTABMAP

|

Hi everybody, I'm working on RTABMAP and object detection on the map. In my work, I will obtain map of the area using RTABMAP at the same time I will detect and recognize the traffic sign of the object. It is not a problem for me to finding the object and recognition. My problem is, how can I mark the location of the founded sign on the extracted RTABmap in real-time?

P.S: I'm working only on simulated environment and robot in GAZEBO. |

|

Administrator

|

Hi,

You may look at this example: https://www.youtube.com/watch?v=o1GSQanY-Do Objects are detected in 2D using Find-Object, but with a RGB-D camera the 3D pose of the object can be found (using corresponding pixels in the depth image and calibration), then publish on TF the pose. You can look at the find-object code for a TF example (the frames we see appearing in the video): https://github.com/introlab/find-object/blob/master/src/ros/FindObjectROS.cpp cheers, Mathieu |

|

Well, how can i label of the founded object using find_object_2d.launch file?

|

|

Administrator

|

Objects cannot be labeled, only by an ID (number). For example, the video I linked above is setting "objects_path" parameter to this directory.

When an object is detected it will have the same ID than the one of the file name. cheers, Mathieu |

|

I found location of sign using your tf_example_node. I subscribed tf and I listened map to object_id and found the sign locations on the world. I wanna mark on this coordinate on RTABMAP or occupancy grid map. For example my stop sign located at (x,y,z) points on the world frame. How can I mark this (x,y) point on the RTABMAP or occupancy grid map.

Meanwhile, thank you Mr. Mathieu. With my respect, MOT |

|

Administrator

|

Hi,

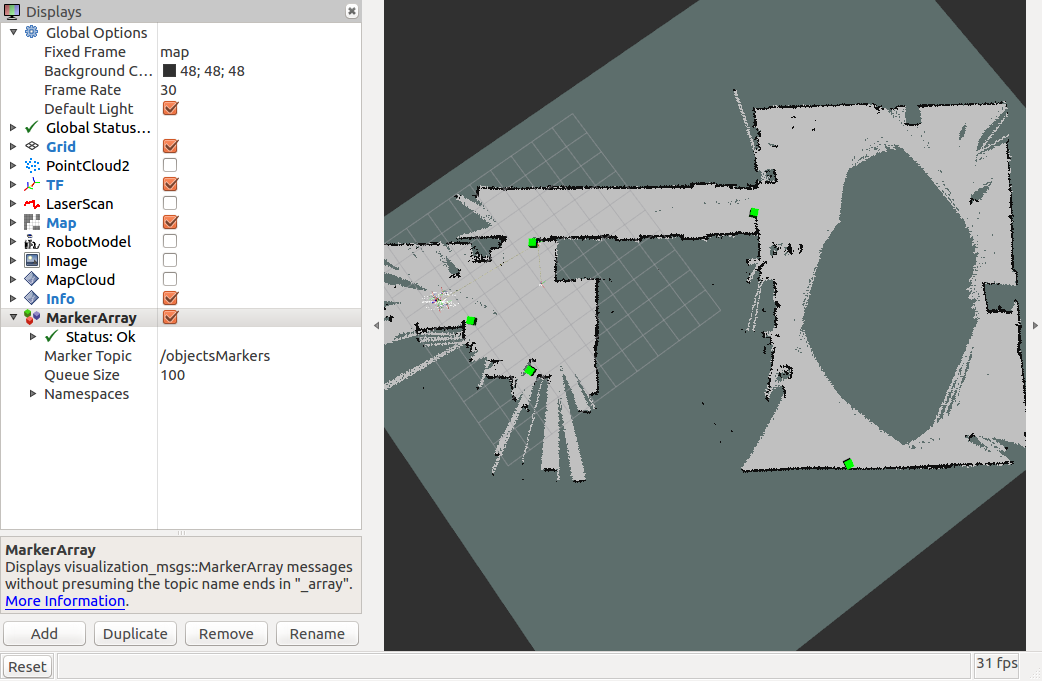

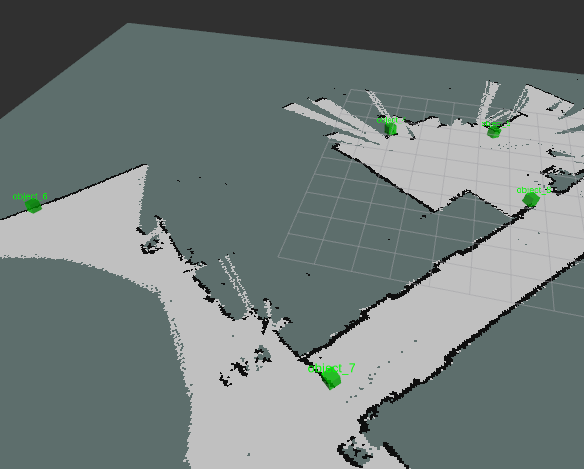

RTAB-Map doesn't have a built-in way to add landmarks to map for visualization or to help graph optimization (maybe in the future it will). The only way right now to save objects (or anything) in the map is to use the User Data approach. An example of this showing Wifi coverage can be found here. Based on that example and the tf_example_node of find_object_2d, I made a new node called UserDataExample shown below. Like tf_example_node, it subscribes to objects detected by find_object_2d, get the 3D poses of the objects and republish them under rtabmap_ros/UserData message format. This message is connected to rtabmap's user_data_async input topic. When rtabmap will receive this message, it will save the data in the next node created. The data (poses of the objects) are then saved in the map and republished through /rtabmap/mapData topic. UserDataExample also subscribes to mapData, parse the graph, interpolate the pose of the detections and republish the poses of the objects on a MarkerArray message that can be shown in RVIZ for convenience. Here the green cubes are the objects detected during the find_object demo of rtabmap.

Code of UserDataExample node: #include <ros/ros.h> #include <tf/transform_listener.h> #include <find_object_2d/ObjectsStamped.h> #include "utilite/UConversion.h" #include <opencv2/core.hpp> #include <rtabmap_ros/UserData.h> #include <rtabmap_ros/MsgConversion.h> #include <rtabmap/core/Compression.h> #include <visualization_msgs/MarkerArray.h> class UserDataExample { public: UserDataExample() : objFramePrefix_("object"), frameId_("base_link") { ros::NodeHandle pnh("~"); pnh.param("object_prefix", objFramePrefix_, objFramePrefix_); pnh.param("frame_id", frameId_, frameId_); ros::NodeHandle nh; subObjects_ = nh.subscribe("objectsStamped", 1, &UserDataExample::objectsDetectedCallback, this); subsMapData_ = nh.subscribe("mapData", 1, &UserDataExample::mapDataCallback, this); pub_ = nh.advertise<rtabmap_ros::UserData>("objectsData", 1); pubMarkers_ = nh.advertise<visualization_msgs::MarkerArray>("objectsMarkers", 1); } // from find_object_2d to rtabmap void objectsDetectedCallback(const find_object_2d::ObjectsStampedConstPtr & msg) { if(msg->objects.data.size()) { cv::Mat data(msg->objects.data.size()/12, 9, CV_64FC1); for(unsigned int i=0; i<msg->objects.data.size(); i+=12) { // get data int id = (int)msg->objects.data[i]; std::string objectFrameId = uFormat("%s_%d", objFramePrefix_.c_str(),id); // "object_1", "object_2" // get pose of the object in base frame tf::StampedTransform pose; try { tfListener_.lookupTransform(frameId_, objectFrameId, msg->header.stamp, pose); } catch(tf::TransformException & ex) { ROS_WARN("%s",ex.what()); return; } data.at<double>(i, 0) = double(id); data.at<double>(i, 1) = ros::Time(msg->header.stamp).toSec(); data.at<double>(i, 2) = pose.getOrigin().x(); data.at<double>(i, 3) = pose.getOrigin().y(); data.at<double>(i, 4) = pose.getOrigin().z(); data.at<double>(i, 5) = pose.getRotation().x(); data.at<double>(i, 6) = pose.getRotation().y(); data.at<double>(i, 7) = pose.getRotation().z(); data.at<double>(i, 8) = pose.getRotation().w(); } rtabmap_ros::UserData dataMsg; dataMsg.header.frame_id = msg->header.frame_id; dataMsg.header.stamp = msg->header.stamp; rtabmap_ros::userDataToROS(data, dataMsg, false); pub_.publish(dataMsg); } } // from rtabmap to rviz visualization void mapDataCallback(const rtabmap_ros::MapDataConstPtr & msg) { if(msg->nodes.size() && nodeToObjects_.find(msg->nodes.back().id) == nodeToObjects_.end()) { int id = msg->nodes.back().id; rtabmap::Signature node = rtabmap_ros::nodeDataFromROS(msg->nodes.back()); if(!node.sensorData().userDataCompressed().empty()) { cv::Mat data = rtabmap::uncompressData(node.sensorData().userDataCompressed()); ROS_ASSERT(data.cols == 9 && data.type() == CV_64FC1); ROS_INFO("Node %d has %d objects", id, data.rows); nodeToObjects_.insert(std::make_pair(id, data)); } } std::map<double, int> nodeStamps; // <stamp, id> std::map<int, rtabmap::Signature> signatures; std::map<int, rtabmap::Transform> poses; std::multimap<int, rtabmap::Link> links; rtabmap::Transform mapToOdom; rtabmap_ros::mapDataFromROS(*msg, poses, links, signatures, mapToOdom); for(std::map<int, rtabmap::Signature>::iterator iter=signatures.begin(); iter!=signatures.end(); ++iter) { // Sort stamps by stamps->id nodeStamps.insert(std::make_pair(iter->second.getStamp(), iter->first)); } // Publish markers accordingly to current optimized graph std::map<int, float> objectsAdded; visualization_msgs::MarkerArray markers; if(nodeToObjects_.size()) { for(std::map<int, rtabmap::Transform>::iterator iter=poses.begin(); iter!=poses.end(); ++iter) { if(nodeToObjects_.find(iter->first) != nodeToObjects_.end()) { cv::Mat data = nodeToObjects_.at(iter->first); for(int i=0; i<data.rows; ++i) { int objId = int(data.at<double>(i,0)); double stamp = data.at<double>(i,1); // The object detection may have been taken between two nodes, interpolate its position. std::map<double, int>::iterator previousNode = nodeStamps.lower_bound(stamp); // lower bound of the stamp if(previousNode!=nodeStamps.end() && previousNode->first > stamp && previousNode != nodeStamps.begin()) { --previousNode; } std::map<double, int>::iterator nextNode = nodeStamps.upper_bound(stamp); // upper bound of the stamp if(previousNode != nodeStamps.end() && nextNode != nodeStamps.end() && previousNode->second != nextNode->second && poses.find(previousNode->second)!=poses.end() && poses.find(nextNode->second)!=poses.end()) { rtabmap::Transform poseA = poses.at(previousNode->second); rtabmap::Transform poseB = poses.at(nextNode->second); double stampA = previousNode->first; double stampB = nextNode->first; UASSERT(stamp>=stampA && stamp <=stampB); rtabmap::Transform v = poseA.inverse() * poseB; double ratio = (stamp-stampA)/(stampB-stampA); v.x()*=ratio; v.y()*=ratio; v.z()*=ratio; rtabmap::Transform robotPose = poseA*v; // transform object pose in map frame rtabmap::Transform objPose( data.at<double>(i,2), data.at<double>(i,3), data.at<double>(i,4), data.at<double>(i,5), data.at<double>(i,6), data.at<double>(i,7), data.at<double>(i,8)); float distanceFromNode = objPose.getNorm(); objPose = robotPose * objPose; if(objectsAdded.find(objId) == objectsAdded.end()) { visualization_msgs::Marker marker; marker.header.frame_id = msg->header.frame_id; marker.header.stamp = msg->header.stamp; marker.ns = "objects"; marker.id = objId; marker.action = visualization_msgs::Marker::ADD; marker.pose.position.x = objPose.x(); marker.pose.position.y = objPose.y(); marker.pose.position.z = objPose.z(); Eigen::Quaterniond q = objPose.getQuaterniond(); marker.pose.orientation.x = q.x(); marker.pose.orientation.y = q.y(); marker.pose.orientation.z = q.z(); marker.pose.orientation.w = q.w(); marker.scale.x = 0.3; marker.scale.y = 0.3; marker.scale.z = 0.3; marker.color.a = 1.0; marker.color.r = 0.0; marker.color.g = 1.0; marker.color.b = 0.0; marker.type = visualization_msgs::Marker::CUBE; //marker.type = visualization_msgs::Marker::TEXT_VIEW_FACING; //marker.text = uFormat("%s_%d", objFramePrefix_.c_str(), objId); markers.markers.push_back(marker); objectsAdded.insert(std::make_pair(objId, distanceFromNode)); } else { // update pose of the marker only if current observation is closer to robot if(distanceFromNode < objectsAdded.at(objId)) { for(unsigned int j=0; j<markers.markers.size(); ++j) { if(markers.markers[j].id == objId) { markers.markers[j].pose.position.x = objPose.x(); markers.markers[j].pose.position.y = objPose.y(); markers.markers[j].pose.position.z = objPose.z(); Eigen::Quaterniond q = objPose.getQuaterniond(); markers.markers[j].pose.orientation.x = q.x(); markers.markers[j].pose.orientation.y = q.y(); markers.markers[j].pose.orientation.z = q.z(); markers.markers[j].pose.orientation.w = q.w(); break; } } objectsAdded.at(objId) = distanceFromNode; } } } } } } if(!markers.markers.empty()) { pubMarkers_.publish(markers); } } } private: std::string objFramePrefix_; ros::Subscriber subObjects_; ros::Subscriber subsMapData_; tf::TransformListener tfListener_; ros::Publisher pub_; ros::Publisher pubMarkers_; std::map<int, cv::Mat> nodeToObjects_; std::string frameId_; }; int main(int argc, char * argv[]) { ros::init(argc, argv, "user_data_example"); UserDataExample sync; ros::spin(); } To make it work with demo_find_object.launch, add the following: <launch> <node name="user_data_example" pkg="my_package" type="user_data_example" output="screen"> <remap from="mapData" to="/rtabmap/mapData"/> </node> <group ns="rtabmap"> <node name="rtabmap" ...> <remap from="user_data_async" to="/objectsData"/> ... </node> </group> </launch> Final note: When a loop closure is detected and the graph is optimized, the objects will move with the graph so that their poses are always valid, thus matching the optimized occupancy grid. cheers,Mathieu |

|

Administrator

|

Hi,

I integrated the example above directly in rtabmap_ros project for convenience. See file SaveObjectsExample.cpp code. The code also includes a fix for the wrong interpolation pose's angle (here). Running that example from the original find-object example (demo_find_object.launch has been also updated to include save_objects option): $ roslaunch rtabmap_ros demo_find_object.launch save_objects:=true $ rosbag play --clock demo_find_object.bag  cheers, Mathieu |

|

Hi, I'm working on RTABMAP and object detection on the map.

When I run the example(demo_find_object.launch with save_objects:=true) I fail to publish markers accordingly to current optimized graph. I traced the code SaveObjectsExample.cpp, I found I cannot pass the conditional below: if(previousNode != nodeStamps.end() && nextNode != nodeStamps.end() && previousNode->second != nextNode->second && poses.find(previousNode->second)!=poses.end() && poses.find(nextNode->second)!=poses.end()) I demo the example with the source bag demo_find_object.bag I likes RTABMAP very much, i really hope someone can help, please! |

| Free forum by Nabble | Edit this page |