Differences between ROS output vs. desktop

Differences between ROS output vs. desktop

|

Hello,

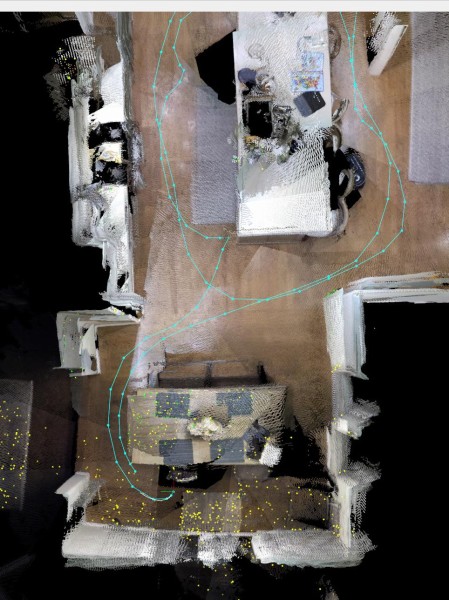

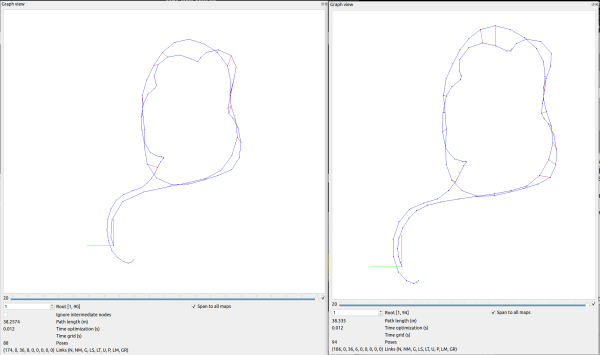

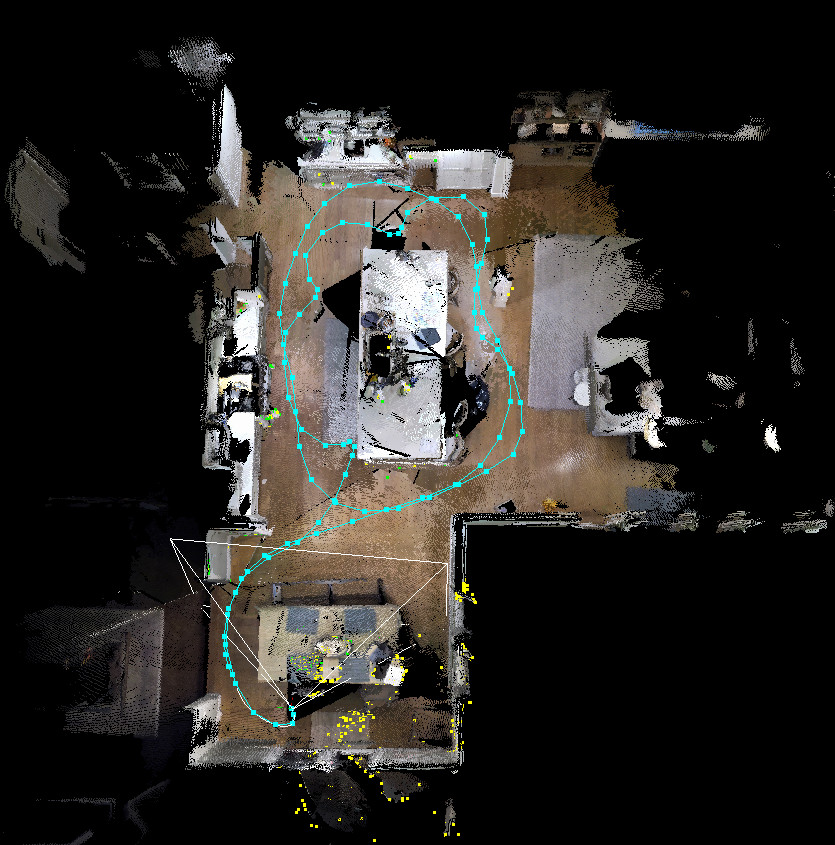

Thank you very much for all the excellent work with RTAB-Map. I have been working for some time to achieve an optimal point cloud map using a robot equipped with a Kinect for Azure depth cam. I am doing localization against it, but also running some object segmentation so I need some really clean clouds. Thanks to some of the posts in this forum I'm able to generate a pretty nice map in the RTAB desktop application, but I am getting different subpar results when I run the same recording through the ROS node, referencing the same config file. I’m running the same rtabmap binary built within the ROS workspace. The ROS-generated point cloud has several large offsets between the walls as the run loops around the same areas. Desktop:  ROS:  The two maps seem to be doing roughly the same number of loop closures, but in different places. Here is a side-by-side of the two graph views:  Do you have any ideas where the discrepancies might be between the two setups? - Desktop database - ROS-generated database - ROS launch file - Original MKV file Playback via ROS driver: ros2 run azure_kinect_ros2_driver azure_kinect_node --ros-args -p recording_file:=kitchen_loop19_tilt30_720p_30fps_wfov.mkv And to get mkv playback working in the RTAB desktop app I had to apply this patch. Thank you for your help! |

|

Administrator

|

This post was updated on .

Hi,

after investigating the results, it seems in ROS2 you are not subscribing to a rectified RGB image. Following ROS1 suggestions from my pull request here: https://github.com/microsoft/Azure_Kinect_ROS_Driver/pull/166 here is the corresponding ROS2 version for RGB+Depth:

# compute quaternion of the raw /imu

ros2 run imu_filter_madgwick imu_filter_madgwick_node --ros-args \

-r /imu/data_raw:=/imu \

-p use_mag:=false \

-p publish_tf:=false

# Rectify RGB image /rgb/image_raw -> /rgb/image_rect

ros2 run image_proc image_proc --ros-args \

-r camera_info:=/rgb/camera_info \

-r image:=/rgb/image_raw \

-r image_rect:=/rgb/image_rect

# RTAB-Map

ros2 launch rtabmap_launch rtabmap.launch.py \

rtabmap_args:="--delete_db_on_start" \

rgb_topic:=/rgb/image_rect \

depth_topic:=/depth_to_rgb/image_raw \

camera_info_topic:=/rgb/camera_info \

frame_id:=camera_base \

approx_sync:=true \

approx_sync_max_interval:=0.1 \

wait_imu_to_init:=true \

imu_topic:=/imu/data \

qos:=1 \

queue_size:=30

# Launch the MKV

ros2 run azure_kinect_ros_driver node --ros-args \

-p recording_file:=kitchen_loop19_tilt30_720p_30fps_wfov.mkv \

-p color_enabled:=true \

-p fps:=30 \

-p depth_mode:=WFOV_2X2BINNED

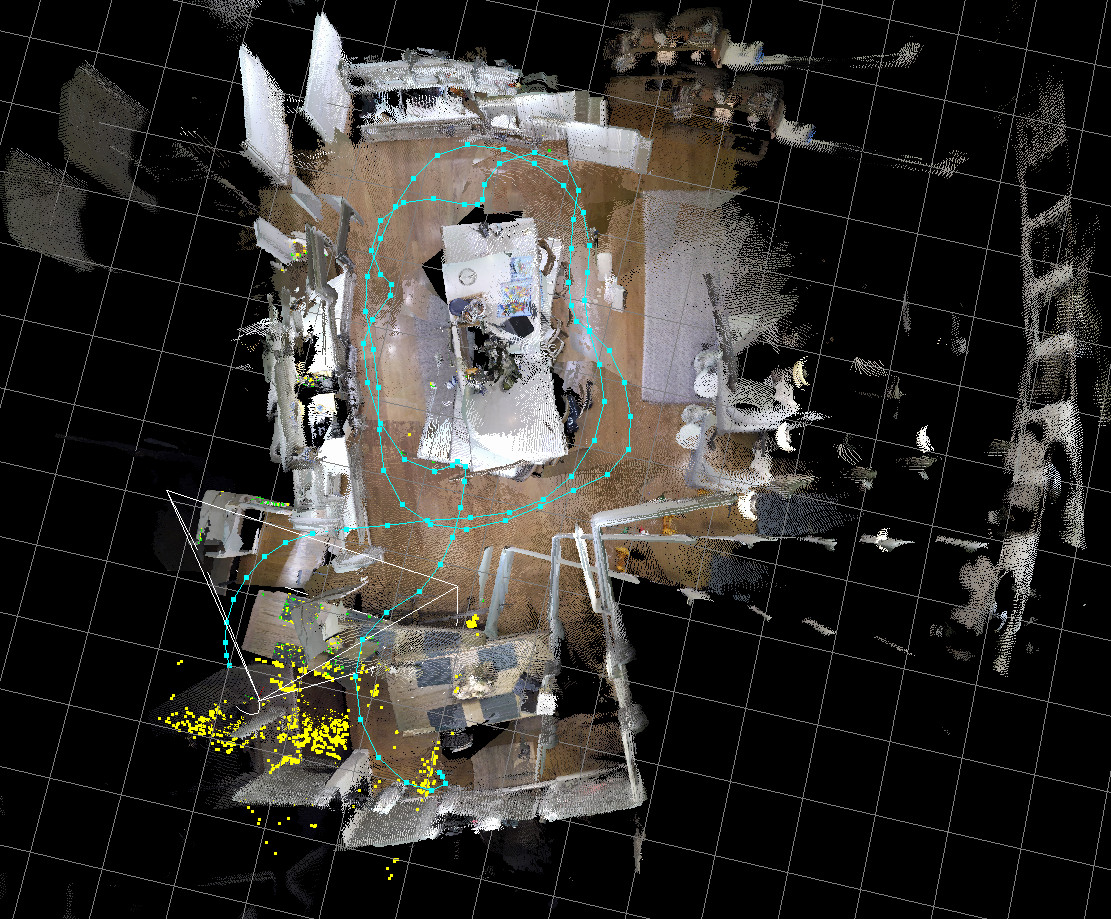

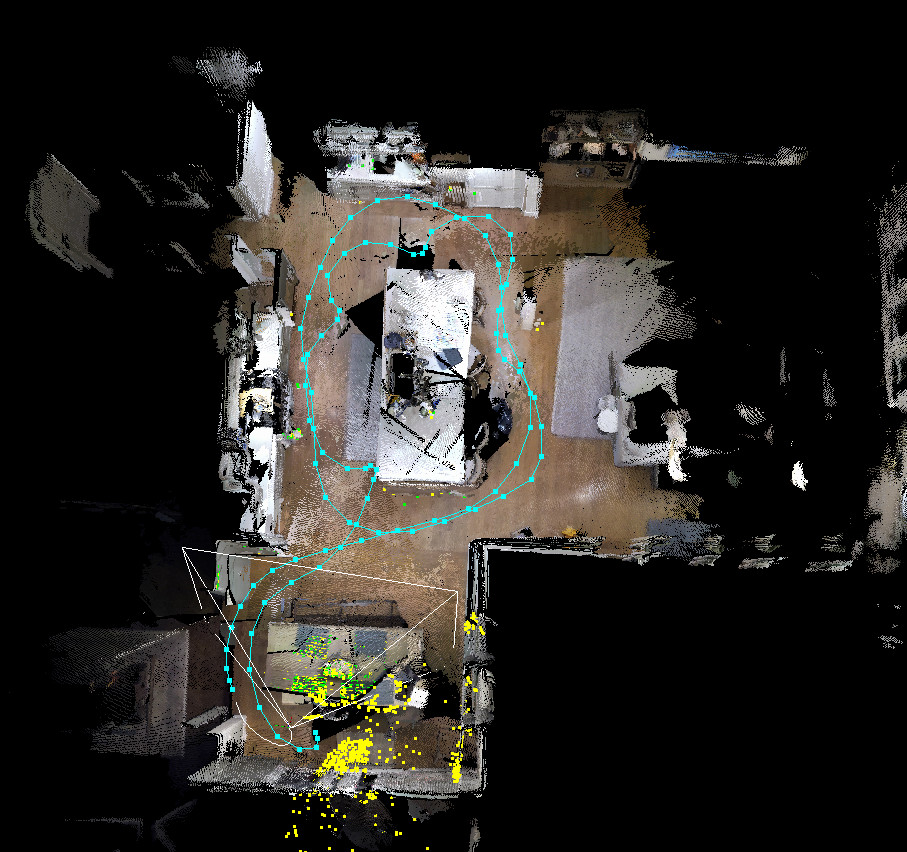

When showing the graph without loop closures, it is easier to see that when using raw RGB images, the visual odometry is drifting a lot:  This is visual odometry with rectified RGB images:  This is with rectified RGB and with loop closures (results very similar to RTAB-Map standalone):  EDIT: Thank you for the patch, I integrated it in this commit: https://github.com/introlab/rtabmap/commit/59d220c54f58c66f3d11484176003ba89ef6bdf5 cheers, Mathieu |

Re: Differences between ROS output vs. desktop

|

It's working great now. I thought the RGB images were already being rectified in the k4a driver, but I guess not. Thank you Mathieu!

One last question, I am running some segmentation, etc. on the RGB images and subscribing to /voxel_cloud to get the 3D point of each pixel. With this configuration, I'm assuming these point clouds will be registered to the images from /rgb/image_rect? Thanks again! |

«

Return to Official RTAB-Map Forum

|

1 view|%1 views

| Free forum by Nabble | Edit this page |