Error creating Texture Cameras

Error creating Texture Cameras

|

This post was updated on .

Hey,

I was trying to run the following piece of code to create a mesh from the following example: http://official-rtab-map-forum.206.s1.nabble.com/Is-there-a-RTABMap-document-td1162.html. My piece of the code is: ------ for(std::map<int, Transform>::iterator iter=optimizedPoses.begin(); iter!=optimizedPoses.end(); ++iter) { Signature node = nodes.find(iter->first)->second; // uncompress data node.sensorData().uncompressData(); pcl::PointCloud<pcl::PointXYZRGB>::Ptr tmp = util3d::cloudRGBFromSensorData( node.sensorData(), 4, // image decimation before creating the clouds 4.0f, // maximum depth of the cloud 0.0f); pcl::PointCloud<pcl::Normal>::Ptr tmpWithNormals = util3d::computeNormals(tmp); pcl::PointCloud<pcl::PointXYZRGBNormal>::Ptr tmpNoNaN(new pcl::PointCloud<pcl::PointXYZRGBNormal>); pcl::PointCloud<pcl::PointXYZRGBNormal>::Ptr tmpNoNaN2(new pcl::PointCloud<pcl::PointXYZRGBNormal>); std::vector<int> index; //Compute cloud with normals pcl::concatenateFields(*tmp,*tmpWithNormals,*tmpNoNaN); //Remove NaN from clouds pcl::removeNaNNormalsFromPointCloud(*tmpNoNaN, *tmpNoNaN2, index); if(!tmpNoNaN2->empty()) { *cloud += *util3d::transformPointCloud(tmpNoNaN2, iter->second); // transform the point cloud to its pose } cameraModels.insert(std::make_pair(iter->first, node.sensorData().cameraModels()[0])); images.insert(std::make_pair(iter->first, node.sensorData().imageRaw())); } ----------------------- I am obtaining the following error: [FATAL] (2018-04-02 16:44:55.233) util3d_surface.cpp:637::createTextureCameras() Condition (depthIter->second.type() == CV_32FC1 || depthIter->second.type() == CV_16UC1) not met! what(): [FATAL] (2018-04-02 16:44:55.233) util3d_surface.cpp:637::createTextureCameras() Condition (depthIter->second.type() == CV_32FC1 || depthIter->second.type() == CV_16UC1) not met! Can someone help me understanding this issue? Thanks, Bruno |

|

Administrator

|

Hi,

The interface has changed. The "images" map should contain depth images, not RGB images. So changing your code to: images.insert(std::make_pair(iter->first, node.sensorData().depthRaw()));The depth image is also optional, so you can just send an empty map of images. Current interface is: pcl::TextureMesh::Ptr RTABMAP_EXP createTextureMesh( const pcl::PolygonMesh::Ptr & mesh, const std::map<int, Transform> & poses, const std::map<int, CameraModel> & cameraModels, const std::map<int, cv::Mat> & cameraDepths, float maxDistance = 0.0f, // max camera distance to polygon to apply texture float maxDepthError = 0.0f, // maximum depth error between reprojected mesh and depth image to texture a face (-1=disabled, 0=edge length is used) float maxAngle = 0.0f, // maximum angle between camera and face (0=disabled) int minClusterSize = 50, // minimum size of polygons clusters textured const std::vector<float> & roiRatios = std::vector<float>(), // [left, right, top, bottom] region of interest (in ratios) of the image projected. const ProgressState * state = 0, std::vector<std::map<int, pcl::PointXY> > * vertexToPixels = 0); For convenience, to automatically merge all camera RGB images into single texture, use mergeTextures(). Here the "images" should be the RGB images (vertexToPixels is the last output of the createTextureMesh): cv::Mat RTABMAP_EXP mergeTextures( pcl::TextureMesh & mesh, const std::map<int, cv::Mat> & images, // raw or compressed, can be empty if memory or dbDriver should be used const std::map<int, std::vector<CameraModel> > & calibrations, // Should match images const Memory * memory = 0, // Should be set if images are not set const DBDriver * dbDriver = 0, // Should be set if images and memory are not set int textureSize = 4096, int textureCount = 1, const std::vector<std::map<int, pcl::PointXY> > & vertexToPixels = std::vector<std::map<int, pcl::PointXY> >(), // needed for parameters below bool gainCompensation = true, float gainBeta = 10.0f, bool gainRGB = true, //Do gain compensation on each channel bool blending = true, int blendingDecimation = 0, //0=auto depending on projected polygon size and texture size int brightnessContrastRatioLow = 0, //0=disabled, values between 0 and 100 int brightnessContrastRatioHigh = 0, //0=disabled, values between 0 and 100 bool exposureFusion = false, //Exposure fusion can be used only with OpenCV3 const ProgressState * state = 0); The easiest way to export textures is to let textureCount set to 1, then follow example of export here with singleTexture. cheers, Mathieu |

Re: Error creating Texture Cameras

|

Hi ,

Thanks for the explanation. Although, it wasn't quite clear how can I use the ouput of createMeshtexture on mergetexture() and export it. Could you create a quick example please? Currently, I have the following code: ---- pcl::TextureMesh::Ptr textureMesh = util3d::createTextureMesh( mesh, optimizedPoses, cameraModels, images); cv::Mat merg_texture_mesh = util3d::mergeTextures(textureMesh,images,cameraModels); --- I am not quite sure how to proceed from here. Thanks, Bruno |

Re: Error creating Texture Cameras

|

Hi,

I could improve my code and generate a texture. Although, I can't visualize with pcl viewer. My code to generate the texture mesh is: --- // Merge into one only texture and add color to mesh std::vector<std::map<int, pcl::PointXY> > textureVertexToPixels; cv::Mat merg_texture_mesh = util3d::mergeTextures(*textureMesh,images_RGB,cameraModels_array); cv::imwrite("/home/brunoeducsantos/mapping/examples/build-mapping_rtabmap-Desktop-Default/image.png", merg_texture_mesh); textureMesh->tex_materials[0].tex_file = "/home/brunoeducsantos/mapping/examples/build-mapping_rtabmap-Desktop-Default/image.png"; pcl::io::save("mesh3.obj", *textureMesh); ---- The code regarding the pcl viewer is: ----------- #include <iostream> #include <pcl/visualization/cloud_viewer.h> #include <iostream> #include <pcl/io/io.h> #include <pcl/io/pcd_io.h> #include <boost/thread/thread.hpp> #include <pcl/common/common_headers.h> #include <pcl/features/normal_3d.h> #include <pcl/visualization/pcl_visualizer.h> #include <pcl/console/parse.h> using namespace std; int main() { //Load point cloud //pcl::PointCloud<pcl::PointXYZRGBNormal>::Ptr cloud (new pcl::PointCloud<pcl::PointXYZRGBNormal>); //pcl::io::loadPCDFile("/home/brunoeducsantos/mapping/examples/build-mapping_rtabmap-Desktop-Default/rtabmap_cloud.pcd",*cloud); //Load mesh pcl::TextureMesh mesh; pcl::io::load("/home/brunoeducsantos/mapping/examples/build-mapping_rtabmap-Desktop-Default/mesh3.obj",mesh); boost::shared_ptr<pcl::visualization::PCLVisualizer> viewer (new pcl::visualization::PCLVisualizer ("3D Viewer")); viewer->setBackgroundColor (0, 0, 0); //viewer->addPointCloud<pcl::PointXYZRGBNormal> (cloud, "sample cloud"); //viewer->setPointCloudRenderingProperties (pcl::visualization::PCL_VISUALIZER_POINT_SIZE, 3, "sample cloud"); viewer->addTextureMesh(mesh,"text",0); viewer->addCoordinateSystem (1.0); //Loop while (!viewer->wasStopped ()) { viewer->spinOnce(); //boost::this_thread::sleep (boost::posix_time::microseconds (100000)); } } --------------------------------------------------------------- |

|

Administrator

|

This post was updated on .

Hi,

based on the example on this post, with the new interface:

std::map<int, Signature> nodes;

std::map<int, Transform> optimizedPoses;

std::multimap<int, Link> links;

rtabmap->get3DMap(nodes, optimizedPoses, links, true, true);

pcl::PointCloud<pcl::PointXYZRGBNormal>::Ptr cloud(new pcl::PointCloud<pcl::PointXYZRGBNormal>);

std::map<int, CameraModel> cameraModels;

std::map<int, cv::Mat> images;

float voxel = 0.01f;

for(std::map<int, Transform>::iterator iter=optimizedPoses.begin(); iter!=optimizedPoses.end(); ++iter)

{

Signature node = nodes.find(iter->first)->second;

// uncompress data

node.sensorData().uncompressData();

pcl::PointCloud<pcl::PointXYZRGB>::Ptr tmp = util3d::cloudRGBFromSensorData(

node.sensorData(),

4, // image decimation before creating the clouds

3.0f, // maximum depth of the cloud

voxel); // Voxel grid filtering

pcl::PointCloud<pcl::PointXYZRGBNormal>::Ptr tmpWithNormals = util3d::computeNormals(tmp);

*cloud += *util3d::transformPointCloud(tmpWithNormals, iter->second); // transform the point cloud to its pose

cameraModels.insert(std::make_pair(iter->first, node.sensorData().cameraModels()[0]));

images.insert(std::make_pair(iter->first, node.sensorData().imageRaw()));

}

cloud = util3d::voxelize(cloud, voxel);

pcl::io::savePCDFile("cloud.pcd", *cloud);

pcl::PolygonMesh::Ptr mesh(new pcl::PolygonMesh)

pcl::Poisson<pcl::PointXYZRGBNormal> poisson;

poisson.setDepth(8);

poisson.setInputCloud(cloud);

poisson.reconstruct(*mesh);

pcl::io::savePLYFile("mesh.ply", *mesh);

std::vector<std::map<int, pcl::PointXY> > vertexToPixels;

pcl::TextureMesh::Ptr textureMesh = util3d::createTextureMesh(

mesh,

optimizedPoses,

cameraModels,

std::map<int, cv::Mat>(),

0.0f, // max camera distance to polygon to apply texture

0.0f, // maximum depth error between reprojected mesh and depth image to texture a face (-1=disabled, 0=edge length is used)

0.0f, // maximum angle between camera and face (0=disabled)

50, // minimum size of polygons clusters textured

std::vector<float>(), // [left, right, top, bottom] region of interest (in ratios) of the image projected.

0,

&vertexToPixels);

cv::Mat globalTexture = util3d::mergeTextures(

textureMesh,

images, // raw or compressed, can be empty if memory or dbDriver should be used

ccameraModels, // Should match images

0, // Should be set if images are not set

0, // Should be set if images and memory are not set

4096,

1,

vertexToPixels);

textureMesh->tex_materials[0].tex_file = "mesh.jpg"; // relative path, the *.mtl file will point to texture in same directory as the OBJ

cv::imwrite(textureMesh->tex_materials[0].tex_file, globalTexture);

pcl::io::saveOBJFile("mesh.obj", *textureMesh);

For PCLVisualizer, after being saved on disk, it could work (this visualizer needs that textures are saved to disk before adding a TextureMesh). In rtabmap, the CloudViewer class (a wrapper of PCLVisualizer) can add textureMesh without having to save the texture on disk., with addCloudTextureMesh():

// Need to build with Qt though

CloudViewer viewer;

viewer.addCloudTextureMesh("mesh", textureMesh, globalTexture);

cheers, Mathieu |

Re: Error creating Texture Cameras

|

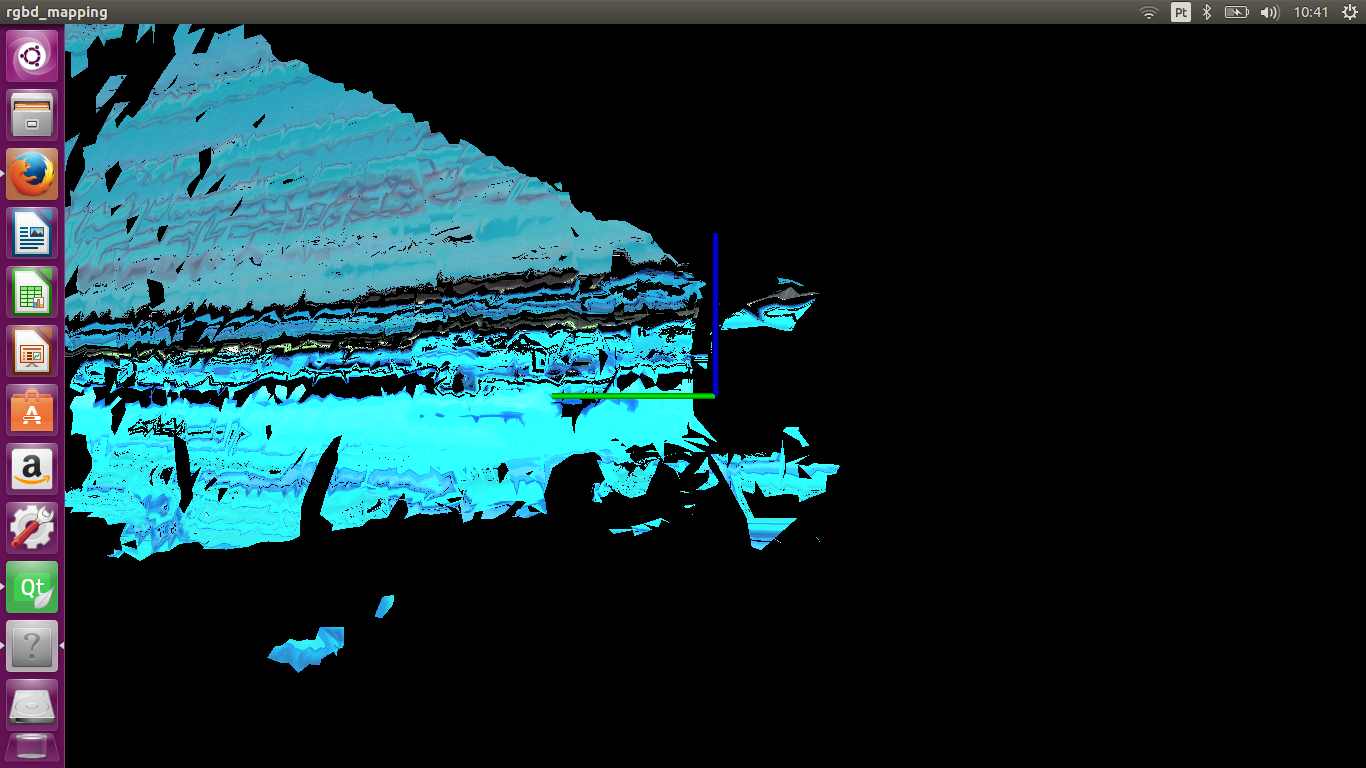

Hi Mathieu,

Thank you for your kind help. I added on my code the cloudviewer. Although, I am obtaining a weird mesh texture.  Can you help me understanding what is wrong ? Thanks, Bruno |

Re: Error creating Texture Cameras

|

PS: The code used for mesh reconstruction was:

/pcl::PolygonMesh::Ptr mesh = util3d::createMesh( //cloud, //voxel*3.0f); |

Re: Error creating Texture Cameras

|

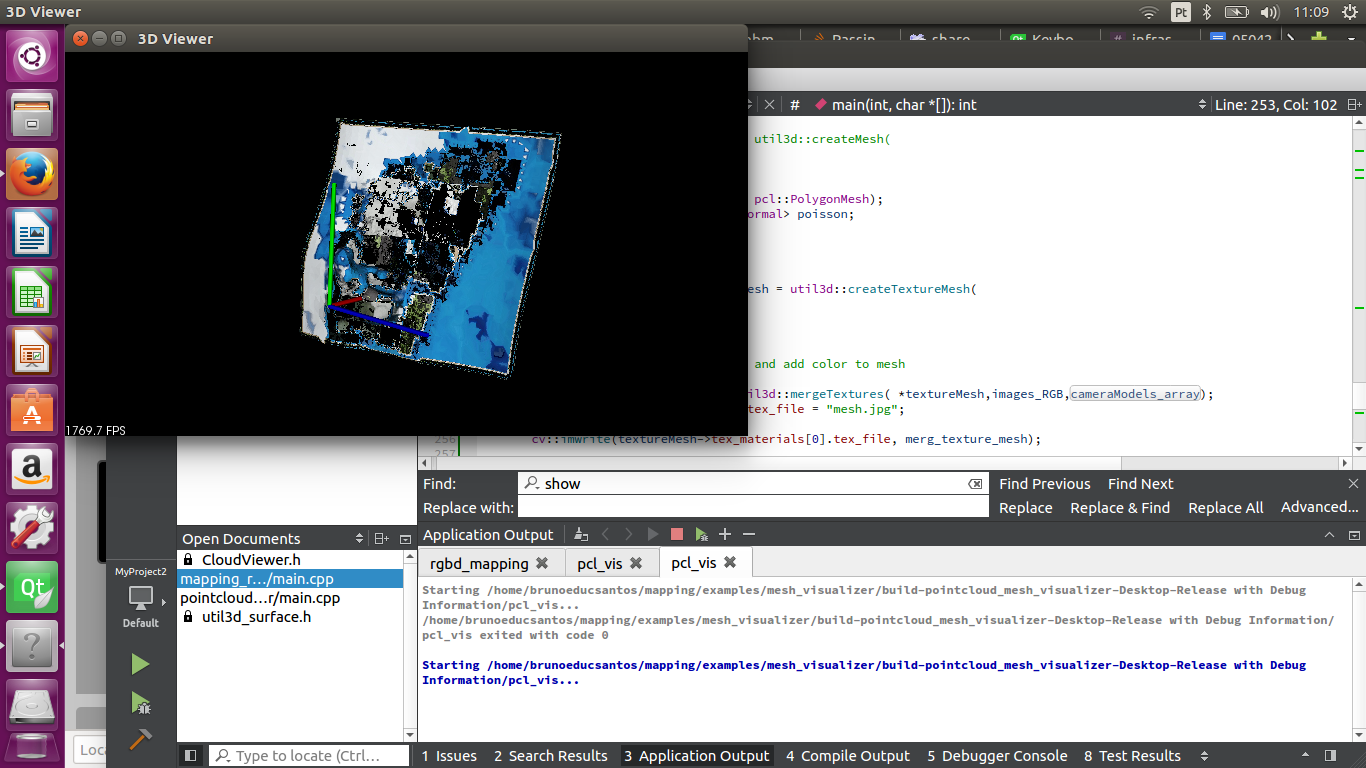

Using this piece of code:

pcl::PolygonMesh::Ptr mesh(new pcl::PolygonMesh); pcl::Poisson<pcl::PointXYZRGBNormal> poisson; poisson.setDepth(8); poisson.setInputCloud(cloud); poisson.reconstruct(*mesh); The mesh reconstruction is the following:

|

|

Administrator

|

There were indeed some issues with the code above as I didn't have time to compile it. Here is a working example:

#include "rtabmap/core/Rtabmap.h"

#include "rtabmap/core/util3d_surface.h"

#include "rtabmap/core/util3d_filtering.h"

#include "rtabmap/core/util3d.h"

#include "rtabmap/core/util3d_transforms.h"

#include <pcl/io/pcd_io.h>

#include <pcl/io/ply_io.h>

#include <pcl/io/obj_io.h>

#include <pcl/surface/poisson.h>

#include <stdio.h>

// gui stuff

#include <QApplication>

#include "rtabmap/gui/CloudViewer.h"

using namespace rtabmap;

int main(int argc, char * argv[])

{

printf("Open database and load map...\n");

rtabmap::Rtabmap rtabmap;

rtabmap.init(ParametersMap(), "/PATH/TO/A/DATABASE");

std::map<int, Signature> nodes;

std::map<int, Transform> optimizedPoses;

std::multimap<int, Link> links;

rtabmap.get3DMap(nodes, optimizedPoses, links, true, true);

pcl::PointCloud<pcl::PointXYZRGBNormal>::Ptr cloud(new pcl::PointCloud<pcl::PointXYZRGBNormal>);

std::map<int, std::vector<CameraModel> > cameraModels;

std::map<int, cv::Mat> images;

float voxel = 0.01f;

printf("Assembling %d clouds... ", (int)optimizedPoses.size());

for(std::map<int, Transform>::iterator iter=optimizedPoses.begin(); iter!=optimizedPoses.end(); ++iter)

{

Signature node = nodes.find(iter->first)->second;

// uncompress data

node.sensorData().uncompressData();

pcl::IndicesPtr indices(new std::vector<int>);

pcl::PointCloud<pcl::PointXYZRGB>::Ptr tmp = util3d::cloudRGBFromSensorData(

node.sensorData(),

4, // image decimation before creating the clouds

3.0f, // maximum depth of the cloud

0.0f,

indices.get());

tmp = util3d::voxelize(tmp, indices, voxel); // Voxel grid filtering

pcl::PointCloud<pcl::Normal>::Ptr normals = util3d::computeNormals(tmp);

pcl::PointCloud<pcl::PointXYZRGBNormal>::Ptr tmpWithNormals(new pcl::PointCloud<pcl::PointXYZRGBNormal>);

pcl::concatenateFields(*tmp, *normals, *tmpWithNormals);

*cloud += *util3d::transformPointCloud(tmpWithNormals, iter->second); // transform the point cloud to its pose

cameraModels.insert(std::make_pair(iter->first, node.sensorData().cameraModels()));

images.insert(std::make_pair(iter->first, node.sensorData().imageRaw()));

}

pcl::io::savePCDFile("cloud.pcd", *cloud);

printf("saved cloud.pcd\n");

printf("Mesh reconstruction... ");

pcl::PolygonMesh::Ptr mesh(new pcl::PolygonMesh);

pcl::Poisson<pcl::PointXYZRGBNormal> poisson;

poisson.setDepth(8);

poisson.setInputCloud(cloud);

poisson.reconstruct(*mesh);

// Remove polygons far from the original cloud

util3d::denseMeshPostProcessing<pcl::PointXYZRGBNormal>(mesh, 0, 0, cloud);

pcl::io::savePLYFile("mesh.ply", *mesh);

printf("saved mesh.ply\n");

printf("Texturing... ");

std::vector<std::map<int, pcl::PointXY> > vertexToPixels;

pcl::TextureMesh::Ptr textureMesh = util3d::createTextureMesh(

mesh,

optimizedPoses,

cameraModels,

std::map<int, cv::Mat>(),

0.0f, // max camera distance to polygon to apply texture

0.0f, // maximum depth error between reprojected mesh and depth image to texture a face (-1=disabled, 0=edge length is used)

0.0f, // maximum angle between camera and face (0=disabled)

50, // minimum size of polygons clusters textured

std::vector<float>(), // [left, right, top, bottom] region of interest (in ratios) of the image projected.

0,

&vertexToPixels);

// cleanup textureless polygons

util3d::cleanTextureMesh(*textureMesh, 0);

cv::Mat globalTexture = util3d::mergeTextures(

*textureMesh,

images, // raw or compressed, can be empty if memory or dbDriver should be used

cameraModels, // Should match images

0, // Should be set if images are not set

0, // Should be set if images and memory are not set

4096,

1,

vertexToPixels);

std::string tmpTexName = textureMesh->tex_materials[0].tex_file;

textureMesh->tex_materials[0].tex_file = "mesh.jpg"; // relative path, the *.mtl file will point to texture in same directory as the OBJ

cv::imwrite(textureMesh->tex_materials[0].tex_file, globalTexture);

pcl::io::saveOBJFile("mesh.obj", *textureMesh);

printf("saved mesh.obj\n");

// VTK issue:

// tex_coordinates should be linked to points, not

// polygon vertices. Points linked to multiple different TCoords (different textures) should

// be duplicated.

for (unsigned int t = 0; t < textureMesh->tex_coordinates.size(); ++t)

{

if(textureMesh->tex_polygons[t].size())

{

pcl::PointCloud<pcl::PointXYZ>::Ptr originalCloud(new pcl::PointCloud<pcl::PointXYZ>);

pcl::fromPCLPointCloud2(textureMesh->cloud, *originalCloud);

// make a cloud with as many points than polygon vertices

unsigned int nPoints = textureMesh->tex_coordinates[t].size();

UASSERT(nPoints == textureMesh->tex_polygons[t].size()*textureMesh->tex_polygons[t][0].vertices.size()); // assuming polygon size is constant!

pcl::PointCloud<pcl::PointXYZ>::Ptr newCloud(new pcl::PointCloud<pcl::PointXYZ>);

newCloud->resize(nPoints);

unsigned int oi = 0;

for (unsigned int i = 0; i < textureMesh->tex_polygons[t].size(); ++i)

{

pcl::Vertices & vertices = textureMesh->tex_polygons[t][i];

for(unsigned int j=0; j<vertices.vertices.size(); ++j)

{

UASSERT(oi < newCloud->size());

UASSERT_MSG(vertices.vertices[j] < originalCloud->size(), uFormat("%d vs %d", vertices.vertices[j], (int)originalCloud->size()).c_str());

newCloud->at(oi) = originalCloud->at(vertices.vertices[j]);

vertices.vertices[j] = oi; // new vertex index

++oi;

}

}

pcl::toPCLPointCloud2(*newCloud, textureMesh->cloud);

}

}

QApplication app(argc, argv);

CloudViewer viewer;

viewer.addCloudTextureMesh("mesh", textureMesh, globalTexture);

viewer.show();

app.exec();

return 0;

}

Note that the TextureMesh format is slightly different for exporting OBJ and for visualization with VTK.

Hope this will help, Mathieu |

Re: Error creating Texture Cameras

|

Thanks for the clear work examples. Really awesome!

Can you explain in further detail the VTK loop? Thanks again, Bruno |

Re: Error creating Texture Cameras

|

PS: Sometimes I am obtaining the following error:

[FATAL] (2018-04-10 10:35:25.509) util3d_surface.cpp:1541::mergeTextures() Condition (u < textureSize-emptyImage.cols) not met! Can you give feedback about it? Thanks |

Re: Error creating Texture Cameras

|

I am using a Kinnect v2.

|

|

Administrator

|

This post was updated on .

When exporting to OBJ, the tex_coordinates correspond to polygon vertices so that size(tex_polygons) X polygonSize = size(tex_coords). For VTK, tex_coordinates should correspond to index in the point cloud, not tex_polygons, well what we do is to duplicate points that are shared by polygons so that size(tex_polygons) X polygonSize = size(tex_coords) = size(cloud).

EDIT That VTK loop is now in util3d::fixTextureMeshForVisualization() function for convenience. If you can update the git to this version: https://github.com/introlab/rtabmap/commit/57a62dbbfd3ca07a28d54ce782f4111093fb7468, I added a message to show more info about the assert. cheers, Mathieu |

Re: Error creating Texture Cameras

|

Thanks Mathieu.

How can I evaluate if I got a good mesh reconstruction? Can you point out to some resource ? Thanks, Bruno |

|

Administrator

|

Hi Bruno,

well the current way is to see visually if the geometry looks good and represents well the environment. For more in depth reconstruction evaluation, we would have to do this with a simulated environment (which we know the exact representation of the environment) or that we have very accurate lidar to create the point clouds of the environment, then compare against the point clouds from rtabmap. I think CloudCompare can be used to do such comparison. cheers, Mathieu |

«

Return to Official RTAB-Map Forum

|

1 view|%1 views

| Free forum by Nabble | Edit this page |