Further Processing of camera images & RTAB data -- best way to do it?

|

Hi Mathieu and all!

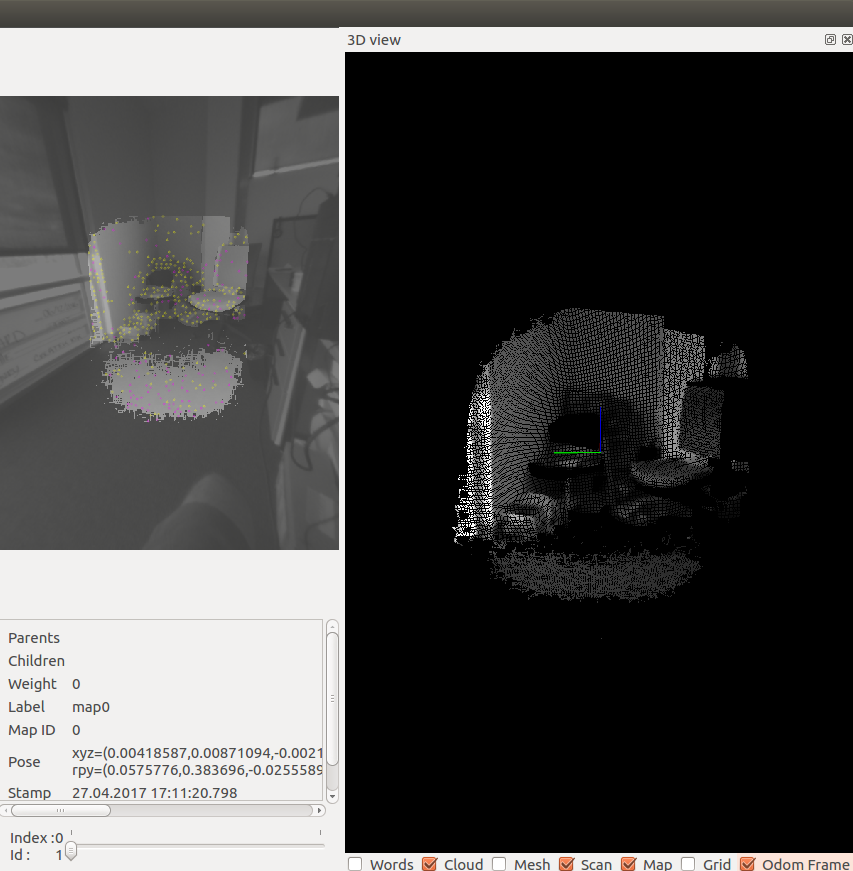

I'm currently developing an application where I do object detection with YOLO's CNN, and then some additional processing, in addition to running SLAM through RTAB-Map. I was wondering what is the best way to route data, and what capabilities RTAB-Map already offers in that regard. To be more precise, there is the following data flow in my setup: camera images, odometry => RTAB-Map => metric map, graph camera images => YOLO => list of object labels with corresponding bounding boxes ("labeled BBs") labeled BBs, odometry => Our own module => filtered bounding boxes 1. Does RTAB-Map offer incremental updates for publishing map updates? Our own module will keep a copy of the map for its own purposes, and it would be good to be able to receive incremental updates as the map gets bigger. 2. Since YOLO might incur significant delays on some computers, the data that our own module receives might not be synchronized well (i.e., labeled BBs arrive much later than camera pose). It might be better to use some cached data of RTAB-Map. Does RTAB-Map offer to retrieve a set of {image, camera pose, (local) map} at a later time? If yes, the best way to do it is would be to create our own message, which contains the set just mentioned, and passes it through YOLO and our own module in a linear fashion. My guess would be that this is offered through the graph structure that it publishes. 3. Any other smart way to solve this task? 4. How would loop closures influence the aforementioned aspects of RTAB-Map (incremental updates, cached data)? Thank you very much. - ibd |

|

Administrator

|

Hi,

Thx referring YOLO object detection, I didn't know about that, kinda cool. 1. I assume you are on ROS? If so, the /rtabmap/mapData output can be used to get incremental data to map. For example, you can look at the rtabmap_ros/MapCloud rviz plugin (which is based on official PointCloud2 rviz plugin) to see how new data is added to 3D map. In MapData msg, there is the optimized graph of the map and only the latest node of "nodes" contains the latest data added to map. So only data of one node is published at after each map update (at default 1 Hz), keeping low bandwidth. Like rtabmapviz or rtabmap_ros/MapCloud rviz plugin, the cloud of the new data is created and added to their list of clouds kept in cache on their side. The optimized graph is used to adjust the poses of all created clouds so far if a loop closure happened for example. 2. If the timestamps of YOLO results are set accordingly to image captured, TF can be used to get a pose in the past. Note the TF has limited history buffered, I think the time to keep past transforms can be increased by a parameter. With the graph sent by rtabmap, we could find the node having the same timestamp than the detection (if same image is used for rtabmap than YOLO) or get the two nodes with timestamps before and after the YOLO result. The pose can be then interpolated between the two nodes, similar to this example where the WiFi captures were done between nodes. 3. Is the goal getting the object 3D pose referred to map? You would need to keep the depth image along with RGB image used with YOLO, to be able to triangulate its 3D local pose in camera frame. Then to get in map frame, the approach above could be used to get 3D pose accordingly to map's graph. You can also look at the find_object_2d's 3D example to see TF of objects published after a detection. 4. The graph published in /rtabmap/mapData contains always all poses in the map, optimized if there was a loop closure. To detect if the poses have changed, either compare all poses with some poses cached from previous msg or use /rtabmap/info topic (which can be exactly synchronized with mapData) and see if "loopClosureId' and "proximityDetectionId" are non-zero. cheers, Mathieu |

|

Hi again!

You might also be interested in YOLO 9000, which can detect many object classes! However there is currently no ROS node available for it. 1. & 4. Yes, in fact I am on the same tango setup as described in my other thread. Thank you, I will look into this optimization once I need it. 2. Since I am using tango, I can cache the TF as you described. Since the delay is rather small (<1s), I'm just assuming that there is usually no loop closure that would change a TF in the past. If it is necessary, I will check out your interpolation example. 3. Yes, exactly. Thank you for your example. I think in the end it will turn out to be an exercise in projection between image and camera coordinates, and in putting together the correct transformations to go between the camera frame to the map frame. Does RTAB-map offer functionality to take the depth image and the "regular" image (used for odometry & loop closure) from different cameras? Asking because tango offers a fisheye camera with a very wide field of view, it might be nicer for loop closure than the color camera (which is the same as the depth camera, by using the infrared spectrum). |

|

Administrator

|

Hi,

as how loop closure and visual odometry is done in rtabmap, a registered depth image is required with the RGB one. We could set the fisheye as the color image input (fisheye would need to be rectified), but the registered depth won't cover the whole field of view of the fisheye (just a small part at the center with low resolution). Features are extracted only where there is a valid depth.  cheers, Mathieu |

«

Return to Official RTAB-Map Forum

|

1 view|%1 views

| Free forum by Nabble | Edit this page |