Global Optimizing with Loop Closure and Navigation After Mapping.

|

Hello,

I'm working on mapping and navigation in an apple orchard using the rtabmap SLAM with stereo odometry from an OAK-D-PR-W POE camera. I'm following the ROS tutorial for stereo handheld mapping (https://wiki.ros.org/rtabmap_ros/Tutorials/StereoHandHeldMapping). I have two questions: Mapping issue: When moving from the 2nd to 3rd row in the orchard, my map sometimes creates errors. These errors occasionally get fixed when loop closure is detected and optimizes the overall map. However, sometimes loop closure doesn't optimize the map well, resulting in duplicated rows and poor mapping quality. Is there a way to delete or refine specific poses that are causing problems in the mapping? I've attached an image of my map showing this issue. database link : https://drive.google.com/file/d/1Y5tr5iF25V79tsQ_Gwst_YG-Ni_uftZf/view?usp=sharing Navigation issue: I'm using the teb local planner with move_base. During navigation, my robot initially localizes itself within the map correctly, but when I provide waypoint goals, it sometimes loses localization in the middle of the rows. This causes incorrect positioning on the map and consequently navigation problems. Is there a method to improve localization using only visual information, without adding additional sensors? Thank you for your help! |

|

Administrator

|

Hi,

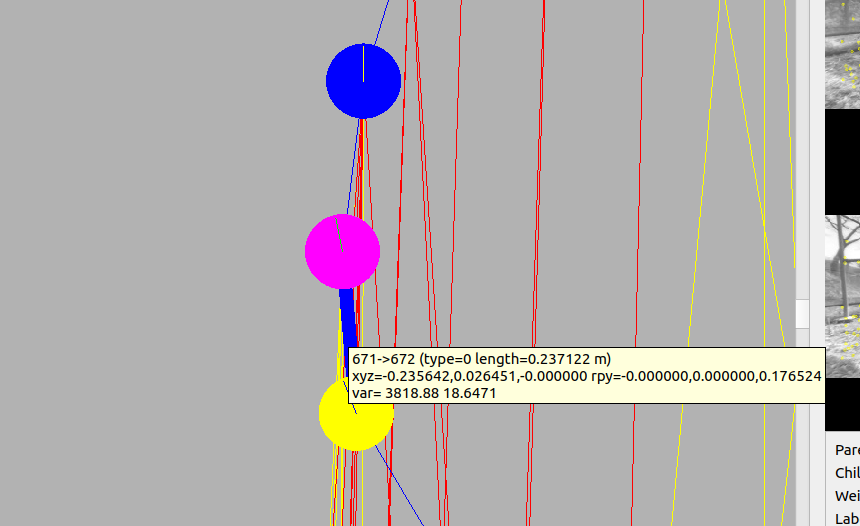

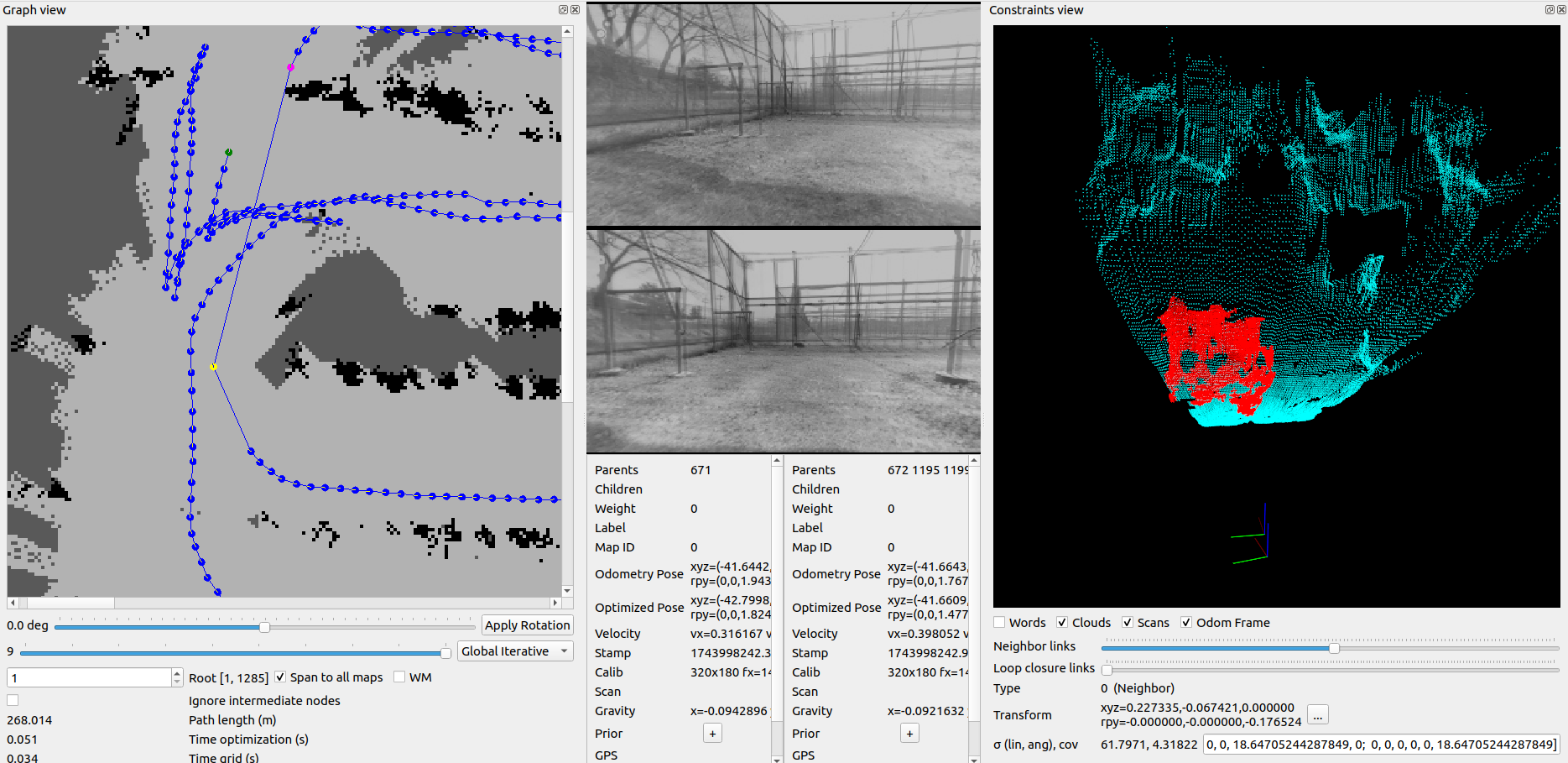

It looks like there are some stereo issues that is causing the VO to drift more. 1) The stereo rectification doesn't look perfect, there is a 1 pixel vertical shift between the left and right cameras:  2) Bad time sync between left and right cameras causing very large covariance on some links like this:  Looking at the images, we clearly see that the left image is synced with the wrong right image:  I've shown on the right the resulting point clouds for two consecutive frames. The red one is generated from the top image, where we see that disparity is way larger than the one below for similar point of view. I think this issue appears to be worst when the robot is rotating at the end of each row. I would try to get a good map before trying to navigate in it. Here would be the steps to improve VSLAM: 1) Improve stereo calibration, 2) Fix stereo sync, 3) If VO looks drifting too much even after fixing 1 and 2, you may check to integrate a VIO approach instead, 4) For visual localization, using SIFT/SURF/SuperPoint could help to localize over time. I see you are outdoor, classic features like ORB/BRIEF/SIFT/SURF are quite sensible to illumination changes / shadows, features like SuperPoint may be more robust in those cases. cheers, Mathieu |

|

Hello,

Thank you for your previous suggestions regarding camera calibration. After several attempts, I was able to achieve the following reprojection errors: rgb Reprojection Error: 1.588567

For your 2nd suggestion can you please let me know that how i fix the stereo_sync issue as I am using the rtabmap_launch file.

|

|

Administrator

|

For the stereo calibration, the reprojection errors look okay, but do a quick visual check by comparing the two rectified images in Gimp for example (with two layers, top one 50% transparent) to see really that there is no more vertical shift. The reprojection error could be good for each individual camera, but you may also check the reprojection error after stereo rectification. From experience, the hardest part to get right in stereo calibration are the stereo extrinsics (rotation and translation between the cameras), not the individual camera intrinsics.

For the stereo sync issue: a. If the camera driver is publishing left/right images with exact same stamp, make sure you use approx_sync:=false in rtabmap. If it is already your setup and there is still bad sync like above, then it is a camera driver issue. You should ask them why their images with exact same stamps are not synchronized. b. If the camera driver is publishing left/right images with slightly different stamps but very close (<1 ms), assuming on hardware side they all shutter at the same time, you can set approx_sync:=true and set approx_sync_max_interval:=0.001 (very small accepted interval). If even with that there are bad synchronizations, you would have to ask on your camera driver's support. For SuperPoint, I don't actively test with latest pytorch/cuda versions, last time I tried I created a doc here: https://github.com/introlab/rtabmap/tree/master/archive/2022-IlluminationInvariant#how-reproduce-results-shown-in-the-paper to reproduce the results of the last paper (comparing SuperPoint). There is also instructions to build a Docker version with SuperPoint support. I'll suggest to improve stereo calibration and stereo sync in first phase, then jump to SuperPoint later. cheers, Mathieu |

|

Hello,

I've been following your recommendations from last week, but I'm still uncertain if stereo rectification is the root cause of my issue. Upon inspecting both the left and right images, I didn't observe any vertical shift: GIMP_VErticalShiftChecking_150.mp4 Additionally, I've checked the timestamps from my stereo camera, and both the left and right topics appear to be synchronized. I used "approx_sync:=true" and set "approx_sync_max_interval:=0.001" in my code: Timestamps:

|

|

Administrator

|

Hi,

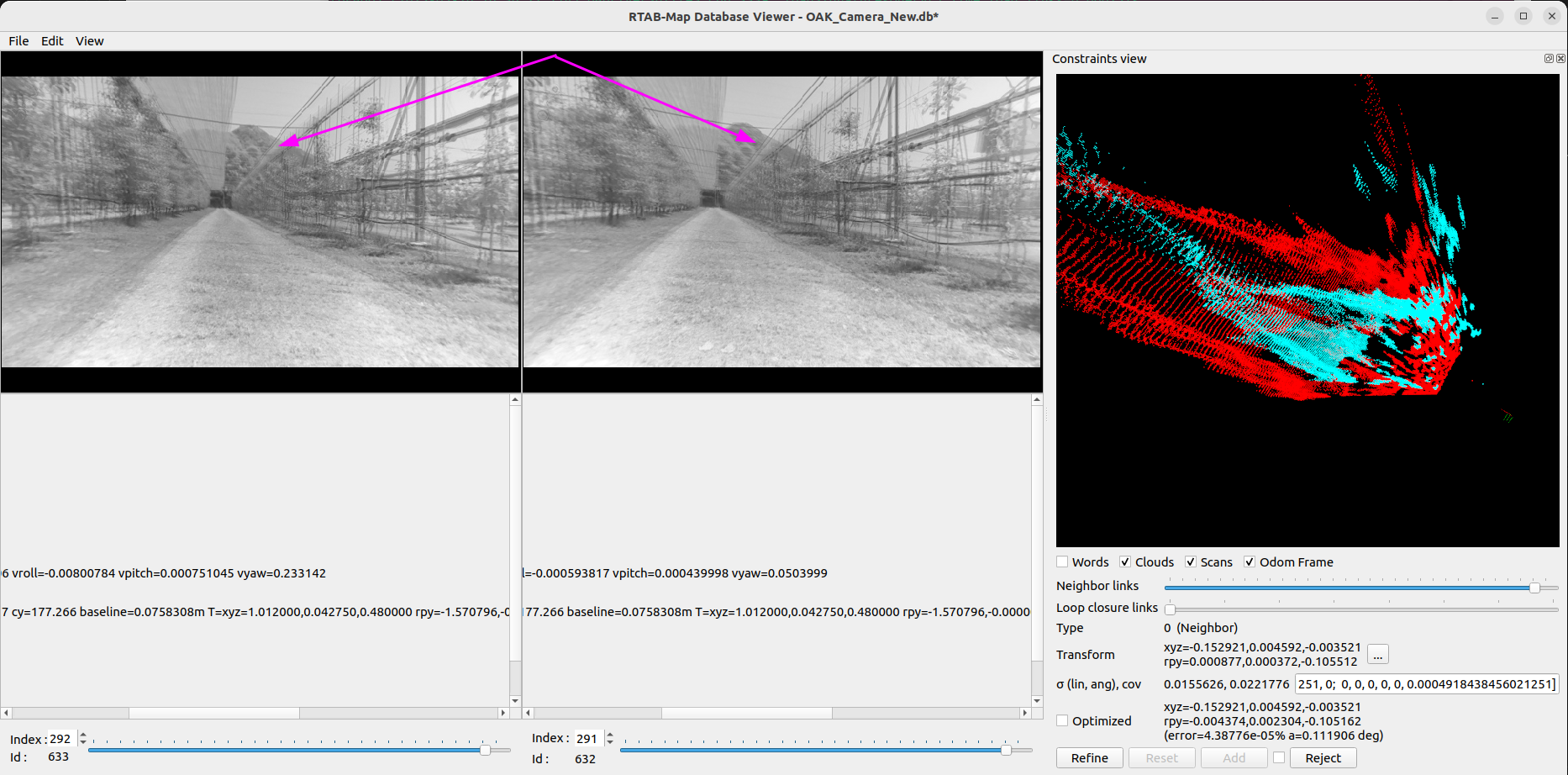

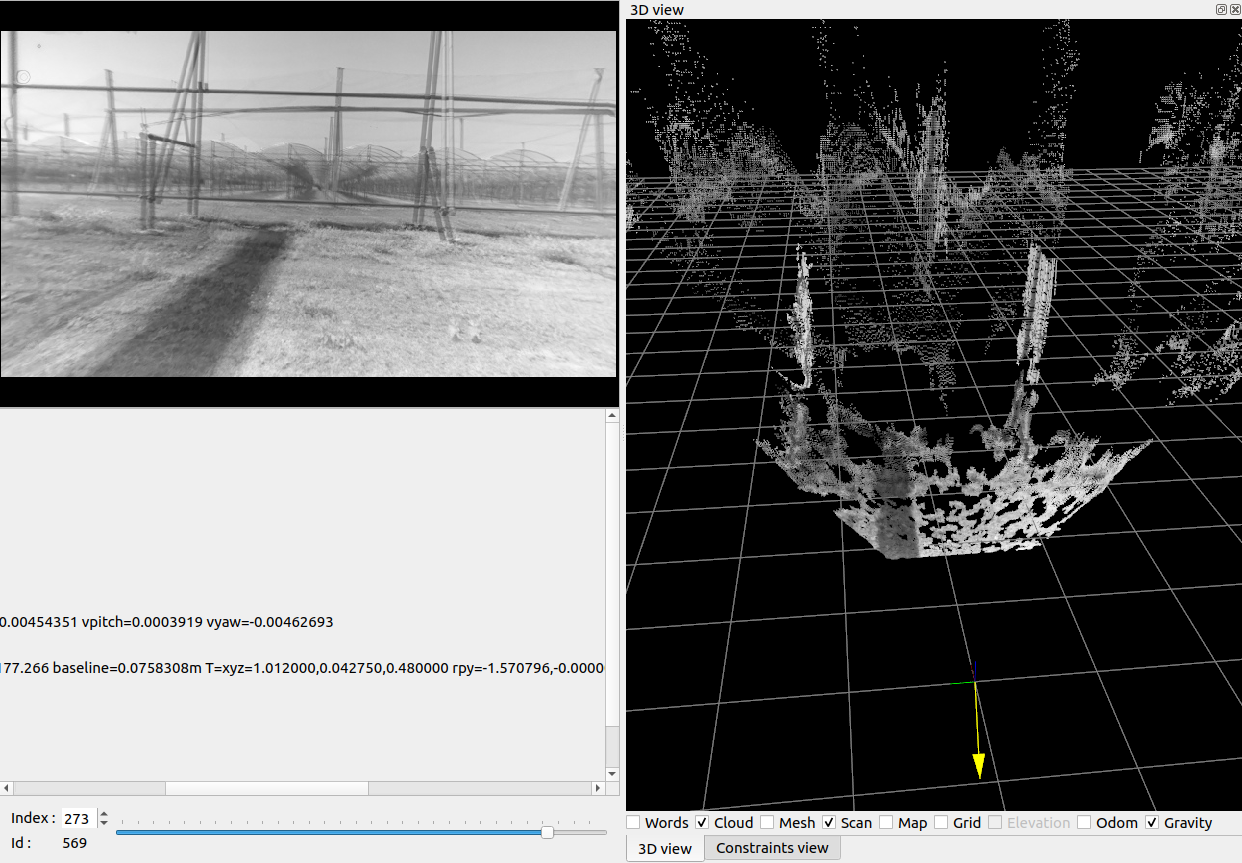

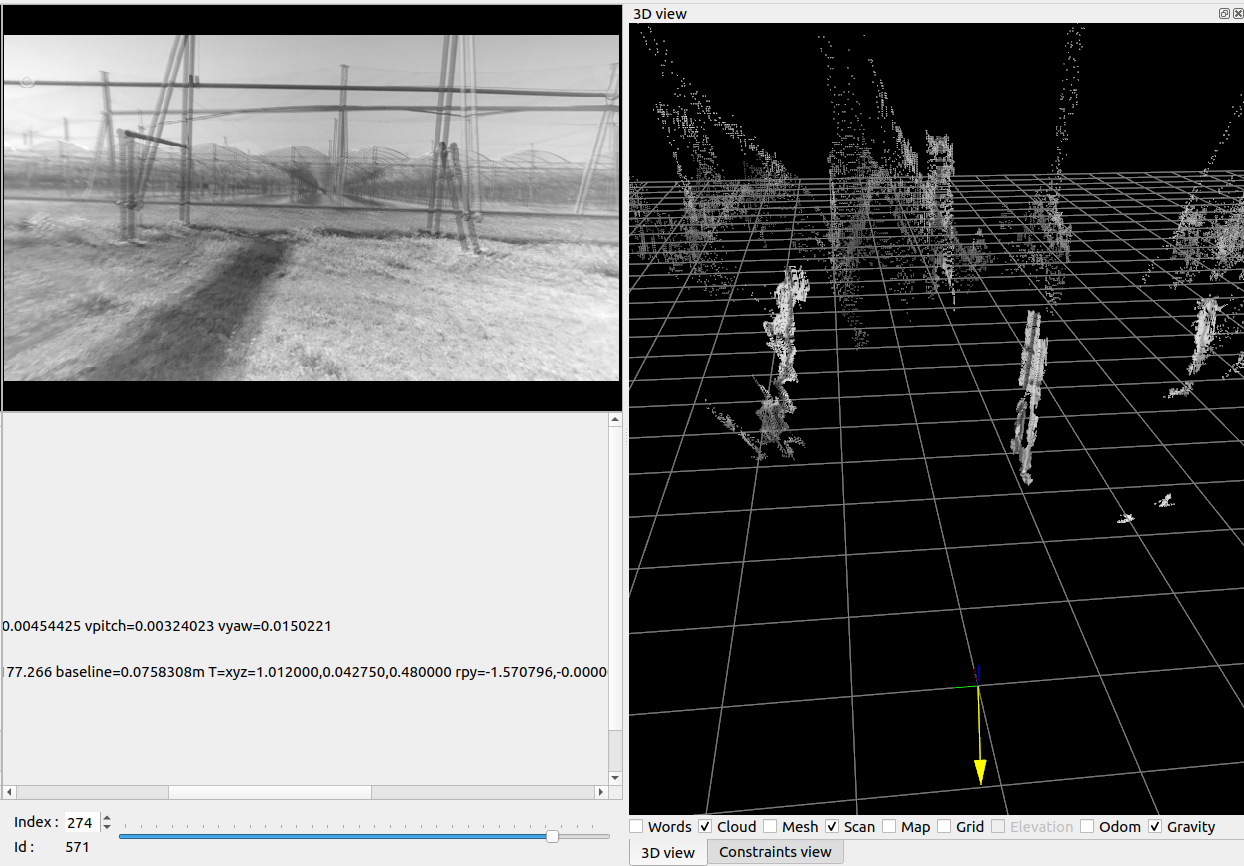

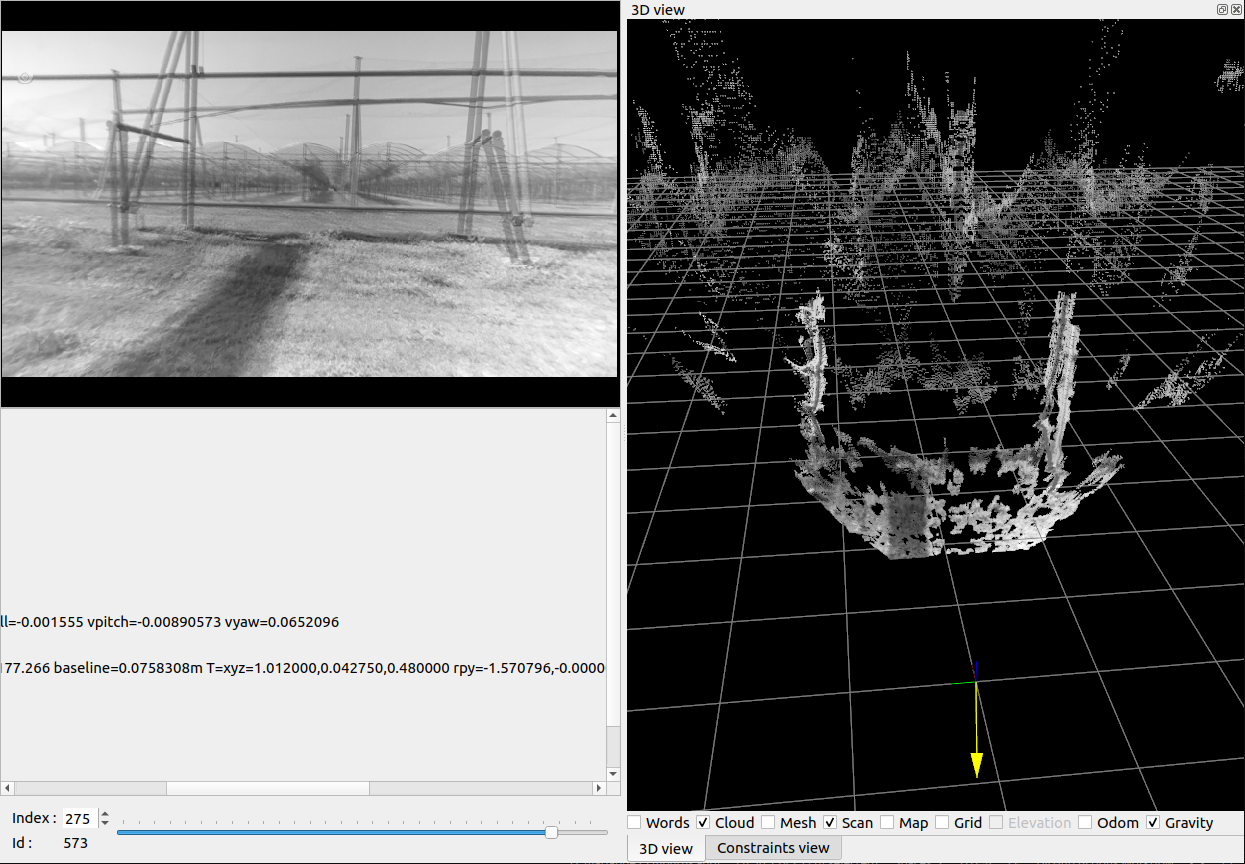

From your video, I see a small vertical shift here:  I looked into OAK database, and I can still see some time sync issues. Even if the driver is reporting same stamp, it seems the cameras don't actually shut exactly at the same time. If you record left and right images (in a rosbag) at the same time while doing very fast rotation, it may be more obvious. Here is an example of two consecutive images, in the Constraints View, the point cloud scale appears different:  Here another example of 3 consecutives frames, where the second one no disparity could be computed on the ground. We can see in the stereo image that the horizontal shift doesn't make sense accordingly to the first and third frames (look at the poles, the disparity looks smaller on left side but higher on right side of the image on the second one):    For the realsense database, I don't see any stereo sync issue. The trajectory looks like expected. The image resolution is quite low (~120p), a slightly higher resolution (~480p) could help to get more loop closures. Using SIFT can also help (if your opencv is built with it). rtabmap-reprocess --Mem/UseOdomFeatures false --Kp/DetectorStrategy 1 realsense.db output.db It seems you didn't feed IMU from the camera, it could help to keep the map aligned with gravity. For VIO integration, you would need to experiment with some approaches that you can find. To integrate with rtabmap, if they provide an odometry topic, then it is kinda plug-and-play to feed that topic to rtabmap node. For SuperPoint, I recently updated my computer with latest pytorch and it worked. Only thing is to make sure to re-create the superpoint.pt file from your current pytorch version, using this tracing script:

import torch

import torchvision

from demo_superpoint import SuperPointNet

model = SuperPointNet()

model.load_state_dict(torch.load("superpoint_v1.pth"))

model.eval()

example = torch.rand(1, 1, 640, 480)

traced_script_module = torch.jit.trace(model, example)

traced_script_module.save("superpoint_v1.pt")

cheers, Mathieu |

|

Hello Sir,

I am sorry for the delayed response, as i am working on it. I have successfully implemented SuperPoint and built RTABMap with CUDA=ON to shift feature detection to the GPU using your solution from issue #1330. I plan to test SuperPoint's performance as well. Could you please advise which processing metrics I should monitor in the RTABMap GUI to verify that my system is now accelerating RTABMap performance? I want to clearly observe the performance difference between algorithms running on CPU versus GPU. Actually, I've noticed that when my map becomes longer, the RTABMap GUI doesn't update images as frequently. So, I am going to shift some algorithm's on GPU. Thank you for your assistance. Best regards |

|

Administrator

|

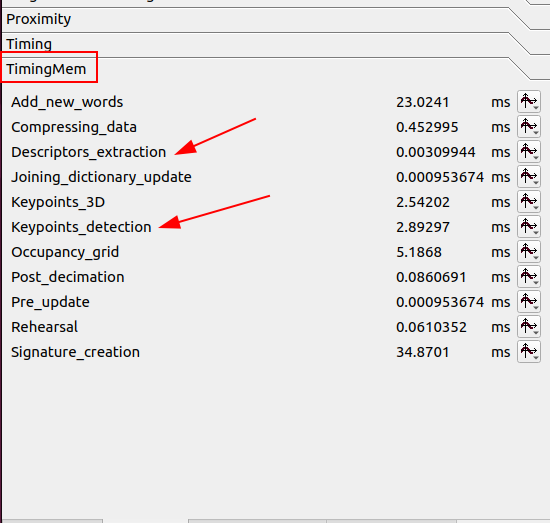

In terms of timing performance, you can open Statistics panel in rtabmap_viz and open TimingMem tab. For feature detectors, look at statistics like "Keypoints_detection" and "Descriptors_extraction". If you feed odometry info topic to rtabmap_viz (subscribe_odom_info:=true), there will be also an Odometry tab that will show up with some statistics. If you change also features for visual odometry, this is where you would see the differences.

|

«

Return to Official RTAB-Map Forum

|

1 view|%1 views

| Free forum by Nabble | Edit this page |