Help with optimized Tango settings?


Hi everyone

I'm using the Asus Zenfone AR (Tango) with the RTAB-Map app, and I'm running my tests in a small room. There are a lot of items in the room, which should be ideal for the RTAB-Map approach, but I'm having trouble with the accuracy of the vertical planar surfaces (the walls). I suspect the loop closures or something in that direction, since the walls seem to get a little skewed, which I think is where the error comes from.

I've tried multiple optimizations with post-processing and several runs of the .db in the standalone desktop app afterwards, but it seems to me that the initial scan just might not be good enough. There is also some "ghosting", with multiple layers of wall offset from each other, although it differs a little depending on the settings I use on the desktop. There seems to be a small drift or rotation on the walls in the back and in the bottom corner, which means the error at this point is between 35 and 60 mm. I need the error to be at most 20 mm, which I know the sensors can handle.

I've tried SIFT, SURF and GFTT-BRIEF, and I've tried bumping the visual features to 400 and 1000. Any thoughts on optimal settings for the Tango device in this kind of environment, or is the initial scan simply not good enough?

I've tried another app called AR Planar, which seems to get it right with a precision of 5 mm. That app seems to recognise wall sections that roughly align and then merges them when they are scanned over multiple times. Is the proximity setting doing the same thing in RTAB-Map?

Any help or suggestions are appreciated. I'm crazy about the potential of this program for me, if I can just get the accuracy a little better :-)

Here is a link to the database and to screenshots of some of the settings I've tried:
https://www.dropbox.com/sh/022jhjfqwxetk80/AAAAIqRzn6cOHThnGClHS9Sda?dl=0

On a side note, there's an option in the Tango app to use the fish-eye lens, but that doesn't seem to work on my phone. Is this only for the Phab, or what is it for?
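For anyone wanting to put a number on the "ghosting" described above, here is a minimal sketch and not anything RTAB-Map provides. It assumes you have cropped the points of one wall out of an exported cloud and kept only their horizontal coordinates in metres. The function name `wall_thickness_mm` and the input format are hypothetical. It fits a straight line to the wall as seen from above and reports how far the points sit from that line.

```python
import math

def wall_thickness_mm(points_xy_m):
    """Fit a line to top-down wall points (metres); return (rms, spread) in mm.

    Assumption: the wall is vertical, so only horizontal coordinates matter.
    """
    n = len(points_xy_m)
    cx = sum(p[0] for p in points_xy_m) / n
    cy = sum(p[1] for p in points_xy_m) / n
    sxx = sum((p[0] - cx) ** 2 for p in points_xy_m)
    syy = sum((p[1] - cy) ** 2 for p in points_xy_m)
    sxy = sum((p[0] - cx) * (p[1] - cy) for p in points_xy_m)
    # Direction of largest variance = direction the wall runs in.
    theta = 0.5 * math.atan2(2.0 * sxy, sxx - syy)
    # Unit normal to the fitted wall line.
    nx, ny = -math.sin(theta), math.cos(theta)
    # Signed distance of every point from the fitted line, in millimetres.
    d = [((p[0] - cx) * nx + (p[1] - cy) * ny) * 1000.0 for p in points_xy_m]
    rms = math.sqrt(sum(v * v for v in d) / n)
    spread = max(d) - min(d)
    return rms, spread

# Synthetic "double wall": two parallel layers 40 mm apart.
layers = [(i / 10.0, 0.00) for i in range(11)] + [(i / 10.0, 0.04) for i in range(11)]
rms, spread = wall_thickness_mm(layers)
print(f"rms={rms:.1f} mm, spread={spread:.1f} mm")
```

On the synthetic double wall the spread comes out at about 40 mm, which is the kind of figure to compare against the 20 mm target.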

Re: Help with optimized Tango settings?


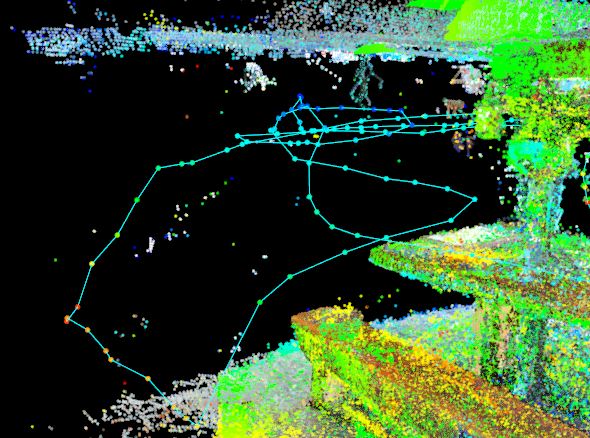

I opened the db in the editor to look at the path...

The resulting odometry is all over the place and not particularly smooth looking. Even for handheld scanning you want a smoother path. My sense is that your panning motion (up -> down -> rotate) makes it really hard for VIO to stay accurate (you're really stressing the inertial integration). If you move with fewer purely rotational movements and fewer directional inversions, you may find that not only does the pose drift less, but the loop closures also tend to be a bit more reliable. In short: a bit more flying style, less gimbal. Try moving in a pattern more like this:

1) Fly in
2) Circularly pan with some "conical" tilting
3) Fly out
4) Pick a new path for the next step 1

You have to make sure you don't end up "in" featureless areas, which can be a real challenge in tight spaces, but if you use this pattern you can create lots of short loops. Loop closure detection works very well when you reproduce an already observed *trajectory* on a subject with good and unique detail (features), not just the same subject in the frame. You want to reproduce the same path to a (fixed) subject to maximize the ability to close loops.

Hope that helps.

AR Planar is doing a real-time reconstruction from a voxel model, which RTAB-Map is not. Each has its strengths: loop closure is RTAB-Map's strength; meshing and planar simplification aren't.
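To make "fewer directional inversions" a bit more concrete, here is a rough sketch that counts how often the turning direction flips along a path. It assumes a hypothetical list of `(x, y, yaw)` poses in metres and radians, which is a simplification and not the format RTAB-Map stores. It only looks at yaw, although the same counting could be applied to pitch for the up/down panning.

```python
import math

def wrap(angle):
    """Wrap an angle difference into [-pi, pi)."""
    return (angle + math.pi) % (2.0 * math.pi) - math.pi

def motion_summary(poses, min_turn=math.radians(1.0)):
    """Summarise a path given as (x, y, yaw) tuples.

    Returns path length (m), total yaw rotation (deg) and the number of
    times the turning direction reverses. Turns below min_turn are
    ignored when counting reversals so sensor jitter is not counted.
    """
    length = 0.0
    rotation = 0.0
    reversals = 0
    last_sign = 0
    for (x0, y0, a0), (x1, y1, a1) in zip(poses, poses[1:]):
        length += math.hypot(x1 - x0, y1 - y0)
        turn = wrap(a1 - a0)
        rotation += abs(turn)
        if abs(turn) >= min_turn:
            sign = 1 if turn > 0 else -1
            if last_sign != 0 and sign != last_sign:
                reversals += 1
            last_sign = sign
    return {
        "length_m": length,
        "rotation_deg": math.degrees(rotation),
        "reversals": reversals,
    }

# "Flying" style: steady translation with a gentle, one-directional turn.
flying = [(0, 0, 0.0), (1, 0, 0.1), (2, 0, 0.2), (3, 0, 0.3)]
# "Gimbal" style: standing still and sweeping back and forth.
gimbal = [(0, 0, 0.0), (0, 0, 0.5), (0, 0, 0.0), (0, 0, 0.5)]
print(motion_summary(flying))
print(motion_summary(gimbal))
```

A path with many reversals and a lot of rotation per metre travelled is the "gimbal" style described above; the "flying" style keeps both low.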


Administrator


In reply to this post by nufanDK2

Hi,

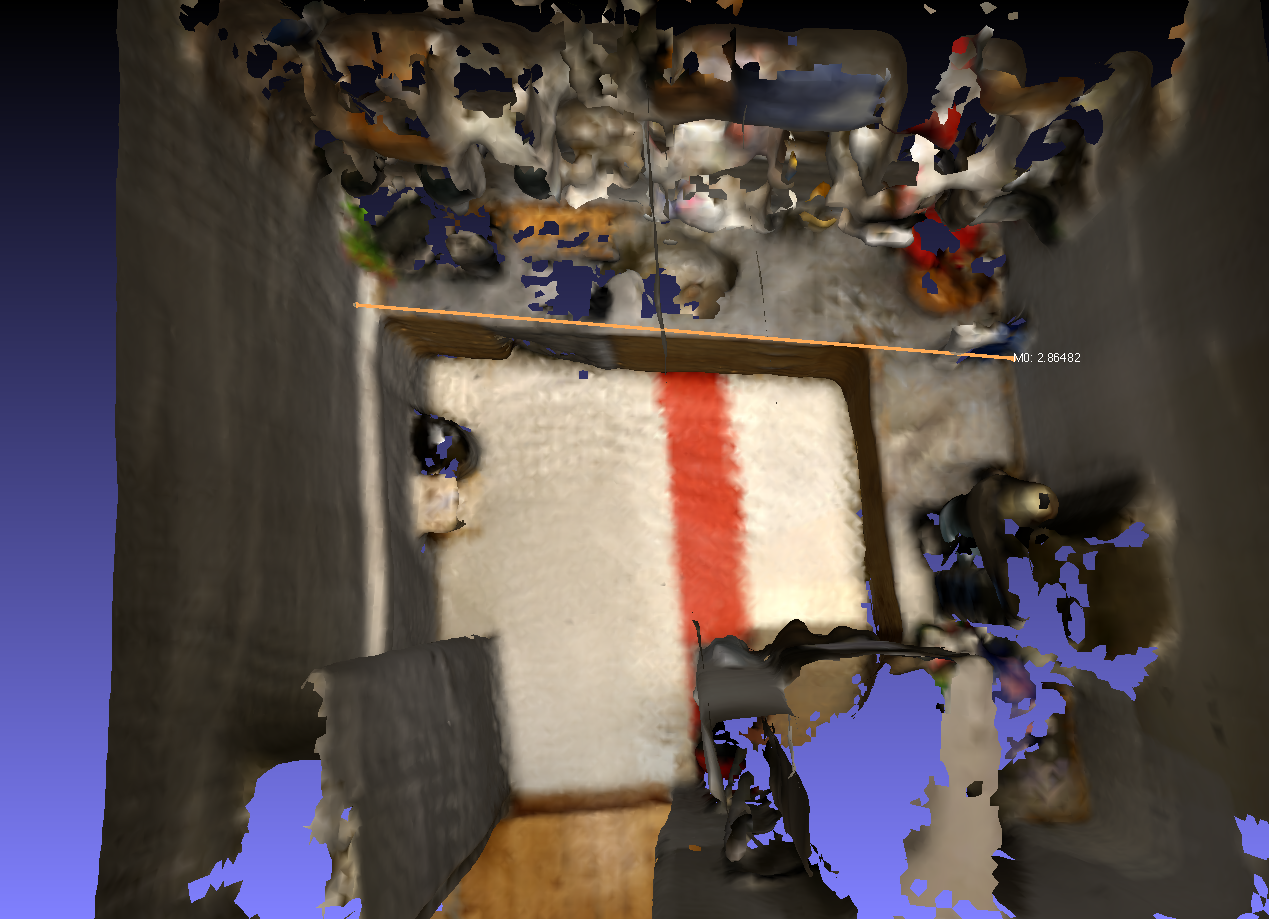

The fish-eye mode works only on the Phab2Pro. Note that the underlying motion estimation still uses the fish-eye camera on all Tango platforms.

I have difficulty seeing what exactly you are measuring. I opened your database in RTAB-Map, exported the cloud with meshing (Poisson depth 8), opened it in MeshLab and took a measurement, followed by another measuring trial (it is kind of difficult in MeshLab to measure without being able to constrain to 2 axes).

When meshing, the algorithm tries to find a surface approximating the point cloud, which removes closely spaced layers of points (the double-wall effect). On the phone, if you choose Export->Optimized mesh->Colored mesh, you should get a similar mesh.

I don't see gross mapping errors in this scan (unless the room should be perfectly rectangular), but following Eric's recommendations may reduce the drift of Tango a little more.

cheers,
Mathieu
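On the difficulty of measuring without constraining to 2 axes: if you note down the coordinates of two picked points, the constrained distance can be computed by hand. A minimal sketch, assuming coordinates in metres and that axes 0 and 1 are the horizontal ones (check which axis is "up" in your export; the function name is made up for illustration):

```python
import math

def constrained_distance_mm(p, q, axes=(0, 1)):
    """Distance between two points using only the given axes, in mm.

    Assumption: coordinates are in metres. The default axes=(0, 1)
    ignores the third axis, treated here as the vertical one.
    """
    return 1000.0 * math.sqrt(sum((p[i] - q[i]) ** 2 for i in axes))

# Two picked points on opposite walls at different heights.
a = (0.0, 0.0, 0.2)
b = (3.0, 4.0, 1.2)
print(constrained_distance_mm(a, b))                  # horizontal only
print(constrained_distance_mm(a, b, axes=(0, 1, 2)))  # full 3D
```

The horizontal-only value is the one to compare against a tape measure across the room, since a height difference between the two picked points inflates the full 3D distance.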


In reply to this post by nufanDK2

Thank you both so much for your answers!

I feel a little silly for asking the question after seeing the measurements taken by matlabbe. I hope I haven't wasted your time. I'm very grateful for the info provided, though. It has taught me a lot.

It's true that the trajectory is all over the place. I once saw an article on photogrammetry (or was it 3D scanning?) that gave some pointers: the optimal workflow was to stand with your back to a wall and scan the opposing wall, preferably perpendicular to the scanned area. In a faulty effort to achieve this, along with trying to get a lot of loop closures, I can see that I clearly overcomplicated my scan.

Thank you again for the suggestions, Eric. That method should also reduce the time needed for the scan. And thank you, matlabbe, for the suggestion on the mesh. It actually fixes the problem I've been having with the point cloud boundaries having thickness. Excellent! :-)
