High accuracy, small space mapping

|

Hello Mathieu,

I am working on a project where I want to create a highly accurate ( 5-10cm graph error ) map of a small ( 20x20m ) grass ( or dirt ) field, a sample picture of which is shown below.

The traversal path I have chosen is the boustrophedon path, with a step of about 1m between the long parallel lines, to cover the whole field. I am using a small robot with a mounted ZED stereo camera on top, and I am navigating it with teleop. The only other sensor on this robot is a small IMU I can use for localization sensor fusion. The obvious observations from the picture provided, are that the only available features are patterns on the grass and dirt, so the camera has to be tilted to face the ground as much as possible? What algorithms/configurations would you suggest regarding stereo visual odometry, in order to optimize accuracy? (image feed resolution, feature type, optical flow vs block matching etc). Is the accuracy of 5-10cm error even feasible with this setup on this type of environment? Thank you, Chris |

|

Administrator

|

Hi Chris,

5-10 cm is possible if there are loop closures in the path. Boustrophedon may not be the best path for loop closures. In your example, there would be no loop closures, so the whole trajectory is dependent on odometry drift. At least if the robot is teleoperated back to initial position to find a loop closure, it will correct most odometry drift. See this video for example, note the error when the robot comes back to base. Using 720p images would be enough, 1080p takes a lot more memory. As you are using the ZED, you may use the depth image computed already by the SDK. In outdoor environment like that, the depth image would be quite already good (so you can use rgbd_odometry). With 720p images, you may increase GFTT/MinDistance to 7 or more to get uniformly distributed features. For the camera orientation, it depends at which height it is mounted. The rule of thumb is to have half of the image with objects/terrain under baseline*100 meters (e.g., for a baseline of 12 cm, half of the image should be under 12 meters). If the camera height is close to ground, having the camera looking straight forward would be ok. If the camera is 1/1.5 meters over the ground, a little tilt toward the ground may be required. Note that far features are useful too to get good orientation estimation. Close features are needed to correctly estimate the scale of the environment. cheers, Mathieu |

|

Hello Mathieu,

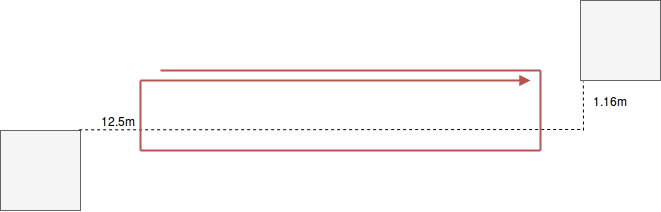

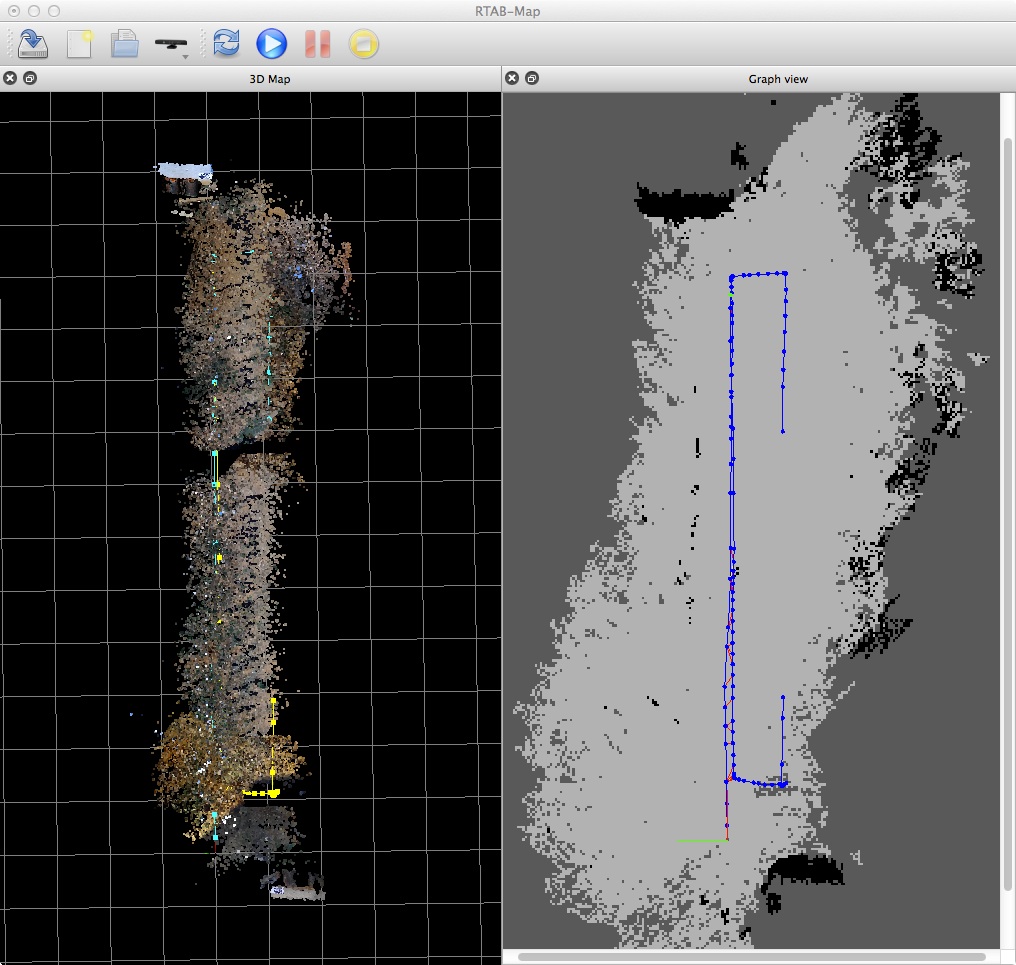

This is probably a more general question, but since it came up in the tests performed in the environment described above, I am going to ask you here. The generated map has a considerable error in scale and orientation. A rough schematic of the area covered ( trajectory is shown with the red arrow and the distances of the two chimneys with the dashed line ) is here:  The area is considered almost completely flat (although i don't want to force 3Dof, because I want to be able to work with non flat areas). The database of the run is here. I have tested RTAB-Map in feature rich, indoor environments and it has worked increadibly well. In this environment the scaling as well as the orientation is quite off. I was wondering what the possible reasons for this might be and I came up with the following: 1. Bad extrinsics calibration of the camera ( is there a way in RTAB-Map I can evaluate this?). I use my phone to measure the angles at which the camera is placed on the robot, so I do not have perfect accuracy. 2. Often registration failures. I keep getting the message [ WARN] (2018-01-23 11:30:07.144) OdometryF2M.cpp:386::computeTransform() Registration failed: "Not enough inliers 0/20 (matches=226) between -1 and 220" [ WARN] [1516699807.145082160, 1516360146.712630571]: Odometry lost! Odometry will be reset after next 5 consecutive unsuccessful odometry updates... which sometimes leads to odometry reset. I am wondering if I can examine every one of those failures in rtabmap-databaseViewer ( like I can examine a frame by frame disparity inliers count, for example) so I can tune the parameters of the matching algorithm for the particular environment. 3. Not many distant features in sight of the camera for good orientation estimation ( as you mentioned earlier). Based on your experience, is the camera in a good angle? Any input on this is highly appreciated, Chris |

|

Administrator

|

Hi,

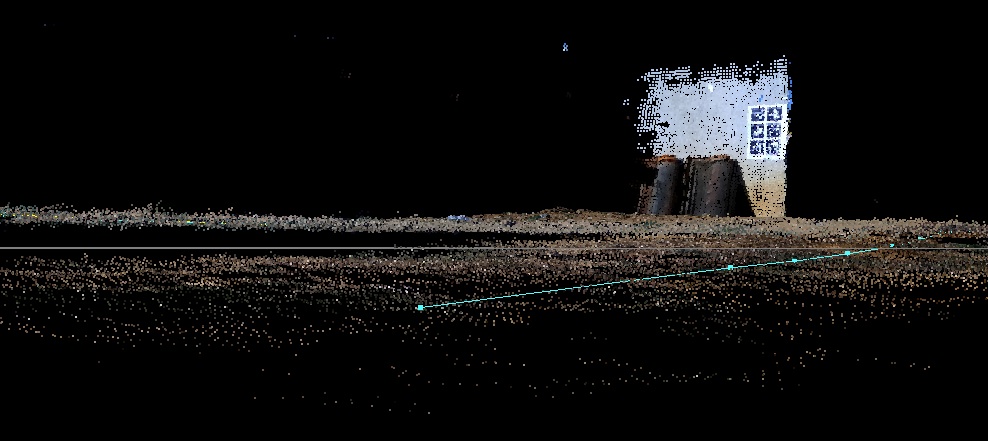

Looking at the database, you may want to set Odom/ResetCountdown=0 to avoid resetting odometry and creating new sessions (having only one session helps to get better correction on loop closures). The problem is to set parameters to avoid losing odometry tracking. SURF is set as visual feature, and looking at the total processing time per frame, I suggest to use another feature that is faster to compute. For example, Vis/FeatureType=6 with GFTT/MinDistance=7. What is the odometry time shown in ros info on terminal? For loop closure detection, you may don't need as much features as for visual registration, you can set Kp/MaxFeatures=400/500 for example. I see also that generated dense disparity images have depth set for non valid disparity (far pixels without disparity), set StereoBM/MinDisparity=0. For the scale, I didn't measure it on a 3D application, but from the 1 meter grid in rtabmap, it seems close to your actual measurements. Maybe 13 instead of 12.5. This is mainly caused by calibration (baseline may be too large).  For orientation, are you refering to pitch error like in this image (closest blue path is like under the ground)?  It is more related to odometry accuracy. If rtabmap is built with g2o, you could try OdomF2M/BundleAdjustment=1 with Odom/GuessMotion=true and OdomF2M/MaxSize=2000/3000. cheers, Mathieu |

«

Return to Official RTAB-Map Forum

|

1 view|%1 views

| Free forum by Nabble | Edit this page |