How to calibrate when using CameraRGBDImages

|

Hello,

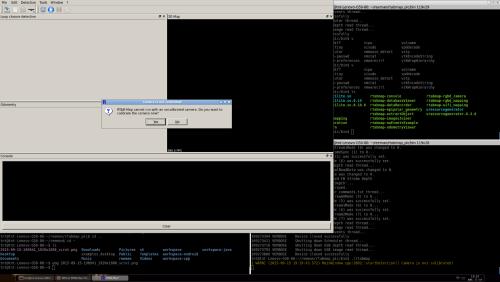

When I try to read sensor data which combine RGB image and depth image, It encounter errors. I intend to generate sensor data from CameraRGBDImages. In this case, Any way Can I calibrate the camera firstly? one more question: what is the limitation to RGB image and depth image? It should be png format not jpg format? Thanks in advance. code block: camera = new rtabmap::CameraRGBDImages("rgb_png","depth_png"); if(camera->init()) { if(camera->isCalibrated()) { rtabmap::CameraThread cameraThread(camera); odomThread.start(); cameraThread.start(); odomViewer.exec(); cameraThread.join(true); odomThread.join(true); } else { printf("The camera is not calibrated! You should calibrate the camera first.\n"); delete camera; } } else { printf("Failed to initialize the camera! Please select another driver (see \"--help\").\n"); delete camera; }

|

|

Administrator

|

Hi,

In the camera->init(), you can set the path to a calibration file (the format is calibration folder and calibration name). For example, if you have this a file named "kinect.yaml" in the same directory as your executable:

if(camera->init(".", "kinect"))

...

The camera.yaml file is calibration file. For a simple example, for a Kinect camera (focal fx/fy~=525, cx=319.5, cy=239.5), this would look like this:

%YAML:1.0

camera_name: kinect

image_width: 640

image_height: 480

camera_matrix:

rows: 3

cols: 3

data: [ 525., 0., 3.1950000000000000e+02, 0., 525.,

2.3950000000000000e+02, 0., 0., 1. ]

distortion_coefficients:

rows: 1

cols: 5

data: [ 0., 0., 0., 0., 0. ]

distortion_model: plumb_bob

rectification_matrix:

rows: 3

cols: 3

data: [ 1., 0., 0., 0., 1., 0., 0., 0., 1. ]

projection_matrix:

rows: 3

cols: 4

data: [ 525., 0., 3.1950000000000000e+02, 0., 0., 525.,

2.3950000000000000e+02, 0., 0., 0., 1., 0. ]

Note that this file can be generated from Preferences->Source->"Simple calibration" button. CameraRGBDImages assumes also that the RGB and depth images are already rectified and registered together (it ignores distortion/rectification coefficients). The RGB image can be JPG, BMP or PNG (common image formats). The depth image should be compressed (or not) with lossless format (PNG) encoded unsigned 16 bits mono (values are in mm). cheers, Mathieu |

«

Return to Official RTAB-Map Forum

|

1 view|%1 views

| Free forum by Nabble | Edit this page |