Improving Mapping Accuracy in RTAB-Map – Seeking Advice and Assistance

|

This post was updated on .

Hello everyone,

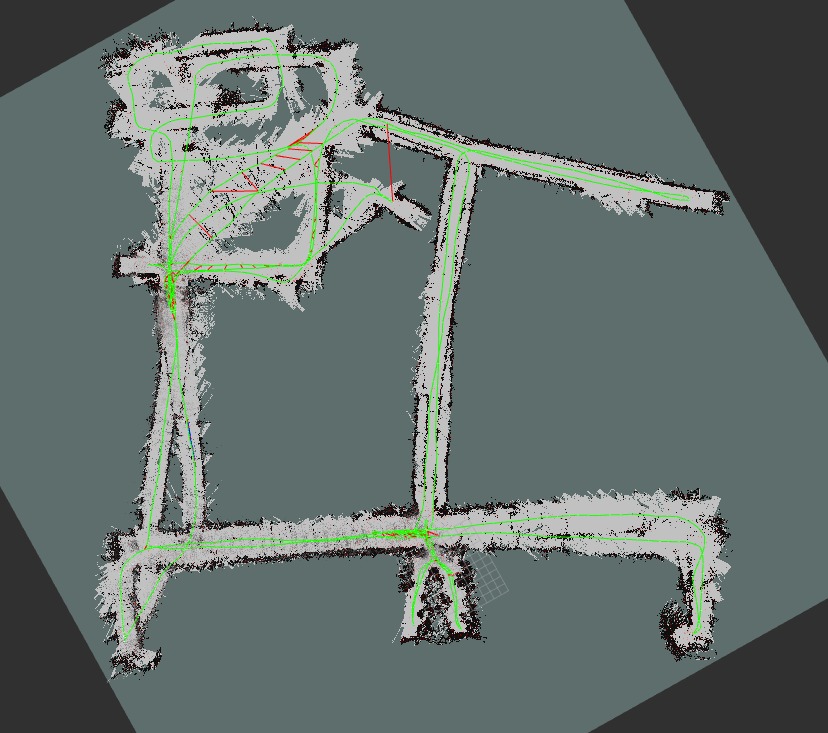

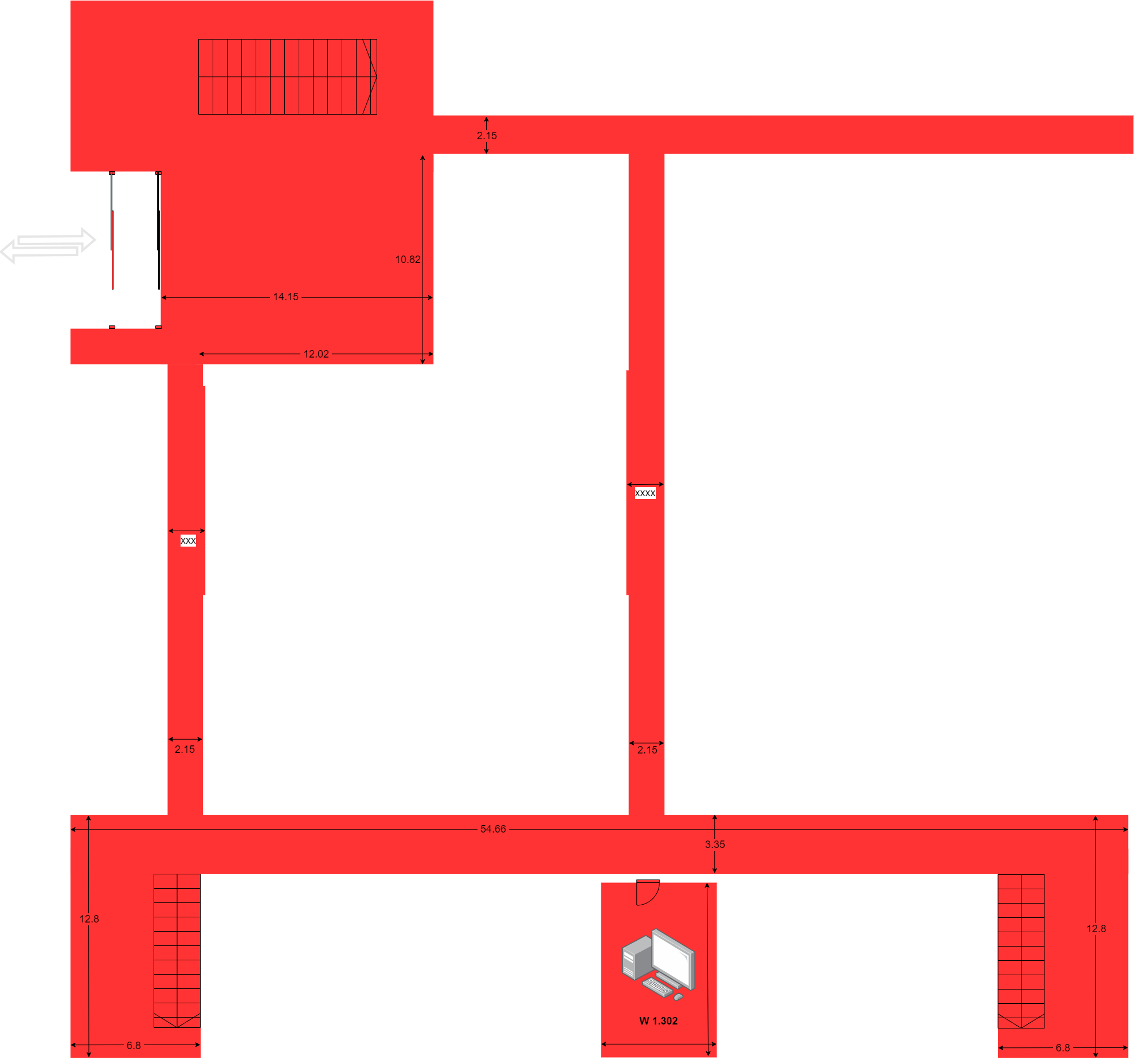

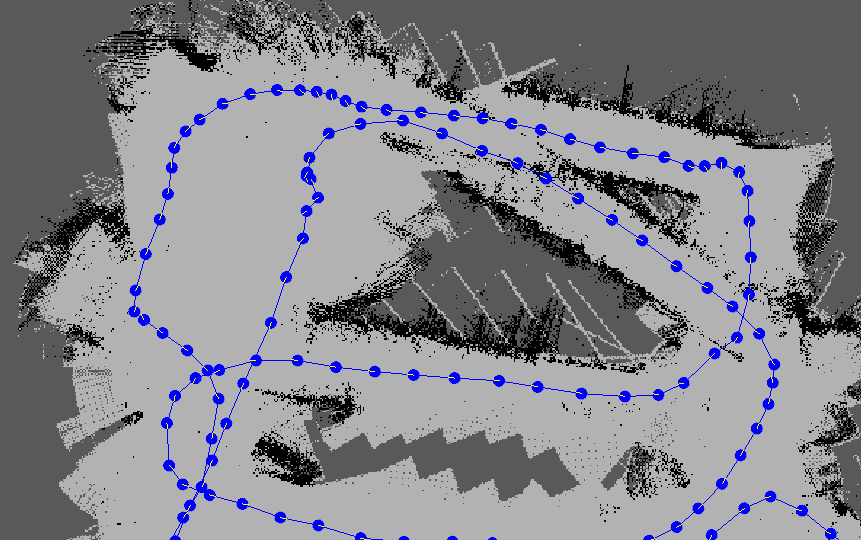

I'm currently working with RTAB-Map in ROS2 and facing issues with the accuracy of my generated map. My goal is to create a reliable map that allows localization in both directions of a building. My Setup & Issue: I recorded a long ROS bag in both directions of the building to ensure localization works from different approaches. However, I am experiencing significant overlapping issues and poor alignment, leading to low mapping accuracy. The generated map does not match the real structure well, making localization unreliable. Unfortunately, my current mapping result (see attached image) is unsatisfactory, as it does not accurately reflect the building’s structure. What I Need Help With: Configuration Advice: If anyone has experience with RTAB-Map tuning, I would appreciate any suggestions on how to improve the map accuracy (e.g., parameter tuning, filtering, post-processing). Mapping Assistance: If someone is willing to try and generate a better map using my recorded ROS bag data, I would be extremely grateful. I have attached an approximate floor plan of the building to give a rough idea of what the map should look like. For reference, my ROS bag file is available for download at the following link: https://drive.google.com/drive/folders/1O-AxZq4SOhJGS_qLU_mdi89gLUw1mzFN?usp=drive_link Thank you in advance for any help or insights! I truly appreciate any suggestions or assistance. Best regards   |

|

Administrator

|

Hi,

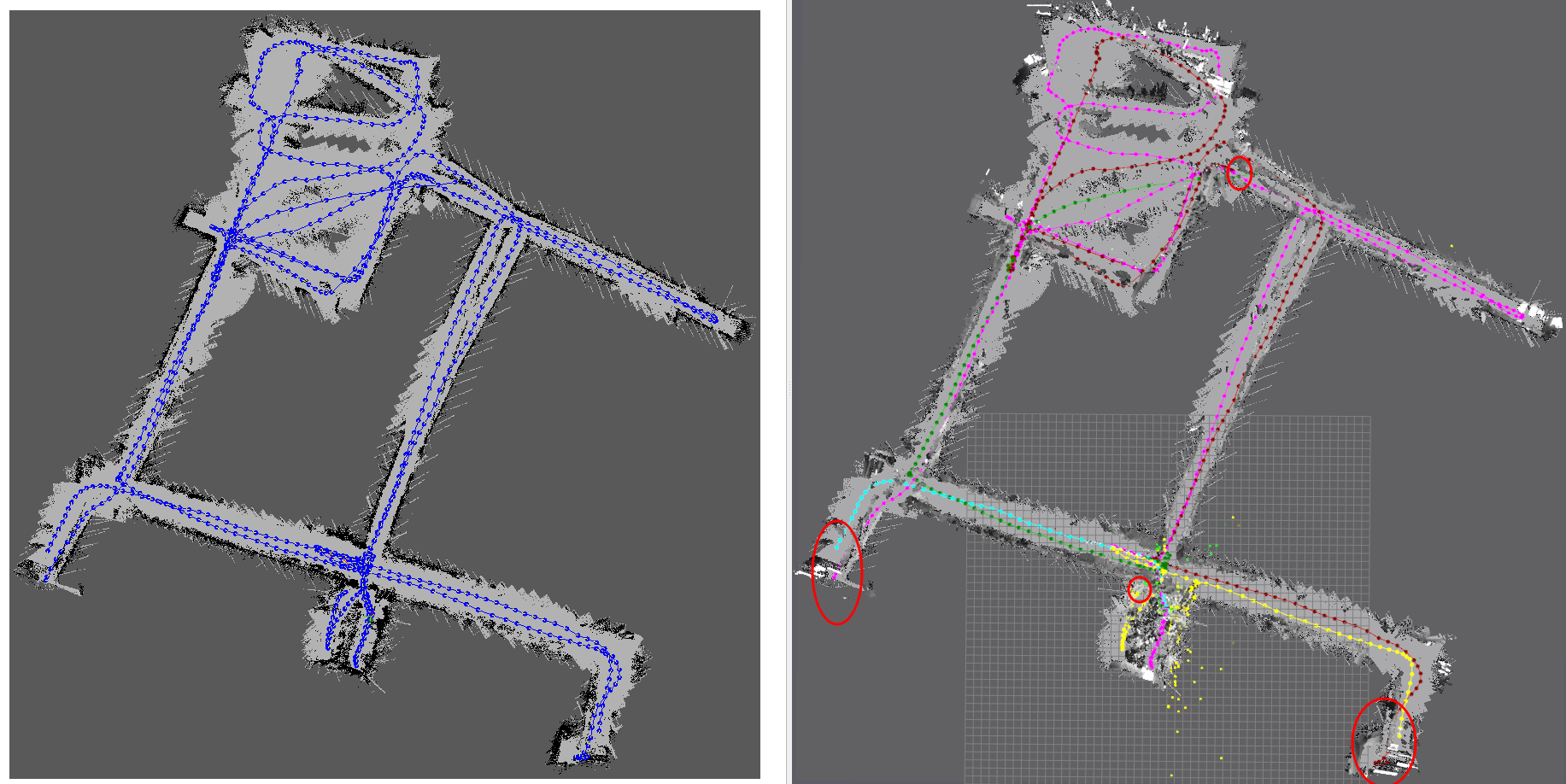

Here is a config I tried:

ros2 launch rtabmap_launch rtabmap.launch.py \

args:="-d \

--Reg/Force3DoF true \

--Grid/3D false \

--Grid/RayTracing true \

--Grid/MaxGroundHeight 0.1 \

--Grid/NormalsSegmentation false \

--Rtabmap/LoopThr 0.25 \

--Vis/MinInliers 30" \

odom_args:="\

--Vis/MinInliers 10 \

--Vis/CorGuessWinSize 40 \

--Odom/VisKeyFrameThr 30 \

--Odom/ResetCountdown 30 \

--Odom/GuessSmoothingDelay 0.5" \

rgb_topic:=/camera/right/image_rect \

camera_info_topic:=/camera/right/camera_info \

depth_topic:=/camera/stereo/image_raw \

frame_id:=base_link \

rgbd_sync:=true \

approx_rgbd_sync:=false

ros2 bag play THU_Map_W01_0.db3 --clock

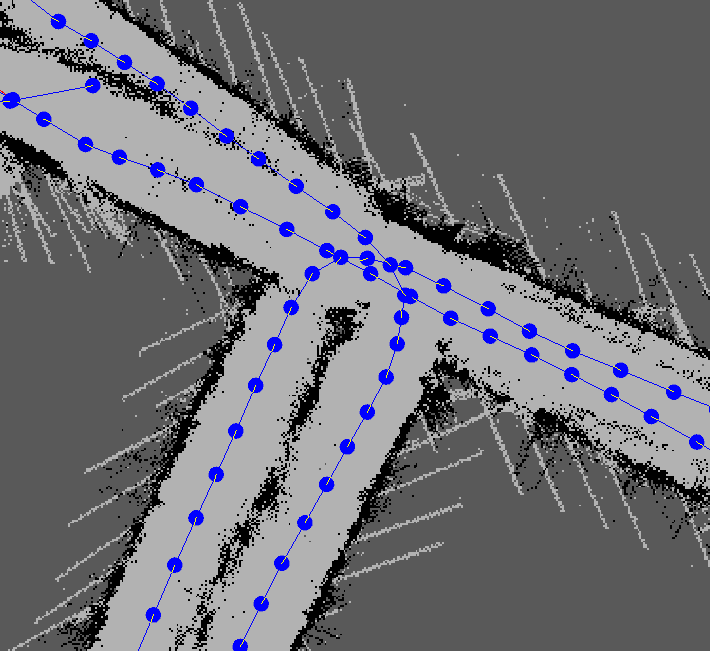

The red circles are where visual odometry got lost, because the images were pretty much featureless. The trajectory of the camera doesn't have many overlaps with same point of view, and sometime a whole path is taken in reverse direction so no loop closures are possible:   Here some ideas to improve the system: * Add a [hardware synchronized] back camera: this will improve a lot loop closure detection when moving in reverse direction in the corridors. This will also improve the lack of features with a single camera that makes visual odometry to get lost. Another benefit is that it implicitly increases the FOV, which would make VO a more accurate. * Integrate wheel odometry + IMU fusion to the system, and use it as guess for rtabmap's VO. If VO cannot be computed, rtabmap's VO can just re-forward the wheel odometry and/or reset using latest wheel odometry pose. * If upgrading hardware is not possible, integrate visual odometry or visual inertial odometry approaches that are more robust to textureless environments (i.e. low number of features to track). cheers, Mathieu |

|

Thank you Mathieu! I tested with the same settings, but unfortunately, I'm still getting unsatisfactory results (see image). Do you have any idea what could be causing this? How can I improve the result?

Best regards

|

|

Administrator

|

Your results are similar to what I could get. My suggestions on my previous post:

|

«

Return to Official RTAB-Map Forum

|

1 view|%1 views

| Free forum by Nabble | Edit this page |