Is there a RTABMap document?

|

Hi everyone,

I had compiled the RTABMap libraries and run the samples code such as RGBD Mapping and Loop-closure detection very well. Now I'm trying to use RTABMap library in my project, but I cannot find any document to teach me how to use the RTABMap APIs. I want to write a small program which can capture image by ASUS Xtion Pro Live and can dump the mapped point cloud or even the mesh. For example, how can I get the point cloud data in the program in the RGBD-Mapping example? Is there anyone know how to use them? Thanks. |

|

Administrator

|

Hi,

Only documentation about using RTAB-Map's C++ API is in these examples: * RGB-D mapping: https://github.com/introlab/rtabmap/wiki/Cplusplus-RGBD-Mapping * RGB-D mapping (no threads): https://github.com/introlab/rtabmap/wiki/Cplusplus-RGBD-Mapping#example-without-threads-and-events * Loop closure detection: https://github.com/introlab/rtabmap/wiki/Cplusplus-Loop-Closure-Detection I updated the C++ RGB-D Mapping example to include an example of generating the point cloud and saving it in PCD format: https://github.com/introlab/rtabmap/wiki/Cplusplus-RGBD-Mapping

std::map<int, Signature> nodes;

std::map<int, Transform> optimizedPoses;

std::multimap<int, Link> links;

rtabmap->get3DMap(nodes, optimizedPoses, links, true, true);

pcl::PointCloud<pcl::PointXYZRGB>::Ptr cloud(new pcl::PointCloud<pcl::PointXYZRGB>);

for(std::map<int, Transform>::iterator iter=optimizedPoses.begin(); iter!=optimizedPoses.end(); ++iter)

{

Signature node = nodes.find(iter->first)->second;

// uncompress data

node.sensorData().uncompressData();

pcl::PointCloud<pcl::PointXYZRGB>::Ptr tmp = util3d::cloudRGBFromSensorData(

node.sensorData(),

4, // image decimation before creating the clouds

4.0f, // maximum depth of the cloud

0.01f); // Voxel grid filtering

*cloud += *util3d::transformPointCloud(tmp, iter->second); // transform the point cloud to its pose

}

cloud = util3d::voxelize(cloud, 0.01f);

pcl::io::savePCDFile("cloud.pcd", *cloud);

//pcl::io::savePLYFile("cloud.ply", *cloud); // to save in PLY format

To generate a mesh from the cloud above, you can use directly the PCL or these convenient methods in rtabmap: util3d::computeNormals(), util3d::createMesh(). cheers |

|

Hi,

Thank you so much!! I'm going to study your new sample codes. |

|

In reply to this post by matlabbe

Hi,

I had successfully use the createMesh() function to save a mesh in ply format. I saw that there is a function named createTextureMesh(). Can you give me a example about how to use the function? Thank you. |

|

Administrator

|

Hi,

For util3d::createTextureMesh(), you will need the created mesh from util3d::createMesh(), the camera models and the corresponding images. Here is an example from the code above:

std::map<int, Signature> nodes;

std::map<int, Transform> optimizedPoses;

std::multimap<int, Link> links;

rtabmap->get3DMap(nodes, optimizedPoses, links, true, true);

pcl::PointCloud<pcl::PointXYZRGBNormal>::Ptr cloud(new pcl::PointCloud<pcl::PointXYZRGBNormal>);

std::map<int, CameraModel> cameraModels;

std::map<int, cv::Mat> images;

float voxel = 0.02f;

for(std::map<int, Transform>::iterator iter=optimizedPoses.begin(); iter!=optimizedPoses.end(); ++iter)

{

Signature node = nodes.find(iter->first)->second;

// uncompress data

node.sensorData().uncompressData();

pcl::PointCloud<pcl::PointXYZRGB>::Ptr tmp = util3d::cloudRGBFromSensorData(

node.sensorData(),

4, // image decimation before creating the clouds

3.0f, // maximum depth of the cloud

voxel); // Voxel grid filtering

pcl::PointCloud<pcl::PointXYZRGBNormal>::Ptr tmpWithNormals = util3d::computeNormals(tmp);

*cloud += *util3d::transformPointCloud(tmpWithNormals, iter->second); // transform the point cloud to its pose

cameraModels.insert(std::make_pair(iter->first, node.sensorData().cameraModels()[0]));

images.insert(std::make_pair(iter->first, node.sensorData().imageRaw()));

}

cloud = util3d::voxelize(cloud, voxel);

pcl::io::savePCDFile("cloud.pcd", *cloud);

pcl::PolygonMesh::Ptr mesh = util3d::createMesh(

cloud,

voxel*4.0f);

pcl::TextureMesh::Ptr textureMesh = util3d::createTextureMesh(

mesh,

optimizedPoses,

cameraModels,

images,

"."); // temporary directory for generated textures

pcl::io::saveOBJFile("mesh.obj", *textureMesh);

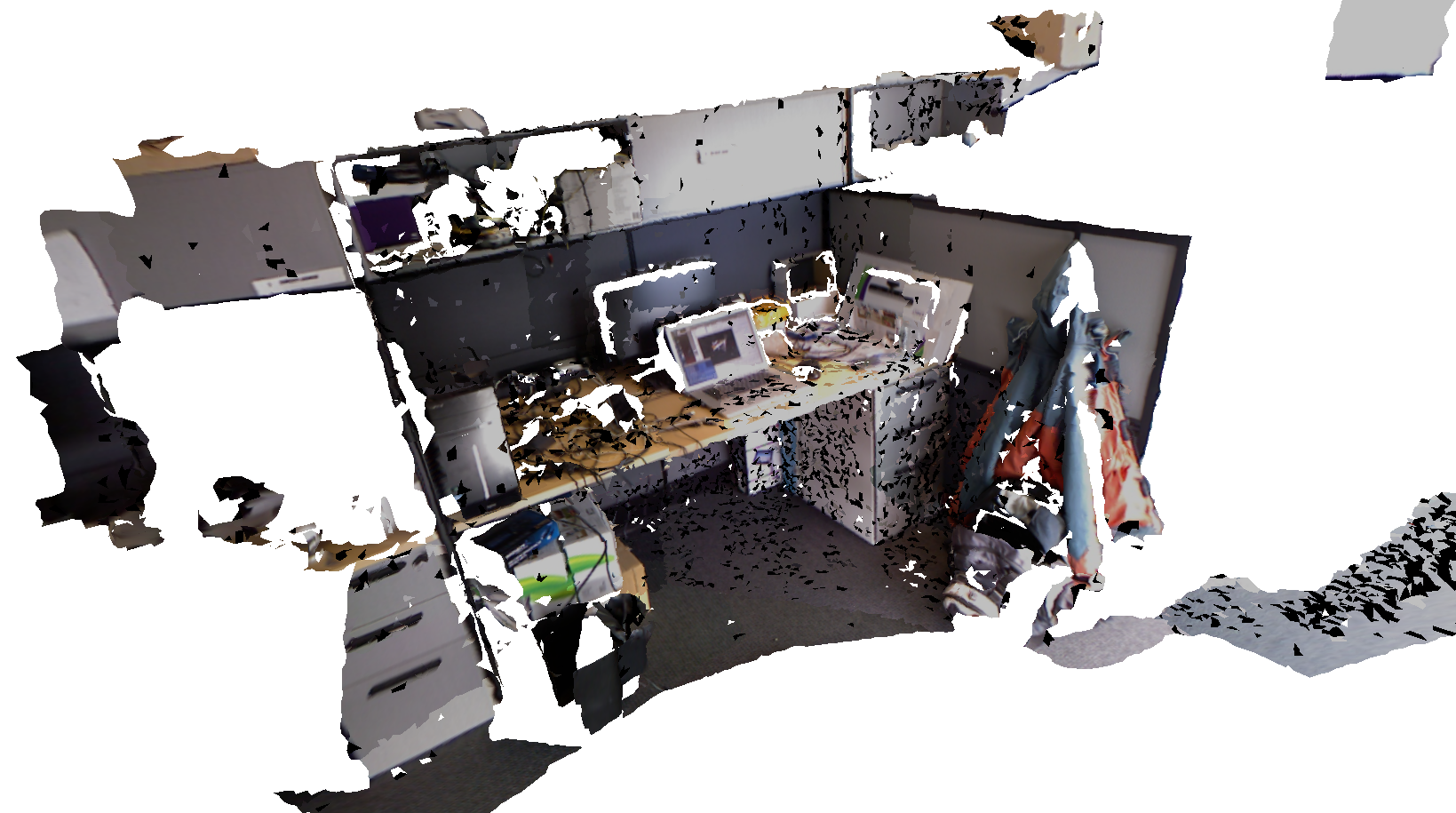

cloud.pcd:  mesh.obj:  cheers |

|

Thanks for your help.

I had succeed to save the texture mesh. But I still have some questions... 1. When I mapped large scene, the createMesh() will tell me the following message...  Although it always can save the mesh, I want to know what happened and how to deal with the warning. 2. The createTextureMesh() seems to be time-consuming. Does this function depends on the image resolution or something else? How can I speed up it? 3. How can I use ICP in my project? Thanks!! |

|

Administrator

|

Hi,

1. These are warnings from PCL when it does nearest neighbor searching. They can be safely ignored. They would happen for example if the voxel size is small and points are farther than the voxel size. 2. That method projects RGB images on the mesh. If the mesh has a lot of polygons, the time will increase significantly. You can increase the voxel size to reduce the number of polygons or use util3d::meshDecimation() method to reduce the number of polygons by the specified factor. 3. To use ICP, follow PCL's ICP tutorial or use these convenience wrappers. To use it for Registration in RTAB-Map, you should create a point cloud from the depth image, then add it to SensorData sent to odometry/rtabmap. You can the set parameter "Reg/Strategy=2" to use Vis+Icp registration (1 is Icp-only). cheers |

|

Hello,

I noticed that you have mentioned about how to reduce the number of polygons, but how am I supposed to use util3d::meshDecimation() method? I didn't figure out. Any hint would be appreciated. |

|

Administrator

|

Hi,

The declaration: pcl::PolygonMesh::Ptr RTABMAP_EXP meshDecimation(const pcl::PolygonMesh::Ptr & mesh, float factor);The factor is how much (in percentage) you want to decimate the mesh. For example, setting to 0.8 will filter 80% of the polygons, so for a mesh of 100 000 polygons, you will get an output mesh of 20 000 polygons. cheers, Mathieu |

|

Hello,

Thank you so much for your response. I've managed to use the method meshDecimation to filter polygons. However, it seems to reach its limit when the factor is set to 0.99. Actually I have to filter 99.5% of the polygons so I can have a 3D model with less than 65000 vertices to be imported into Unity. What else method am I supposed to use in order to do that? I really appreciate your help. Alex. |

|

Administrator

|

This post was updated on .

How the mesh is created? With the code above, you can increase voxel size, you will get less polygons. If you use Poisson reconstruction, we can decrease the depth to produce a lot less polygons:

pcl::Poisson<pcl::PointXYZRGBNormal> poisson; poisson.setDepth(7); // <-- lower to get less polygons poisson.setInputCloud(cloud); poisson.reconstruct(*mesh); cheers, Mathieu |

«

Return to Official RTAB-Map Forum

|

1 view|%1 views

| Free forum by Nabble | Edit this page |