Issue with K4A RGB projection into Livox pointcloud

Issue with K4A RGB projection into Livox pointcloud

|

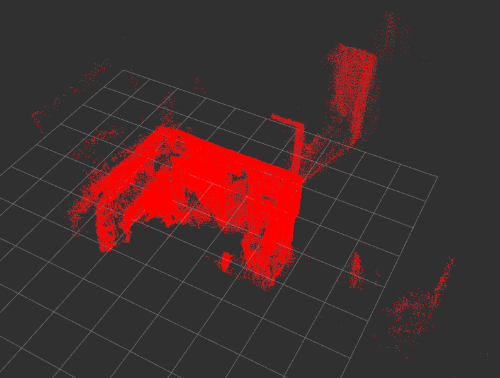

I have been experimenting with Livox Avia to generate map clouds, and the results have been quite good. Here is a short scan of one section of a room at high density:

As we have been discussing in more detail over in the Github repo, our goals are to fuse K4A (Azure Kinect) RGB streams with Livox point cloud for better visualization and 3D reconstruction. I have calibrated K4A to Livox Avia and have an extrinsics matrix (4x4), however it is not immediately clear how that could be converted to a 3x3 transform. So we have attempted to project the K4A /rgb/image_raw topic into the /livox/lidar pointcloud using pointcloud_to_depthimage, as follows: rosrun rtabmap_ros pointcloud_to_depthimage \ cloud:=/livox/lidar \ camera_info:=/rgb/camera_info \ _decimation:=2 \ _fill_holes_size:=30 I am aware the image will be without rectification, however I simply wanted to confirm that it is possible to create a depth image with these two topics. I have also made the assumption that a static_transform_publisher is not required for this to work, expecting that the image / cloud could be synced but would not be aligned without the TF transform. So the procedure, now, is as follows: 1. roslaunch livox_ros_driver livox_lidar.launch 2. roslaunch azure_kinect_ros_driver driver.launch 3. rosrun rtabmap_ros pointcloud_to_depthimage (with the parameters above). 4. rosrun rviz rviz and change to livox_frame fixed frame, subscribe to both /rtabmap/cloud map and /livox/lidar_transformed. Both do not yield anything on screen. Which steps have I missed? For clarification, when starting RViz, the following error messages are displayed: [ERROR] [1611608164.598253559]: Ignoring transform for child_frame_id "imu_link" from authority "unknown_publisher" because of an invalid quaternion in the transform (0.500000 0.000000 0.000000 0.000000) [ERROR] [1611608164.598475620]: Ignoring transform for child_frame_id "rgb_camera_link" from authority "unknown_publisher" because of an invalid quaternion in the transform (0.500000 0.000000 0.000000 0.000000) These are coming from K4A, but I presumed they can be ignored, at least for this depth projection test. |

|

Administrator

|

Hi, You would have to set fixed_frame_id as the code will assert with approx=true. The fixed_frame_id parameter can be set to the parent base frame of the system (e.g., base_link), or odom if you have an external guess odometry. Using odometry frame helps to correctly transform the point cloud to timestamp of the camera frame (to correctly reproject the point cloud in the camera when sensors are not synchronized and moving). This what is done with Tango example here, in which the point clouds are published at 5Hz but the camera at 30Hz, we used Tango's VIO to know the position of the cloud against the camera frame at the right time (assuming the camera already moved when the point cloud has been taken). TF is needed to make this work (at least one between the sensors). The resulting depth image don't have any distortion. It is then assumed that the corresponding RGB frame has to be rectified to match the depth image. The output of pointcloud_to_depthimage is a depth image (rqt_image_view), not a point cloud. From the 4 steps you are doing, rtabmap is not started, so /rtabmap/cloud won't be published. How did you calibrate K4A against livox? You don't have to convert to 3x3, create the TF from that 4x4 transform (convert the 3x3 rotation matrix of 4x4 to quaternion, and take x,y,z directly from last column of 4x4). Note that the large field-of-view of the K4A could be an advantage over livox sensor, if distance to subject to scan is short. cheers, Mathieu |

Re: Issue with K4A RGB projection into Livox pointcloud

|

This post was updated on .

The first thing we tried was a custom launch file, and it was using livox_frame as the fixed_frame_id. Here is the launch file we were experimenting with. Of note, these args (Line 50): <arg name="rtabmapviz" default="false"/> <arg name="scan_20_hz" default="true"/> <arg name="voxel_size" default="0.15"/> <arg name="rgb_topic" value="/rgb/image_raw" /> <arg name="camera_info_topic" value="/rgb/camera_info" /> <arg name="approx_sync" value="true" /> <arg name="frame_id" value="livox_frame" /> ...and an rtabmap rgbd_sync section (Line 87): <group ns="rtabmap"> <node pkg="nodelet" type="nodelet" name="rgbd_sync" args="standalone rtabmap_ros/rgbd_sync"> <remap from="rgb/image" to="$(arg rgb_topic)"/> <remap from="depth/image" to="/image_raw"/> <remap from="rgb/camera_info" to="$(arg camera_info_topic)"/> </node> There are probably other things wrong with this launch, including the absence of a TF subscriber, which you have indicated is mandatory for this to work. Regarding our K4A / Livox calibration, we used the Livox SDK camera-lidar calibration tool, and the resulting generated extrinsics are: 1.634574254408049931e-03 -9.999083605427132593e-01 -1.343870097109125909e-02 -3.064075431168198499e-02 -2.918370089044166130e-02 1.338529620719275393e-02 -9.994844398227433757e-01 -1.389091727855990832e-01 9.995727286042492832e-01 2.025922562512091473e-03 -2.915914725136253480e-02 -2.769531223365457456e-02 0.000000000000000000e+00 0.000000000000000000e+00 0.000000000000000000e+00 1.000000000000000000e+00 Livox is mounted on top about 10cm directly above the Kinect. Presuming these are in radians, then the proper node in the launch file should look like this (please confirm): <node pkg="tf" type="static_transform_publisher" name="livox_broadcaster" args="-3.064075431168198499e-02 -1.389091727855990832e-01 -2.769531223365457456e-02 -0.5043333 0.5101249 -0.4888305 -0.4964526 rgb_camera_link livox_frame 100" /> Unfortunately, trying to use this node produces the following errors: [ERROR] [1611621084.537495497]: "guess_from_tf" is true, but guess cannot be computed between frames "livox_frame_stabilized" -> "livox_frame". Aborting odometry update... [ WARN] [1611621084.547469278]: Setting "Grid/FromDepth" parameter to false (default true) as "subscribe_scan", "subscribe_scan_cloud" or "gen_scan" is true. The occupancy grid map will be constructed from laser scans. To get occupancy grid map from cloud projection, set "Grid/FromDepth" to true. To suppress this warning, add Warning: TF_OLD_DATA ignoring data from the past for frame livox_frame at time 720.598 according to authority unknown_publisher Possible reasons are listed at http://wiki.ros.org/tf/Errors%20explained at line 277 in /tmp/binarydeb/ros-melodic-tf2-0.6.5/src/buffer_core.cpp I can get rid of these messages by flipping the TF subscriber order: <node pkg="tf" type="static_transform_publisher" name="livox_broadcaster" args="-3.064075431168198499e-02 -1.389091727855990832e-01 -2.769531223365457456e-02 -0.5043333 0.5101249 -0.4888305 -0.4964526 rgb_camera_link livox_frame 100" /> But then there is another problem --- every 5 seconds, the following is displayed: [ WARN] [1611622826.041840831]: /rtabmap/rgbd_sync: Did not receive data since 5 seconds! Make sure the input topics are published ("$ rostopic hz my_topic") and the timestamps in their header are set. /rtabmap/rgbd_sync subscribed to (approx sync): /rgb/image_raw \ /rgbd_image \ /rgb/camera_info I can subscribe to /livox/lidar with rostopic hz but attempting to subscribe to /rtabmap/rgbd_image yields: ERROR: Cannot load message class for [rtabmap_ros/RGBDImage]. Are your messages built? |

Re: Issue with K4A RGB projection into Livox pointcloud

|

This post was updated on .

I have been doing some digging, and it appears that the issue comes down to some kind of infinite loop that is happening in the pointcloud_to_depthimage node. Specifically, it is this code block (I have bolded the line where it breaks):

image_transport::ImageTransport it(nh); depthImage16Pub_ = it.advertise("image_raw", 1); // 16 bits unsigned in mm depthImage32Pub_ = it.advertise("image", 1); // 32 bits float in meters pointCloudTransformedPub_ = nh.advertise<sensor_msgs::PointCloud2>(nh.resolveName("cloud")+"_transformed", 1); if(approx) { approxSync_ = new message_filters::Synchronizer<MyApproxSyncPolicy>(MyApproxSyncPolicy(queueSize), pointCloudSub_, cameraInfoSub_); approxSync_->registerCallback(boost::bind(&PointCloudToDepthImage::callback, this, _1, _2)); } else { fixedFrameId_.clear(); exactSync_ = new message_filters::Synchronizer<MyExactSyncPolicy>(MyExactSyncPolicy(queueSize), pointCloudSub_, cameraInfoSub_); exactSync_->registerCallback(boost::bind(&PointCloudToDepthImage::callback, this, _1, _2)); } pointCloudSub_.subscribe(nh, "cloud", 1); cameraInfoSub_.subscribe(nh, "camera_info", 1); If approx is set to False with no fixed_frame_id, then it will endlessly loop on the first "exactSync", apparently for the same reason. I am still trying to track down the reason, but during debugging, there is a constant cycle with no exit as follows: 1. The cons struct in tuple_basic.hpp is called -- cons() : head(), tail() {} 2. stl_deque.h is used to create a new deque base -- _Deque_base<_Tp, _Alloc>::_M_initialize_map(size_t __num_elements) 3. stl_deque attempts to return Map_alloc_traits::allocate(__map_alloc, __n); It goes around and around like that and never registers the callback. Looking at _num_elements, it is in the millions, so clearly, some buffer isn't being flushed somewhere, though I don't know this code well enough to even begin to know where to look. This doesn't look like an RTAB-Map problem, but rather a C++ library problem. Listing packages on my Xavier AGX, here is what is shown: libnetcdf-c++4/bionic,now 4.2-8 arm64 [installed,automatic] libsigc++-2.0-0v5/bionic,now 2.10.0-2 arm64 [installed,automatic] libstdc++-7-dev/bionic-updates,bionic-security,now 7.5.0-3ubuntu1~18.04 arm64 [installed] libstdc++6/bionic-updates,bionic-security,now 8.4.0-1ubuntu1~18.04 arm64 [installed] ...and.... gcc/bionic-updates,bionic-security,now 4:7.4.0-1ubuntu2.3 arm64 [installed] gcc-7/bionic-updates,bionic-security,now 7.5.0-3ubuntu1~18.04 arm64 [installed] gcc-7-base/bionic-updates,bionic-security,now 7.5.0-3ubuntu1~18.04 arm64 [installed] gcc-8-base/bionic-updates,bionic-security,now 8.4.0-1ubuntu1~18.04 arm64 [installed] libgcc-7-dev/bionic-updates,bionic-security,now 7.5.0-3ubuntu1~18.04 arm64 [installed] libgcc1/bionic-updates,bionic-security,now 1:8.4.0-1ubuntu1~18.04 arm64 [installed] So there is likely some interpreter confusion going on here, and that is the original reason the there are not messages being returned on the /image and /image_raw topic published by pointcloud_to_depthimage. It certainly publishes the topics, but then can't populate them due to the issue listed above. |

Re: Issue with K4A RGB projection into Livox pointcloud

|

I'm not sure there is a solution to this problem. Hopefully, @matlabbe will have an answer.

It looks like pointcloud_to_depthimage only works if both the cloud and the RGB stream are synced (with rtabmap/rgbd_sync, for example), but rgbd_sync requires a registered depth image. The registered depth image would come from pointcloud_to_depthimage, but this cannot work without synchronizing the streams. It's a Catch 22, as far as I can see. The Livox has a default publishing frequency of 10Hz and the Azure Kinect is publishing RGB images at a minimum of 5Hz and a maximum of 30Hz. So it looks like running pointcloud_to_depthimage is accumulating Pointcloud2 data at a faster rate than RGB image_raw topic, and there is no way to sync timestamps. For this reason, pointcloud_to_depthimage never runs its callback, it is stuck at the subscribing step. Even if I take the publishing frequency of the Livox down to 5Hz, pointcloud_to_depthimage never makes a callback. This behavior is seen on other systems with at least one non-K4A RGB camera. Can we somehow sync 3D LiDAR data with RGB images prior to attempting a projection? That's what is needed here. Without it, it doesn't seem like pointcloud_to_depthimage can work. |

Re: Issue with K4A RGB projection into Livox pointcloud

|

Here is further clarification of this problem, in case anyone else is seeing the sync issue:

rosrun tf static_transform_publisher 0 0 -0.1 5 0 0 0 rgb_camera_link livox_frame 200 rostopic hz /rgb/camera_info subscribed to [/rgb/camera_info] average rate: 5.013 min: 0.198s max: 0.201s std dev: 0.00126s window: 5 average rate: 5.003 min: 0.198s max: 0.202s std dev: 0.00125s window: 10 average rate: 5.004 min: 0.198s max: 0.202s std dev: 0.00127s window: 15 average rate: 4.999 min: 0.198s max: 0.202s std dev: 0.00120s window: 20 average rate: 5.001 min: 0.198s max: 0.202s std dev: 0.00125s window: 25 rostopic hz /livox/lidar subscribed to [/livox/lidar] average rate: 5.013 min: 0.197s max: 0.201s std dev: 0.00160s window: 5 average rate: 5.004 min: 0.197s max: 0.201s std dev: 0.00116s window: 10 average rate: 5.003 min: 0.197s max: 0.201s std dev: 0.00102s window: 15 average rate: 5.001 min: 0.197s max: 0.201s std dev: 0.00093s window: 20 average rate: 5.001 min: 0.197s max: 0.203s std dev: 0.00113s window: 25 So we see that both topics from RGB and LiDAR are synchronized via the static_transform_publisher. Now, here is the problem. I run: rosrun rtabmap_ros pointcloud_to_depthimage \ cloud:=/livox/lidar \ camera_info:=/rgb/camera_info \ _fixed_frame_id:=rgb_camera_link Now the sync window has shifted by 1 for the LiDAR: rostopic hz /livox/lidar subscribed to [/livox/lidar] average rate: 5.038 min: 0.197s max: 0.200s std dev: 0.00135s window: 4 average rate: 5.014 min: 0.197s max: 0.201s std dev: 0.00116s window: 9 average rate: 5.011 min: 0.197s max: 0.201s std dev: 0.00093s window: 14 average rate: 5.006 min: 0.197s max: 0.202s std dev: 0.00111s window: 19 average rate: 5.005 min: 0.197s max: 0.202s std dev: 0.00106s window: 24 It's not clear why this is happening, but now, if I attempt to subscribe to the /image topic from pointcloud_to_depthimage: rostopic hz /image subscribed to [/image] no new messages no new messages no new messages no new messages Now the confirmation --- I will change the Pointcloud2 source from /livox/lidar to /points2 (from the Azure Kinect), also a Pointcloud2 sensor message type. rosrun rtabmap_ros pointcloud_to_depthimage \ cloud:=/points2 \ camera_info:=/rgb/camera_info \ _fixed_frame_id:=rgb_camera_link And the result is.... rostopic hz image subscribed to [/image] average rate: 4.541 min: 0.216s max: 0.225s std dev: 0.00364s window: 4 average rate: 4.566 min: 0.206s max: 0.225s std dev: 0.00597s window: 8 average rate: 4.600 min: 0.206s max: 0.225s std dev: 0.00543s window: 13 average rate: 4.613 min: 0.204s max: 0.225s std dev: 0.00596s window: 17 So pointcloud_to_image will publish on the Image topic when using Azure Kinect's /point2 as a source, but not Livox's /livox/lidar, even technically it is of the same exact type -- and I have confirmed that xfer_format=2 is set in the Livox launch file in order to force standard pointcloud2 (pcl :: PointXYZI) format. I intuit there must be an obvious solution to this problem, but so far, only the sync issue makes any logical sense. |

|

Administrator

|

Hi,

To make this work: rosrun rtabmap_ros pointcloud_to_depthimage \ cloud:=/livox/lidar \ camera_info:=/rgb/camera_info \ _fixed_frame_id:=rgb_camera_linkYou should make sure /livox/lidar is published, /rgb/camera_info is published, and the timestamp in both topics is set and there is no offset between them (like each sensor working on its own clock). If you have odometry independent of the camera and lidar, I would set fixed_frame_id to odom frame id to get better reprojection. EDIT: IF you have independent odometry, you coudl use rtabmap_ros/point_cloud_assembler node to assemble livox clouds for some time before doing the reprojection (to get a denser depth image). cheers, Mathieu |

«

Return to Official RTAB-Map Forum

|

1 view|%1 views

| Free forum by Nabble | Edit this page |