Lidar based 3d SLAM in sparse feature environment

Reply |

Threaded

Open this post in threaded view

|

More ♦

♦

| Loading... |

| Reply to author |

| Edit post |

| Move post |

| Delete this post |

| Delete this post and replies |

| Change post date |

| Print post |

| Permalink |

| Raw mail |

Lidar based 3d SLAM in sparse feature environment

|

10 posts

|

This post was updated on Jun 09, 2024; 6:47pm.

Hello, I’m currently working on a drone project with the goal of the drone being able to autonomously explore and build a map of a building that doesn’t have many visual features e.g. blank walls and sparse furniture.

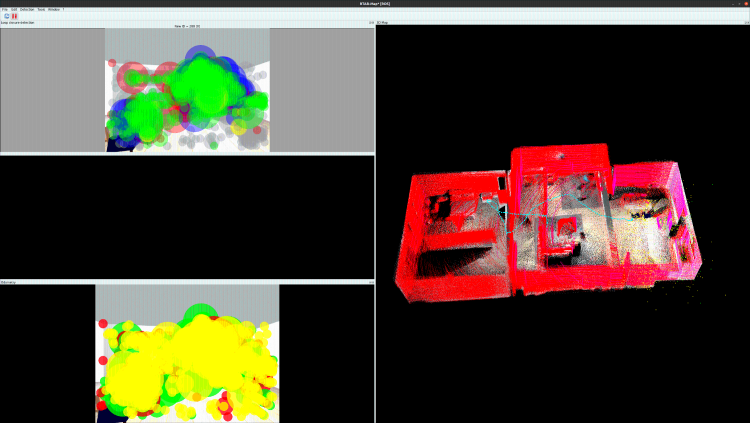

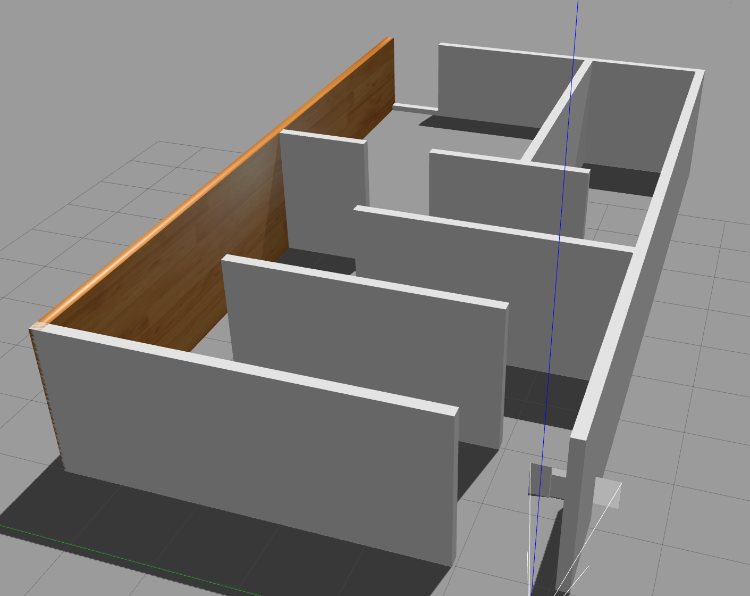

I recently came across the rtabmap_drone_example repository which is build on PX4 and RTABMAP and wanted to adapt this to my usecase. The drone also contains a Velodyne VLP16 Lidar which I added to the available Gazebo model and configuration giving promising initial results on maps with lots of visual features like the aws-robomaker-small-house-world. Working map:  Result:  The current configuration however doesn’t work well for maps without many visual features as the odometry starts to drift right after taking of. Can you provide some guidance on possible approaches for getting stable odometry in such an environment. The real-world test map will be build out of MDF and contain sparse furniture. Failure map:  Current slam.launch: <?xml version="1.0"?>

<launch>

<arg name="localization" default="false"/>

<arg name="rtabmap_viz" default="true"/>

<arg name="ground_truth" default="false"/>

<arg if="$(arg localization)" name="pre_args" default=""/>

<arg unless="$(arg localization)" name="pre_args" default="-d"/>

<node pkg="nodelet" type="nodelet" name="imu_to_tf" args="standalone rtabmap_util/imu_to_tf">

<remap from="imu/data" to="/mavros/imu/data"/>

<param name="fixed_frame_id" value="base_link_stabilized"/>

<param name="base_frame_id" value="base_link"/>

</node>

<!-- To connect rtabmap planning stuff with move_base below -->

<param name="/rtabmap/rtabmap/use_action_for_goal" value="true"/>

<remap from="/rtabmap/move_base" to="/move_base"/>

<!-- VSLAM -->

<param name="/rtabmap/rtabmap/latch" value="false"/> <!-- For some reason, if we latch grid_map, the global costmap inflation layer will create holes on robot path. To avoid holes, republish grid_map on each update (latching disabled). -->

<include file="$(find rtabmap_launch)/launch/rtabmap.launch">

<arg name="localization" value="$(arg localization)"/>

<arg name="args" value="$(arg pre_args) --Optimizer/GravitySigma 0.1 --Vis/FeatureType 10 --Kp/DetectorStrategy 10 --Grid/MapFrameProjection true --NormalsSegmentation false --Grid/MaxGroundHeight 1 --Grid/MaxObstacleHeight 1.6 --RGBD/StartAtOrigin true" />

<arg name="rtabmap_viz" value="$(arg rtabmap_viz)" />

<arg name="frame_id" value="base_link" />

<arg name="odom_guess_frame_id" value="base_link_stabilized" />

<arg name="rgb_topic" value="/r200/camera/color/image_raw" />

<arg name="depth_topic" value="/r200/camera/depth/image_raw" />

<arg name="camera_info_topic" value="/r200/camera/color/camera_info" />

<arg name="imu_topic" value="/mavros/imu/data"/>

<arg name="wait_imu_to_init" value="true"/>

<arg name="approx_sync" value="true"/>

<arg name="subscribe_scan_cloud" value="true"/>

<param name="subscribe_depth" value="false"/>

<param name="subscribe_rgbd" value="false"/>

<param name="subscribe_rgb" value="false"/>

<arg name="scan_cloud_topic" value="/velodyne_points"/>

<arg if="$(arg ground_truth)" name="ground_truth_frame_id" value="world"/>

<arg if="$(arg ground_truth)" name="ground_truth_base_frame_id" value="base_link_gt"/>

</include>

<!-- Costmap -->

<node pkg="nodelet" type="nodelet" name="camera_points_xyz" args="standalone rtabmap_util/point_cloud_xyz">

<remap from="depth/image" to="/r200/camera/depth/image_raw"/>

<remap from="depth/camera_info" to="/r200/camera/depth/camera_info"/>

<remap from="cloud" to="camera_cloud" />

<param name="decimation" type="double" value="4"/>

<param name="voxel_size" type="double" value="0.0"/>

<param name="approx_sync" type="bool" value="true"/>

</node>

<!-- navigation -->

<node pkg="move_base" type="move_base" respawn="false" name="move_base" output="screen">

<remap from="map" to="/rtabmap/grid_map"/>

<remap from="odom" to="/rtabmap/odom"/>

<param name="base_local_planner" value="dwa_local_planner/DWAPlannerROS" />

<rosparam file="$(find rtabmap_drone_example)/param/costmap_common_params.yaml" command="load" ns="global_costmap" />

<rosparam file="$(find rtabmap_drone_example)/param/costmap_common_params.yaml" command="load" ns="local_costmap" />

<rosparam file="$(find rtabmap_drone_example)/param/global_costmap_params.yaml" command="load" />

<rosparam file="$(find rtabmap_drone_example)/param/local_costmap_params.yaml" command="load" />

<rosparam file="$(find rtabmap_drone_example)/param/base_local_planner_params.yaml" command="load" />

</node>

<node name="empty_voxels_markers" pkg="rtabmap_costmap_plugins" type="voxel_markers" args="voxel_grid:=/move_base/local_costmap/voxel_layer/voxel_grid visualization_marker:=/voxels_empty">

<param name="cell_type" value="0"/>

</node>

<node name="marked_voxels_markers" pkg="rtabmap_costmap_plugins" type="voxel_markers" args="voxel_grid:=/move_base/local_costmap/voxel_layer/voxel_grid visualization_marker:=/voxels_marked" />

<!-- joystick -->

<rosparam file="$(find rtabmap_drone_example)/config/joy_config.yaml" />

<node pkg="joy" type="joy_node" name="joy_node">

<param name="autorepeat_rate" value="5"/>

</node>

<node pkg="teleop_twist_joy" type="teleop_node" name="teleop_node" output="screen">

<param name="autorepeat_rate" value="5"/>

</node>

<!-- Ground truth -->

<node if="$(arg ground_truth)" pkg="tf" type="static_transform_publisher" name="worldtomap_broadcaster" args="0 0 0 0 0 0 1 world map 100" />

<node if="$(arg ground_truth)" pkg="rtabmap_util" type="gazebo_ground_truth.py" name="gazebo_ground_truth" output="screen">

<param name="frame_id" value="world"/>

<param name="child_frame_id" value="base_link_gt"/>

<param name="gazebo_frame_id" value="drone::base_link"/>

</node>

</launch>

Any help is greatly appreciated.

Update: I now tried out some different combinations of sensors and maps. Results can be seen below: AWS RoboMaker Small House WorldVSLAM (default settings of repository)<include file="$(find rtabmap_launch)/launch/rtabmap.launch">

<arg name="localization" value="$(arg localization)"/>

<arg name="args" value="$(arg pre_args) --Optimizer/GravitySigma 0.1 --Vis/FeatureType 10 --Kp/DetectorStrategy 10 --Grid/MapFrameProjection true --NormalsSegmentation false --Grid/MaxGroundHeight 1 --Grid/MaxObstacleHeight 1.6 --RGBD/StartAtOrigin true" />

<arg name="rtabmap_viz" value="$(arg rtabmap_viz)" />

<arg name="frame_id" value="base_link" />

<arg name="odom_guess_frame_id" value="base_link_stabilized" />

<arg name="rgb_topic" value="/r200/camera/color/image_raw" />

<arg name="depth_topic" value="/r200/camera/depth/image_raw" />

<arg name="camera_info_topic" value="/r200/camera/color/camera_info" />

<arg name="imu_topic" value="/mavros/imu/data"/>

<arg name="wait_imu_to_init" value="true"/>

<arg name="approx_sync" value="true"/>

<arg if="$(arg ground_truth)" name="ground_truth_frame_id" value="world"/>

<arg if="$(arg ground_truth)" name="ground_truth_base_frame_id" value="base_link_gt"/>

</include>

Result: https://youtu.be/X2k-8kg89g8Camera + Lidar with Visual Odometry<include file="$(find rtabmap_launch)/launch/rtabmap.launch">

<arg name="localization" value="$(arg localization)"/>

<arg name="args" value="$(arg pre_args) --Optimizer/GravitySigma 0.1 --Vis/FeatureType 10 --Kp/DetectorStrategy 10 --Grid/MapFrameProjection true --NormalsSegmentation false --Grid/MaxGroundHeight 1 --Grid/MaxObstacleHeight 1.6 --RGBD/StartAtOrigin true" />

<arg name="rtabmap_viz" value="$(arg rtabmap_viz)" />

<arg name="frame_id" value="base_link" />

<arg name="odom_guess_frame_id" value="base_link_stabilized" />

<arg name="rgb_topic" value="/r200/camera/color/image_raw" />

<arg name="depth_topic" value="/r200/camera/depth/image_raw" />

<arg name="camera_info_topic" value="/r200/camera/color/camera_info" />

<arg name="imu_topic" value="/mavros/imu/data"/>

<arg name="wait_imu_to_init" value="true"/>

<arg name="approx_sync" value="true"/>

<arg name="subscribe_scan_cloud" value="true"/>

<arg name="scan_cloud_topic" value="/velodyne_points"/>

<arg if="$(arg ground_truth)" name="ground_truth_frame_id" value="world"/>

<arg if="$(arg ground_truth)" name="ground_truth_base_frame_id" value="base_link_gt"/>

</include>

Result: https://youtu.be/V5ZD5773K80Camera + Lidar with ICP Odometry <include file="$(find rtabmap_launch)/launch/rtabmap.launch">

<arg name="localization" value="$(arg localization)"/>

<arg name="args" value="$(arg pre_args) --Optimizer/GravitySigma 0.1 --Vis/FeatureType 10 --Kp/DetectorStrategy 10 --Grid/MapFrameProjection true --NormalsSegmentation false --Grid/MaxGroundHeight 1 --Grid/MaxObstacleHeight 1.6 --RGBD/StartAtOrigin true" />

<arg name="rtabmap_viz" value="$(arg rtabmap_viz)" />

<arg name="frame_id" value="base_link" />

<arg name="odom_guess_frame_id" value="base_link_stabilized" />

<arg name="rgb_topic" value="/r200/camera/color/image_raw" />

<arg name="depth_topic" value="/r200/camera/depth/image_raw" />

<arg name="camera_info_topic" value="/r200/camera/color/camera_info" />

<arg name="imu_topic" value="/mavros/imu/data"/>

<arg name="wait_imu_to_init" value="true"/>

<arg name="approx_sync" value="true"/>

<arg name="subscribe_scan_cloud" value="true"/>

<arg name="scan_cloud_topic" value="/velodyne_points"/>

<arg name="icp_odometry" value="true"/>

<arg if="$(arg ground_truth)" name="ground_truth_frame_id" value="world"/>

<arg if="$(arg ground_truth)" name="ground_truth_base_frame_id" value="base_link_gt"/>

</include>

Result: https://youtu.be/KtJFd-DVsB4Custom mapSame settings as above: Custom map camera: https://youtu.be/WW58vcVW380 Custom map camera + lidar: https://youtu.be/hG3MEwAEl9s Custom map ICP: https://youtu.be/4z_wYMdYGU0 |

Reply |

Threaded

Open this post in threaded view

|

More ♦

♦

| Loading... |

| Reply to author |

| Edit post |

| Move post |

| Delete this post |

| Delete this post and replies |

| Change post date |

| Print post |

| Permalink |

| Raw mail |

Re: Lidar based 3d SLAM in sparse feature environment

|

Administrator

4446 posts

|

If your target environment is textureless and you have access to a lidar, I'll would do icp_odometry. The problem in your test with icp_odometry is that default ICP parameters are tuned for 2D lidar, not 3D lidar. There is a test_velodyne.launch example here using velodyne for hand-held mapping.

Feeding the IMU to icp_odometry can also help for better ICP. From your launch file, you may be able to continue using rtabmap.launch by adding those parameters related to lidar (based on test_velodyne.launch): odom_args:=" Icp/MaxTranslation 2 Icp/CorrespondenceRatio 0.01 Odom/ScanKeyFrameThr 0.4 OdomF2M/ScanSubtractRadius 0.15 OdomF2M/ScanMaxSize 15000 ..." rtabmap_args:=" Icp/PointToPlane true Icp/Iterations 10 Icp/VoxelSize 0.15 Icp/Epsilon 0.001 Icp/PointToPlaneK 20 Icp/PointToPlaneRadius 0 Icp/MaxTranslation 3 Icp/MaxCorrespondenceDistance 1.5 Icp/PM true Icp/PMOutlierRatio 0.7 Icp/CorrespondenceRatio 0.2 RGBD/ProximityMaxGraphDepth 0 RGBD/ProximityPathMaxNeighbors 1 Mem/LaserScanNormalK 20 Reg/Strategy 1 ..." Notes: - icp_odometry node combines rtabmap_args and odom_args together (that's why I've overriden 2 ICP parameters for odom). - I skipped to mention deskewing stuff to keep example simple in simulation, but you would need to re-enable it for real-world tests. - In the test_velodyne.launch example, there is also a point_cloud_assembler node, you can use it if you want to record ALL scans in the database. cheers, Mathieu |

Reply |

Threaded

Open this post in threaded view

|

More ♦

♦

| Loading... |

| Reply to author |

| Edit post |

| Move post |

| Delete this post |

| Delete this post and replies |

| Change post date |

| Print post |

| Permalink |

| Raw mail |

Re: Lidar based 3d SLAM in sparse feature environment

|

10 posts

|

Hey Mathieu, thanks for the information about the ICP Odometry settings for the 3d Lidar. After updating the ICP parameters I was able to fly on the above mentioned map without flyaways (for the most part). Still there is quite a large Z-drift while flying (around 0.5-1m when exploring the whole map). As I'm pretty new to RTABMAP and SLAM in general parameter-tuning proves quite difficult. Do you have any tips for how to best approach such a situation?

Current config: https://github.com/TannerGilbert/rtabmap_drone_example/blob/master/launch/slam.launch |

Reply |

Threaded

Open this post in threaded view

|

More ♦

♦

| Loading... |

| Reply to author |

| Edit post |

| Move post |

| Delete this post |

| Delete this post and replies |

| Change post date |

| Print post |

| Permalink |

| Raw mail |

Re: Lidar based 3d SLAM in sparse feature environment

|

Administrator

4446 posts

|

Can you share a database? 0.5-1m offset seems quite large if you have a 360 3D lidar.

|

Reply |

Threaded

Open this post in threaded view

|

More ♦

♦

| Loading... |

| Reply to author |

| Edit post |

| Move post |

| Delete this post |

| Delete this post and replies |

| Change post date |

| Print post |

| Permalink |

| Raw mail |

Re: Lidar based 3d SLAM in sparse feature environment

|

10 posts

|

Hey Mathieu,

sorry for the late reply. We shifted our focus from the simulation to tests with real data as we experienced problems on the simulated data with multiple algorithms. Furthermore I also needed a couple of weeks to get more familiar with the unterlying theory of SLAM so I can do more than changing parameters and hoping it works out. Your replies on lots of Github issues and questions in this forum were lots of help for this so huge thanks for that. Still as I had a look at the simulation today I will share my findings on this first. I compared the ground truth odometry obtained through libgazebo_ros_p3d.so with the odometry obtained from /rtabmap/odom and it seemed to match pretty well. Also the drone moving upwards can be seen in both topics.  I'm not sure why this happens though when looking at the offboard_node.cpp file. As my focus mainly shifted on using real hardware and working with real data I would have some new questions. First I'm not quite sure what happens when a Loop Closure is detected. As far as I understand /rtabmap/odom is not changed on loop closure. Here I mainly have two questions 1) what topic/transform should be used to provide the PX4 with /mavros/vision_pose/pose and 2) if I use RTABMAP with the Octomap integration for exploration how can I take loop closure into account? Additionally for the real data I noticed that the Depth and RGB projection from my Realsense D455 is not perfectly aligned with the Lidar data (Velodyne VLP-16). Do you have any suggestion for tuning for that? Current real-world results:    Simulation bag (using https://github.com/TannerGilbert/rtabmap_drone_example): https://drive.google.com/file/d/1bHPLPg9y_sIOW-vzoqYTJY3LvFQAo-EE/view?usp=drive_link I'll also try to provide a Rosbag of a real flight where the camera and lidar alignment inperfections can be observed as soon as I got confirmation that I'm allowed to share. Thanks again for creating such a great library and for all the help you offer. Cheers, Gilbert |

Reply |

Threaded

Open this post in threaded view

|

More ♦

♦

| Loading... |

| Reply to author |

| Edit post |

| Move post |

| Delete this post |

| Delete this post and replies |

| Change post date |

| Print post |

| Permalink |

| Raw mail |

Re: Lidar based 3d SLAM in sparse feature environment

|

Administrator

4446 posts

|

In our rtabmap drone example, we created a node publishing vision_pose at 50 Hz from latest available TF map->base_link. The octomap published by rtabmap is updated on loop closures. You can also use /octomap_global_frontier_space to know where is the frontier (unknown space next to empty space) for exploration (see https://www.youtube.com/watch?v=S_IlPVMYVkM for example of what it looks like -> cyan cubes). Make sure depth from realsense is aligned with RGB. To calibrate extrinsics between the camera RGB frame and the lidar frame, you can do it manually or automatically using some lidar-camera calibration tools. To manually do it, I generally set initial translation/rotation (measured roughly with a measuring tape or from CAD) between RGB camera frame of realense and the lidar frame, then refine that TF rotation by looking in RVIZ with Camera View display and adding the point cloud of the lidar as overlay. However, that may not be as accurate than using an automated tool but is pretty fast to do for a rough test. cheers, Mathieu |

Reply |

Threaded

Open this post in threaded view

|

More ♦

♦

| Loading... |

| Reply to author |

| Edit post |

| Move post |

| Delete this post |

| Delete this post and replies |

| Change post date |

| Print post |

| Permalink |

| Raw mail |

Re: Lidar based 3d SLAM in sparse feature environment

|

10 posts

|

This post was updated on Aug 13, 2024; 6:28am.

As always thanks for all the help Mathieu.

I spend the last few weeks checking out how to do sensor calibration and have seen some improvments for the depth overlay but the depth spread of the Realsense is still quite large. I used cam_lidar_calibrationfor the extrinsics between lidar and camera. Results seem decent when comparing to CAD. For Lidar to IMU configuration I used lidar_imu_calib to get the rotation and measured the translation as I could not get any plausible results for the translation through calibration. Current open questions: - For using /mavros/vision_pose/pose it's recommended to have at least 30 Hz but the ICP odometry adjusts to the frequency of the Lidar (Velodyne VLP-16 in my case which by default only has 10 Hz). To get around this I currently publish the map->base_link with higher frequency. Do you think it makes sense to increase the Velodyne RPM to get up to 20 Hz but lower the angular resolution? - Is there some way to increase Z accuracy as we currently experience quite a large offset (can be seen for the bag provided below) - What other settings should I have a look at the improve localization and overall map quality? Current config: <?xml version="1.0"?>

<launch>

<arg name="rtabmap_viz" default="true" />

<arg name="use_imu" default="true" />

<arg name="imu_topic" default="/mavros/imu/data" />

<arg name="scan_20_hz" default="false" /> <!-- If we launch the velodyne with "rpm:=1200"

argument -->

<arg name="deskewing" default="true" />

<arg name="slerp" default="false" /> <!-- If true, a slerp between the first and last time will

be used to deskew each point, which is faster than using tf for every point but less accurate -->

<arg name="organize_cloud" default="$(arg deskewing)" /> <!-- Should be organized if deskewing is

enabled -->

<arg name="scan_topic" default="/velodyne_points" />

<arg name="frame_id" default="base_link" />

<arg name="queue_size" default="10" /> <!-- Set to 100 for kitti dataset to make sure all scans

are processed -->

<arg name="queue_size_odom" default="1" /> <!-- Set to 100 for kitti dataset to make sure all

scans are processed -->

<arg name="loop_ratio" default="0.2" />

<arg name="resolution" default="0.1" /> <!-- set 0.05-0.3 for indoor, set 0.3-0.5 for outdoor

(0.4 for kitti) -->

<arg name="iterations" default="10" />

<!-- Grid parameters -->

<arg name="ground_is_obstacle" default="true" />

<arg name="grid_max_range" default="5" />

<!-- For F2M Odometry -->

<arg name="ground_normals_up" default="false" /> <!-- set to true when velodyne is always

horizontal to ground (ground robot, car, kitti) -->

<arg name="local_map_size" default="15000" />

<arg name="key_frame_thr" default="0.4" />

<!-- For FLOAM Odometry -->

<arg name="floam" default="false" /> <!-- RTAB-Map should be built with FLOAM

http://official-rtab-map-forum.206.s1.nabble.com/icp-odometry-with-LOAM-crash-tp8261p8563.html -->

<arg name="floam_sensor" default="0" /> <!-- 0=16 rings (VLP16), 1=32 rings, 2=64 rings (kitti

dataset) -->

<!-- IMU orientation estimation and publish tf -->

<node if="$(arg use_imu)" pkg="nodelet" type="nodelet" name="imu_to_tf"

args="standalone rtabmap_util/imu_to_tf">

<remap from="imu/data" to="$(arg imu_topic)" />

<param name="fixed_frame_id" value="$(arg frame_id)_stabilized" />

<param name="base_frame_id" value="$(arg frame_id)" />

</node>

<!-- Lidar Deskewing -->

<node if="$(arg deskewing)" pkg="nodelet" type="nodelet" name="lidar_deskewing" args="standalone rtabmap_util/lidar_deskewing" output="screen">

<param name="wait_for_transform" value="0.05"/>

<param name="fixed_frame_id" value="$(arg frame_id)_stabilized"/>

<param name="slerp" value="$(arg slerp)"/>

<remap from="input_cloud" to="$(arg scan_topic)"/>

</node>

<arg if="$(arg deskewing)" name="scan_topic_deskewed" default="$(arg scan_topic)/deskewed"/>

<arg unless="$(arg deskewing)" name="scan_topic_deskewed" default="$(arg scan_topic)"/>

<!-- Localization-only mode -->

<arg name="localization" default="false"/>

<arg if="$(arg localization)" name="rtabmap_args" default=""/>

<arg unless="$(arg localization)" name="rtabmap_args" default="--delete_db_on_start"/>

<group ns="rtabmap">

<node pkg="rtabmap_odom" type="icp_odometry" name="icp_odometry" output="screen" args="$(arg rtabmap_args)">

<remap from="scan_cloud" to="$(arg scan_topic_deskewed)" />

<param name="frame_id" type="string" value="$(arg frame_id)" />

<param name="odom_frame_id" type="string" value="odom" />

<param name="queue_size" type="int" value="$(arg queue_size_odom)" />

<param name="wait_for_transform_duration" type="double" value="0.2" />

<param if="$(arg scan_20_hz)" name="expected_update_rate" type="double" value="25" />

<param unless="$(arg scan_20_hz)" name="expected_update_rate" type="double" value="15" />

<remap if="$(arg use_imu)" from="imu" to="$(arg imu_topic)" />

<param if="$(arg use_imu)" name="guess_frame_id" type="string"

value="$(arg frame_id)_stabilized" />

<param if="$(arg use_imu)" name="wait_imu_to_init" type="bool" value="true" />

<!-- ICP parameters -->

<param name="Icp/PointToPlane" type="string" value="true" />

<param name="Icp/Iterations" type="string" value="$(arg iterations)" />

<param if="$(arg floam)" name="Icp/VoxelSize" type="string" value="0" />

<param unless="$(arg floam)" name="Icp/VoxelSize" type="string"

value="$(arg resolution)" />

<param name="Icp/DownsamplingStep" type="string" value="1" /> <!-- cannot be increased

with ring-like lidar -->

<param name="Icp/Epsilon" type="string" value="0.001" />

<param if="$(arg floam)" name="Icp/PointToPlaneK" type="string" value="0" />

<param unless="$(arg floam)" name="Icp/PointToPlaneK" type="string" value="20" />

<param name="Icp/PointToPlaneRadius" type="string" value="0" />

<param name="Icp/MaxTranslation" type="string" value="2" />

<param name="Icp/MaxCorrespondenceDistance" type="string" value="$(eval resolution*10)" />

<param name="Icp/PM" type="string" value="true" />

<param name="Icp/PMOutlierRatio" type="string" value="0.7" />

<param name="Icp/CorrespondenceRatio" type="string" value="0.01" />

<param if="$(arg ground_normals_up)" name="Icp/PointToPlaneGroundNormalsUp"

type="string" value="0.8" />

<!-- localization mode -->

<param name="Mem/InitWMWithAllNodes" type="string" value="$(arg localization)"/>

<param if="$(arg localization)" name="Mem/IncrementalMemory" type="string" value="false"/>

<param unless="$(arg localization)" name="Mem/IncrementalMemory" type="string" value="true"/>

<!-- Odom parameters -->

<param name="Odom/ScanKeyFrameThr" type="string" value="$(arg key_frame_thr)" />

<param if="$(arg floam)" name="Odom/Strategy" type="string" value="11" />

<param unless="$(arg floam)" name="Odom/Strategy" type="string" value="0" />

<param name="OdomF2M/ScanSubtractRadius" type="string" value="$(arg resolution)" />

<param name="OdomF2M/ScanMaxSize" type="string" value="$(arg local_map_size)" />

<param name="OdomLOAM/Sensor" type="string" value="$(arg floam_sensor)" />

<param name="OdomLOAM/Resolution" type="string" value="$(arg resolution)" />

<param if="$(eval not deskewing and scan_20_hz)" name="OdomLOAM/ScanPeriod"

type="string" value="0.05" />

<param if="$(eval not deskewing and not scan_20_hz)" name="OdomLOAM/ScanPeriod"

type="string" value="0.1" />

<param if="$(arg deskewing)" name="OdomLOAM/ScanPeriod" type="string" value="0" />

</node>

<node pkg="rtabmap_slam" type="rtabmap" name="rtabmap" output="screen" args="$(arg rtabmap_args)">

<param name="frame_id" type="string" value="$(arg frame_id)" />

<param name="subscribe_depth" type="bool" value="true"/>

<param name="subscribe_rgb" type="bool" value="true"/>

<param name="subscribe_scan_cloud" type="bool" value="true" />

<param name="approx_sync" type="bool" value="true" />

<param name="wait_for_transform_duration" type="double" value="0.2" />

<remap from="scan_cloud" to="assembled_cloud" />

<remap from="rgb/image" to="/camera/color/image_raw"/>

<remap from="depth/image" to="/camera/aligned_depth_to_color/image_raw"/>

<remap from="rgb/camera_info" to="/camera/color/camera_info"/>

<remap from="depth/camera_info" to="/camera/depth/camera_info"/>

<remap from="imu" to="$(arg imu_topic)" />

<!-- RTAB-Map's parameters -->

<param name="Rtabmap/DetectionRate" type="string" value="1" />

<param name="RGBD/NeighborLinkRefining" type="string" value="false" />

<param name="RGBD/ProximityBySpace" type="string" value="true" />

<param name="RGBD/ProximityMaxGraphDepth" type="string" value="0" />

<param name="RGBD/ProximityPathMaxNeighbors" type="string" value="10" />

<param name="RGBD/AngularUpdate" type="string" value="0.05" />

<param name="RGBD/LinearUpdate" type="string" value="0.05" />

<param name="Mem/NotLinkedNodesKept" type="string" value="false" />

<param name="Mem/STMSize" type="string" value="30" />

<param name="Mem/LaserScanNormalK" type="string" value="20" />

<!-- localization mode -->

<param name="Mem/InitWMWithAllNodes" type="string" value="$(arg localization)"/>

<param if="$(arg localization)" name="Mem/IncrementalMemory" type="string" value="false"/>

<param unless="$(arg localization)" name="Mem/IncrementalMemory" type="string" value="true"/>

<!-- <param name="Mem/LocalizationDataSaved" type="string" value="true"/> --> <!-- Whether map should be updated in localization mode (default=false)-->

<param if="$(arg localization)" name="RGBD/StartAtOrigin" type="string" value="true"/>

<param name="Reg/Strategy" type="string" value="1" />

<param name="Grid/CellSize" type="string" value="$(eval resolution)" />

<param name="Grid/RangeMax" type="string" value="$(arg grid_max_range)" />

<param name="Grid/RayTracing" type="string" value="true" />

<param name="Grid/ClusterRadius" type="string" value="1" />

<param name="Grid/GroundIsObstacle" type="string" value="$(arg ground_is_obstacle)" />

<param name="Optimizer/GravitySigma" type="string" value="0.3" />

<!-- ICP parameters -->

<param name="Icp/VoxelSize" type="string" value="$(arg resolution)" />

<param name="Icp/PointToPlaneK" type="string" value="20" />

<param name="Icp/PointToPlaneRadius" type="string" value="0" />

<param name="Icp/PointToPlane" type="string" value="true" />

<param name="Icp/Iterations" type="string" value="$(arg iterations)" />

<param name="Icp/Epsilon" type="string" value="0.001" />

<param name="Icp/MaxTranslation" type="string" value="3" />

<param name="Icp/MaxCorrespondenceDistance" type="string" value="$(eval resolution*10)" />

<param name="Icp/PM" type="string" value="true" />

<param name="Icp/PMOutlierRatio" type="string" value="0.7" />

<param name="Icp/CorrespondenceRatio" type="string" value="$(arg loop_ratio)" />

</node>

<node if="$(arg rtabmap_viz)" name="rtabmap_viz" pkg="rtabmap_viz" type="rtabmap_viz"

output="screen">

<param name="frame_id" type="string" value="$(arg frame_id)" />

<param name="odom_frame_id" type="string" value="odom" />

<param name="subscribe_odom_info" type="bool" value="true" />

<param name="subscribe_scan_cloud" type="bool" value="true" />

<param name="approx_sync" type="bool" value="false" />

<remap from="scan_cloud" to="odom_filtered_input_scan" />

<remap from="odom_info" to="odom_info" />

</node>

<node pkg="nodelet" type="nodelet" name="point_cloud_assembler" args="standalone rtabmap_util/point_cloud_assembler" output="screen">

<remap from="cloud" to="$(arg scan_topic_deskewed)"/>

<remap from="odom" to="odom"/>

<param if="$(arg scan_20_hz)" name="max_clouds" type="int" value="20" />

<param unless="$(arg scan_20_hz)" name="max_clouds" type="int" value="10" />

<param name="fixed_frame_id" type="string" value="" />

<param name="queue_size" type="int" value="$(arg queue_size)" />

</node>

</group>

</launch>

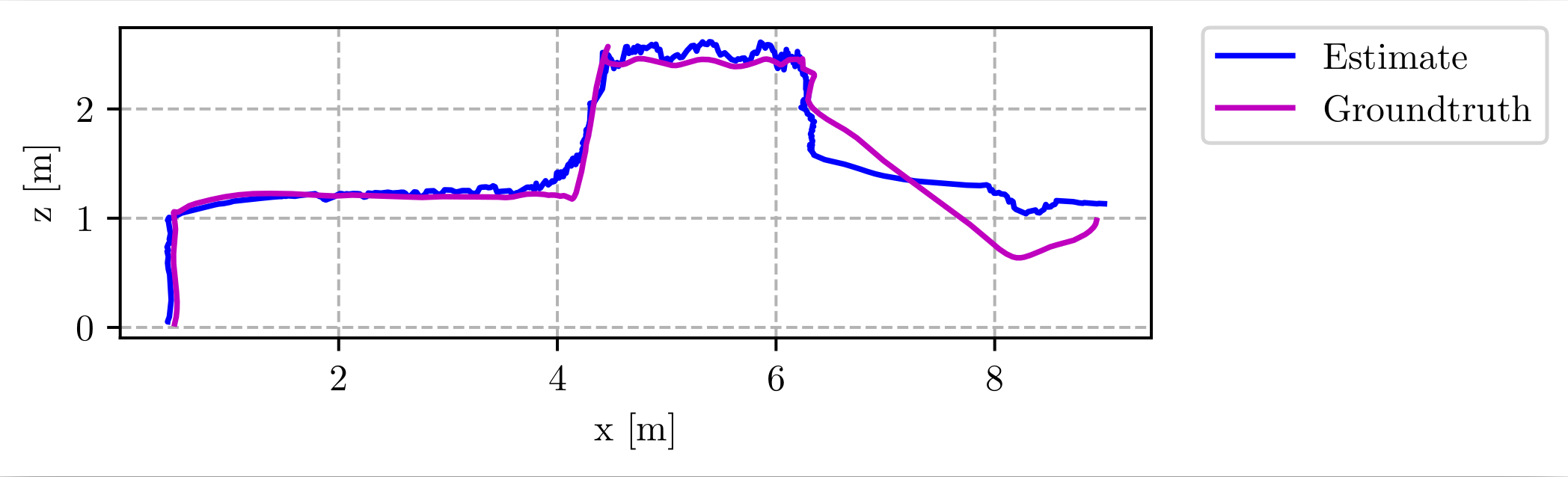

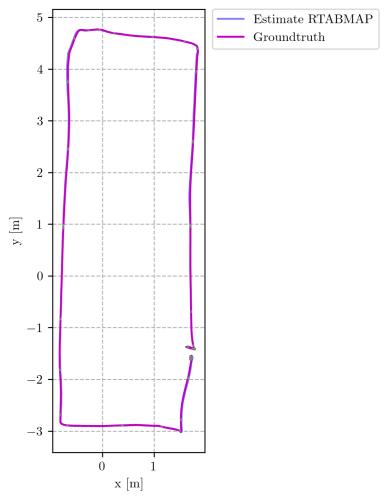

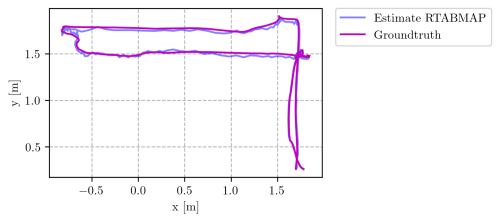

Rosbag of real test flight (Note /mavros/vision_pose/pose is Optitrack data): https://drive.google.com/file/d/1VyCl1a7A4dyc669E1452nhGhAXetonFD/view?usp=drive_link Trajectory difference with current settings (Ground truth measured with Optitrack):   Update: I switched from the above launch file that has rtabmap_slam/rtabmap and rtabmap_odom/icp_odometry separately to a launch filer using rtabmap.launch similar to the rtabmap_drone_example and managed to achieve a lot better results on the above mentioned bag. As always thanks for all the help. Cheers, Gilbert |

Reply |

Threaded

Open this post in threaded view

|

More ♦

♦

| Loading... |

| Reply to author |

| Edit post |

| Move post |

| Delete this post |

| Delete this post and replies |

| Change post date |

| Print post |

| Permalink |

| Raw mail |

Re: Lidar based 3d SLAM in sparse feature environment

|

Administrator

4446 posts

|

Hi,

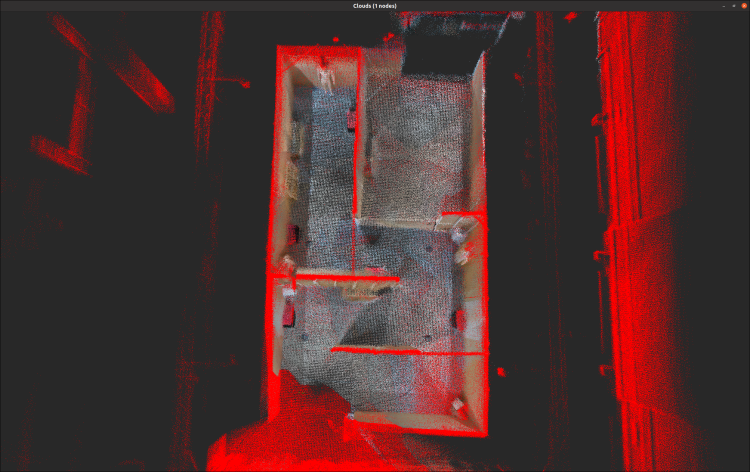

I checked the bag and the velodyne_points topic looks not generated correctly. We assume that a full 360 rotation of points are published in same topic, which seems not the case here:  I think it may be the cause why it is jumping that much. cheers, Mathieu |

Reply |

Threaded

Open this post in threaded view

|

More ♦

♦

| Loading... |

| Reply to author |

| Edit post |

| Move post |

| Delete this post |

| Delete this post and replies |

| Change post date |

| Print post |

| Permalink |

| Raw mail |

Re: Lidar based 3d SLAM in sparse feature environment

|

10 posts

|

Thanks for having a look. I figured out the problem for that bag. I changed the rate of the Lidar to 900 RPM but it didn't apply properly on the Lidar itself so there was a mismatch between the RPM set in the ROS node and the actual lidar.

As always really appreciate the help. Best, Gilbert |

Reply |

Threaded

Open this post in threaded view

|

More ♦

♦

| Loading... |

| Reply to author |

| Edit post |

| Move post |

| Delete this post |

| Delete this post and replies |

| Change post date |

| Print post |

| Permalink |

| Raw mail |

Re: Lidar based 3d SLAM in sparse feature environment

|

10 posts

|

This post was updated on Aug 17, 2024; 2:27pm.

Regarding initialization of the initial pose in SLAM mode. Is there any way to set the initial pose to xyz=0,0,0 and rpy=0,0,0 similar to <arg name="initial_pose" default="0 0 0 0 0 0"/> in localization mode? Currently it always takes the pose estimate from /mavros/imu/data which means map x axis is not aligned with the starting position of the drone.

Cheers, Gilbert |

Reply |

Threaded

Open this post in threaded view

|

More ♦

♦

| Loading... |

| Reply to author |

| Edit post |

| Move post |

| Delete this post |

| Delete this post and replies |

| Change post date |

| Print post |

| Permalink |

| Raw mail |

Re: Lidar based 3d SLAM in sparse feature environment

|

Administrator

4446 posts

|

Hi,

The initial pose in SLAM mode is based on the first odometry topic received. icp_odometry would normally starts at (0,0,0) (0,0,0) or "initial_pose" if set, and reset_odom_to_pose service can be used to reset odom at at particular position. I didn't verified this, but using guess_frame_id at the same time should not matter. cheers, Mathieu |

Reply |

Threaded

Open this post in threaded view

|

More ♦

♦

| Loading... |

| Reply to author |

| Edit post |

| Move post |

| Delete this post |

| Delete this post and replies |

| Change post date |

| Print post |

| Permalink |

| Raw mail |

Re: Lidar based 3d SLAM in sparse feature environment

|

10 posts

|

Hi Mathieu,

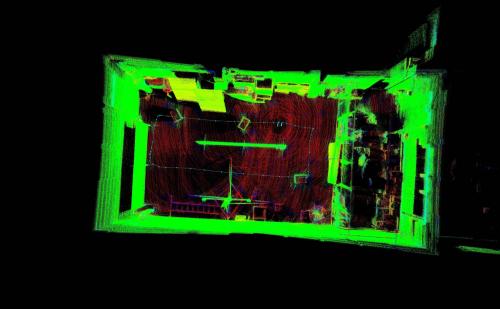

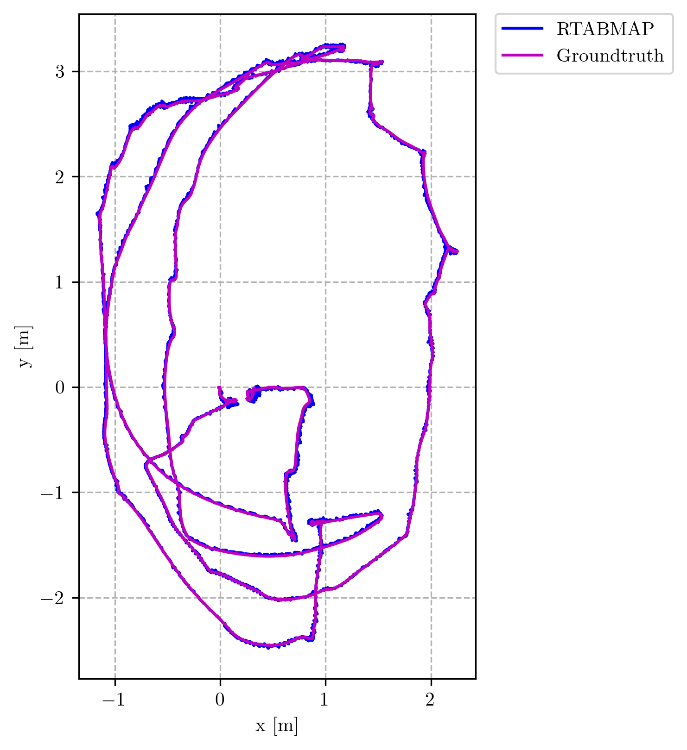

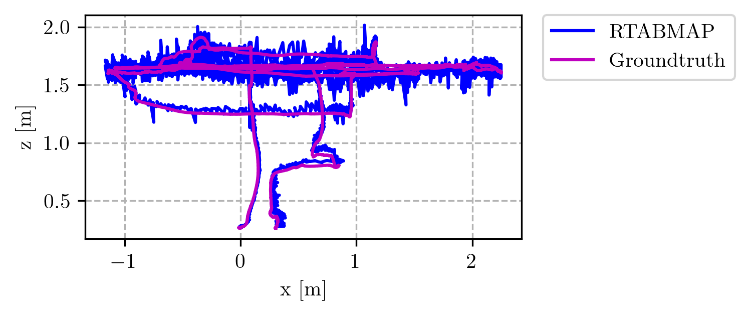

sorry for not posting any major updates for a while. Our team participated in a drone competition last week and achieved really good results. SLAM via RTABMAP worked quite well for the environment as we made two major changes to our setup. We tilted the LIDAR up and the camera (Intel Realsense) down and then merged the PointClouds before feeding them into RTABMAP. This allowed us to always see the floor solving our Z issues.  Again many thanks for all the help. Cheers, Gilbert |

Reply |

Threaded

Open this post in threaded view

|

More ♦

♦

| Loading... |

| Reply to author |

| Edit post |

| Move post |

| Delete this post |

| Delete this post and replies |

| Change post date |

| Print post |

| Permalink |

| Raw mail |

Re: Lidar based 3d SLAM in sparse feature environment

|

Administrator

4446 posts

|

Hi Gilbert,

Great to hear that you got satisfied results! Mathieu |

«

Return to Official RTAB-Map Forum

|

632 views

| Free forum by Nabble | Edit this page |