Livox Avia, + Depth cameras and organized point clouds.


Hi, I'm interested in getting organized/structured point clouds, as unorganized/unstructured point clouds are problematic for reconstruction: you need to compute normals, etc.

I have a Livox Avia, with the possibility of using a RealSense R200 or D435i, or an OAK-D or OAK-D Pro W Dev (150° FOV, global shutter). The D435i is the one actually installed, so it is probably better to make it work with that one first. If possible, I would like to get organized point clouds from the lidar and the camera, colorized with the RGB camera stream.

Right now, I have everything synced by nPTP. I have the camera/lidar extrinsics as a 4x4 matrix, which I don't know how to convert to TF without help, as I don't know maths or programming. I have the internal Livox IMU delay, but I can get the D435i's if needed (maybe fusing them is better?). I can get external odometry, synced and quite robust but without loop closure. I can get an unorganized colored PointCloud2 from another node (the same one that gives the odometry). I can add RTK via u-blox M8T + RTKLIB, or PPK, if needed.

With this info, can you tell me if I can get organized point clouds? Maybe by passing the laser cloud to depth with the rtabmap pointcloud-to-depth node and reprojecting it? I tried but got nothing; I am probably doing something wrong, as I haven't yet read how to use that node.

Thanks. A general idea of how to do it is enough; I will find out how to do it, or ask if I get stuck. I don't want to take much of your time.

In another post here about the Livox Avia, you asked the person how he got the Avia/camera extrinsics as a 4x4 matrix, but you didn't get an answer. The way to do it is with this: https://github.com/hku-mars/livox_camera_calib They have another node to get the IMU extrinsics and delay. I hope that helps.

Administrator

I assume your 4x4 matrix looks like

[R00 R01 R02 Tx]
[R10 R11 R12 Ty]
[R20 R21 R22 Tz]
[0 0 0 1]

You can find some examples on the internet of how to convert the rotation matrix (R3x3) to a quaternion or Euler angles. You can then use a static_transform_publisher to publish the transform (note that you already have the translation part Tx-Ty-Tz).

What do you mean by "organized pointclouds"? Do you want an organized 3D map (which I am not sure is possible), or that for each RGB frame you generate the corresponding depth image from the lidar data? There are some options in rtabmap to color a lidar point cloud or to generate a depth image from lidar registered to the RGB camera.

cheers,
Mathieu
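As a rough illustration of the conversion described above, here is a minimal Python sketch that splits a 4x4 matrix into its translation and a quaternion and formats them in the `x y z qx qy qz qw parent child` order used by static_transform_publisher. The function names are made up for this example, and whether a given calibration matrix maps parent to child or the reverse depends on the calibration tool, so the result should be checked in RVIZ before use.

```python
import math


def rotation_to_quaternion(R):
    """Convert a 3x3 rotation matrix (list of rows) to (qx, qy, qz, qw)."""
    trace = R[0][0] + R[1][1] + R[2][2]
    if trace > 0.0:
        s = 2.0 * math.sqrt(trace + 1.0)
        qw = 0.25 * s
        qx = (R[2][1] - R[1][2]) / s
        qy = (R[0][2] - R[2][0]) / s
        qz = (R[1][0] - R[0][1]) / s
    elif R[0][0] > R[1][1] and R[0][0] > R[2][2]:
        s = 2.0 * math.sqrt(1.0 + R[0][0] - R[1][1] - R[2][2])
        qw = (R[2][1] - R[1][2]) / s
        qx = 0.25 * s
        qy = (R[0][1] + R[1][0]) / s
        qz = (R[0][2] + R[2][0]) / s
    elif R[1][1] > R[2][2]:
        s = 2.0 * math.sqrt(1.0 + R[1][1] - R[0][0] - R[2][2])
        qw = (R[0][2] - R[2][0]) / s
        qx = (R[0][1] + R[1][0]) / s
        qy = 0.25 * s
        qz = (R[1][2] + R[2][1]) / s
    else:
        s = 2.0 * math.sqrt(1.0 + R[2][2] - R[0][0] - R[1][1])
        qw = (R[1][0] - R[0][1]) / s
        qx = (R[0][2] + R[2][0]) / s
        qy = (R[1][2] + R[2][1]) / s
        qz = 0.25 * s
    return qx, qy, qz, qw


def matrix_to_static_tf_args(T, parent, child):
    """Format a 4x4 rigid transform as 'x y z qx qy qz qw parent child'.

    Assumption: T expresses the pose of `child` in the `parent` frame.
    If the calibration tool uses the opposite convention, invert T first.
    """
    R = [row[:3] for row in T[:3]]
    values = [T[0][3], T[1][3], T[2][3]] + list(rotation_to_quaternion(R))
    numbers = " ".join("{:.6f}".format(v) for v in values)
    return "{} {} {}".format(numbers, parent, child)
```

For example, `matrix_to_static_tf_args(T, "livox_frame", "camera_link")` gives a string that can be pasted into the `args` attribute of a static_transform_publisher node; the frame names here are only placeholders.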

Re: Livox Avia, + Depth cameras and organized point clouds.


Hi, thanks. I will work out that part of the matrix; I should know it already.

By organized point clouds I mean the same data/point cloud type that terrestrial survey scanners produce. I think they give a depth matched with pixels and often use .las files, with a different data structure than the point clouds that Livox or Velodyne give. I think it is the same type of point cloud that the R200 and Kinect produce, and maybe it corresponds to what RTAB-Map calls "registered". Thanks!

Administrator

Hi,

In ROS, an organized point cloud is a PointCloud2 where height > 1 and which can contain NaN 3D points. A dense point cloud would be a PointCloud2 with height=1 and no invalid points. Velodyne can generate organized or dense PointCloud2; Ouster generates organized PointCloud2. Organized is the inverse of "is_dense" here: http://docs.ros.org/en/melodic/api/sensor_msgs/html/msg/PointCloud2.html PCL uses the term organized: https://pointclouds.org/documentation/tutorials/basic_structures.html A depth image is then referred to as organized.

For survey scanners, they may generate an RGB image + depth image, or even an organized point cloud with an RGB channel for each point. The LAS format can support this. With RTAB-Map, we can export in LAS for convenience.

If you feed a depth image to rtabmap, you will be able to export RGB + depth and the generated colored point cloud. If you feed only RGB + lidar scan, you won't be able to export a depth image for each RGB frame, only the colored point cloud. However, with some changes to the rtabmap-export tool (with the --cam_projection option), you could save the depth images from lidar data, as they are generated already here (replacing that function with this one for each camera). If exporting depth images corresponding to RGB frames based on lidar data is what you want, I could check to add the option.

cheers,
Mathieu
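To make the organized/dense distinction above concrete, here is a small Python sketch, using plain lists rather than ROS messages, that turns a depth image into an organized cloud: one 3D point per pixel, with NaN where there is no measurement. The pinhole model and all names are assumptions made for illustration; this is not RTAB-Map or PCL code.

```python
import math


def depth_to_organized_cloud(depth, fx, fy, cx, cy):
    """Back-project a depth image (rows of meters) into a height x width grid.

    Pixels with no measurement (0 or NaN) become (NaN, NaN, NaN), so the
    grid layout of the image is preserved in the cloud.
    """
    nan = float("nan")
    cloud = []
    for v, row in enumerate(depth):
        out_row = []
        for u, z in enumerate(row):
            if math.isnan(z) or z <= 0.0:
                out_row.append((nan, nan, nan))
            else:
                out_row.append(((u - cx) * z / fx, (v - cy) * z / fy, z))
        cloud.append(out_row)
    return cloud


def describe(cloud):
    """Report the properties discussed above for a grid-shaped cloud."""
    height = len(cloud)
    width = len(cloud[0])
    has_invalid = any(math.isnan(p[2]) for row in cloud for p in row)
    return {
        "height": height,
        "width": width,
        "organized": height > 1,        # grid layout kept
        "is_dense": not has_invalid,    # true only if no NaN points
    }
```

An unorganized cloud would be the same points flattened into a single row (height=1) with the NaN entries dropped, at which point the pixel neighbourhood information is lost.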

Re: Livox Avia, + Depth cameras and organized point clouds.


Thanks so much for your great answer, and for even considering the possibility of adding depth+RGB from lidar point clouds.

I will try to sell you on why I think it would be useful to add such a feature to RTAB-Map; it is a little long and my English is quite bad.

I think it would always be interesting to have the option for RTAB-Map to produce ordered point clouds (with NaN, as you said) when the lidar doesn't support it natively (this would be great, as Livox lidars are cheaper), and to get depth+RGB from lidar point clouds, saved with an RGB channel per pixel in LAS format. I am not sure whether both things can be done at the same time and are compatible with each other, so that the depth data would come from an ordered point cloud and the RGB data would be added later.

I think it would add new functionality to RTAB-Map, and with it to ROS (which is now mainly used for mapping for navigation): RTAB-Map could be used to build a large-scale 3D scanner whose data would be supported by RealityCapture or Metashape, which can even fuse such ordered lidar point clouds with photogrammetry. Examples of use are 3D modeling, 3D asset creation for video games (e.g. Unreal Engine), and easy creation of BIM maps. Even just getting ordered point clouds would make surface reconstruction much easier, as I understand you would get normals that make meshing easier.

I think it could give cheap access to this kind of data for academic and hobby use; not many people have access to a terrestrial scanner, and not many people share such scans/point clouds for learning. Right now, importing a PCL dense PointCloud2 into CloudCompare/MeshLab, computing normals (which often are not computed properly) and meshing it with decent quality is a very hard job. Better meshing thanks to the above could be used for 3D model creation, for example to better scan a big sculpture or a facade, or for heritage conservation.

A great use for robotics would be making maps for mesh-based navigation much more easily with the move_base_flex and mesh_navigation packages: https://github.com/uos/mesh_navigation This would help to make reliable robots with less computing power, again helping academic and hobby users. Those are the uses that I, at least, would give it.

Another use in robotics would be simulation: with RTAB-Map providing such data, a "cheap" rig could very easily make nice maps for simulation in Autoware, Gazebo, Isaac Sim...

I have a handheld Livox system with the RealSense D435 camera, lidar, IMU and optional GNSS positioning; everything is synchronized and calibrated, and I have a quite powerful computer (i7). I would be glad to run any test you need so that adding these features takes you as little effort as possible; to be honest, I cannot help with much more.

Administrator

Hi,

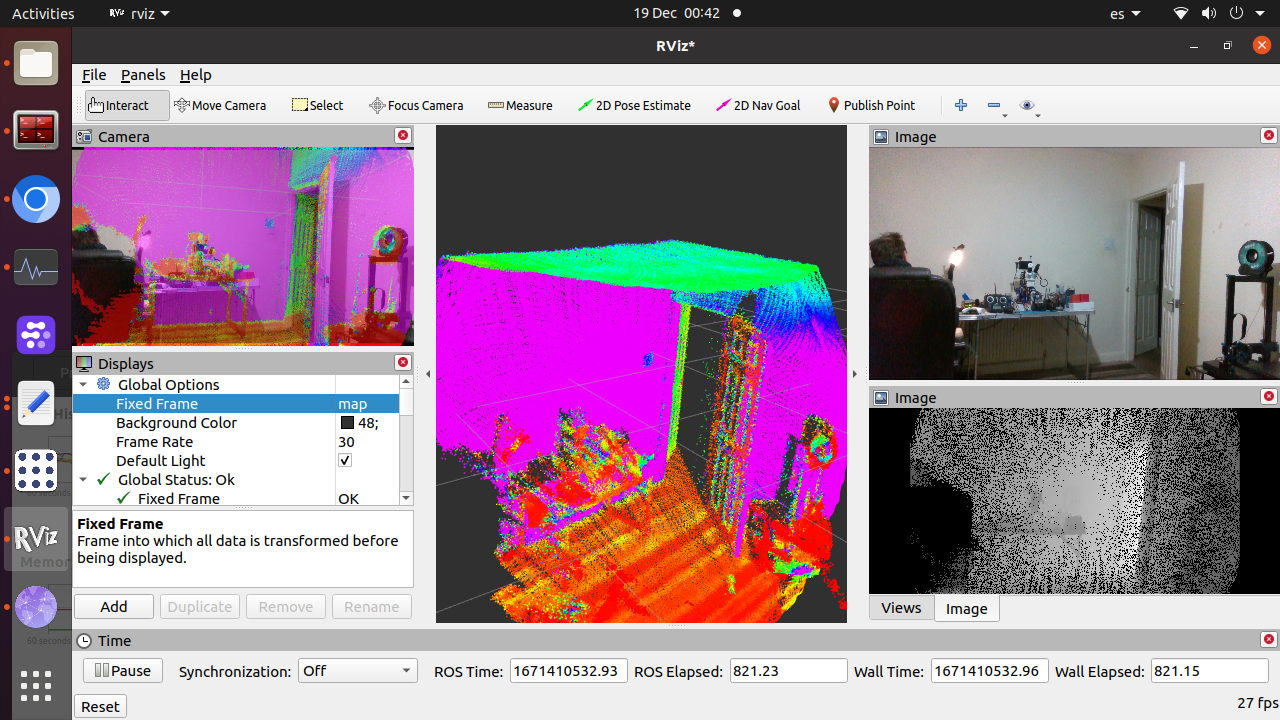

Thanks for sharing all those applications. Some of those ideas I already did with RTAB-Map, but the options are not always obvious.

I did what I suggested in my previous post in that commit. I created a launch file for testing it here (velodyne+d435i) with this setup. In this example, I use only the IMU and RGB camera of the D435i.

From the resulting velodyne+RGB database, we can export in various formats, but I'll show LAS and RGB+depth frames + lidar poses + camera poses:

rtabmap-export --las --cam_projection --scan --images --poses_camera --poses rtabmap.db

Output LAS:

Example of one RGB frame with the corresponding depth image generated from lidar reprojection:
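The idea of generating a depth image from lidar reprojection can be sketched in a few lines of Python. This is only an illustration of the principle under a pinhole model, not the code behind `--cam_projection`: it assumes the lidar points are already transformed into the camera optical frame (x right, y down, z forward), and all names are hypothetical.

```python
import math


def project_to_depth(points, fx, fy, cx, cy, width, height):
    """Project 3D points (camera optical frame, meters) into a depth image.

    Returns a list of rows; 0.0 marks pixels that no point projects to.
    When several points fall on the same pixel, the nearest one is kept.
    """
    depth = [[0.0] * width for _ in range(height)]
    for x, y, z in points:
        if z <= 0.0:
            continue  # behind the camera
        u = int(math.floor(fx * x / z + cx + 0.5))
        v = int(math.floor(fy * y / z + cy + 0.5))
        if 0 <= u < width and 0 <= v < height:
            if depth[v][u] == 0.0 or z < depth[v][u]:
                depth[v][u] = z
    return depth
```

Because a lidar scan is much sparser than the image, most pixels stay empty after a single scan; coverage improves when several scans are accumulated before projecting.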


Re: Livox Avia, + Depth cameras and organized point clouds.


Oh, that is amazing, thank you so much.

From there I will try to make it work with the Livox Avia, with rtabmap odometry and external odometry, and with internal and external IMUs. It will take me a while, but I will share anything positive I get. I will try with the OAK-D too. I hope some people can use this new RTAB-Map possibility. Thanks!

Re: Livox Avia, + Depth cameras and organized point clouds.


Hi, I'm trying to follow your .launch example. I'm using the D435i and its IMU, and I also have the Avia's IMU available. In your example you have camera/imu as the IMU topic, as I do. But in my case this section is not working:

<!-- IMU orientation estimation and publish tf accordingly to os_sensor frame -->
<node pkg="nodelet" type="nodelet" name="imu_nodelet_manager" args="manager">
  <remap from="imu/data_raw" to="$(arg imu_topic)"/>
  <remap from="imu/data" to="$(arg imu_topic)/filtered"/>
</node>
<node pkg="nodelet" type="nodelet" name="imu_filter" args="load imu_filter_madgwick/ImuFilterNodelet imu_nodelet_manager">
  <param name="use_mag" value="false"/>
  <param name="world_frame" value="enu"/>
  <param name="publish_tf" value="false"/>
</node>
<node pkg="nodelet" type="nodelet" name="imu_to_tf" args="load rtabmap_ros/imu_to_tf imu_nodelet_manager">
  <remap from="imu/data" to="$(arg imu_topic)/filtered"/>
  <param name="fixed_frame_id" value="$(arg frame_id)_stabilized"/>
  <param name="base_frame_id" value="$(arg frame_id)"/>
</node>

<!-- Lidar Deskewing -->
<node if="$(arg deskewing)" pkg="nodelet" type="nodelet" name="lidar_deskewing" args="standalone rtabmap_ros/lidar_deskewing" output="screen">
  <param name="wait_for_transform" value="0.01"/>
  <param name="fixed_frame_id" value="$(arg frame_id)_stabilized"/>
  <param name="slerp" value="$(arg slerp)"/>
  <remap from="input_cloud" to="$(arg scan_topic)"/>
</node>

I receive errors as follows:

[ERROR] (2022-12-13 21:49:16.308) CoreWrapper.cpp:2385::imuAsyncCallback() IMU received doesn't have orientation set, it is ignored.

[ERROR] (2022-12-13 21:49:16.313) CoreWrapper.cpp:2385::imuAsyncCallback() IMU received doesn't have orientation set, it is ignored.

[ERROR] (2022-12-13 21:49:16.318) CoreWrapper.cpp:2385::imuAsyncCallback() IMU received doesn't have orientation set, it is ignored.

[ERROR] (2022-12-13 21:49:16.324) CoreWrapper.cpp:2385::imuAsyncCallback() IMU received doesn't have orientation set, it is ignored.

[ERROR] (2022-12-13 21:49:16.328) CoreWrapper.cpp:2385::imuAsyncCallback() IMU received doesn't have orientation set, it is ignored.

[ WARN] [1670968156.333317291]: /rtabmap/rtabmapviz: Did not receive data since 5 seconds! Make sure the input topics are published ("$ rostopic hz my_topic") and the timestamps in their header are set. If topics are coming from different computers, make sure the clocks of the computers are synchronized ("ntpdate"). Parameter "approx_sync" is false, which means that input topics should have all the exact timestamp for the callback to be called.

/rtabmap/rtabmapviz subscribed to (exact sync):

/rtabmap/odom_filtered_input_scan \

/rtabmap/odom_info

And this

[ WARN] [1670968155.052019801]: /rtabmap/rtabmap: Did not receive data since 5 seconds! Make sure the input topics are published ("$ rostopic hz my_topic") and the timestamps in their header are set. If topics are coming from different computers, make sure the clocks of the computers are synchronized ("ntpdate"). If topics are not published at the same rate, you could increase "queue_size" parameter (current=10).

/rtabmap/rtabmap subscribed to (approx sync):

/rtabmap/odom \

/camera/color/image_raw \

/camera/color/camera_info \

/rtabmap/assembled_cloud

I had other issues, but I have been removing the errors little by little.

On the lidar driver side, this message appears when I run rtabmap in another window:

[ERROR] [1670968066.774769420]: Client [/lidar_deskewing] wants topic /livox/lidar to have datatype/md5sum [sensor_msgs/PointCloud2/1158d486dd51d683ce2f1be655c3c181], but our version has [livox_ros_driver/CustomMsg/e4d6829bdfe657cb6c21a746c86b21a6]. Dropping connection.

[ERROR] [1670968144.717018573]: Client [/lidar_deskewing] wants topic /livox/lidar to have datatype/md5sum [sensor_msgs/PointCloud2/1158d486dd51d683ce2f1be655c3c181], but our version has [livox_ros_driver/CustomMsg/e4d6829bdfe657cb6c21a746c86b21a6]. Dropping connection.

Administrator

Hi,

For the CoreWrapper error, which topic did you set on the rtabmap node? See https://github.com/introlab/rtabmap_ros/blob/7b2c6354c63f1dff7872406ef88907d0c722c0df/launch/tests/test_velodyne_d435i_deskewing.launch#L144 You can rostopic echo the IMU topic you linked and see if there is a quaternion; otherwise, debug upstream.

For the warning listing

/rtabmap/odom \
/camera/color/image_raw \
/camera/color/camera_info \
/rtabmap/assembled_cloud

check if those topics are published; if not, check the upstream nodes.

For the last error, from lidar_deskewing, you are not feeding a PointCloud2 topic to it. Note that lidar_deskewing has been tested only with ring-like lidars such as Ouster and Velodyne. It may not work with other kinds of lidar.

cheers,
Mathieu

Re: Livox Avia, + Depth cameras and organized point clouds.


Regarding your question, I left everything IMU-related at the default, /camera/imu; I didn't modify it, as I use a D435 as you do.

To be honest, I don't understand how the IMU part of the launch file works, as there is only one input, camera/imu, but two remaps: imu/data seems to feed the odometry, and imu/data_raw seems to feed the Madgwick filter node. I think maybe my T265 published both IMU data and raw IMU data, but my D435i doesn't publish any raw data, so I think I have an IMU topic missing, as I have no filtered or raw IMU data published. Should I add a second IMU topic from an external IMU or the lidar's IMU? Should I substitute $(arg imu_topic)/filtered with Livox/IMU or mavros/IMU, for example? I hope what I said is understandable.

Administrator

In that example, the IMU output of the D435i contains only acceleration and gyro data. rtabmap needs orientation, so we need to compute it. The orientation can be computed with the madgwick node.

When you launch the D435i camera node (like in that launch file), you get the D435i IMU topic on `/camera/imu`. We feed `/camera/imu` to the madgwick filter as its input `imu/data_raw` (remap). The madgwick output `imu/data` is remapped to `/camera/imu/filtered`. `/camera/imu/filtered` is fed to the imu_to_tf node, which creates a stabilized TF. That TF is used for lidar deskewing, but also for the icp_odometry guess transform. `/camera/imu/filtered` is also fed to the rtabmap node for gravity constraints.
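A quick way to tell the raw topic from the filtered one is to look at the orientation quaternion of a message (for example from `rostopic echo`). The sketch below is a plain-Python check on the four quaternion values. It rests on an assumption, not on the rtabmap source: that the "IMU received doesn't have orientation set" error corresponds to an all-zero quaternion, which is what a driver publishing only accelerometer and gyro data typically leaves in that field.

```python
import math


def imu_orientation_status(qx, qy, qz, qw, tol=1e-3):
    """Classify the orientation field of an IMU message.

    Returns "unset" for an all-zero quaternion (raw accel/gyro only),
    "not normalized" if the norm is far from 1, and "ok" otherwise.
    """
    norm = math.sqrt(qx * qx + qy * qy + qz * qz + qw * qw)
    if norm == 0.0:
        return "unset"
    if abs(norm - 1.0) > tol:
        return "not normalized"
    return "ok"
```

Under that assumption, `/camera/imu` would report "unset" and `/camera/imu/filtered` would report "ok" once the madgwick filter is running; if the filtered topic is not published at all, the problem is upstream of rtabmap.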

Re: Livox Avia, + Depth cameras and organized point clouds.


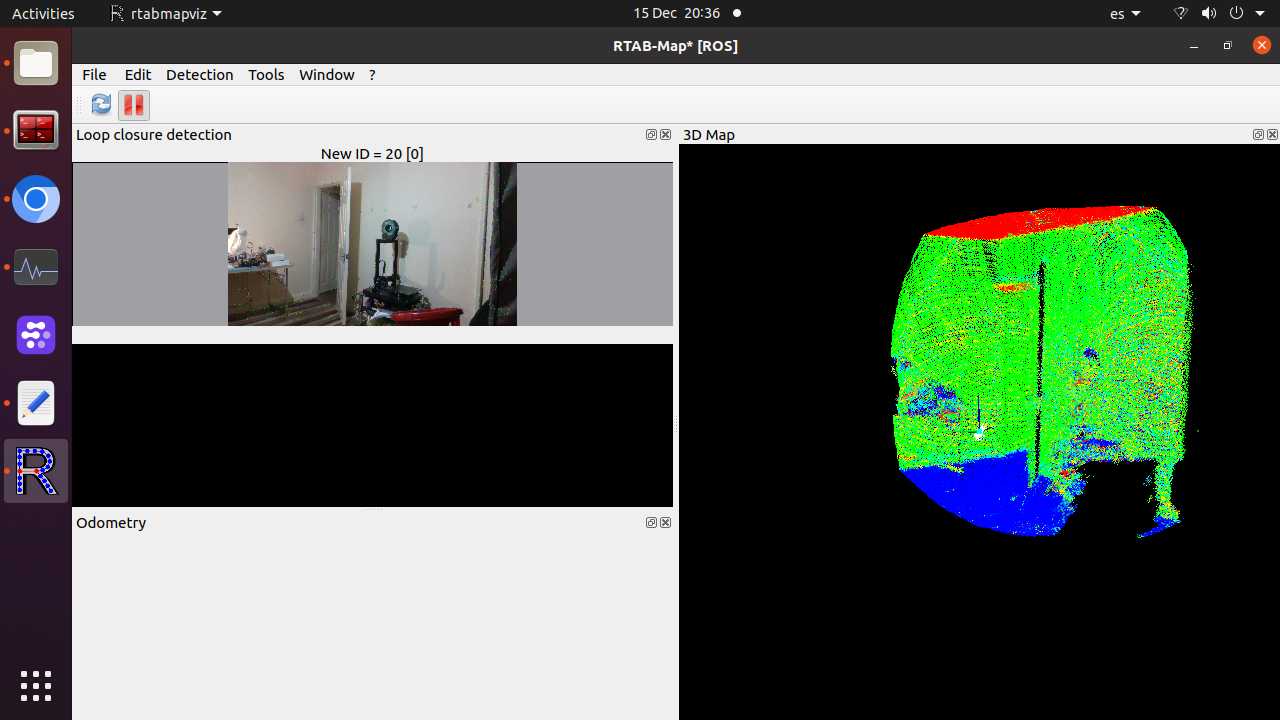

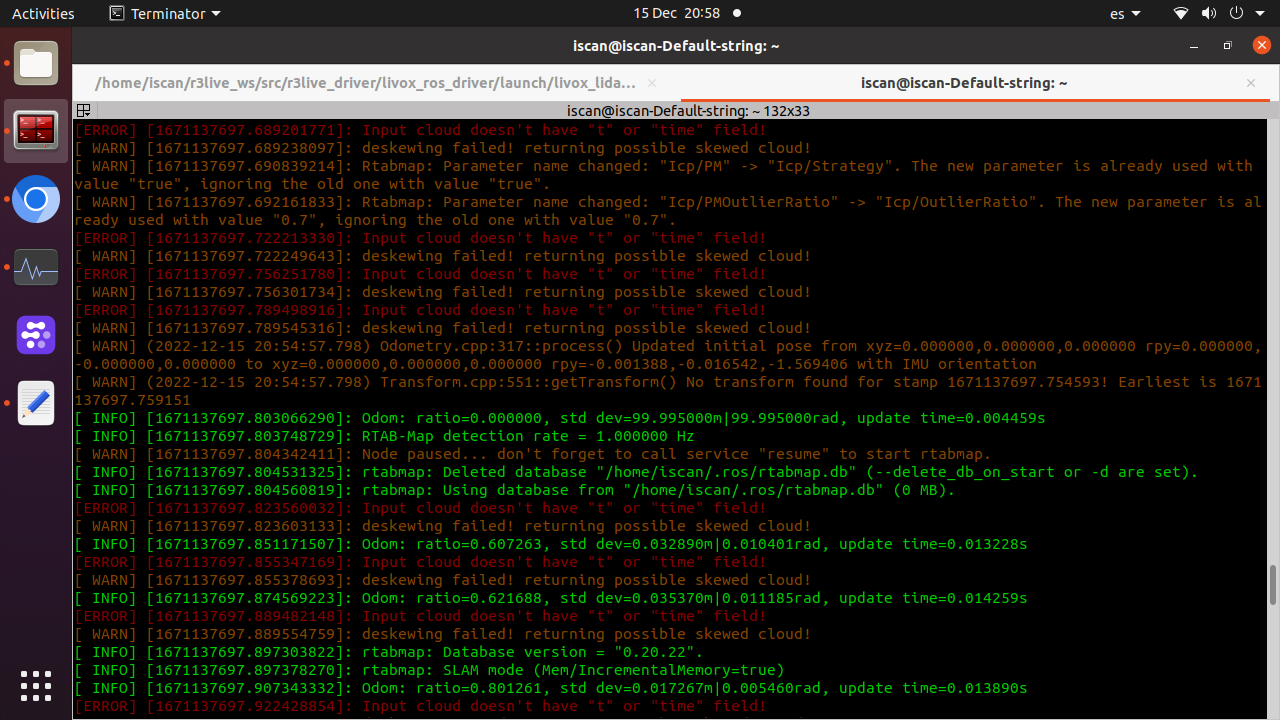

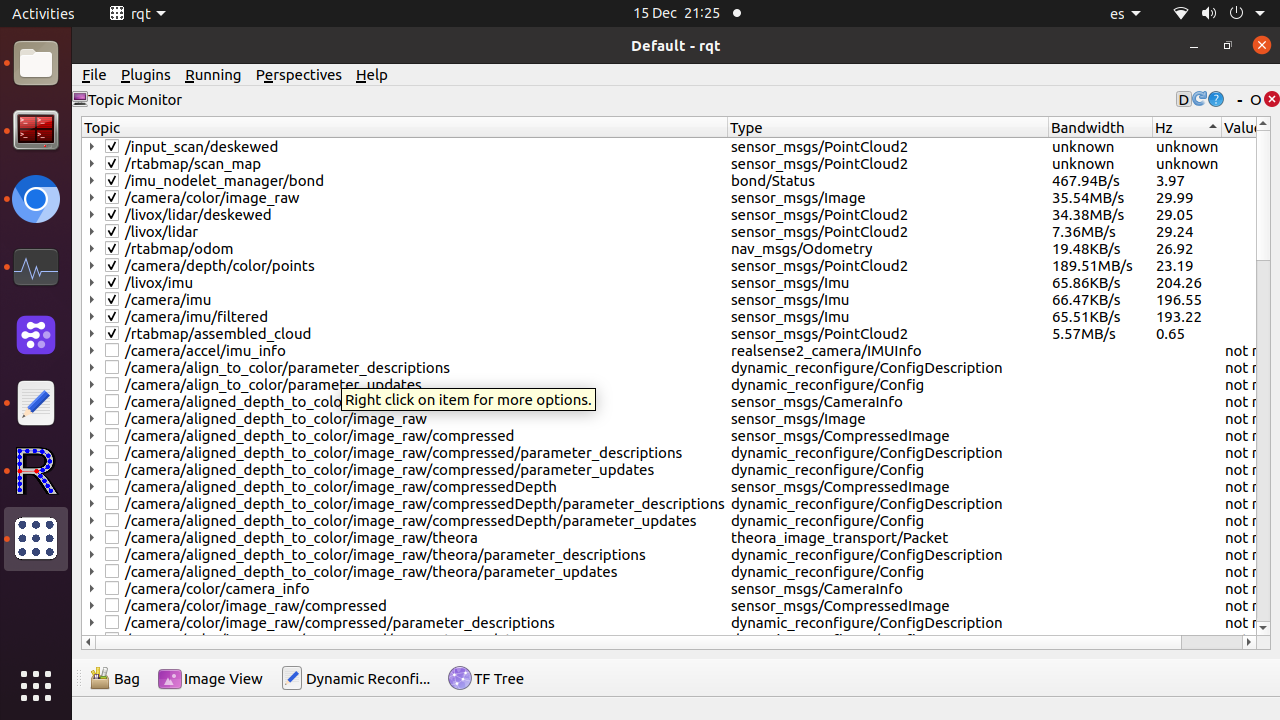

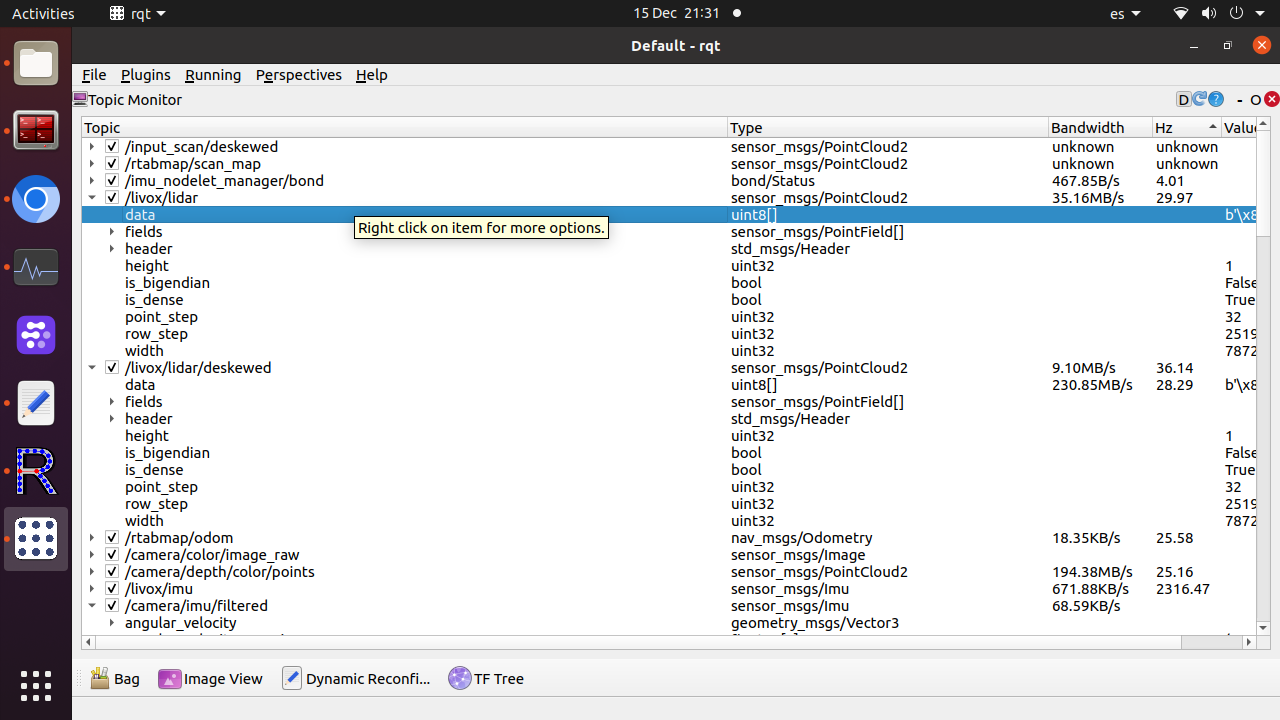

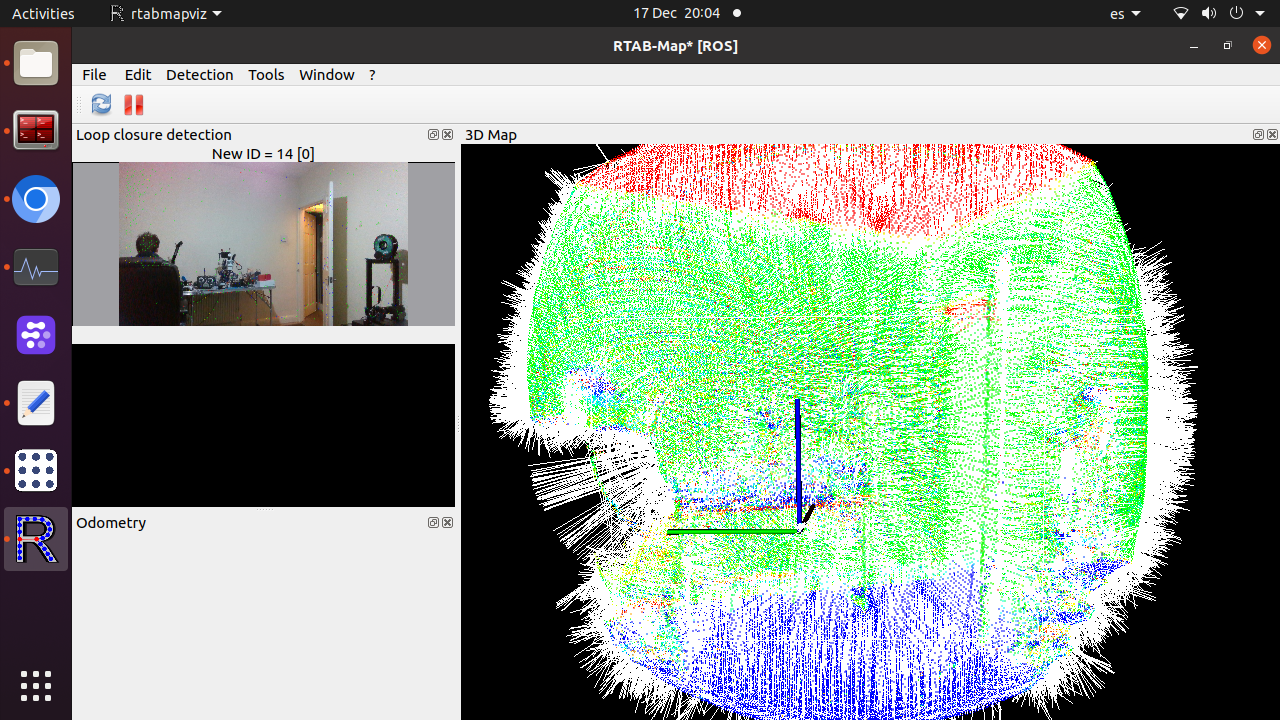

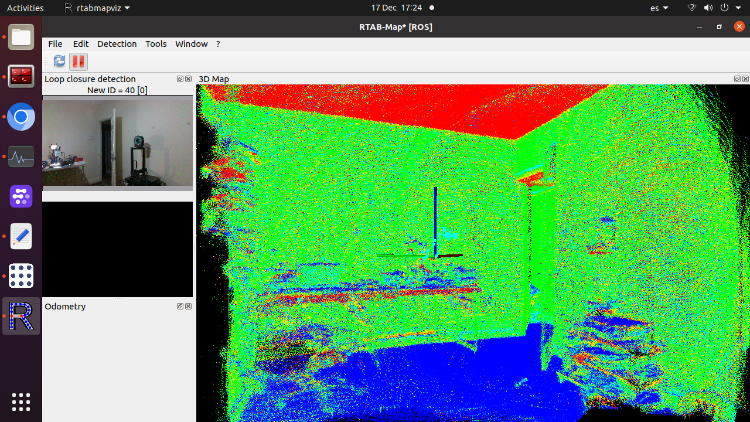

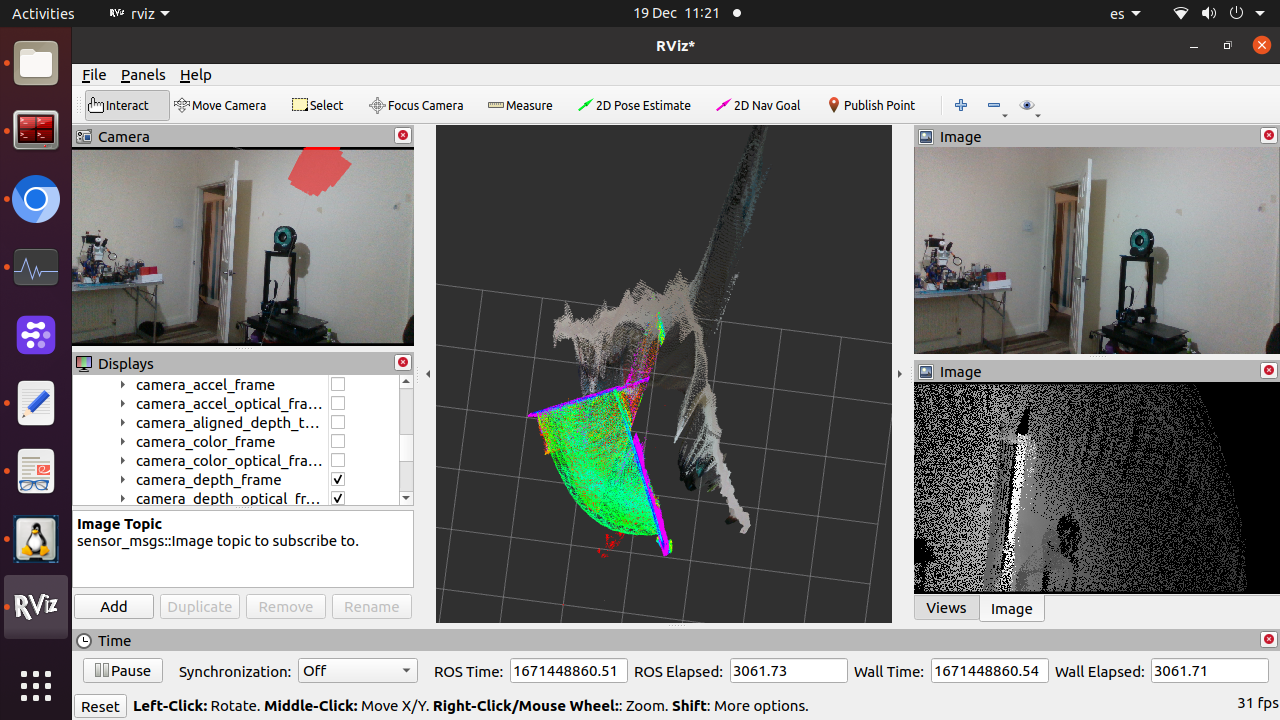

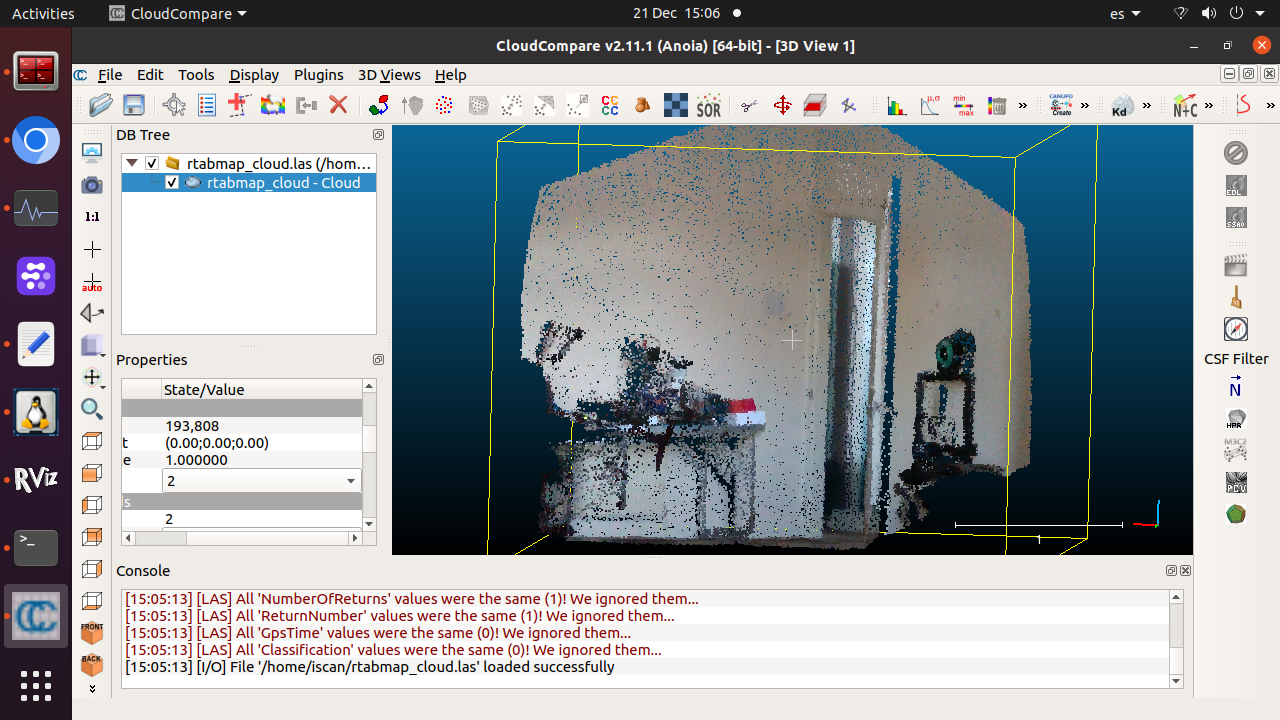

Well, I could fix the PointCloud2 format and the IMU issues were solved. It started to map quite well for a first test in a small room, and it looks like loop closure is working too, as seen in the images, but I am not sure it is really doing something, as I think the VO node is not working.

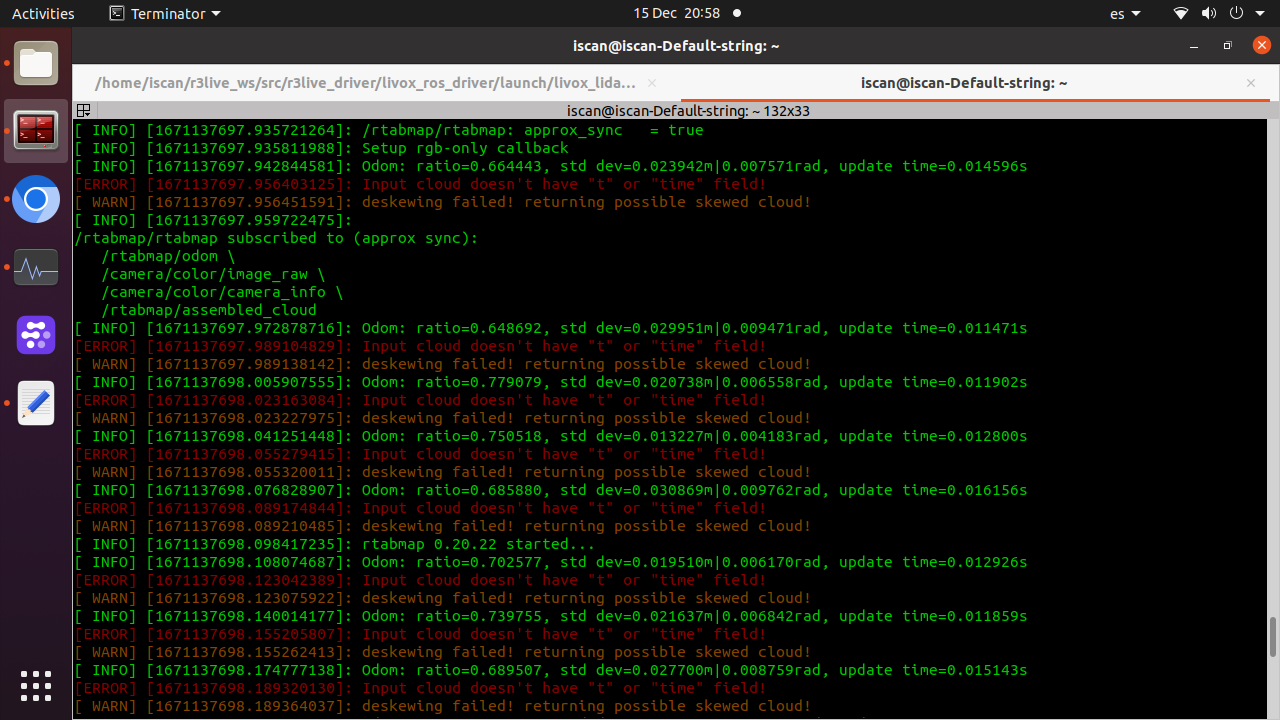

My current issues/warnings are the ones seen in the pictures. I'm getting a lidar/livox/deskewed topic; it is published at 30 Hz as expected and I can see the cloud in rviz/rtabmapviz. What I see is that deskewing may not be working even though the topic is being published, and maybe one of the errors points to that. If the deskewing function produced ordered point clouds, I would expect to read is_dense=false in the topic, allowing NaN points, as explained on the PCL site and in this link: https://github.com/IntelRealSense/realsense-ros/issues/1563. Here are images of the relevant topics, with their rates and is_dense set to true.

By the way, I just found, hidden in the Livox GitHub repository, a deskewing tool that publishes /livox_unidistort: https://github.com/Livox-SDK/livox_cloud_undistortion. To be honest, I don't know if undistorting a point cloud is the same as deskewing, and whether both are the same as producing an ordered point cloud.

Regarding ordered point clouds, what I found is that rtabmapviz, when exporting the map to .ply, gives you the option to save the point cloud as organized, which I think is what I would like to get. I am not sure how to check whether it is the same ordered point cloud I referred to in my first message, but I noticed that meshing it in CloudCompare didn't require computing the normals, which is nice and a sign that maybe this is all I need (with RGB colors). I am not sure whether the deskewing node does that, or whether it is a required step to be able to save ordered point clouds. Deskewing and lidar point cloud undistortion are new to me, and ordered point clouds almost as much.

I'm not getting an RGB point cloud yet, but it is starting to look promising. I now even have the option to send the deskewed point cloud to rtabmap if there is an advantage in doing so, supposing your node and that tool do the same thing. For the moment, I think it is nice to discover that Livox works with rtabmap. Of course, if you are interested in testing anything, even if it is not related to my question, just let me know; I will be happy to test anything that raises your curiosity.

Edit: as an update, the Livox deskew package doesn't work with RTAB-Map because of timestamps: it uses lidar time, while the modified driver uses ROS/system time, which doesn't work with the deskew package. So it looks like a wall for someone like me who doesn't know how to code. Even though the point cloud now works with RTAB-Map, it is still missing the per-point time field (the topic itself has a good timestamp), which is what the t/time error is about. Velodyne and Ouster provide such a time field; Livox doesn't. Still, there is other software that deskews, and some allows modifying the point cloud time, so as a possible workaround I will try to copy their approach or use their deskewed PointCloud2 as input for RTAB-Map. LIO-Livox and LIO-SAM do that, but they are for the Livox Horizon and compatibility with the Avia is not clear.

Administrator

Can you share a rosbag of the Livox raw point cloud, with the IMU topic you are using (and the /tf and /tf_static topics)? I don't own a Livox lidar, so I cannot really say if it could be compatible with my lidar_deskewing node. Yes, the https://github.com/Livox-SDK/livox_cloud_undistortion package seems to do lidar deskewing, so you may switch to it. I see a lot of errors saying that the input point cloud doesn't have "time" or "t" fields, so the returned point cloud is exactly the same as the input (skewed); lidar_deskewing is therefore not working for this setup.

Deskewing or undistorting a lidar scan is mostly relevant for moving lidars (scanning while moving). For a survey lidar, you don't need deskewing, as it is fixed during a scan.
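To show why the per-point time field matters, here is a deliberately simplified 2D deskewing sketch in Python. It is not the lidar_deskewing implementation: it assumes the sensor moves with a constant planar velocity and yaw rate during the scan, and that each point carries its time offset `t` (seconds since the start of the scan). All names are invented for the example.

```python
import math


def deskew_2d(points, vx, vy, yaw_rate):
    """Express every point in the sensor frame at the start of the scan.

    points: list of (x, y, t), with x, y measured in the sensor frame at
            time t, and t the offset from the scan start in seconds.
    vx, vy: sensor velocity in the start-of-scan frame (m/s), assumed constant.
    yaw_rate: sensor rotation rate (rad/s), assumed constant.
    """
    out = []
    for x, y, t in points:
        yaw = yaw_rate * t
        c, s = math.cos(yaw), math.sin(yaw)
        # Rotate by the heading reached at time t, then add the distance moved.
        out.append((c * x - s * y + vx * t, s * x + c * y + vy * t))
    return out
```

Without a `t` value per point there is nothing to interpolate against, so the only possible output is the input cloud unchanged, which matches the behaviour described above. With a fixed sensor (zero velocity and yaw rate) the function is also the identity, which is the survey-scanner case.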

Re: Livox Avia, + Depth cameras and organized point clouds.


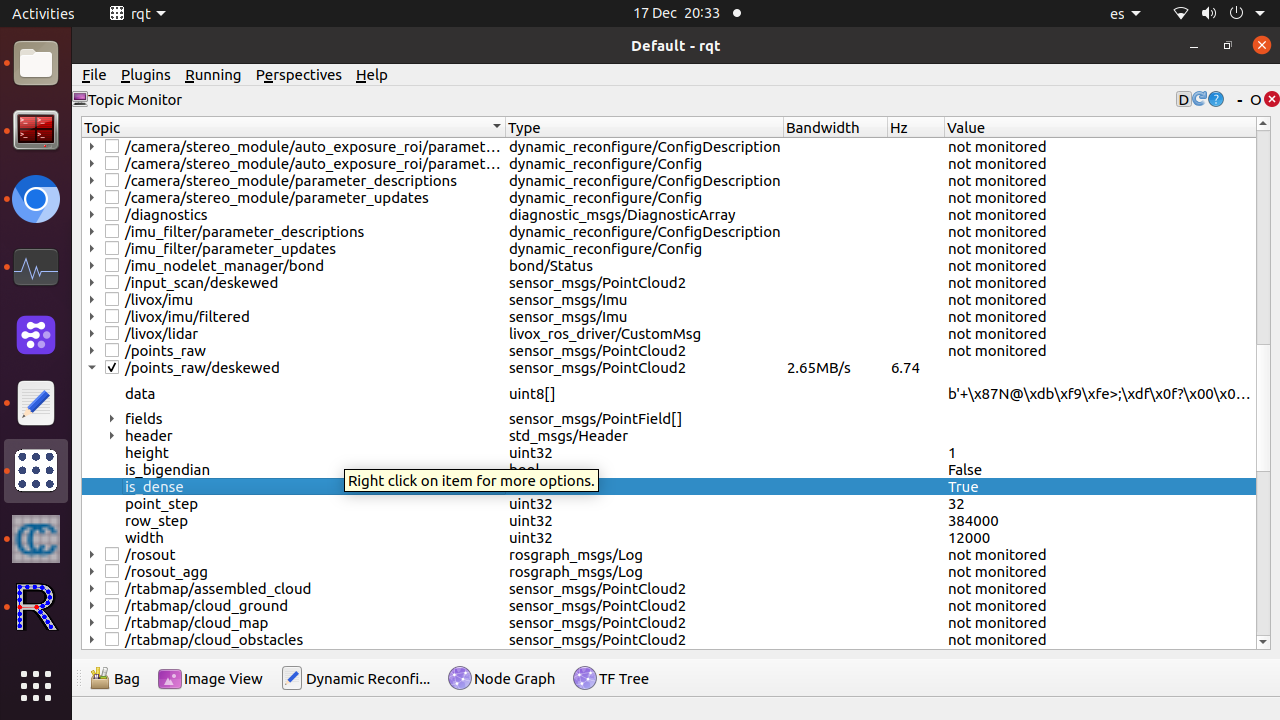

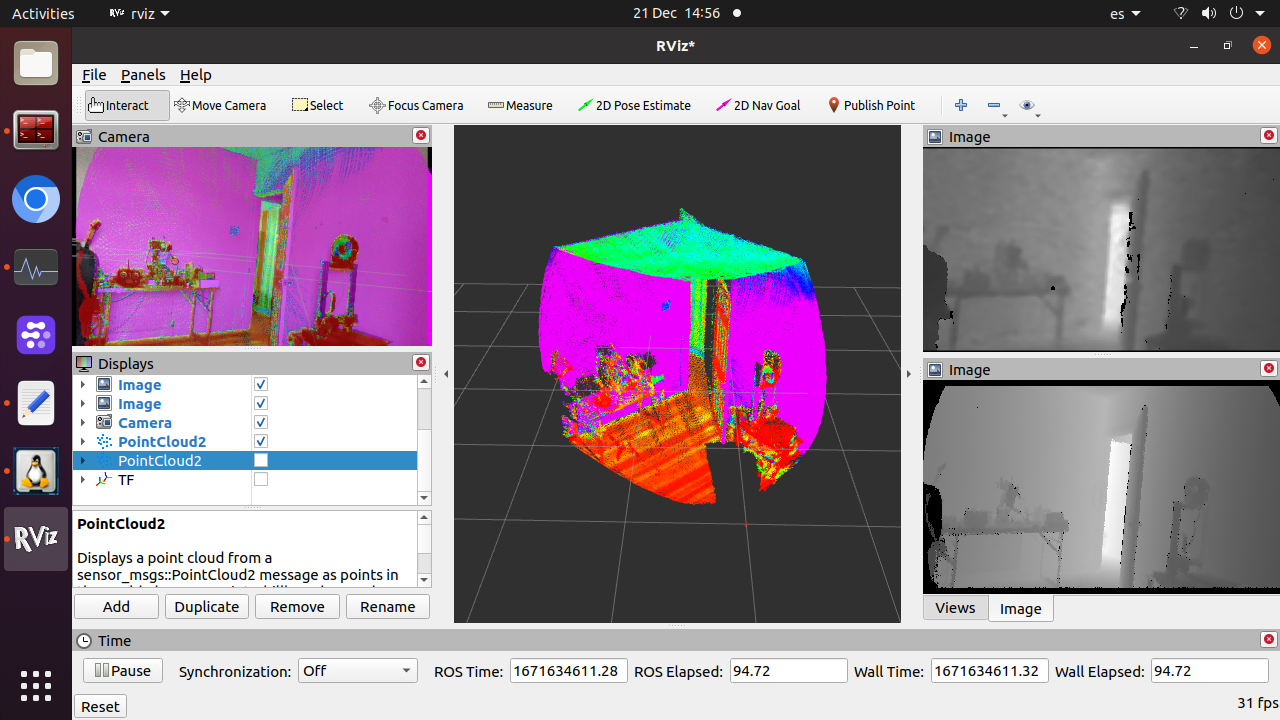

Hi, some news, quite good: I got everything working without errors. I got the lidar to send the data, time field and timestamp as rtabmap needs. The Livox deskewing package just won't work in my setup.

I get all topics and deskewed point clouds; they have normals and I can mesh them. I used camera projection from the rviz export options in .PLY. What I see when I export and create the cloud from the laser scan is that the option "save as organized point cloud" is grayed out. I guess that is my next step, and it is related to what you said: "A depth image is then referred as organized. For survey scanner, they may generate RGB image + Depth image, or even an organized point cloud with RGB channel for each point. The LAS format can support this. With RTAB-Map, we can export in LAS for convenience. If you feed a depth image to rtabmap, you will be able to export RGB + Depth and the generated colored point cloud. If you feed only RGB + Lidar scan, you won't be able to export depth image for each RGB frame, only the colored point cloud. However, with some changes to rtabmap-export tool (with --cam_projection option), you could save the depth images from lidar data, as they are generated already here (replacing that function with this one for each camera)."

The issues were fixed; do you still want the .bag file? Would you prefer outdoors? Livox lidars are quite bad indoors, but with rtabmap it works better than with FAST-LIO2; maybe I need to tune both to be able to compare. I should do a lot of tests. For now I need to find a workflow and learn to optimize and set up rtabmap, add some nodes such as depth from laser scan and VO, and test external odometry from ORB-SLAM or FAST-LIO, for example. I am not sure how it performs in precision and CPU/memory use; to me it looks OK. Deskewing looks like quite a heavy task, maybe like loop closure. Without rtabmapviz it runs considerably lighter.

A possible problem is that my camera/lidar calibration is, I think, against camera_colour_frame (or maybe color_optical_frame?), but the TF publisher in rtabmap's launch file publishes the transform (from the 4x4 matrix) to the camera_link frame, so I think that TF should be camera_link frame - camera_color_frame.

I also added a TF with the lidar/lidar-IMU extrinsics; I am not sure if that is better or even necessary, but I think so, as it is not published by the Livox SDK or driver. Thanks for all your help. I will upload the .bag if you still need it; I made it work with both the Livox IMU and the D435 IMU, so which one should I use in your bag?

Re: Livox Avia, + Depth cameras and organized point clouds.


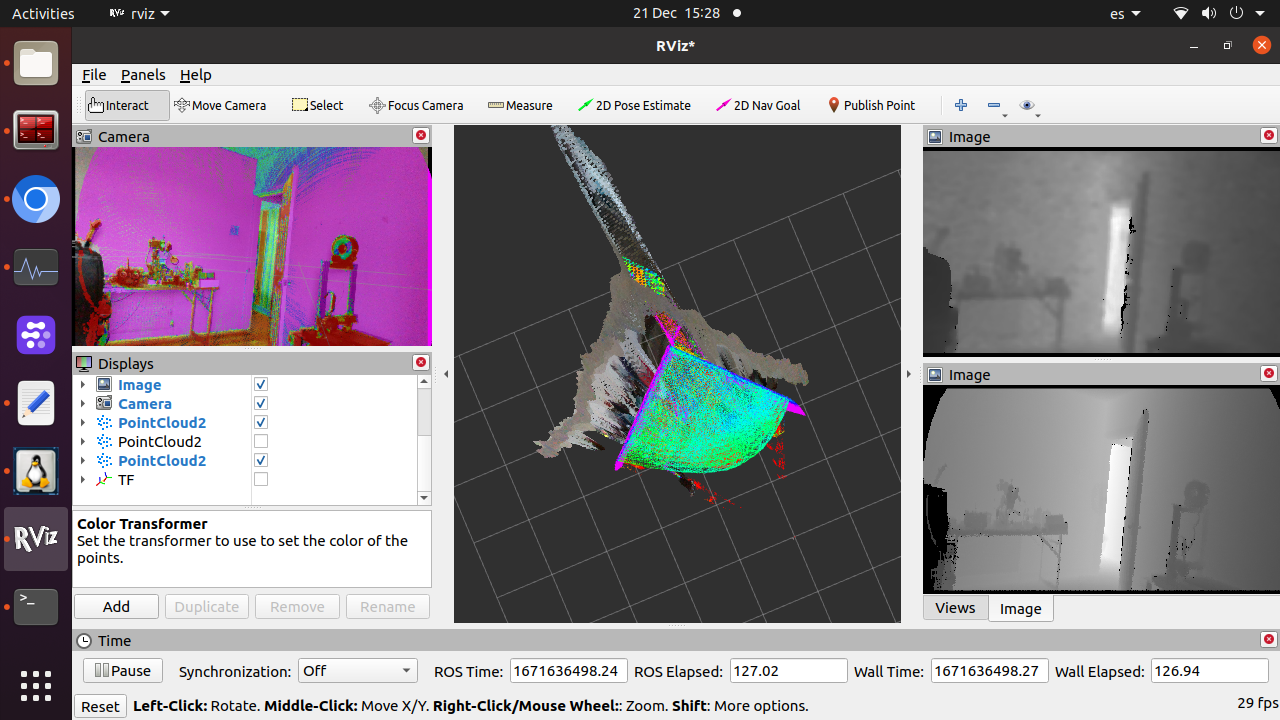

Hi, continuing with the good news, I got the laser_scan to depth node working, so now I can convert the deskewed point cloud to depth, and I should be able to get organized point clouds from that depth. The export command to .las worked with the deskewed point clouds; I haven't tried it yet with depth+RGB. I have one last issue: aligning the RGB and D435 point cloud and color image with the lidar point cloud. It is due to my 4x4 matrix conversion, which is wrong. I could make that matrix work in other SLAM packages, though not without issues, as some needed the matrix inverted.

My camera/lidar extrinsic matrix looks like this, and it works in other software, where it is entered as a matrix and there is no need to convert it to TF; that is where my problems start, due to my lack of maths knowledge.

-0.00166874,-0.999969,-0.00768157,-0.013776
-0.00286365,0.0076861,-0.999967,0.0347174
0.999995,-0.00164645,-0.00287661,-0.0972432
0,0,0,1

I take the 3 values on the right as x y z, and I enter the remaining 3x3 matrix in this website: https://www.energid.com/resources/orientation-calculator and here: https://www.andre-gaschler.com/rotationconverter/ I tried every result: I entered the quaternion or angle-axis output of the calculator into the TF and TF2 publishers in the rtabmap launch file

<node unless="$(arg use_sim_time)" pkg="tf" type="static_transform_publisher" name="livox_frame_to_camera_link" args="x y z qx qy qz _frame camera_link 10"/>

or

<node unless="$(arg use_sim_time)" pkg="tf2_ros" type="static_transform_publisher" name="livox_frame_to_camera_link" args="x y z qx qy qz qw livox_frame camera_link 10"/>

I tried replacing camera_link with camera_color_optical_frame, which makes sense as it is the frame of the camera_info topic I used for calibration, but it didn't work. Maybe I'm entering wrong numbers or the wrong format. I'm not able to align the frames, as shown in the picture. Does rtabmap need a special matrix transformation, or does it need to be calculated in a different way than with the online calculator?

Even if I set the camera/lidar transform to 0 0 0 0 0 0, the two point clouds don't fit, which I cannot really understand, but in that case at least the orientation is right or close to right.

I don't expect you to help me with the transformation, as it is not an rtabmap thing, but it would be nice if you could confirm that the way I do it should work. If so, the only origin of this issue I can imagine is that my matrix is relative to color_camera_optical_frame instead of camera_link, as they don't have the same ENU coordinates.

Maybe I should use a specific calibration tool for RTAB-Map that gives direct results, such as Kalibr? Thanks!

Administrator

I suggest adding a TF display in RVIZ. You will better see the actual transform between your camera and lidar frames (and see if it is just an inverted transform, or a missing optical rotation).

From this matrix:

-0.00166874,-0.999969,-0.00768157,-0.013776
-0.00286365,0.0076861,-0.999967,0.0347174
0.999995,-0.00164645,-0.00287661,-0.0972432
0,0,0,1

It means:

x -> z
y -> -x
z -> -y

so it looks like it transforms the ROS base frame (x->forward, y->left, z->up) to the image frame (x->right, y->down, z->forward). In general, this is the optical rotation (converting the image frame into the base frame):

<node pkg="tf2_ros" type="static_transform_publisher" name="camera_link_to_optical" args="0 0 0 -1.57 0 -1.57 camera_link camera_optical_link 10"/>
<node pkg="tf2_ros" type="static_transform_publisher" name="livox_frame_to_camera_link" args="-0.013776 0.0347174 -0.0972432 0 0 0 livox_frame camera_link 10"/>

You should make sure your image topics have the optical frame as frame_id. The optical rotation would be fixed, but the extrinsic calibration between camera and lidar would be included in livox_frame_to_camera_link. Or, like you tried, you can combine the optical rotation and the camera/lidar translation in the same TF, but debug carefully with TF in RVIZ. You can add a Camera display to see if the RGB -> point cloud registration is good (before trying any mapping!).

cheers,
Mathieu
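The two checks suggested above, reading off the axis mapping and testing whether the transform is simply inverted, can be sketched in plain Python using the matrix quoted in this post. The helper names are made up for this example; the matrix values are the ones from the thread.

```python
# Camera/lidar extrinsics as quoted in the thread (rows of a 4x4 matrix).
LIDAR_CAMERA = [
    [-0.00166874, -0.999969, -0.00768157, -0.013776],
    [-0.00286365, 0.0076861, -0.999967, 0.0347174],
    [0.999995, -0.00164645, -0.00287661, -0.0972432],
    [0.0, 0.0, 0.0, 1.0],
]


def axis_mapping(R):
    """For each input axis, report the output axis it mostly maps to."""
    names = "xyz"
    result = []
    for j in range(3):
        column = [R[i][j] for i in range(3)]
        i = max(range(3), key=lambda k: abs(column[k]))
        sign = "-" if column[i] < 0 else ""
        result.append("{}->{}{}".format(names[j], sign, names[i]))
    return result


def invert_rigid(T):
    """Invert a 4x4 rigid transform: rotation R^T, translation -R^T * t."""
    R = [row[:3] for row in T[:3]]
    t = [T[i][3] for i in range(3)]
    Rt = [[R[j][i] for j in range(3)] for i in range(3)]
    ti = [-sum(Rt[i][k] * t[k] for k in range(3)) for i in range(3)]
    return [Rt[0] + [ti[0]], Rt[1] + [ti[1]], Rt[2] + [ti[2]],
            [0.0, 0.0, 0.0, 1.0]]


if __name__ == "__main__":
    print(axis_mapping([row[:3] for row in LIDAR_CAMERA[:3]]))
    for row in invert_rigid(LIDAR_CAMERA):
        print(row)
```

If the frames look mirrored or swapped in RVIZ, publishing the inverse from `invert_rigid` instead of the original is a cheap experiment; note that the translation of the inverse is not just the negated original translation, because it is also rotated.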

Re: Livox Avia, + Depth cameras and organized point clouds.


Many thanks, that helped me a lot. It worked and almost aligned the frames. I still needed to compensate for a very small deviation, maybe because the camera moved since I did the calibration, but I found how to do it with a homemade trick without needing to recalibrate. Next time I will use Kalibr; maybe it is easier to align.

I will learn more about that matrix and the operations on it. I got some extra material to read, but I lack the maths background. I think the result is everything I could expect and even better: I can get close to 100% depth coverage. It just performs like a hyper-precise RGB-D camera.

I still have a weird issue with the D435 depth and the point cloud from it (one of the reasons I was never able to align the frames): it doesn't fit the lidar point cloud. It is not due to the frame alignment, it is due to the scale; the 3D space mapped by the D435 is simply bigger. Maybe it is a bug somewhere, or a calibration issue, or a quality/noise/filter issue. What I see on the screen makes no sense: the RGB image fits both point clouds, the lidar one and the D435 one, in this and in other software too, but the point clouds don't fit each other. It is probably not related to rtabmap, as that point cloud is the one generated internally by the D435; the one generated externally from its aligned depth topic doesn't fit either, neither will fit the lidar.

Another thing I found, which maybe is not an issue, maybe it is just like that, or it is a limitation or an import option in CloudCompare that I don't know about: with the commands

rtabmap-export --las --cam_projection --scan --images --normals --poses_camera --poses rtabmap.db
rtabmap-export --las --cam_projection --scan --images --poses_camera --poses rtabmap.db

I cannot get normals, as I do when I export the lidar RGB point cloud in .ply from rtabmapviz, which is so convenient for meshing. Both commands give these lines in the output:

Computing normals of the assembled cloud... (k=20, 474073 points)
Computing normals of the assembled cloud... done! (0.583967s, 474073 points)
Adjust normals to viewpoints of the assembled cloud... (474073 points)
Adjust normals to viewpoints of the assembled cloud... (0.310252s, 474073 points

but the normals are not there in CloudCompare. Any idea? This is the point cloud from the .las file; it looks nice.

Thanks so much for your time, and apologies for taking it.

Administrator

Hi,

The scale difference is maybe caused by a wrong camera_info topic (intrinsics not correctly scaled). For the LAS format, the normals should be included. I am not sure why they are missing in your case. Can you share the database?

cheers,
Mathieu
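As a small worked example of how incorrectly scaled intrinsics change the size of a depth-derived cloud, the Python sketch below rescales pinhole intrinsics for a resized image and back-projects one pixel. It assumes a plain resize without cropping and ignores the half-pixel convention for the principal point; the function names and numbers are illustrative only.

```python
def scale_intrinsics(fx, fy, cx, cy, calib_size, image_size):
    """Rescale pinhole intrinsics from the calibration resolution to the
    resolution of the published image. Sizes are (width, height)."""
    sx = image_size[0] / calib_size[0]
    sy = image_size[1] / calib_size[1]
    return fx * sx, fy * sy, cx * sx, cy * sy


def back_project_x(u, z, fx, cx):
    """Lateral coordinate (meters) of pixel column u at depth z."""
    return (u - cx) * z / fx


if __name__ == "__main__":
    # Calibrated at 640x480, image published at 1280x960.
    fx, fy, cx, cy = scale_intrinsics(600.0, 600.0, 320.0, 240.0,
                                      (640, 480), (1280, 960))
    print(back_project_x(1280, 2.0, fx, cx))       # correct intrinsics
    print(back_project_x(1280, 2.0, 600.0, 320.0)) # stale 640x480 intrinsics
```

With the stale values, the same pixel and depth land much further from the optical axis, so the whole cloud appears stretched while the RGB overlay can still look plausible. Comparing the width/height and K matrix in camera_info against the actual image size is a quick first check.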

Re: Livox Avia, + Depth cameras and organized point clouds.


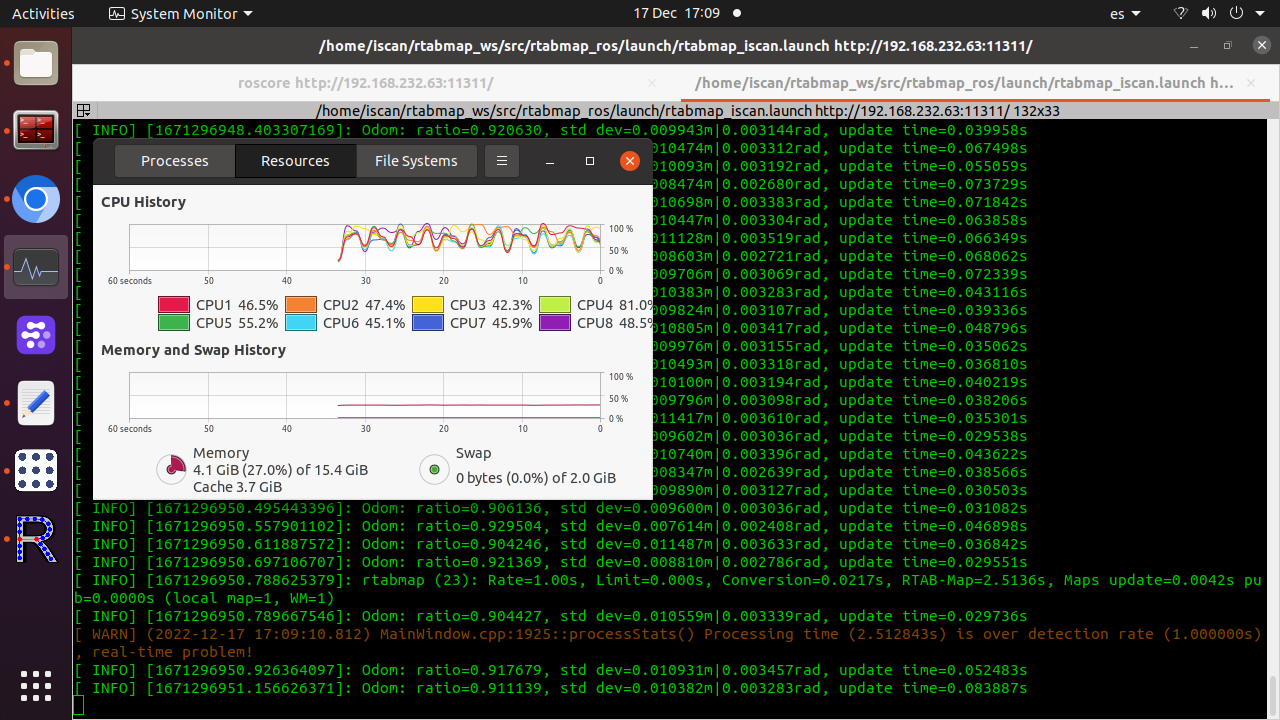

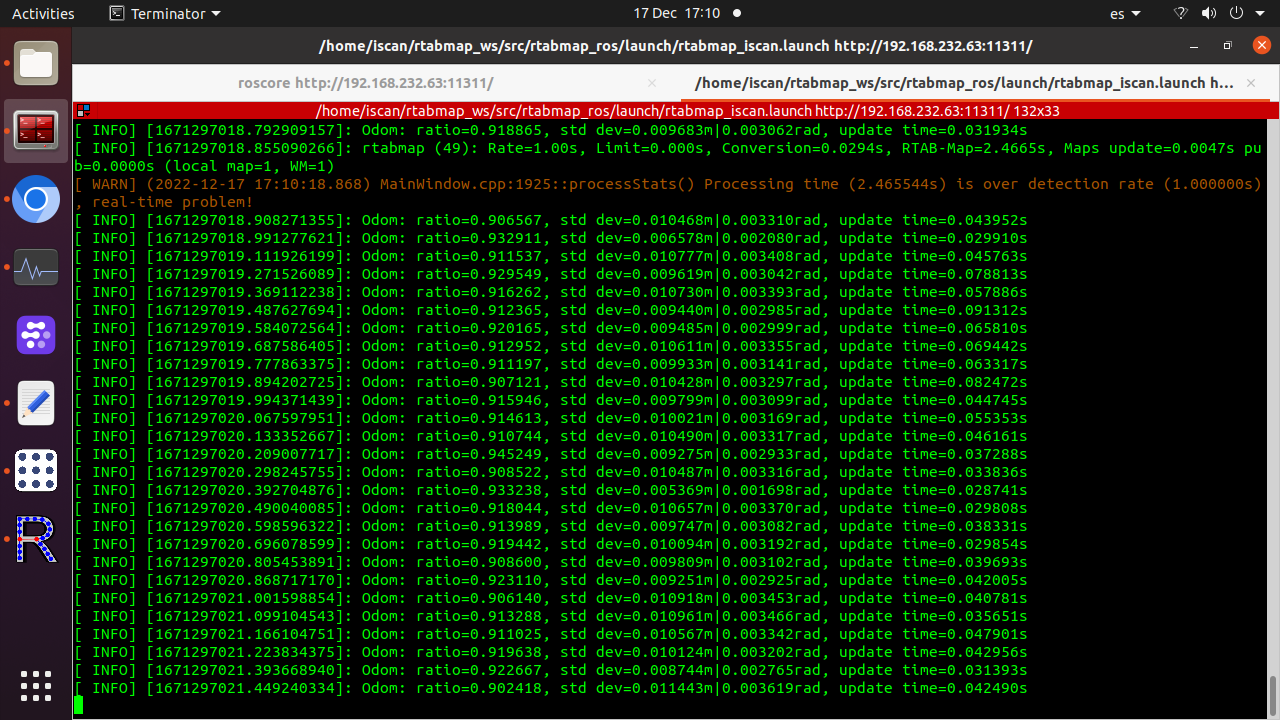

OK, I fixed the camera issue: some bad firmware had left the calibration with wrong values, and loading the default EEPROM values fixed it.

I went to take a sample of data, but discovered that I had a high CPU load; topics started to arrive late and I got errors that I didn't have before, so my database is not good. Another thing I discovered is that I cannot find a way to make RTAB-Map work with bags; it gave TF issues, so I couldn't get data from the bag to give you a database. In the next few days I will go out and record a database, but I will load the CPU less. At home it was working nicely, but when I went outdoors, running RTAB-Map while simultaneously recording bags demanded too much from the CPU. It was at 80%, but it looks like performance drops before reaching 100% CPU load. I still get RGB misalignment; I need to calibrate outdoors too. Later I will post a short video of the mapping session and the RTAB-Map errors in case you are interested; I guess they will disappear with a lower CPU load. Thanks.
