Load old database to do localization

|

This post was updated on .

Hey Mathieu,

I am having trouble to load the pre-mapped data base to rtabmap. I tried to turn on the localization mode, but it seems like it's creating another new data base and the old point cloud is not in. What may go wrong? Also is there a way to make the global costmap more robust like the local cost map which actually does obstacle detection? maybe assigning the minimal obstacle heights to thresh out the noises? If we have a very good CPU, which parameters you suggest us to tune in order to make the odometry run faster? Thanks very much. -- Best Regards Di Zeng Transcend Robotics - Robotics Engineer |

|

Administrator

|

Hi Di,

Did you start the localization mode from the launch file? When launching rtabmap in localization mode, the map will be empty until a localization is found with the map (the robot doesn't know where it is on start). So the robot should be already in a known environment. In localization mode, the database is read-only, so it should not be modified. You can always browse what is in your database using the database viewer: "$ rtabmap-databaseViewer ~/.ros/rtabmap.db". There are some parameters (here and here for rtabmap node) to filter the noise of the point cloud used for the projection map. Can you show a screenshot of RVIZ with the /rtabmap/cloud_map and /map topics shown? To get odometry running faster, most of the parameters depend on the image size. You can try with smaller images. If your images are 720p or more, you may want to increase GFTT distance (minimum distance between extracted features): <node pkg="rtabmap_ros" type="rgbd_odometry" name="rgbd_odometry"> <param name="GFTT/MinDistance" type="string" value="10"/> <!-- default 5 pixels --> <!-- reduce the number of features matched/extracted --> <param name="OdomF2M/MaxSize" type="string" value="1000"/> <!-- maximum features map size, default 2000 --> <param name="Vis/MaxFeatures" type="string" value="600"/> <!-- maximum features extracted by image, default 1000 --> </node>This will also give more uniformly distributed extracted features across the image. Other feature types can be used (FAST/BRIEF is the fastest, but features may not be uniformly distributed): $ rosrun rtabmap_ros rgbd_odometry | grep FeatureType Param: Vis/FeatureType = "6" [0=SURF 1=SIFT 2=ORB 3=FAST/FREAK 4=FAST/BRIEF 5=GFTT/FREAK 6=GFTT/BRIEF 7=BRISK 8=GFTT/ORB.] Frame-to-Frame odometry can also be faster: <node pkg="rtabmap_ros" type="rgbd_odometry" name="rgbd_odometry"> <param name="Odom/Strategy" type="string" value="1"/> <!-- 0=Frame-to-Map (F2M) 1=Frame-to-Frame (F2F) --> <!-- Optical flow may give more matches, but less robust correspondences --> <param name="Vis/CorType" type="string" value="0"/> <!-- Correspondences: 0=Features Matching, 1=Optical Flow --> </node> cheers |

|

Yeah, I started the localization mode from launch file.

I will attach the screen shots of the proj_map later today~. I am still trying to compiling rtabmap with OpenCV 3.0 |

|

In reply to this post by matlabbe

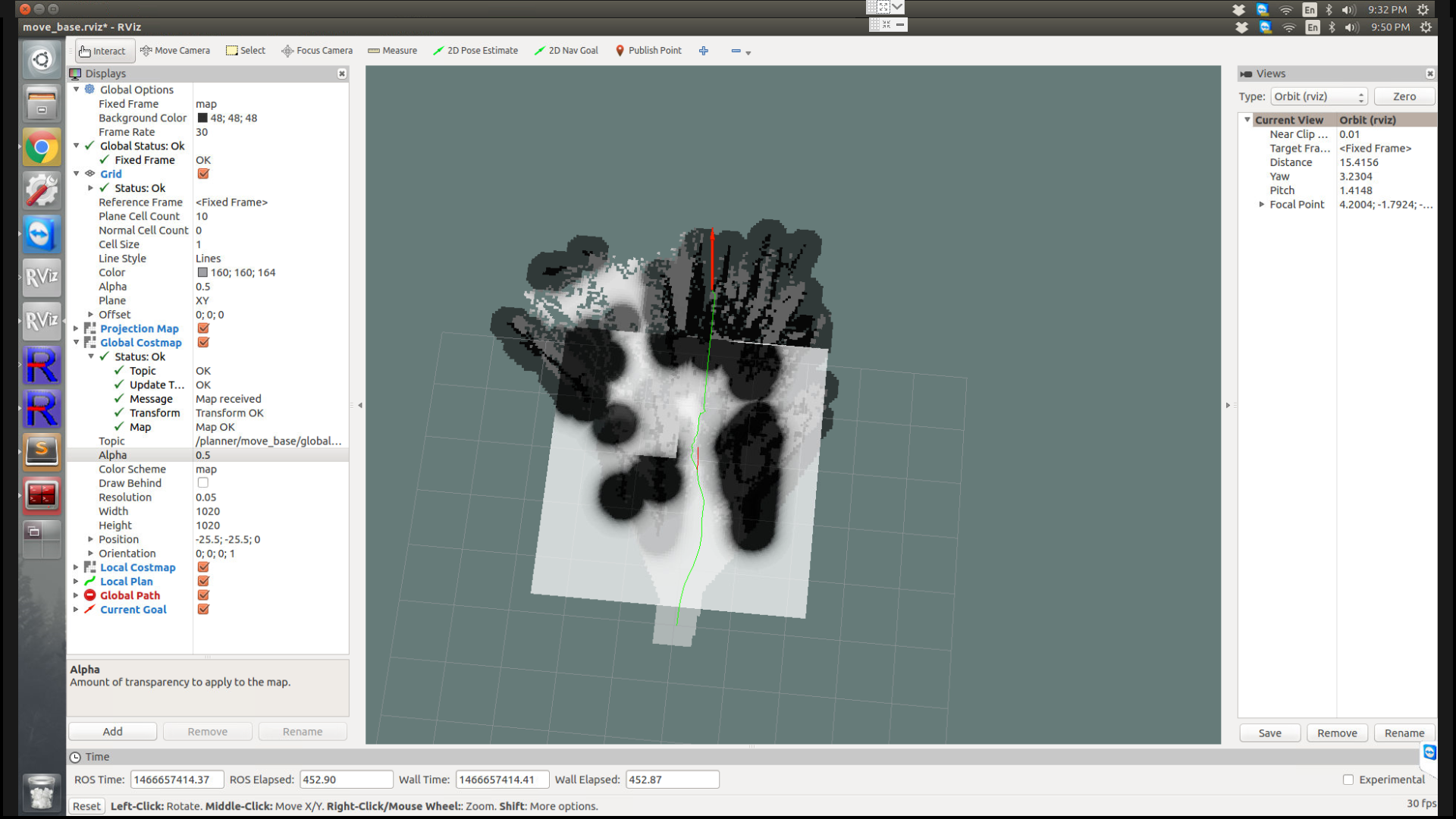

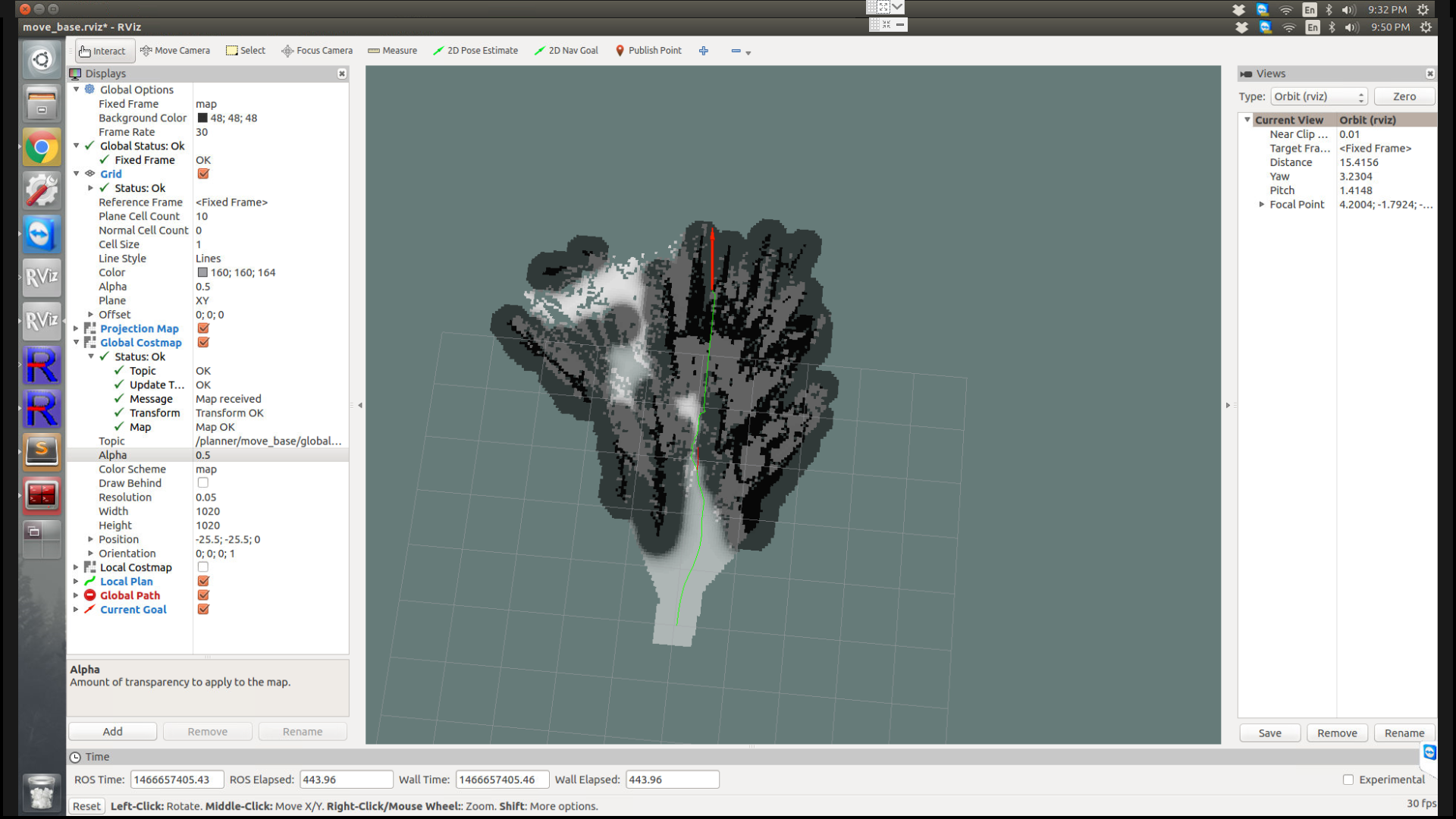

Hey Mathieu, As you can see, the global map tends to be too sensitive compared to the local cost map, as I increased the , it's doing better, but still sometimes a outlier in point cloud of the 3D map would cause a huge issue, because it blocked the only way of moving forward. Is there a way to update the global costmap based on the local costmap? How to increase the tendency of letting rtabmap update the Long Term Memory or the 3D Mapdata based on the recent observation in Short Term Memory? |

|

Administrator

|

The point cloud looks very noisy. Is it from the ZED camera? I think there is a parameter to set "Quality" depth map instead of "Performance" (this reduces a little the noise).

Another way to reduce the noise created in /rtabmap/proj_map is to use these parameters (see pcl::RadiusOutlierRemoval() for more info): <param name="cloud_noise_filtering_radius" value="0.05"/> <param name="cloud_noise_filtering_min_neighbors" value="2"/> cheers |

|

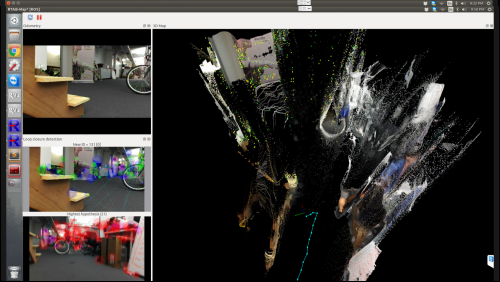

The point cloud is calculated from rtabmap, Zed is using Quality mode.

Seems like the filtering is doing very good job in costmap. I should try using the same parameters but use the stereo mode to see if the point cloud is doing better job. Is there a way to make the mapping less sensitive to the dynamic obstacle? Now if a person stands in front of camera, even after he moves, the person gets mapped. |

|

In reply to this post by matlabbe

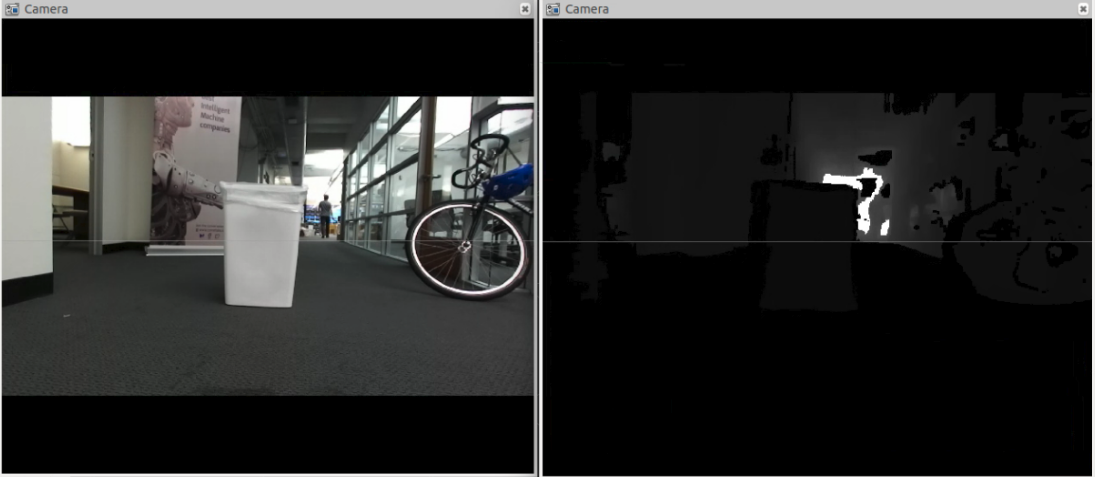

Hey Mathieu Here is a image of RBGD, what do you think about the quality of the depth?  On Thu, Jun 23, 2016 at 10:34 PM, Di Zeng <[hidden email]> wrote:

Best Regards Di Zeng Transcend Robotics - Robotics Engineer Phone: 301-222-7046 |

|

Administrator

|

Hi,

The quality of the depth is better visualized when showing the point cloud. You can subscribe in RVIZ to "/camera/point_cloud/cloud" topic (PointCloud2) published by the zed ros wrapper. cheers |

|

Administrator

|

About dynamic obstacles... if the robot doesn't move, it will not add new point clouds to map. However, if it is moving and someone pass by, the person will be added to 3D map. For the proj_map, if the robot traverses again the same area without people, it will clear the space in the map.

cheers, Mathieu |

«

Return to Official RTAB-Map Forum

|

1 view|%1 views

| Free forum by Nabble | Edit this page |