Mapping with KnectV2 and Husky A200

|

Hi Mathieu;

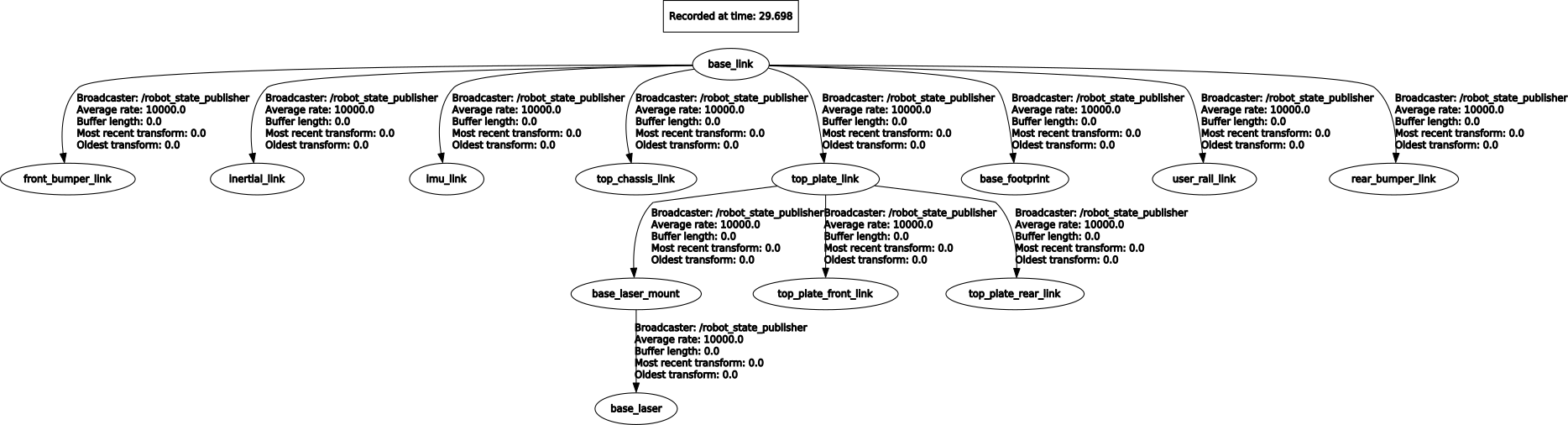

I'm working on Husky A200 + Kinect V2 + laser LMS111. I create a simulation on gazebo to reproduce the tutorial 2.1http://wiki.ros.org/rtabmap_ros/Tutorials/SetupOnYourRobot#Kinect_.2B-_Odometry_.2B-_2D_laser. In the simulation, everything works perfectly. I download the model into catkin_ws and make it. After I just change the robot description to include the xacro and them everything went ok. Now I'm using the real robot with this TF tree.

<node pkg="tf" type="static_transform_publisher" name="kinect_to_base_link" args="0.3206 0.000 0.299635 0 0 0 /top_plate_link /kinect2 100"/> <node pkg="tf" type="static_transform_publisher" name="kinect2_to_kinect2_link" args="0 0 0 0 0 0 /kinect2 /kinect2_link 100"/> For RTAB I used the example code in the tutorial 2.1 with modification to get the map through RGB-D sensor

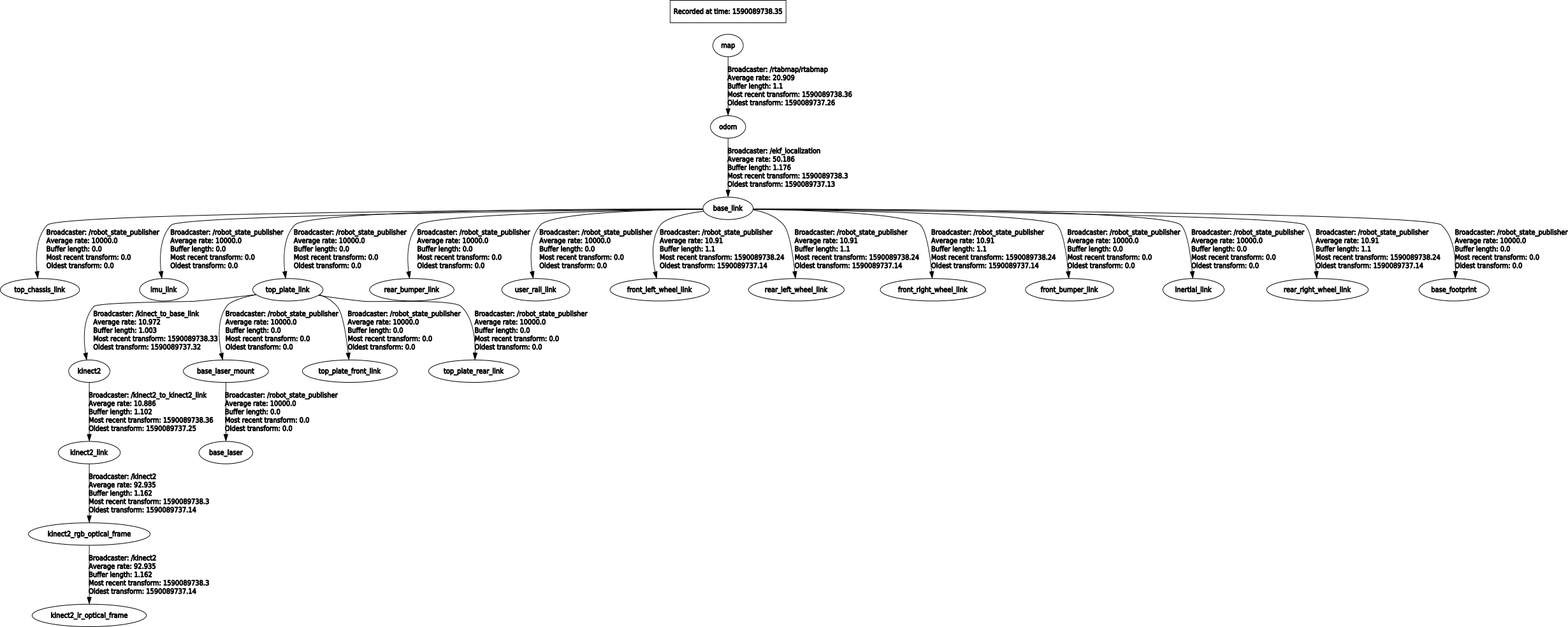

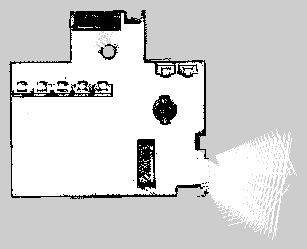

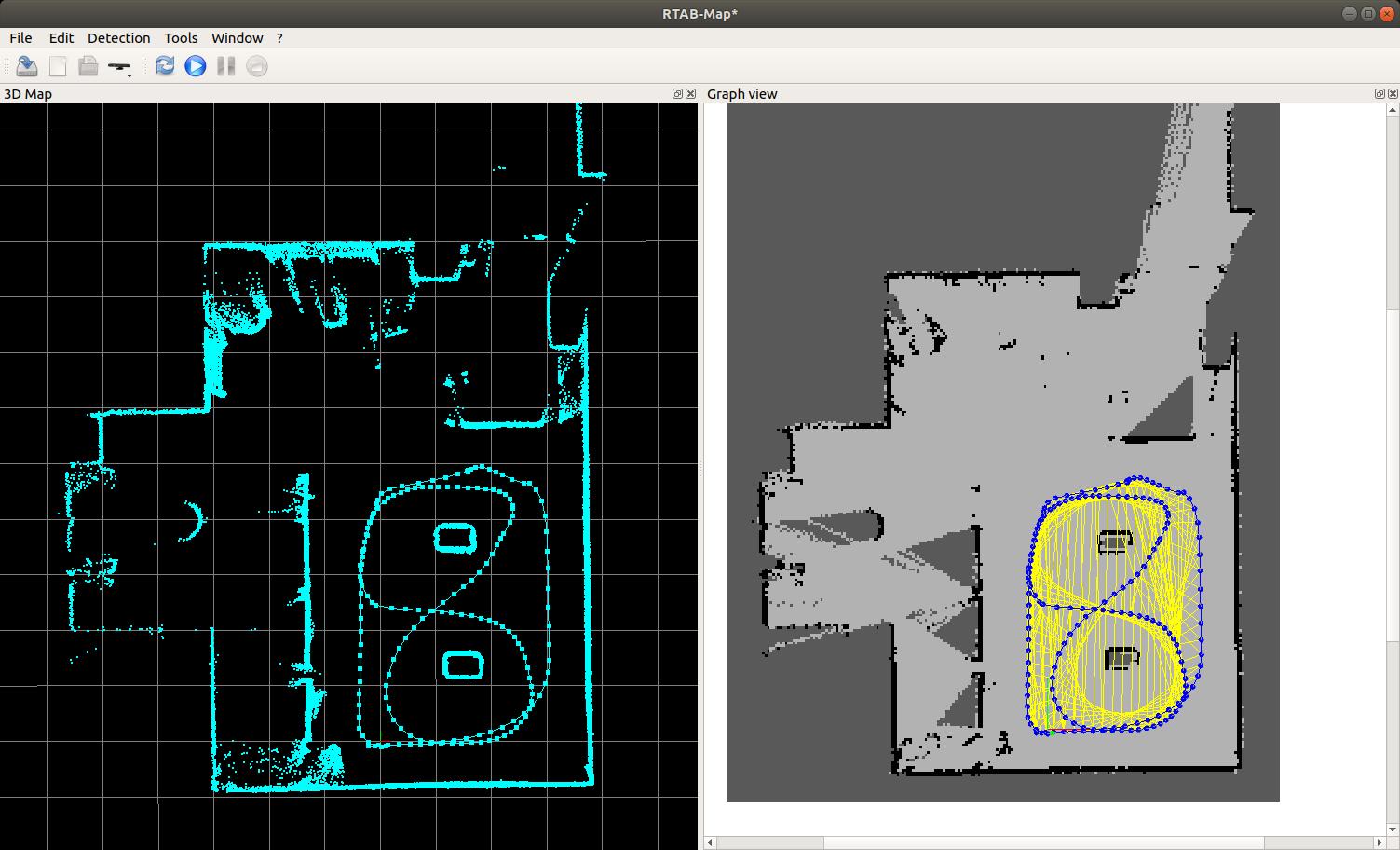

Now I have the TF from kinect attached to robot TF as below  With this setup, the produced map is different from what I expected. The below image shows the obtained map  Do you know what I could have done to not get the correct map? thank you Best Regards |

|

Administrator

|

Hi,

there is maybe a missing optical rotation on the kinect2, which is thinking it is looking up. Like in this example, you would have to change: <node pkg="tf" type="static_transform_publisher" name="kinect2_to_kinect2_link" args="0 0 0 0 0 0 /kinect2 /kinect2_link 100"/>by <node pkg="tf" type="static_transform_publisher" name="kinect2_to_kinect2_link" args="0 0 0 -1.5707963267948966 0 -1.5707963267948966 /kinect2 /kinect2_link 100"/> You can also open RVIZ, set the global fixed frame to map, and show kinect2 point cloud to see if it is correctly oriented. cheers, Mathieu |

|

This post was updated on .

Hi Mathieu

Thank you for the advice. The system is now working. However, the obtained map very different that i got through simulation.

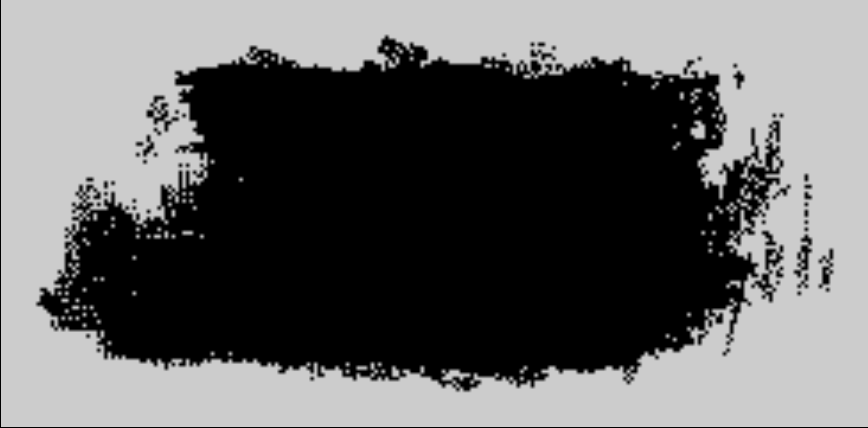

Here is the map obtained with above configuration.

and here is the map obtained with the simulation system (tutorial 2.1)  Here is the RTAB-Map parameters collected from terminal:

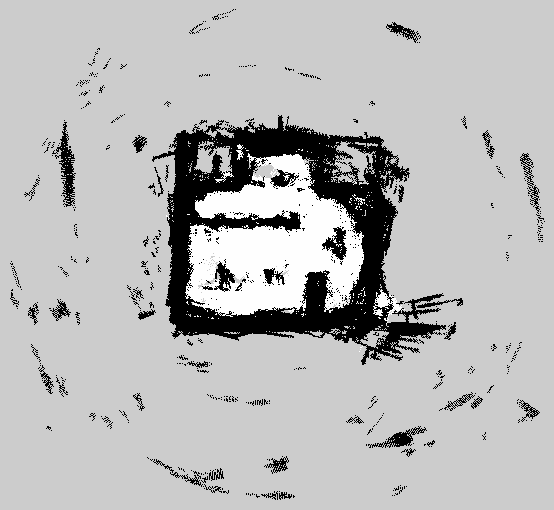

Is there anything that I can do to improve the map quality? ******************************************************************* ******************************************************************* EDIT ****************************************************************** ******************************************************************* I switched the odometry. Previously I was using the odometry from wheel encoders, now I'm using the odometry combined from IMU and well encoders. Due to this I got a better map.  But steel far from what I expect and have many outliers. |

|

Administrator

|

Hi,

Your odometry may be drifting a lot more in reality than in simulation. However, I see that you are using RGBD/NeighborLinkRefining=true with a lidar. Try with Grid/FromDepth=false to see if the lidar map looks good. Do you have a database to share? If the map with lidar doesn't look good (it should be similar than you have in simluation), ICP/ parameters may need some tuning. If the lidar map is good, it means that the TF between the lidar and camera may be wrong, e.g., if you show scan and camera point cloud in RVIZ, do they match perfectly? cheers, Mathieu |

|

Hi Mathieu

Sorry for the delay. A create a data base to share with you. This link contain the full data recorded(13GB) https://drive.google.com/file/d/1eRwzcTtlKeig2A2VhNUyGqd_1f73G-aW/view?usp=sharing This link contain the same database but compressed ( rosbag compress ....) (3GB) https://drive.google.com/file/d/1kEp3Hyv4oVQj7js_rvdnCiVf-PF0wHmh/view?usp=sharing I'm focusing exclusively on mapping mode. Using this database I generated a map. However, there are many places that were marked as occupied even though it was free. another quick question: How did you generate your bagfile? It's so small!!! My database is around 13GB/3GB and I only made a few turns with my robot. thakyou! |

|

Administrator

|

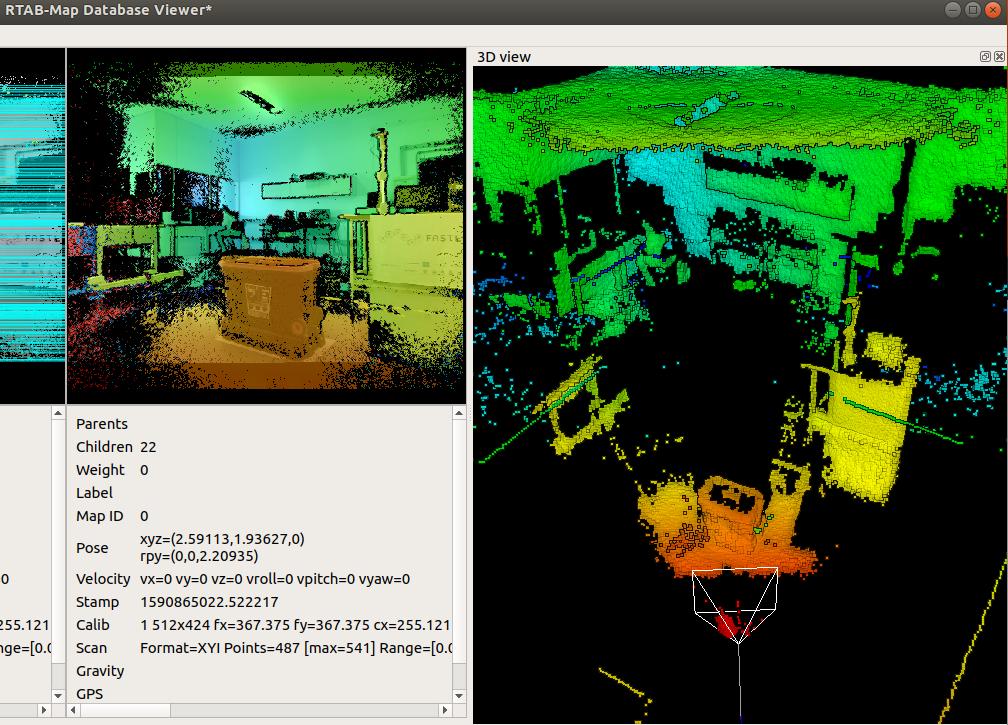

Hi,

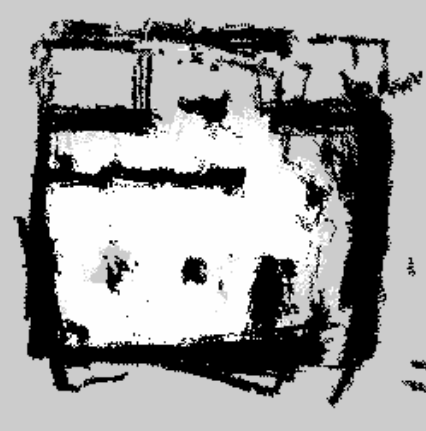

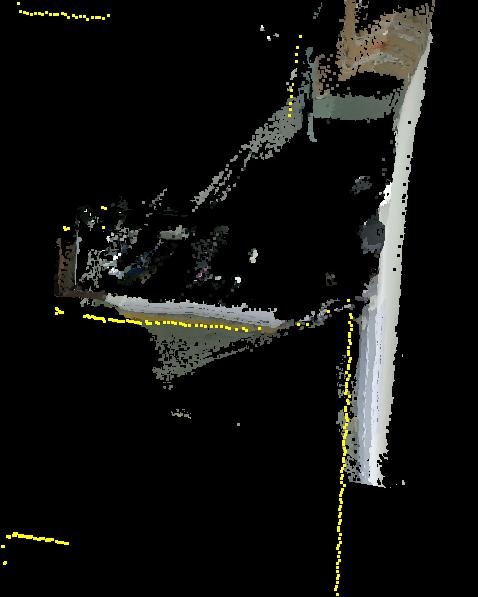

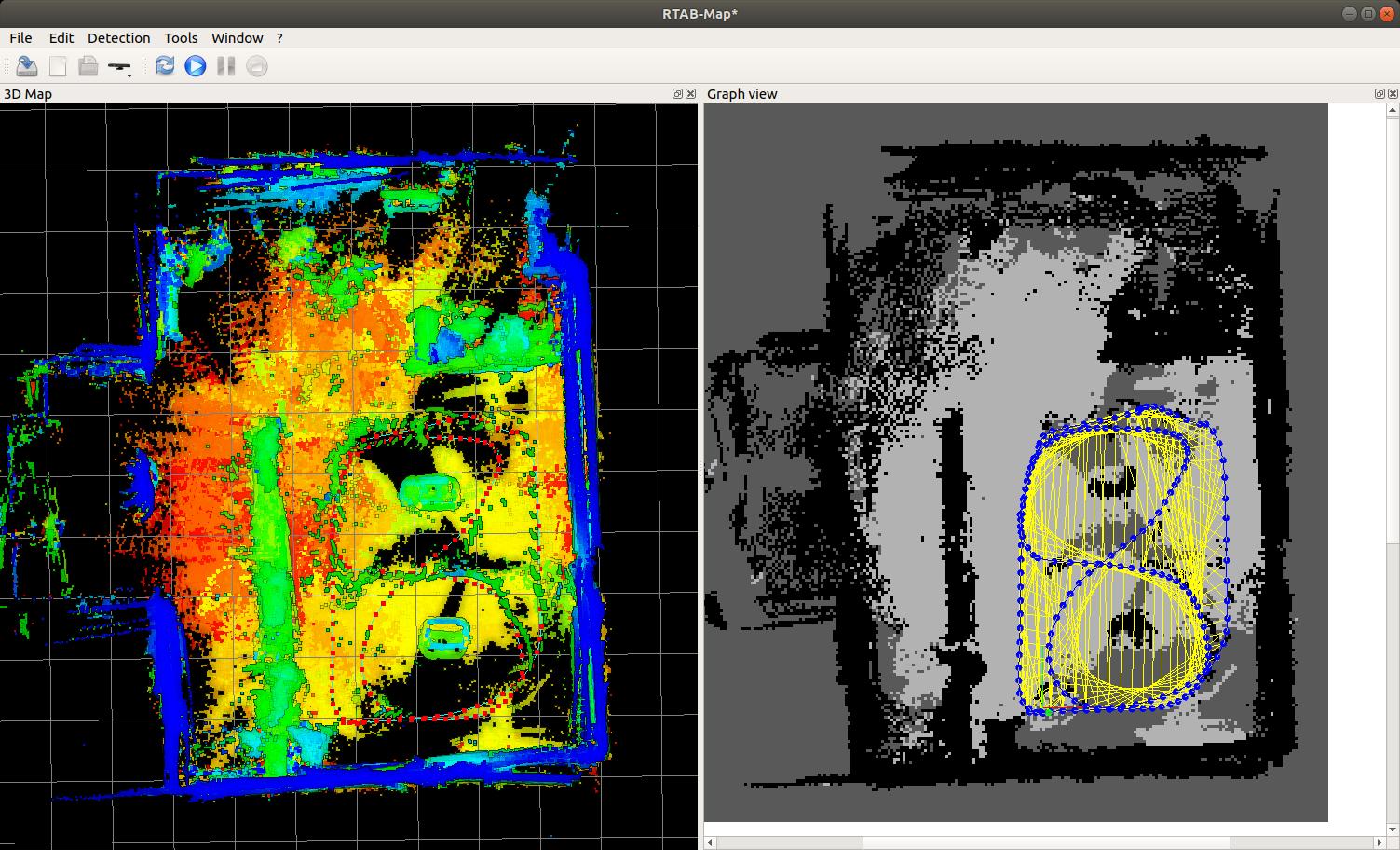

It seems the lidar scan and camera cloud are not perfectly aligned together (it would require some tuning about the static TF, show both the camera cloud and lidar scan in RVIZ, set base_link as the fixed frame in global options):  When correcting odometry based on lidar, the lidar map looks pretty crisp (see below), but the corresponding 3D map from the camera has large walls. Here are two examples. Grid map created from camera:

$ roslaunch rtabmap_ros rtabmap.launch \

args:="-d \

--Reg/Force3DoF true \

--RGBD/NeighborLinkRefining true \

--Grid/FromDepth true \

--Reg/Strategy 1 \

--Grid/MinGroundHeight -1 \

--Grid/MaxObstacleHeight 2 \

--Grid/RangeMax 5" \

rgb_topic:=/kinect2/sd/image_color_rect \

depth_topic:=/kinect2/sd/image_depth_rect \

camera_info_topic:=/kinect2/sd/camera_info \

visual_odometry:=false \

odom_topic:=/odometry/filtered \

rgbd_sync:=true \

use_sim_time:=true \

frame_id:=base_link \

scan_topic:="/scan" \

subscribe_scan:=true \

approx_rgbd_sync:=false

Grid map created from lidar:

$ roslaunch rtabmap_ros rtabmap.launch \

args:="-d \

--Reg/Force3DoF true \

--RGBD/NeighborLinkRefining true \

--Grid/FromDepth false \

--Reg/Strategy 1" \

rgb_topic:=/kinect2/sd/image_color_rect \

depth_topic:=/kinect2/sd/image_depth_rect \

camera_info_topic:=/kinect2/sd/camera_info \

visual_odometry:=false \

odom_topic:=/odometry/filtered \

rgbd_sync:=true \

use_sim_time:=true \

frame_id:=base_link \

scan_topic:="/scan" \

subscribe_scan:=true \

approx_rgbd_sync:=false

Other problems: 1) Sometimes the camera is generating depth values very close to camera. This could be filtered with Grid/RangeMin to 0.5.  2) The RGB and depth is not always aligned, this could affect localization accuracy, though here we refine with lidar, so those errors would be corrected (see how texture doesn't match with depth edges).  3) With iai_kinect2, I prefer to use qhd topics instead of sd topics, because sd introduces fake features because of the black pixels. About the size of the rosbag, do you mean that demo_mapping.bag? We recorded compressed images at lower frame rate. When visual odometry doesn't have to be computed, we can reduce frame rate to 2-5 Hz for the camera. In your bag, you also recorded /kinect2/sd/points and /scan_inv that are not needed for rtabmap. cheers, Mathieu |

«

Return to Official RTAB-Map Forum

|

1 view|%1 views

| Free forum by Nabble | Edit this page |