Mapping with RPi4+Tango+Lidar

|

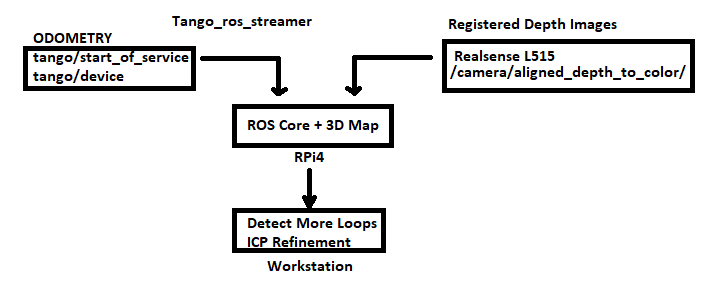

I have a project in mind for mapping on a Raspberry Pi 4, essentially just using the Pi as an indicator of loop closures and as a way to assemble registered depth images from a lidar camera into a 3D map. I have a few questions as I try to figure out how plausible this setup would be.

To limit the load on the Raspberry Pi, I plan to use a Tango device to compute odometry, which will be published to ROS running on the Raspberry Pi. RTAB-Map will be run with a limited STM and will only display the most recent nodes in the 3D view, but would ideally indicate when successful loop closures occur. At the same time, nodes consisting of registered depth images from a RealSense L515 would be added to the database.

I am wondering:

If the Raspberry Pi must find the loop closures, will it need to re-extract visual features from either the Tango or L515 RGB images? Is there a way to pass along odometry features to the Pi, and would that be computationally less expensive?

If I would like to recompute odometry on a workstation computer, will I need to record a rosbag of images at a higher rate than RTAB-Map's detection rate (~1 Hz)? I am assuming that with the limited FOV of the L515 it may still be better to rely on visual odometry over ICP-based methods.

Is there a visual odometry / feature extraction method that is best suited to post-processing on a workstation? For instance, a method that is generally too computationally expensive for real-time localization but has lower error.

Any guidance is appreciated. Thanks for all the work that has gone into this project.


Administrator


Hi Alan,

If loop closure detection has to be done online on the RPi4, rtabmap would have to re-extract features from the L515 RGB images. Doing loop closure detection on the RPi4 is possible, as the frame rate can be low (e.g., 1 or 2 Hz).

To recompute odometry, you would indeed have to record the L515 RGB and depth images at >10 Hz. I don't know what the field of view of the L515 is, but I expect that you will have difficulty getting odometry as good as what Tango already provides. Using Tango raises some issues when synchronizing with external sensors: watch for time synchronization drift, and the TF between the Tango camera frame and the L515 base frame must be set accurately.

For odometry, I have only tested binary features like BRIEF and ORB, because they are the fastest to compute for online use. Photogrammetry approaches often use SIFT features and do global bundle adjustment. If real-time is not required, record high-resolution images and probably use SIFT features.

Note that if you want to record HD data in a rosbag alongside loop closure detection on the RPi4 to get online feedback, you would have to set Mem/ImagePreDecimation and Mem/ImagePostDecimation to 2 or 4 in rtabmap to reduce feature extraction time.

cheers,
Mathieu
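To give a rough sense of what a decimation factor of 2 or 4 means for the amount of image data processed per frame, here is a minimal Python sketch. It assumes that decimation by a factor `d` keeps every `d`-th pixel along each axis, and it uses a hypothetical 1280x720 input resolution; neither the helper names nor the resolution come from rtabmap or the L515, and actual feature extraction time will not scale exactly with pixel count.

```python
def decimated_size(width, height, factor):
    """Return (width, height) after keeping every `factor`-th pixel per axis."""
    if factor < 1:
        raise ValueError("decimation factor must be >= 1")
    return width // factor, height // factor


def pixel_ratio(width, height, factor):
    """Fraction of the original pixel count that remains after decimation."""
    w, h = decimated_size(width, height, factor)
    return (w * h) / (width * height)


if __name__ == "__main__":
    # Hypothetical HD input resolution, chosen only for illustration.
    width, height = 1280, 720
    for factor in (1, 2, 4):
        w, h = decimated_size(width, height, factor)
        ratio = pixel_ratio(width, height, factor)
        print(f"factor {factor}: {w}x{h}, {ratio:.4f} of the original pixels")
```

Under these assumptions, a factor of 2 leaves a quarter of the pixels and a factor of 4 leaves one sixteenth, which is why the full-resolution images can still be recorded to the rosbag while the RPi4 works on much smaller images.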
