Mapping with multiple stereocameras, localization with multiple monochrome monocular cameras

Mapping with multiple stereocameras, localization with multiple monochrome monocular cameras

|

This post was updated on .

Hello, Mathieu!

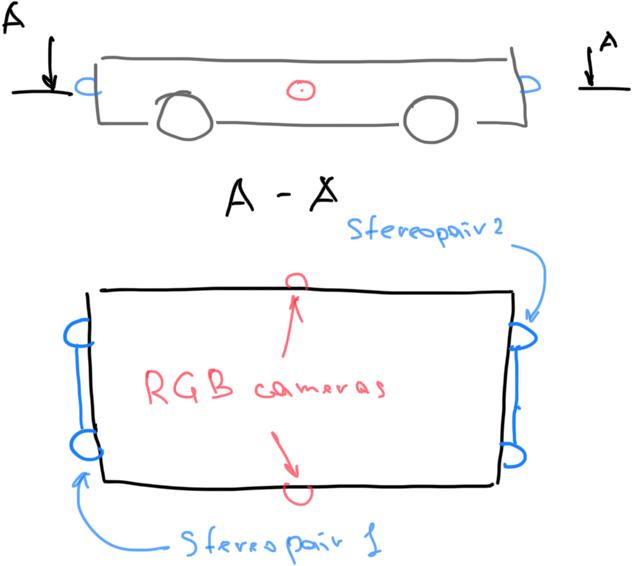

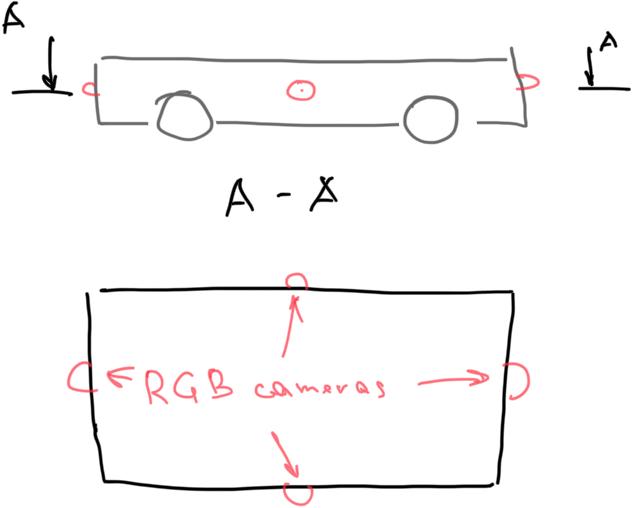

I'm encountered with the following task: - Create map with robot setup of two two stereo cameras (opposed directed, see pic 1 below) - Provide localization on that map with different robot setup (4 RGB omni directed cameras, see pic 2 below) - No IMU, Lidar or other sensors provided, (it could be used wheel odometry though) As far as the area of mapping is rather big, (around 1100 m^2), and can be bigger, I preferred to use rtabmap_ros. To deal with stereocameras, I guess to use stereo_img_proc and disparity_to_depth to provide depth channels for rgbd_camera0 and rgbd_camera1 topics (as in demo_two_kinects.launch example) At this point, after research of this forum and your papers I still have several questions. I'd really appreciate it if you would have the answers. 1. General question is how does multicam mapping technically implemented (as in demo_two_kinects.launch example)? Is it used only in odometry node or in mapping too? 1.1. Does your approach have the same roots as in MCPTAM or Multicol-SLAM, namely does using multicam setup gives profit in map precision besides "blind" camera problem (when one cam does'n see any features, like white wall)? 1.2. As far as you wrote here that image from both cameras merged into one "meta-image" and treated as RGBDImage as I understand, does it mean, that produced PointClouds (so far grid_map, octomap and so on) also are merged and during rgbd mapping-localization stage can be used by both cameras? For instance, when during the mapping stage, robot sees the same place with another camera, does it recognize it's place? 1.3. Are two monochrome stereocams enough for mapping? 1.4 You mentioned here that in the odometry stage, features from both cameras are used, but do they used in loop closure and graph optimization? 2. How monocular localization stage is implemented? Can it be used in multicam setup? 2.1. Does the RGB localization approach need external odometry? Are four differently directed mono cameras is enough? 2.2. How does mono loc technically works after rgbd mapping? Does it retrieve visual features from the pre-loaded map and compares it with visible features? How does it deal with the absence of depth? Thanks for your time and answers! Best regards, Artem  Picture 1. Mapping robot setup  Picture 2. Localization robot setup |

|

Administrator

|

Hi Artem,

1. multicam can be used for odometry, mapping and localization. 1.1 I was inspired from MCPTAM, but the same idea that we need at least one camera seeing features to work is there. In mapping mode, rtabmap requires stereo/RGBD cameras unlike MCPTAM that can work with monocular cameras that are sharing some of their field of view. 1.2 All cameras are used for odometry, mapping the occupancy grid and for localization. It will recognize a place from a camera seen by another camera. For example, if you have a backward and forward camera, if the robot comes back in opposite direction on the same path, it should be able to localize. 1.3 yes, color is just for visualization 1.4 They are used for localization. Graph optimization is independent of the features. Note that there are limitations with multicam setup: a) Vis/EstimationType should be 0 (3D->3D) instead of default 1 (3D->2D or PnP). This may limit the range of cameras and decrease the number of features used for localization and odometry. We are based on cv::solvePnPRansac() function from OpenCV for 3D->2D estimation, I am still not aware if someone implemented it for multicameras. b) OdomF2M/BundleAdjustment should be also disabled, local bundle adjustment has not been implemented for multicam setup (it could be implemented, g2o supports it). This will reduce odometry accuracy. 2. As we cannot use Vis/EstimationType=1 in multicam setup, it won't work. 2.1 We can feed external odometry while doing RGB-only localization (well with any sensors used) 2.2 With Vis/EstimationType=1, we only need 3D position of features in the map, and use only 2D features from the image during localization. Actually, the localization is done exactly the same than with a stereo camera or rgb-d camera. The advantage to use a stereo or rgbd camera is when the transform after PnP is refined by local bundle adjustment (Vis/BundleAdjustment=1), in which the 3D features from the current frame can be used to better refine the transform. cheers, Mathieu |

Re: Mapping with multiple stereocameras, localization with multiple monochrome monocular cameras

|

This post was updated on .

Hello, Mathieu!

Thanks for insights! There are still several questions. 1. Did your answer to point 2 regards to monocular four-camera setup? I can't understand, how the statements about treating multicamera setup as one sensor with extended FoV (here) and the need for multicam-specific PnP algorithms are interconnected. 2. Therefore if I suppose to use a monocular camera for localization on the pre-built map, rtabmap natively supports only one-camera setup, right? If true, which way, in your opinion, we can choose to improve rtabmap methods for monocular multicam localization support? 3. Will the map built with Vis/EstimationType=0 and OdomF2M/BundleAdjustment=0 will work with Vis/EstimationType=1 and OdomF2M/BundleAdjustment=1 at the localization stage? For instance, we built map with two stereocams, and want to localize with one monocular. Thanks for your time. Best regards, Artem |

|

Administrator

|

Hi Artem,

1. In the current implementation, we cannot use PnP with four monocular cameras, only one camera. If PnP cannot be used, we should do 3D->3D estimation, which requires 3D features from the map and the current frames. If we have only mono cameras, we cannot estimate 3D features in that frame. Multi-RGBD cameras works with 3D->3D estimation, but not for multi-mono cameras, which would require 3D->2D estimation (PnP) as we cannot estimate 3D features. 2. Yes, with one camera you can use PnP. For multi mono cameras, we have to implement a multi-camera PnP function. 3. I recommend to use Vis/EstimationType=1 and OdomF2M/BundleAdjustment=1 in mapping mode too, but yes it will work either the map is built with those options or not. The main problem is that we cannot use Vis/EstimationType=1 with multi-cameras setup, and as Vis/EstimationType=0 only works for multi-RGBD cameras, localization won't work with multi-monocular cameras setup. cheers, Mathieu |

Re: Mapping with multiple stereocameras, localization with multiple monochrome monocular cameras

|

This post was updated on .

Hi Mathieu,

My multicam mapping research is ongoing. I have two trajectories in the same room. One for mapping, another for localization (loop closure) tests. My setup is as following:

At the localization stage, I've encountered the problem: RTAB-Map doesn't make loop closure, and returns that there is too large rotation. It seems that it linked with this and different position of cameras on mapping and localization stages. (I 've tried to change their tfs on localization and it worked on start) But I still can not figure out how to fix such behavior. [ INFO] [1592557375.271930197, 1591637994.530363555]: rtabmap (2932): Rate=1.00s, Limit=0.000s, RTAB-Map=0.1445s, Maps update=0.0017s pub=0.0000s (local map=0, WM=2729) [ WARN] (2020-06-19 12:02:56.434) util3d.cpp:604::cloudFromDepthRGB() Cloud with only NaN values created! [ WARN] (2020-06-19 12:02:56.508) Rtabmap.cpp:2144::process() Rejected loop closure 2764 -> 2933: Too large rotation detected! (pitch=0.000000, yaw=0.000000) max is 3.141374 Thanks for your time. Best regards, Artem |

Re: Mapping with multiple stereocameras, localization with multiple monochrome monocular cameras

|

Hi Mathieu and everyone!

The problem was solved by updating from default apt rtabmap package (0.19.3) to building from source (0.19.7). commit Anyway, I still what was the actual reason in the code of rejecting the 180-degree rotation. If anyone has time to explain, I will be very grateful. |

|

Administrator

|

Hi,

Well, we could probably remove that check when a guess is not used. I don't remember the exact case, but it could happen that the visual transform tells something, and the ICP registration (when Reg/Strategy > 0) flips 180 the resulting transform, which is wrong. cheers, Mathieu |

Re: Mapping with multiple stereocameras, localization with multiple monochrome monocular cameras

|

Hi Mathieu!

Btw, I didn't use ICP and lidar at all. Also, I eventually meet this problem again for the same case, except now for monocular localization. If I prepare map with stereocamera, and then use one mono back camera (directed 180 degrees difference with the base_link) for localization, rtabmap throws the same `Too large rotation` error again. But, I found a way to solve it: if I set <param name="frame_id" type="string" value="back_camera"/> , rtabmap works well, but I suppose, that this leads to wrong results of map-> odom correction from rtabmap. |

|

Administrator

|

This makes me remember that we had a similar problem with a setup with a robot having a forward and a backward cameras. When the robot was traversing the same path but in inverted direction, transforms were rejected. We removed the check when having more than one camera: https://github.com/introlab/rtabmap/commit/bd979cca34f9d5ac0bc41c95230f5bcde59fde54

I think we can remove safely that check for good. It was added in January 2016 because of ICP problem described above. However, normally there was a check in RegistrationIcp to detect this problem, but was refactored on May 2016 to do correctly compare with the original guess transform. For example with that refactoring, if the visual guess tells that the transform is 180 deg (like in your case), ICP won't fail if it tells that it is 182 deg instead, but will fail if it tells 2 deg (it compares the change between input transform and the output, not the actual absolute transform angle). I removed the check in this commit: https://github.com/introlab/rtabmap/commit/c5158cade5f9b6f845c3df507c3032916d20ca20 frame_id should be the base frame indeed, not back_camera, with that commit the loop should now be accepted with your backward camera. cheers, Mathieu |

Re: Mapping with multiple stereocameras, localization with multiple monochrome monocular cameras

|

Thanks a lot, Mathieu!

|

«

Return to Official RTAB-Map Forum

|

1 view|%1 views

| Free forum by Nabble | Edit this page |