Monocular mapping on RPi with low depth quality

|

Hey Mathieu,

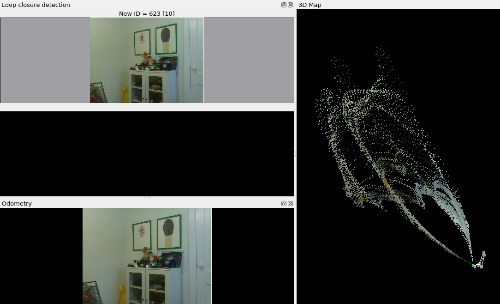

I'm trying to run RTABMap using monocular depth generated from a Raspberry Pi camera. I have no issue running the node, it's just that I'm having trouble generating a good quality 3D map. I'm tried with two different neural networks (fast-depth and mobilepydnet) to compute an approximate 3D image, but in both cases I'm getting very low quality maps:  I used ORB-SLAM to generate odometry, and I think my calibration parameters are good. Since you have better knowledge, I figured you might be able to help with the mapping strategy settings. I hope to share the scripts and models with you for possible integration into RTABMap for running on a Raspberry Pi or otherwise any system with one camera. Here is my launch file: <launch> <param name="use_sim_time" type="bool" value="True"/> <!-- Choose visualization --> <arg name="rviz" default="false" /> <arg name="rtabmapviz" default="true" /> <!-- Localization-only mode --> <arg name="localization" default="false"/> <!-- Corresponding config files --> <arg name="rtabmapviz_cfg" default="~/.ros/rtabmap_gui.ini" /> <arg name="rviz_cfg" default="$(find rtabmap_ros)/launch/config/rgbd.rviz" /> <arg name="frame_id" default="rpi_cam"/> <!-- Fixed frame id, you may set "base_link" or "base_footprint" if they are published --> <arg name="database_path" default="~/.ros/rtabmap.db"/> <arg name="rtabmap_args" default=""/> <!-- delete_db_on_start, udebug --> <arg name="launch_prefix" default=""/> <!-- for debugging purpose, it fills launch-prefix tag of the nodes --> <arg name="approx_sync" default="true"/> <!-- if timestamps of the input topics are not synchronized --> <arg name="rgb_topic" default="/usb_cam/image_raw" /> <arg name="depth_registered_topic" default="/usb_cam/depth" /> <arg name="camera_info_topic" default="/usb_cam/camera_info" /> <arg name="compressed" default="false"/> <arg name="subscribe_scan" default="false"/> <!-- Assuming 2D scan if set, rtabmap will do 3DoF mapping instead of 6DoF --> <arg name="scan_topic" default="/scan"/> <arg name="subscribe_scan_cloud" default="false"/> <!-- Assuming 3D scan if set --> <arg name="scan_cloud_topic" default="/scan_cloud"/> <arg name="visual_odometry" default="false"/> <!-- Generate visual odometry --> <arg name="odom_topic" default="/odom"/> <!-- Odometry topic used if visual_odometry is false --> <arg name="odom_frame_id" default="rpi_cam"/> <!-- If set, TF is used to get odometry instead of the topic --> <arg name="namespace" default="rtabmap"/> <arg name="wait_for_transform" default="10"/> <arg name="queue_size" default="40"/> <arg name="map_frame_id" default="map"/> <include file="$(find rtabmap_ros)/launch/rtabmap.launch"> <arg name="rtabmapviz" value="$(arg rtabmapviz)" /> <arg name="rviz" value="$(arg rviz)" /> <arg name="localization" value="$(arg localization)"/> <arg name="gui_cfg" value="$(arg rtabmapviz_cfg)" /> <arg name="rviz_cfg" value="$(arg rviz_cfg)" /> <arg name="frame_id" value="$(arg frame_id)"/> <arg name="queue_size" value="$(arg queue_size)"/> <arg name="namespace" value="$(arg namespace)"/> <arg name="database_path" value="$(arg database_path)"/> <arg name="wait_for_transform" value="$(arg wait_for_transform)"/> <arg name="rtabmap_args" value="$(arg rtabmap_args)"/> <arg name="launch_prefix" value="$(arg launch_prefix)"/> <arg name="approx_sync" value="$(arg approx_sync)"/> <arg name="rgb_topic" value="$(arg rgb_topic)" /> <arg name="depth_topic" value="$(arg depth_registered_topic)" /> <arg name="camera_info_topic" value="$(arg camera_info_topic)" /> <arg name="compressed" value="$(arg compressed)"/> <arg name="subscribe_scan" value="$(arg subscribe_scan)"/> <arg name="scan_topic" value="$(arg scan_topic)"/> <arg name="subscribe_scan_cloud" value="$(arg subscribe_scan_cloud)"/> <arg name="scan_cloud_topic" value="$(arg scan_cloud_topic)"/> <arg name="visual_odometry" value="$(arg visual_odometry)"/> <arg name="odom_topic" value="$(arg odom_topic)"/> <arg name="odom_frame_id" value="$(arg odom_frame_id)"/> <arg name="odom_args" value="$(arg rtabmap_args)"/> </include> </launch> </pre></div> I also attached a very small db rtabmap.db so you could test it for yourself. If the depth quality is way too bad to be used, I would appreciate any insights you may have. |

|

Scratch that, here is a link to the original rosbag I used: https://drive.google.com/file/d/1ThyX49R2katMMmsAQI0_T74XhjPAI4cq/view?usp=sharing

|

|

Administrator

|

Hi,

It will be the case, as this is an approximation of the depth image. There are a couple of problems with this setup. 1) Without having ORB-SLAM in the loop to estimate the scale of the resulting depth image from the neural network, the depth image won't have ever the same scale computed by ORB-SLAM, so the pose scale and depth scale won't match. 2) With mono slam, the scale is corrected on loop closures, in this case, the depth image scale won't be corrected when ORB-SLAM will find a loop closure. 3) RTAB-Map doesn't optimize the graph to correct scale-drfit, it is assumed that the real scale is always known in the poses (like with stereo or rgb-d setups). RTAB-Map is not a Monocular SLAM approach. 4) in this case, even if the scale was known, the depth estimation seems not very accurate, there is a lot of distortion. cheers, Mathieu |

|

Hi, thank you for your response,

The issues that you voice over the scale are perfectly sound, but my purpose is not to create a robust and accurate approach at this time, but simply making it work. I know RTAB-Map requires depth information, so I wanted to emulate a fake RGBD camera hence the use of the neural net. Right now, it's okay if the map created by RTAB-Map is very poor, as long as it doesn't look like complete garbage data. Disregarding issues with scale and loop closure, would you be able to recommend what parameters should be changed and how for handling poor depth quality? Thanks! |

«

Return to Official RTAB-Map Forum

|

1 view|%1 views

| Free forum by Nabble | Edit this page |