Multi monocular cameras

|

Dear Mathieu,

I'm interested in using this dataset in rtabmap, but I don't think I can. The dataset was acquired with three monocular cameras, a Velodyne and an IMU. The images from the three monocular cameras partially overlap, but I think that is not sufficient to recreate depth or stereo images from them. Can you confirm my assumption, or is there any way to run it in rtabmap? The bag files publish the camera info, the images, the Velodyne point clouds, and also the tf for all these objects, so we know the transformations between the three camera frames. Thanks in advance for your attention. |

|

Administrator

|

Hi,

Yes, it can, with some tricks. There is an old post testing the KITTI dataset with lidar and only a single monocular camera: http://official-rtab-map-forum.206.s1.nabble.com/RTAB-setup-with-a-3D-LiDAR-tp1080p1119.html

The main trick is to provide depth images for the RGB cameras to enable loop closure detection. That could be done by setting "gen_depth" to true. You could also provide RGB monocular images without depth images ("subscribe_depth=false"), but then only LIDAR-SLAM will be done, with no visual loop closure detection possible*.

The main idea would be to do lidar odometry, then feed that odometry + lidar + 3 monocular cameras (use rgb_sync node to sync them into a rgbd_image) to the rtabmap node. One caveat is that the 3 monocular images should have the same resolution.

cheers, Mathieu

* Actually it could be possible with the "Mem/StereoFromMotion" parameter, but it would not be as accurate as having depth images. |
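To make the "gen_depth" idea concrete, here is a minimal sketch of how lidar points can be projected into a monocular camera to build a sparse depth image. It is an illustration under stated assumptions, not RTAB-Map's actual implementation: the function name `project_lidar_to_depth` is hypothetical, the points are assumed to be already transformed into the camera optical frame (z forward) using the tf between lidar and camera, and a plain pinhole model without distortion is assumed.

```python
def project_lidar_to_depth(points_cam, fx, fy, cx, cy, width, height):
    """Project 3D points into a pinhole camera and return a sparse depth image.

    points_cam: iterable of (x, y, z) in the camera optical frame, in meters
                (assumption: the lidar-to-camera transform was already applied).
    fx, fy, cx, cy: pinhole intrinsics, as found in a camera info message.
    Returns a height x width list of lists; 0.0 means "no depth".
    """
    depth = [[0.0] * width for _ in range(height)]
    for x, y, z in points_cam:
        if z <= 0.0:
            continue  # point is behind the camera
        u = int(round(fx * x / z + cx))
        v = int(round(fy * y / z + cy))
        if 0 <= u < width and 0 <= v < height:
            # keep the closest point when several fall on the same pixel
            if depth[v][u] == 0.0 or z < depth[v][u]:
                depth[v][u] = z
    return depth


if __name__ == "__main__":
    pts = [(0.0, 0.0, 5.0), (0.0, 0.0, 3.0), (1.0, 0.0, -2.0)]
    img = project_lidar_to_depth(pts, 100.0, 100.0, 2.0, 2.0, 5, 5)
    print(img[2][2])
```

Because each camera gets its own depth image registered to its own intrinsics, this is also why the transforms between the lidar and every camera frame need to be available.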

|

Hi,

thanks for the reply. In this dataset the velodyne is published as a PointCloud2 instead of a LaserScan. Is there any way to subscribe directly to PointCloud2? |

|

Administrator

|

Hi,

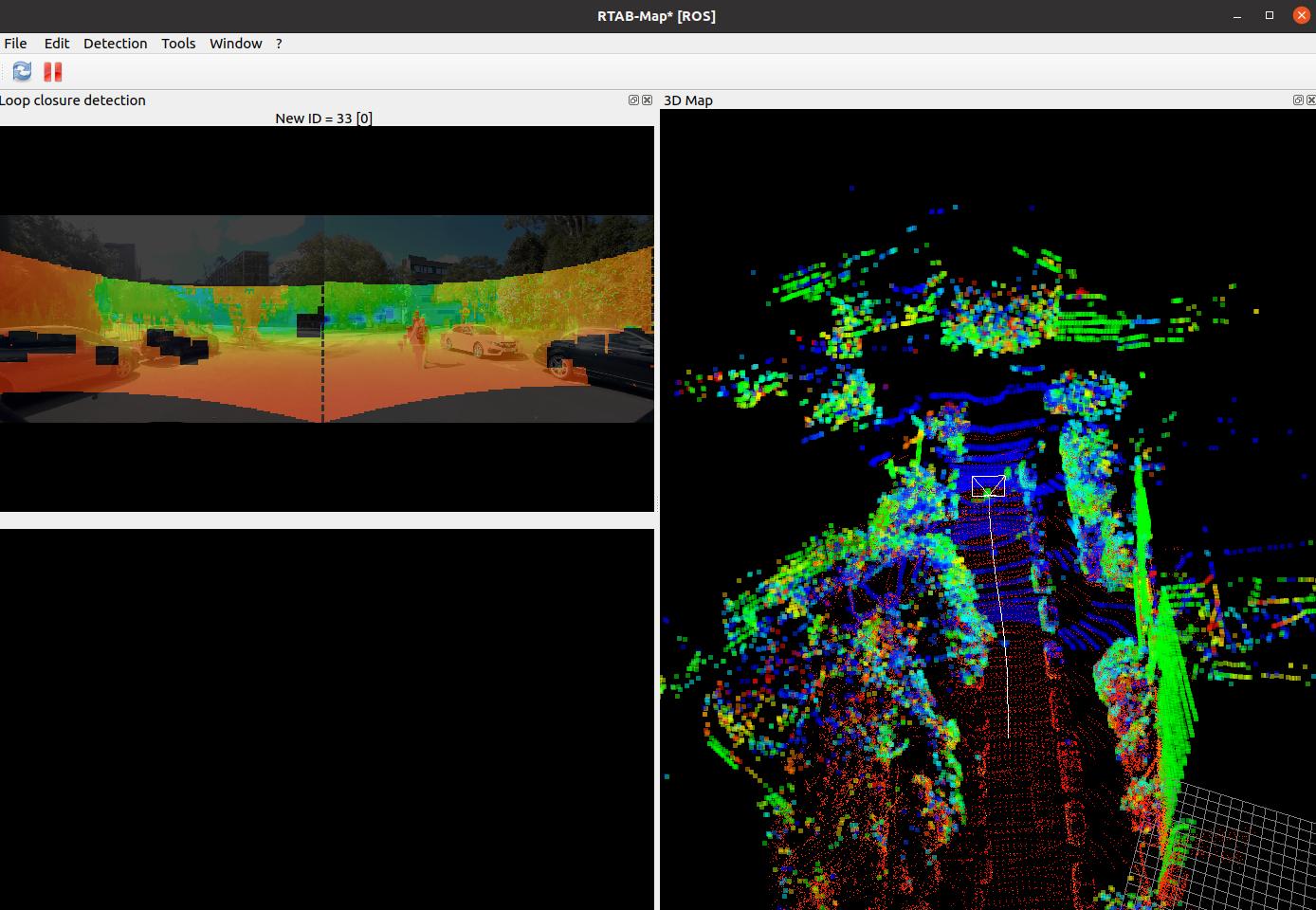

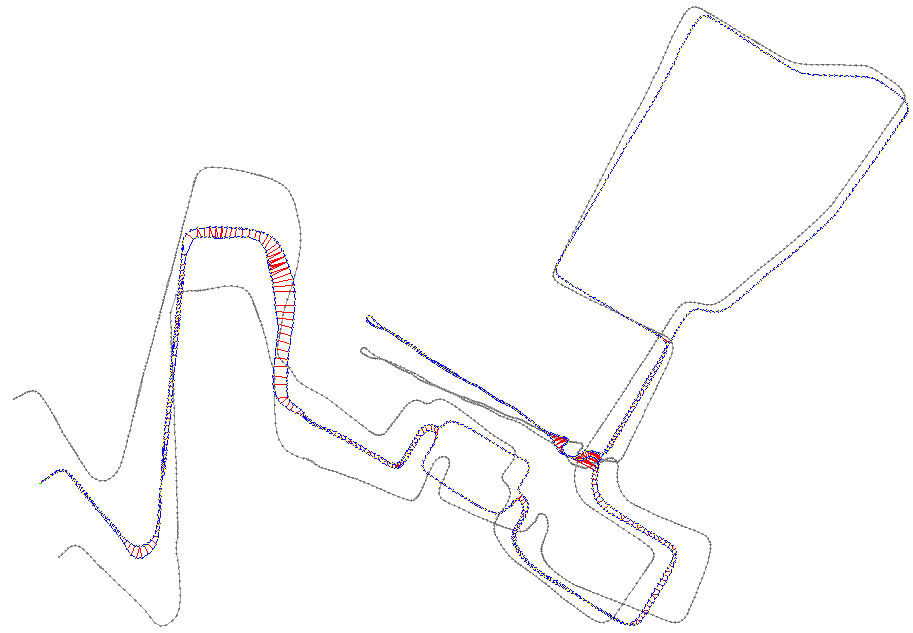

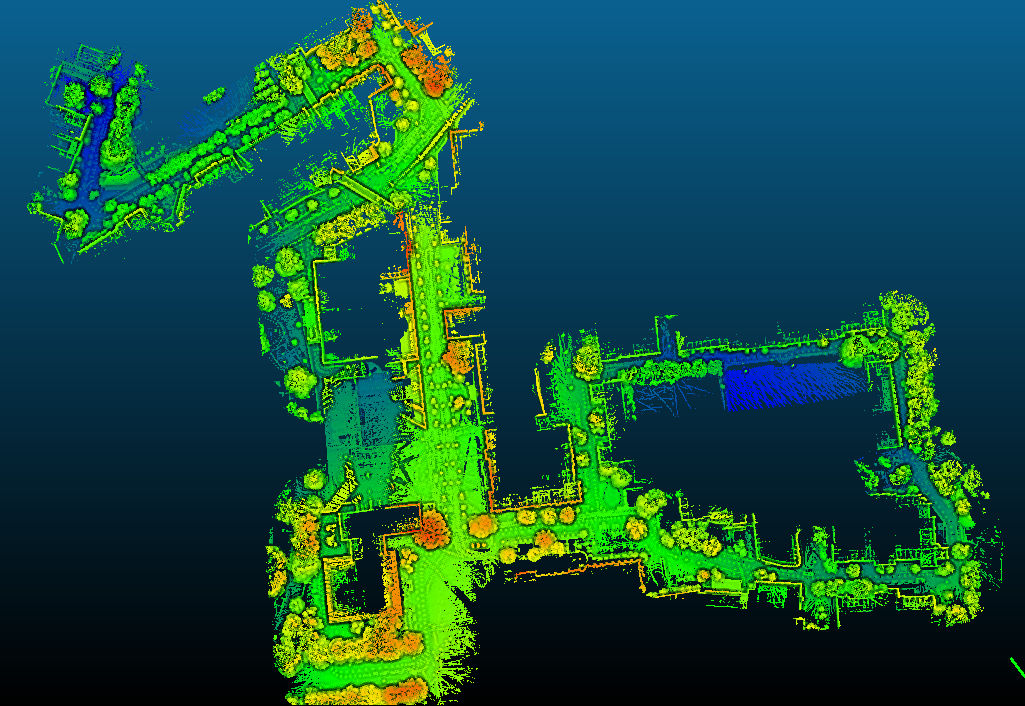

For your question, rtabmap does support PointCloud2 topics. I was playing with the first sequence of the uSyd Campus dataset and came up with this new launch file: usyd_dataset.launch. To make it work properly, you should build the latest rtabmap and rtabmap_ros repos. You will also see in the launch file how to subscribe to 2 mono cameras and project the lidar into them to create depth images.

I also republish /vn100/odometry as TF to save it as "ground truth", just for comparison purposes. Here the blue path is RTAB-Map (with red links as loop closures) and the gray one is the vn100 odometry.

Exported assembled point cloud after mapping:

As there is no actual "visual loop closure" possible in the first sequence, I could not test whether depth generation is good enough. I'll try later with another sequence and see how good the visual loop closures would be.

cheers, Mathieu |

|

Thanks a lot Mathieu, you're the best.

Cheers, Matteo |

|

Hi Mathieu,

I cannot replicate the map with the loop closures using the launch file you added. It seems the loop closures are disabled, while the map in your reply does have loop closures. How did you obtain them? Cheers, Matteo |

|

Administrator

|

The issue with this dataset is that there are no visual loop closures that can be detected (the car never looks in the same direction when traversing the same areas again). I tuned the parameters so that odometry doesn't drift too much and the big loop can be detected by proximity detection alone. Proximity detection only works well if the robot actually loops relatively close to a previously visited location. Make sure you have the latest rtabmap/rtabmap_ros versions because I had to fix an issue to make it work. I'll try to make a video later to show what you should see. cheers, Mathieu |
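As a rough illustration of why proximity detection depends on low odometry drift, the sketch below looks for older graph nodes that lie within a radius of the current pose. It is a simplified stand-in, not RTAB-Map's algorithm: the function name `proximity_candidates` and the `radius` and `min_id_gap` arguments are hypothetical, and node ids are simply assumed to be list indices.

```python
import math


def proximity_candidates(poses, radius, min_id_gap):
    """Return (node_id, distance) pairs of old nodes close to the latest pose.

    poses: list of (x, y, z) positions in meters, indexed by node id
           (assumption: the last entry is the current pose).
    radius: maximum 3D distance in meters to accept a candidate.
    min_id_gap: ignore nodes that are this recent or newer, since they are
                already linked to the current pose by odometry.
    """
    current_id = len(poses) - 1
    current = poses[current_id]
    candidates = []
    for node_id, pose in enumerate(poses[:current_id]):
        if current_id - node_id < min_id_gap:
            continue
        d = math.dist(pose, current)
        if d <= radius:
            candidates.append((node_id, d))
    # closest candidates first
    return sorted(candidates, key=lambda c: c[1])


if __name__ == "__main__":
    loop = [(0, 0, 0), (5, 0, 0), (10, 0, 0), (10, 5, 0), (5, 5, 0), (0.5, 0.5, 0)]
    print(proximity_candidates(loop, radius=1.0, min_id_gap=3))
    # Same trajectory, but with 3 m of accumulated drift in Z at the end:
    drifted = loop[:-1] + [(0.5, 0.5, 3.0)]
    print(proximity_candidates(drifted, radius=1.0, min_id_gap=3))
```

In the second call the drifted pose falls outside the search radius, so no candidate is returned even though the vehicle physically came back to the start.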

|

Administrator

|

Here is a video: https://youtu.be/nFMwh_tgCK0

I also slightly updated the parameters so that local loop closures can be closed from farther away; maybe it will help on your side. |

|

Hi Mathieu,

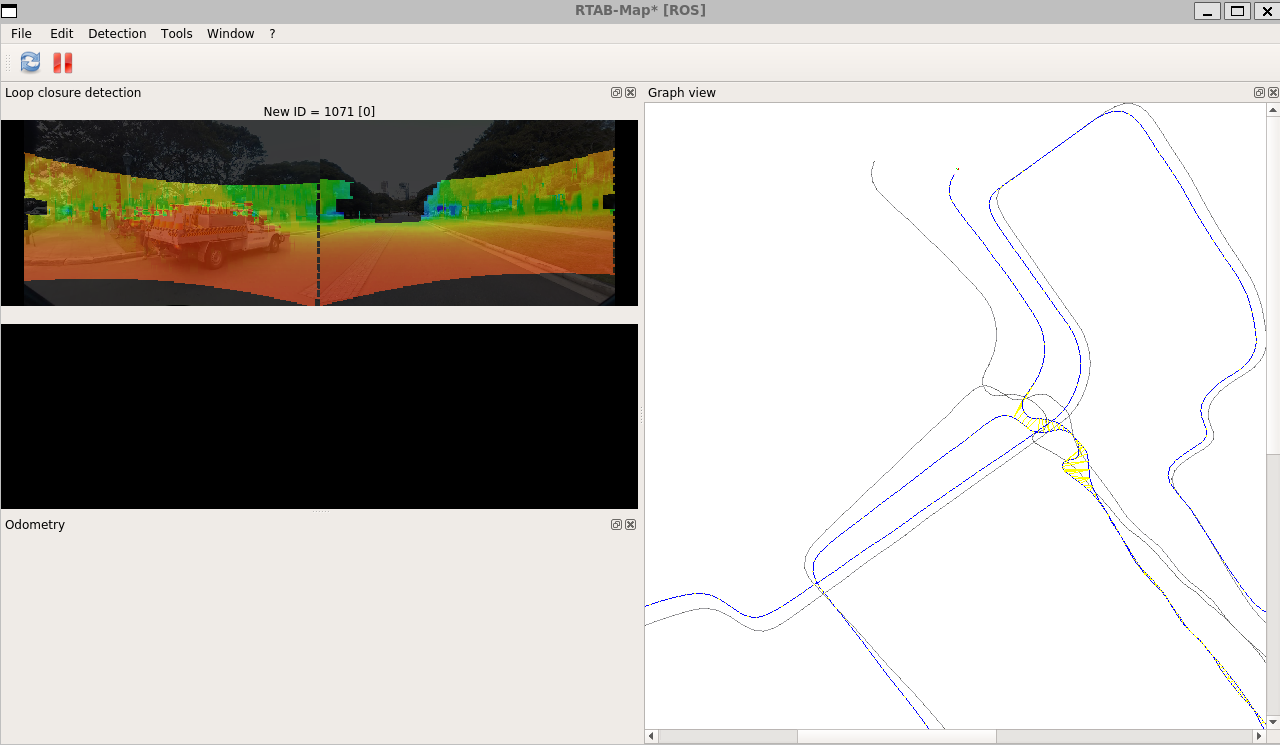

thanks for the reply. I just pulled the master branch of both rtabmap and rtabmap_ros and installed them. I modified the usyd launcher as you reported. I saw exactly what I expected, but loop closures by proximity detection are still disabled. In the image you can see a portion of the map which should contain loop closures by proximity detection as shown in your video, but they are not detected. Cheers, Matteo

|

|

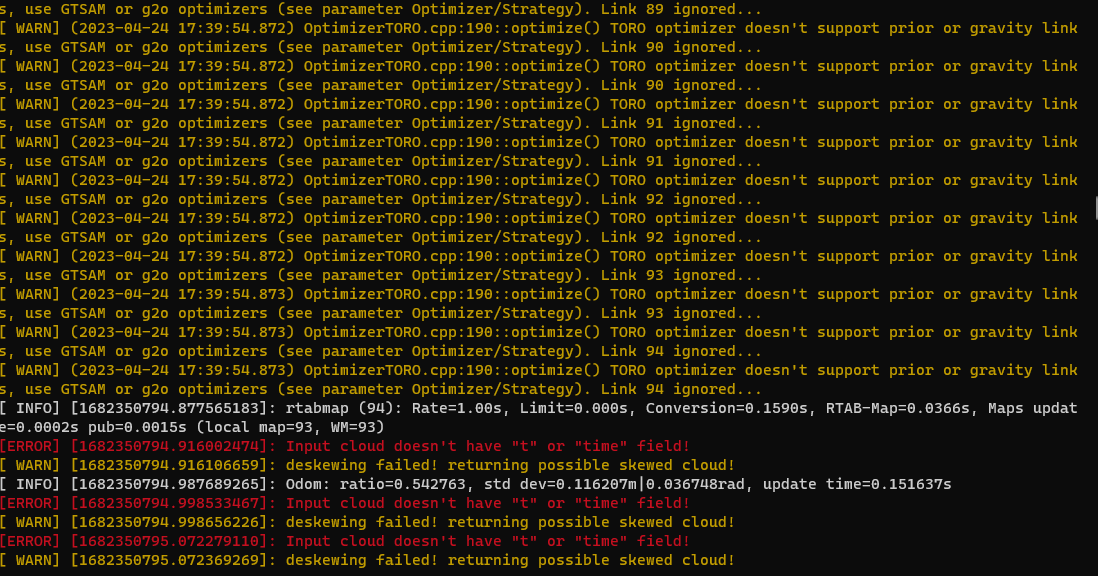

This is the output of rtabmap. Could it be the problem?

|

|

Administrator

|

Install ros-$ROS_DISTRO-libg2o and rebuild rtabmap/rtabmap_ros. This would fix your TORO errors.

The proximity detection is not disabled. If you mouse over the nodes around the first big loop closure, you may see that they are too far apart in Z in your case, as the gravity links are ignored. For the deskewing, it is strange that you have this error, because I explicitly relaunched the velodyne node to rebuild a new PointCloud2 with this field. Cheers, Mathieu |

|

Ok, I've solved the issue. libg2o was already installed, but rtabmap wasn't able to find it, so I built g2o from source and now rtabmap finds it. I rebuilt rtabmap/rtabmap_ros and now it works.

Thanks a lot. Cheers, Matteo |

|

Hi Mathieu,

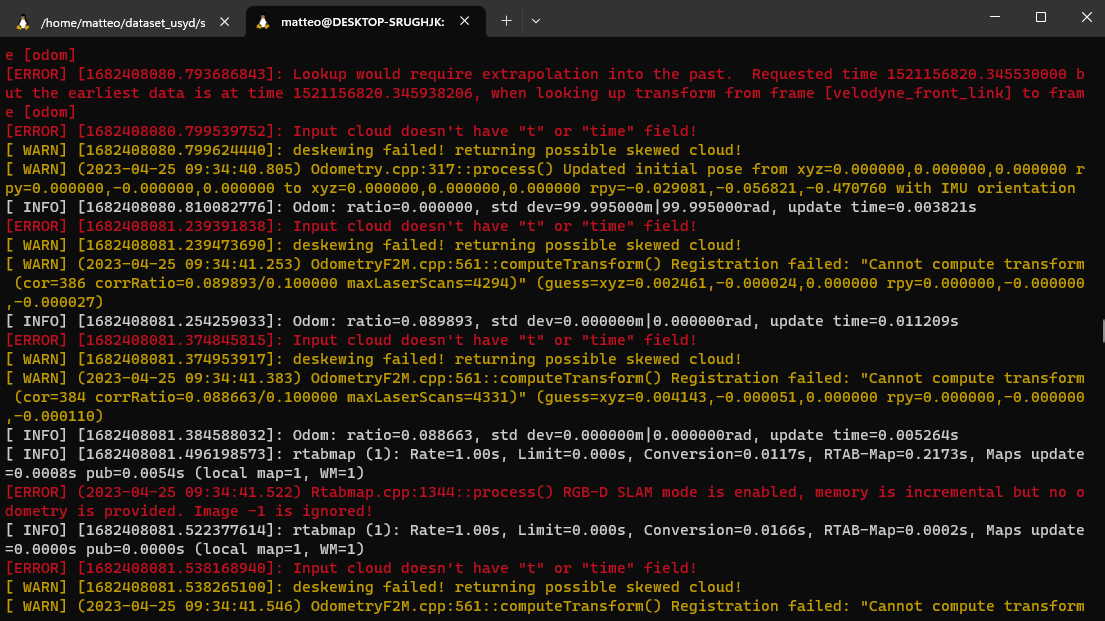

For most of the sequences the issue is now solved, but for Week2 another problem arises. This is the output of rtabmap.

|

|

Administrator

|

Hi,

I did try the second sequence, even as a second session in the same database, and it works (with those new changes to make it easier to detect visual loop closures, though another parameter tuning pass would be required to enable the memory management approach of rtabmap, as after the second sequence the algorithm is not real-time anymore).

But for your issue, it looks like the rtabmap node is not subscribed to the right point cloud topic. In the launch file, I explicitly launch a velodyne node to re-assemble the velodyne point clouds with a timestamp field, which is the point cloud that the deskewing node should use (otherwise it will fail with errors like this). For the "Cannot compute transform" warnings, it says that consecutive scans don't have enough overlap, which is strange as I didn't see this problem on my side.

Note that I updated the launch file to be easier to use with docker (which includes SURF features used in this example). So based on this how to use rtabmap docker example, you can do with this dataset (also based on docker tools for that dataset here):

    xhost +

    # Launch dataset UI (remap volume to host directory containing dataset):
    docker run -it --rm \
      --privileged \
      --net=host \
      --env="DISPLAY" \
      --env="QT_X11_NO_MITSHM=1" \
      --ipc host \
      --volume="/tmp/.X11-unix:/tmp/.X11-unix:rw" \
      -v /home/mathieu/Downloads/usyd_dataset:/data \
      --name dataset-tools \
      acfr/its-dataset-tools roslaunch dataset_playback run.launch

    # Select dataset from UI from "/data" folder inside the container
    # Check "Playback in real-time"

    # Get latest rtabmap_ros image
    docker pull introlab3it/rtabmap_ros:noetic-latest

    # Launch rtabmap example
    docker run -it --rm \
      --user $UID \
      -e ROS_HOME=/tmp/.ros \
      --network host \
      -v ~/.ros:/tmp/.ros \
      introlab3it/rtabmap_ros:noetic-latest \
      roslaunch rtabmap_examples usyd_dataset.launch \
      rtabmap_viz:=false \
      cameras:=true \
      database_path:=/tmp/.ros/rtabmap.db

    # for visualization, open local rviz, or rtabmap_viz:
    export ROS_NAMESPACE=rtabmap && rosrun rtabmap_viz rtabmap_viz

    # play bag

cheers, Mathieu |
