Navigation with Rtabmap and Stereo Camera

12

12

|

Administrator

|

I would check what is the frame rate of /obstacles_cloud and /ground_cloud.

I would not use cloud_map in obstacle layer. The obstacle layer expects local data at high frame rate. |

|

In reply to this post by imran05

Hi,Thank you for the reply.

I have a follow-up question regarding navigation with RTAB-Map. I created a map using RTAB-Map and am now using move_base within that map. However, I’m facing two issues: 1. Loop Closure Affecting 2D Pose: When I launch RTAB-Map in localization mode, it initially detects a loop closure — and while the loop closure itself is correct, the robot's position appears incorrect in the 2D map within RViz. I try to correct it using the "2D Pose Estimate" tool in RViz, but the robot doesn't align properly, as shown in the attached video. "https://drive.google.com/file/d/1IdM55D12OInuaL0xtdmMbr7_ba8Kakl8/view?usp=sharing" 2. Robot Drifting from Center in Orchard Rows: My goal is to navigate through the center of each orchard row. The planner initially generates a path through the center, but over time, the robot drifts toward the left or right side, getting closer to the trees. This seems to happen because the map keeps shifting its position in RViz — likely due to loop closures. As a result, the planner fails to update correctly, since the map frame itself is moving. "https://drive.google.com/file/d/1HGq5mXDCx0bjKTrNG_ipYMeGRCxlSbXW/view?usp=sharing" I'm attaching videos demonstrating both problems. Could you please review them and let me know what might be causing these issues? Thank you! |

|

Administrator

|

Hi,

1. It seems re-localizing just after you send a pose. If the robot is not really there, then it would be a bad re-localization. 2. It is hard to see if A) the robot was still exactly on the middle in reality or B) was in fact leaning to the left side like we see in rviz. For A, we see after 44 sec in the video it doesn't localize anymore, so if it appears drifting in the map, that would be visual odometry drift. For B, the localization is fine, the issue is that the robot is not followning the path. EDIT: After a second look, I see that the whole map is moving and the path is not updated, is RGBD/OptimizeFromGraphEnd enabled? If so, I suggest to disable it so that the map frame (and the occupancy grip) doesn't move on re-localization (or when you send a new initial pose). Nit: if not already, make sure the global frame in rviz's global option is map frame to make sure to visualize correctly everything. |

|

This post was updated on .

Hello, thank you for the reply.

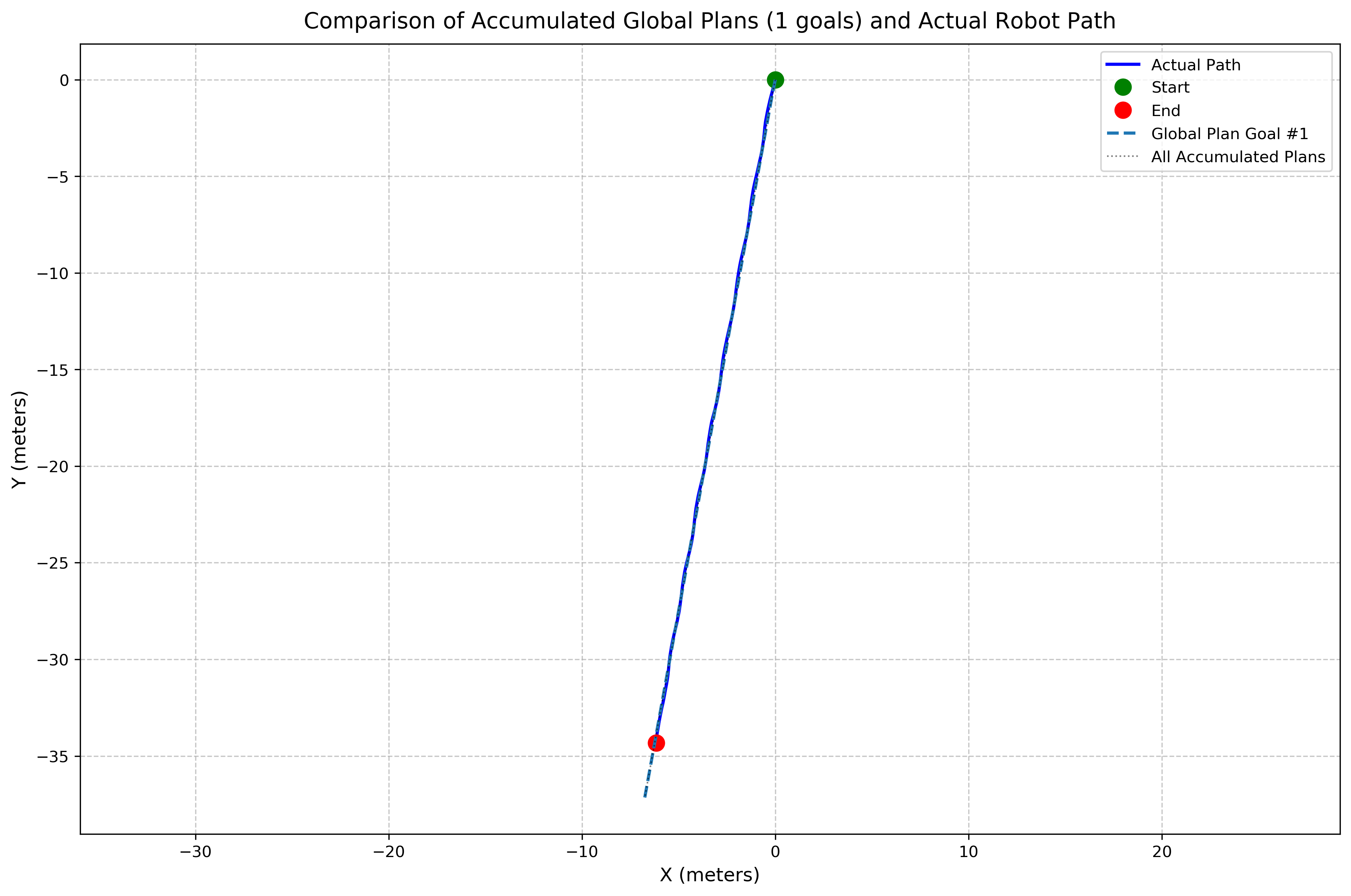

1) Yes, exactly. In reality, my robot is looking straight ahead, which causes poor relocalization, as you mentioned. How can I improve this? 2) Additionally, my robot tends to veer left after some time, as shown in the attached video "https://drive.google.com/file/d/16x1lQlPwKa_9gYmvgXR8w4uxUA0Apnrt/view?usp=sharing". However, I am confident that it follows the path correctly because when I compare the planned path with the actual path on the graph after completing the line, they are almost identical. The dotted line is the planed path and the blue solid line is the actual path followed.  Yes, I am using "RGBD/OptimizeFromGraphEnd=true". I will disable this and test again. Thank you! If you have any other suggestions, I would appreciate it." Let me know if there's anything else you'd like to adjust! |

|

Administrator

|

Looking straight ahead is not that bad, it is just that in this kind of environment with a lot of things (branches, leaves) overlapping at different depths, it can increase the variation of visual features extracted, then reduce re-localization recall. You are also outdoor, which would add some illumination challenge at the same time. I see in the occupancy grid we can see quite easily the "corridor" with obstacles on both side. There could be a geometric local re-localization possible by generating a fake laser scan from multiple consecutive frames. That laser scan could be used either with rtabmap's proximity detection (with Reg/Strategy=1) or by combining AMCL. Using a laser_assembler or point_cloud_assembler node, a local scan surrounding the robot could be created while the robot is moving forward. That kind of laser scan would be great in that kind of environment to keep the robot perfectly aligned between the "walls".

The problem with RGBD/OptimizeFromGraphEnd=true is that if you don't replan a new path after every re-localization, the actual path will drift from the map, causing the robot to drive close to a side. Probably this is what you were observing. cheers, Mathieu |

|

Hi, thank you for your response. I have some questions regarding your suggestion:

1)Since I'm using a stereo camera, can I utilize the fake laser scan data using depthimage_to_laserscan, set Reg/Strategy=1, and then create a new map? 2)Alternatively, can I use the existing map created with Reg/Strategy=0, then generate fake laser scan data using depthimage_to_laserscan, and use AMCL for localization? In this case, I would also use laser_assembler to create a local scan around the robot for better localization. 3)Or, can I continue using RTAB-Map for localization by switching to Reg/Strategy=1, and avoid using AMCL entirely? With this setup, I would use a laser scanner (or fake scan) and laser_assembler to create a local scan around the robot to improve re-localization. Could you please guide me on how to proceed with these approaches? Thank You |

|

Administrator

|

Hi,

1) Possible, but it would better if the laser scans are accumulated for some distance before sending the resulting point cloud to rtabmap. Reg/Strategy=1 with only single-frame forward fake laser scan won't work very well. 2) You can try re-using the current map with AMCL and fake laser scan directly to see if local localization is possible. 3) See point 1. For the 1) and 3), I created a new example: turtlebot3_sim_rgbd_fake_scan_demo.launch.py # Make sure to update the URDF file like in the comment of that launch file, then: ros2 launch rtabmap_demos turtlebot3_sim_rgbd_fake_scan_demo.launch.py  Basically, we convert camera's depth image to a laser scan, then convert the laser scan to a point cloud, then assemble that point cloud in a circular buffer. The resulting assembled cloud is sent to rtabmap node like a laser scan. In the GIF above, the "orange" points are the assembled scans. Note however that in simulation the depth is perfect, I would assume that the fake assembled scan would be a lot more noisier with stereo depth in your setting. "Icp/" parameters may need to be tuned based on the data you will get. cheers, Mathieu |

|

Hello,

Thank You for your detailed reply, I have tried AMCL localization - my fake laser scan data from camera depth images doesn't match the map properly. I've tried two approaches (see attached videos): Depth image → laser scan → point cloud → assembled point cloud → laser scan Direct point cloud assembly → laserscan https://drive.google.com/drive/folders/1PGirPTJwJ4AvxNveiF4BGSq76IBu9X4U?usp=sharing I have following questions: 1) Is this scan data quality sufficient for AMCL localization? 2) How can I filter out ground points that are dominating my point cloud? 3) Which approach works better for localization? 4) Any tuning suggestions for better map matching in pointcloud to laserscan most of pointclouds are from ground so how i can fix that? |

|

Administrator

|

This one looks bad:

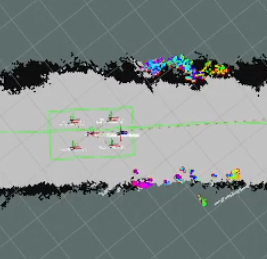

This one is close, though the FOV is so small that AMCL will have a hard time to correctly sample the particles:  In my example above, you can see the orange scan is the accumulated camera's scans over some period. So when the robot is moving, we can have scan points almost at 270 deg, which would help to better localize with amcl. To filter the ground, if you use depthimage_to_laserscan with only use the middle row and that the camera is parallel to ground, it should not add the ground (assuming relatively flat ground). If the middle row doesn't have enough points and/or camera is slightly tilted, you may be able to use pointcloud_to_laserscan instead, which can crop in z (based on base_link) the points from the stereo point cloud to generate a scan with objects over the ground. |

«

Return to Official RTAB-Map Forum

|

1 view|%1 views

| Free forum by Nabble | Edit this page |