Obstacles are drifting while running SLAM with RealSense D435i and Turtlebot2

Obstacles are drifting while running SLAM with RealSense D435i and Turtlebot2

|

Hello! I'm trying to create the indoor map using RTAB-Map with Intel RealSense D435i and Turtlebot2. I've tried to create maps in different locations, but the problem is that obstacles are drifting on map. Also, I've tried to change Kp/MaxFeatures parameter from 500 to 1000, 10000, 1000000. I launch RTAB-Map via following command:

ros2 launch rtabmap_launch rtabmap.launch.py visual_odometry:=false frame_id:=base_footprint \ args:="-d" use_sim_time:=false rgb_topic:=/camera/color/image_raw \ depth_topic:=/camera/depth/image_rect_raw camera_info_topic:=/camera/color/camera_info \ odom_topic:=/odom approx_sync:=true qos:=2 rtabmap_viz:=false ros2 launch realsense2_camera rs_launch.py

|

|

Administrator

|

Re: Obstacles are drifting while running SLAM with RealSense D435i and Turtlebot2

|

I use aligned depth via remappings. Also, I've found that without wheel odometry my setup works fine.

|

|

Administrator

|

If you use visual_odometry=true and want to keep wheel odometry, you can set odom_topic="vo" and odom_guess_frame_id="odom" (assuming wheel odometry is using "odom" frame) to not break the tree.

|

Re: Obstacles are drifting while running SLAM with RealSense D435i and Turtlebot2

|

Should I use /odom topic for wheel odometry if visual odometry is being published into /odom? I've found that while rotating robot cannot localize itself and nav2 is doing strange moving at this moment. I think that wheel odometry can help to solve this problem.

|

|

Administrator

|

They should not be published on same topic name. Wheel odometry would use /odom topic name and "odom" TF frame. Visual odometry should use differnt topic name and TF, like "/vo" topic name and "vo" TF frame. With guess_frame_id=odom, if visual odometry is lost, the vo->odom frame will still be published. nav2 can then use vo as base frame.

|

Re: Obstacles are drifting while running SLAM with RealSense D435i and Turtlebot2

|

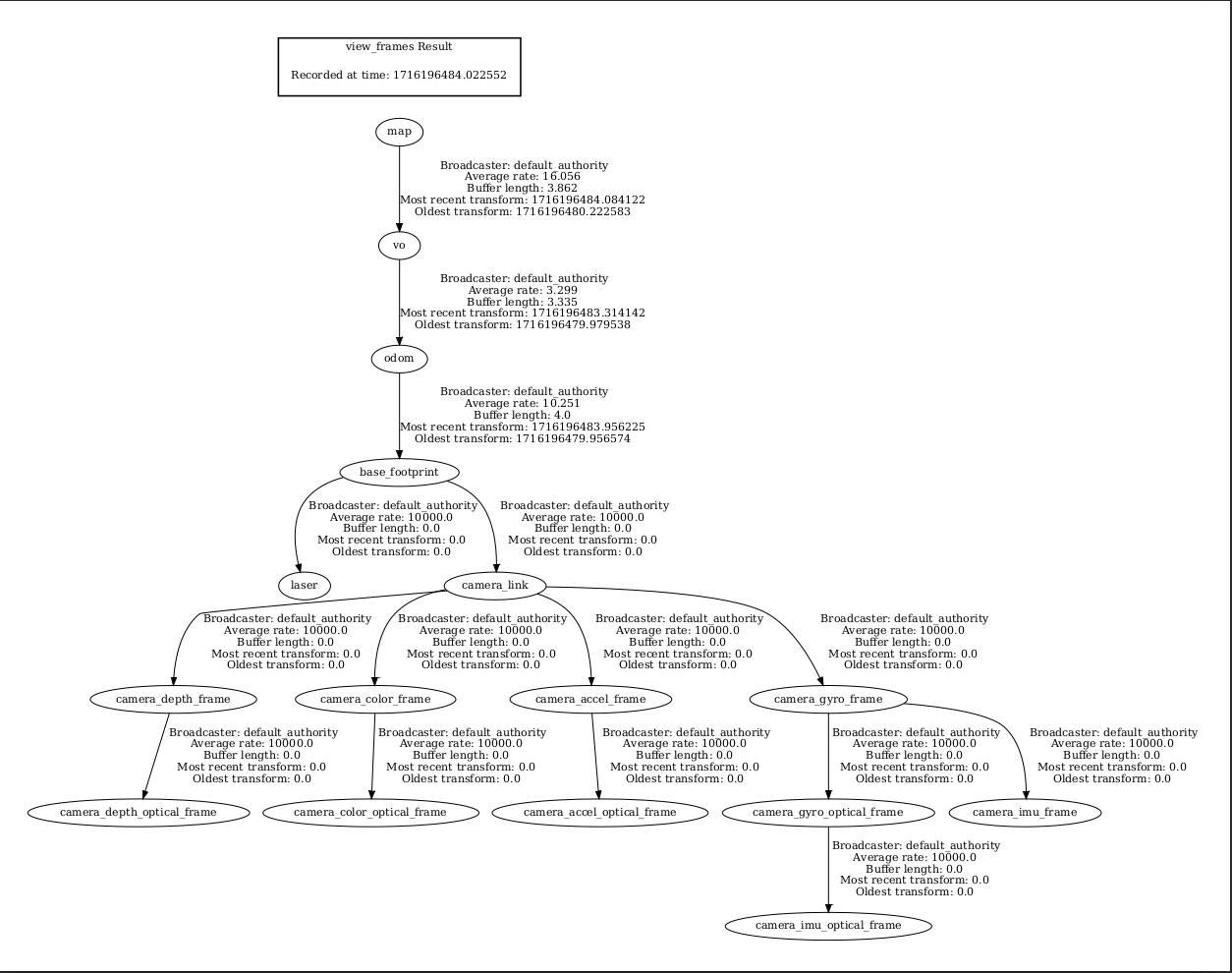

I've tried to use both odom and vo and it works, but now my 2D lidar (which is not used in rtabmap, but used in nav2) cannot be transformed because of following error:

[planner_server-3] [INFO] [1716196816.385999726] [global_costmap.global_costmap]: Message Filter dropping message: frame 'laser' at time 1716196815.963 for reason 'the timestamp on the message is earlier than all the data in the transform cache' What can be the problem? I use base_footprint as base_frame_id. Tf data is from static_transform_publisher (time = 0.0) and all msgs from lidar are later than 0.0 time.

|

|

Administrator

|

Hi,

The time 1716196815.963 of lidar is ~330 sec in future based on the timestamps in your TF tree, if you created the tf tree at the same time than the warning. Well if it is a tf tree your saved 5 min earlier, then maybe it is not a lidar sync issue with host timestamps. It is inconvenient that the warning message doesn't show what is the latest TF stamp in the buffer, that would help to know how off the stamps are. In particular, it seems the transform_tolerance is 0.3 sec, and based on Tf tree, the vo delay is >600 ms, that could explain why global costmap (if using "map" frame) is failing. You could increase transform_tolerance to 1 sec to compare. A vo delay of 600 ms is very high, you may compare the "ros2 topic delay" of vo output odometry topic and the input camera topics to see from where most delay is coming from. cheers, Mathieu |

Re: Obstacles are drifting while running SLAM with RealSense D435i and Turtlebot2

|

Hi, thank you for your explanation!

VO delay is high because of dynamic obstacles filtration in slam frontend. I'm trying to use segmentation masks to filter keypoints and depth frames. And it works, but my CPU is so slow for this approach, so I have a big delay. If I directly use rgb and depth from camera in odometry node and use filtered rgb and depth in rtabmap instead of using filtered keypoints and depth in both odometry node and slam node, what will be with odometry quality in dynamic environments? Now rtabmap odometry node is using only static keypoints and depth values. I've checked lidar msgs and they have real host time. I will try to increase tf_tolerance. |

|

Administrator

|

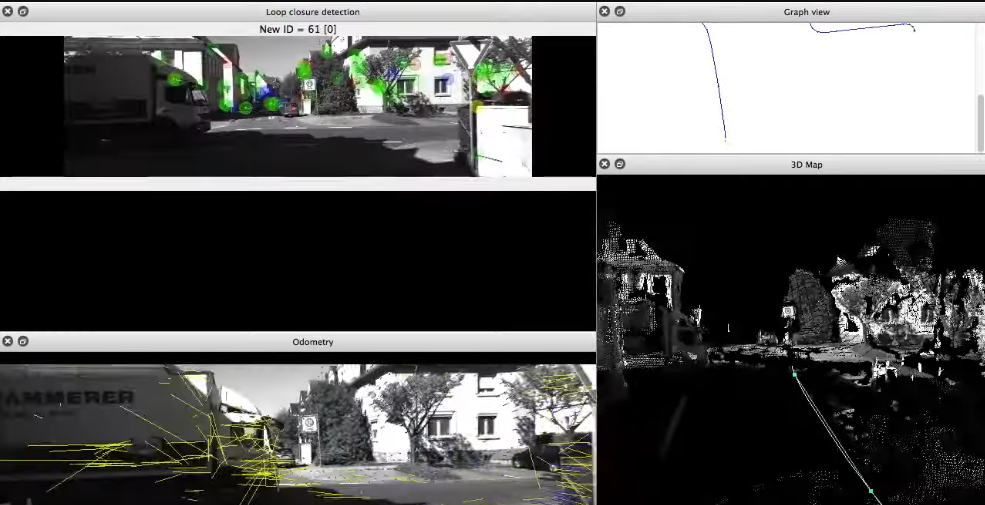

It may work. If most features in the image tally with the expected motion (assuming constant velocity model, or motion estimated using guess_frame_id), it may implicitly filter the dynamic objects. A visual example of this can be see around 1:02 in this video (some cars are taking large part of the field of view, but they are implicitly ignored as they don't match the current motion of the vehicle).  However, if most features extracted in the image are from moving objects, there is no guarantee that odometry won't start to follow them (drifting!) at some point. |

Re: Obstacles are drifting while running SLAM with RealSense D435i and Turtlebot2

|

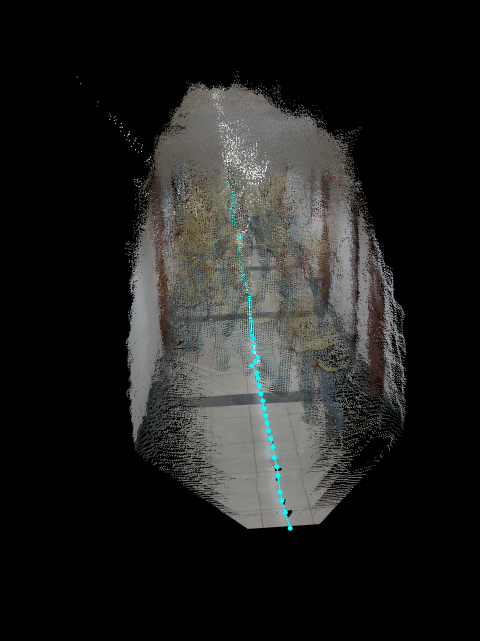

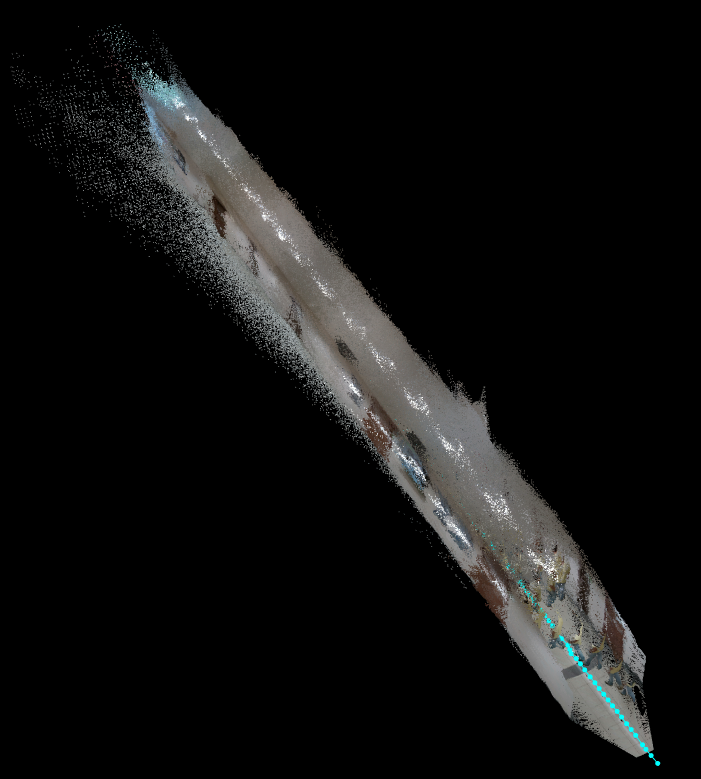

Hi! I've tried to use raw rgb and depth in dynamic environments and now I think that it's better to use filtered rgb and depth.

Now I have a problem with visualization. I have a map with dynamic obstacle, but I can't show it on video because of roof. Is it possible to set height threshold in rtabmap_viz?

|

|

Administrator

|

Hi,

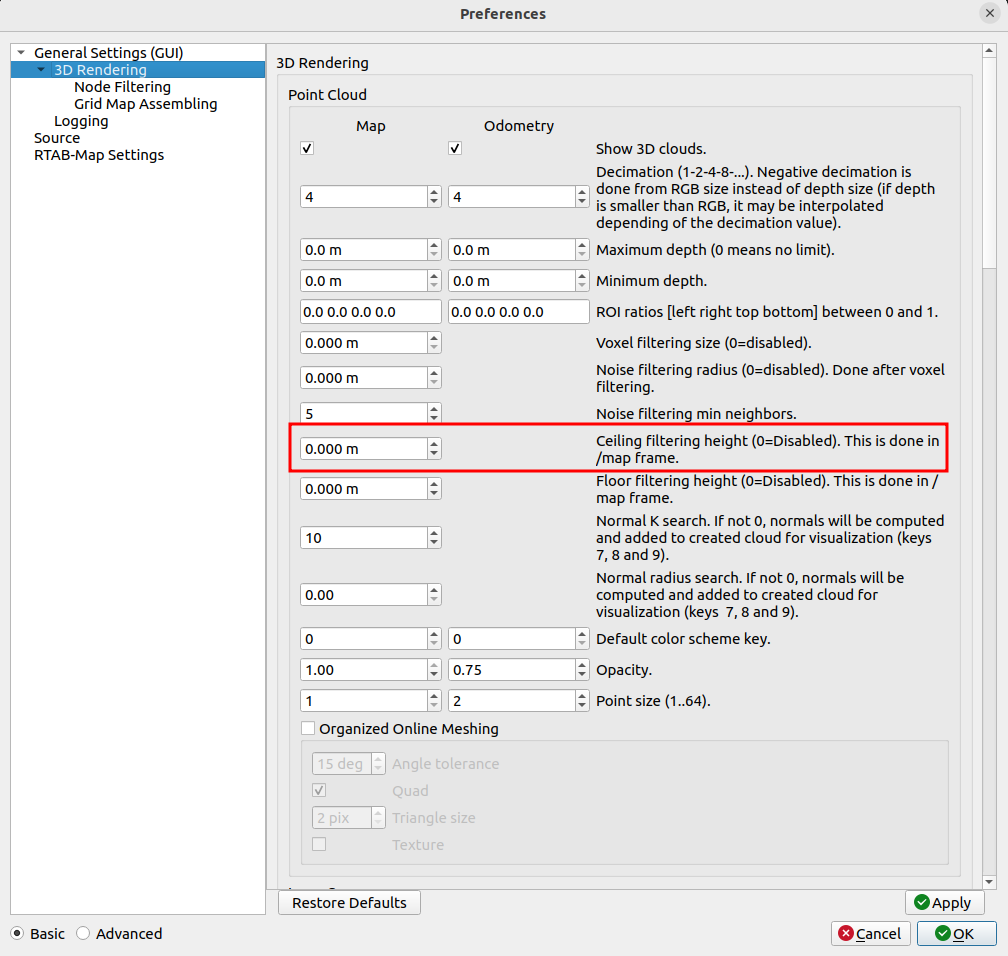

You can use this parameter:  In rviz with MapCloud plugin, there should be a "Fitler ceiling" parameter too. cheers, Mathieu |

Re: Obstacles are drifting while running SLAM with RealSense D435i and Turtlebot2

|

Thank you so much for your help! Now I've finished my work and and got following results while mapping in dynamic environment: https://drive.google.com/file/d/1RWF-6xkWC4Z4CqYm6fIkFk-V6BxuQVaz/view?usp=sharing

|

«

Return to Official RTAB-Map Forum

|

1 view|%1 views

| Free forum by Nabble | Edit this page |