RGB-D SLAM example on ROS and Raspberry Pi 3

|

Administrator

|

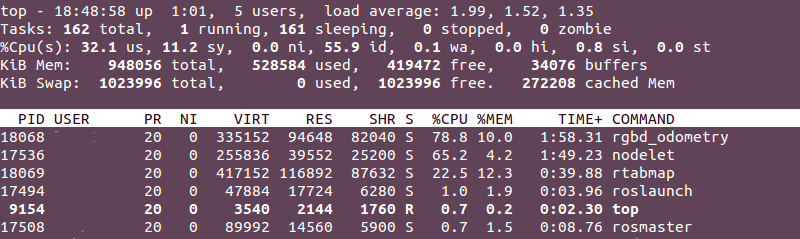

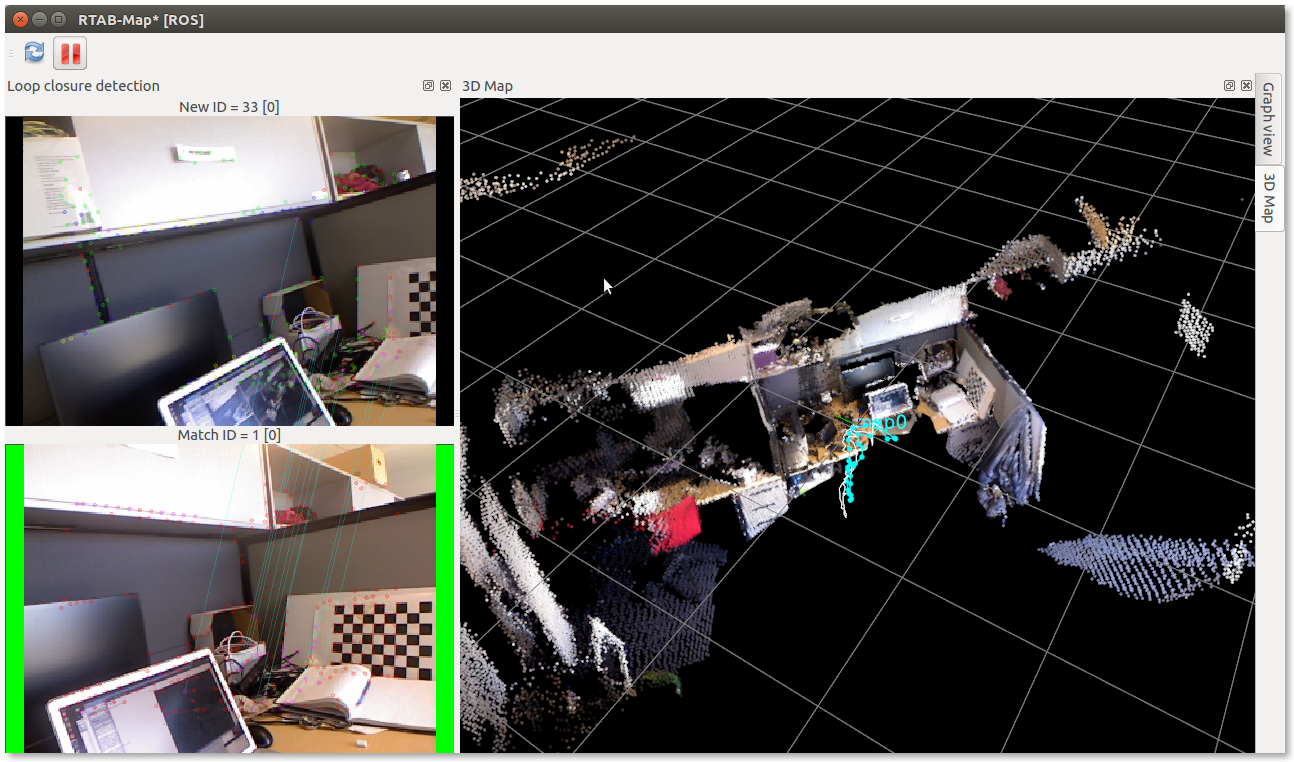

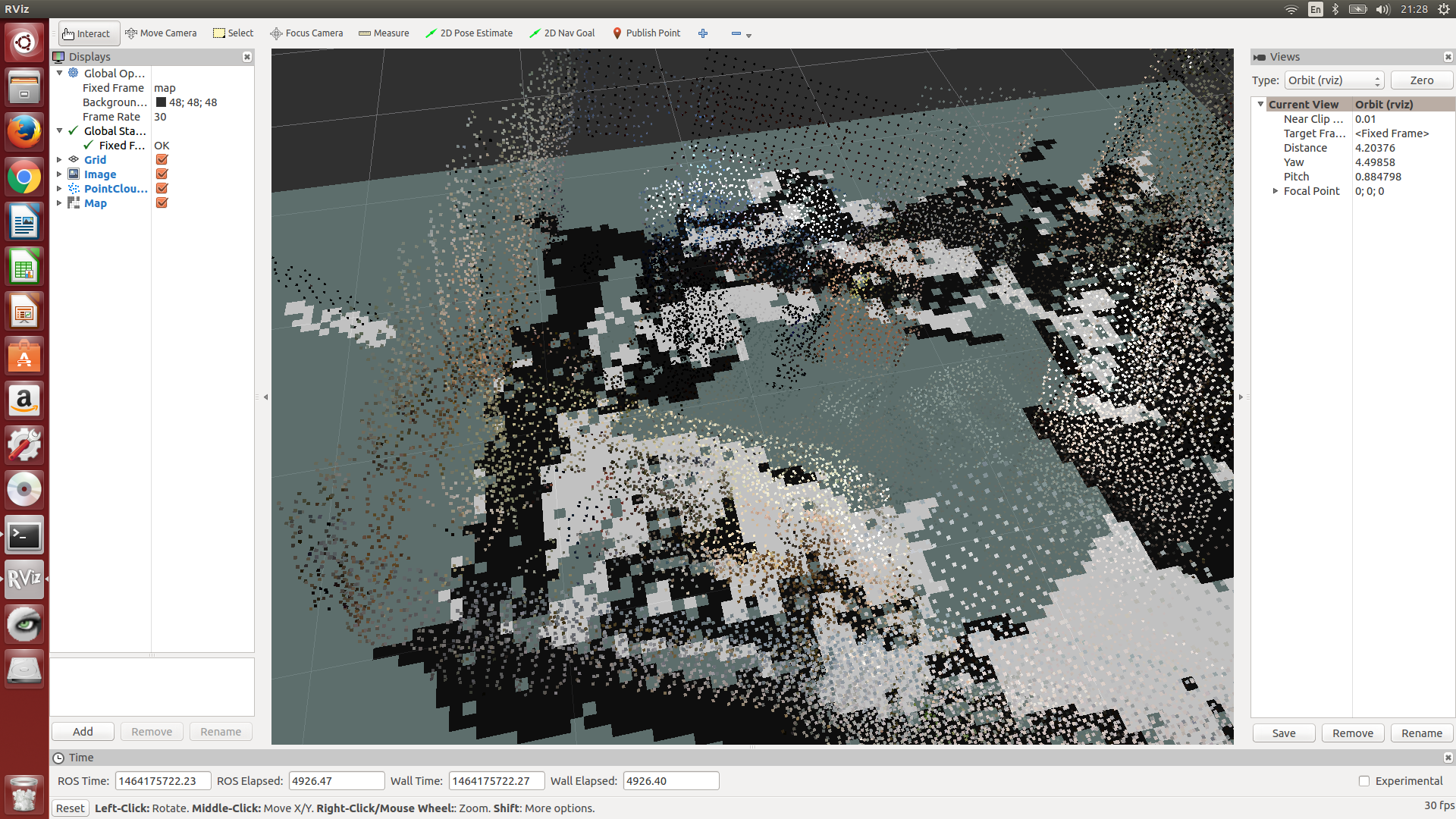

Setup

In this example, I assume that the IP of the RPi is 192.168.0.3 and that the IP of the client computer is 192.168.0.2. You can get the IPs with "$ ifconfig". The ROS master will be running on the RPi. Mapping will be done on the RPi, and only visualization on the client computer. A Kinect v1 is connected to the RPi.

Raspberry Pi

    $ export ROS_IP=192.168.0.3
    $ roslaunch freenect_launch freenect.launch depth_registration:=true data_skip:=2
    $ roslaunch rtabmap_ros rgbd_mapping.launch rtabmap_args:="--delete_db_on_start --Vis/MaxFeatures 500 --Mem/ImagePreDecimation 2 --Mem/ImagePostDecimation 2 --Kp/DetectorStrategy 6 --OdomF2M/MaxSize 1000 --Odom/ImageDecimation 2" rtabmapviz:=false

To increase the odometry frame rate, input images are decimated by 2. This gives around 175 ms per frame for odometry. At 175 ms per frame, we don't need 30 Hz Kinect frames, so data_skip is set to 2 to save some computation time. Here is the CPU usage on the RPi ("nodelet" is freenect_launch):

Client computer

    $ export ROS_MASTER_URI=http://192.168.0.3:11311
    $ export ROS_IP=192.168.0.2
    $ ROS_NAMESPACE=rtabmap rosrun rtabmap_ros rtabmapviz _subscribe_odom_info:=false _frame_id:=camera_link

RVIZ could also be used.

cheers
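The frame-rate reasoning in the setup post (175 ms per odometry frame versus a 30 Hz Kinect) can be checked with a little arithmetic. The sketch below is only an illustration. It assumes that data_skip means "skip N frames for every frame processed", which is how the driver option is usually described, and the variable names are made up for the example.

```shell
#!/bin/sh
# Numbers taken from the post: odometry cost per frame and Kinect frame rate.
odom_ms_per_frame=175
kinect_hz=30
data_skip=2

# Odometry throughput in Hz (awk is used because sh only has integer math).
odom_hz=$(LC_ALL=C awk -v ms="$odom_ms_per_frame" 'BEGIN { printf "%.1f", 1000 / ms }')

# ASSUMPTION: data_skip=N means "skip N frames for every frame processed",
# so the driver delivers kinect_hz / (N + 1) frames per second.
camera_hz=$(( kinect_hz / (data_skip + 1) ))

echo "odometry can process about ${odom_hz} Hz"
echo "camera delivers about ${camera_hz} Hz with data_skip=${data_skip}"
```

Under that assumption the camera still delivers frames faster than odometry can consume them, which is consistent with the post's point that the full 30 Hz stream is not needed.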

Re: Rtabmap real-time problem on Raspberry Pi2

|

Thank you so much for the help, matlabbe :) ! I managed to get it to work, but the fps wasn't enough for me, so I think I'm going to switch to an NVIDIA Jetson TK1 because it is more powerful and has a good GPU with CUDA.

|

In reply to this post by matlabbe

Super helpful. Thanks so much for the step-by-step. Unfortunately, while I can get a successful connection between my RPi and the client, I can't see anything in my rtabmapviz window.

I am using a Raspberry Pi 2 Model B running Lubuntu 14.04, connected to an Asus Xtion Pro Live with OpenNI2. My client computer is running Ubuntu 14.04. I've confirmed the connection, since I can run rviz on the client computer and receive visual data from the camera. However, when I try to run rtabmapviz, the screen is completely empty. I've followed your instructions carefully, changing only freenect to openni2 where necessary, and I feel this may be the source of the problem. How should I modify the commands for an OpenNI2 camera? Regards

|

|

Administrator

|

Hi,

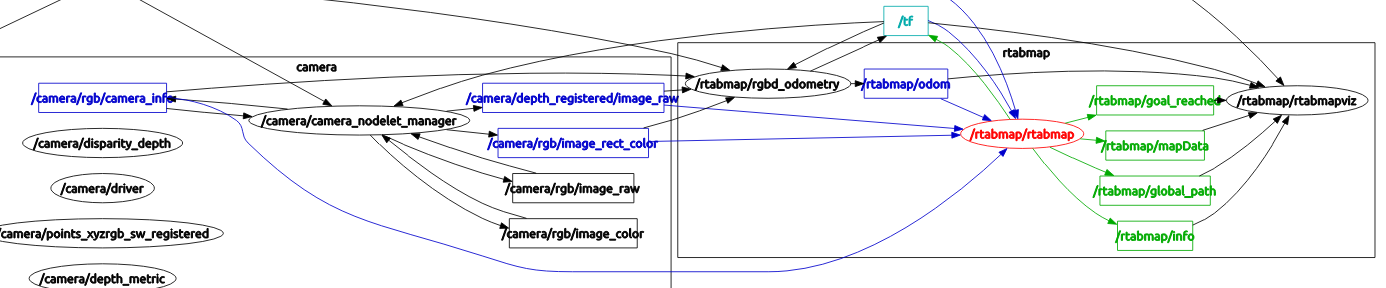

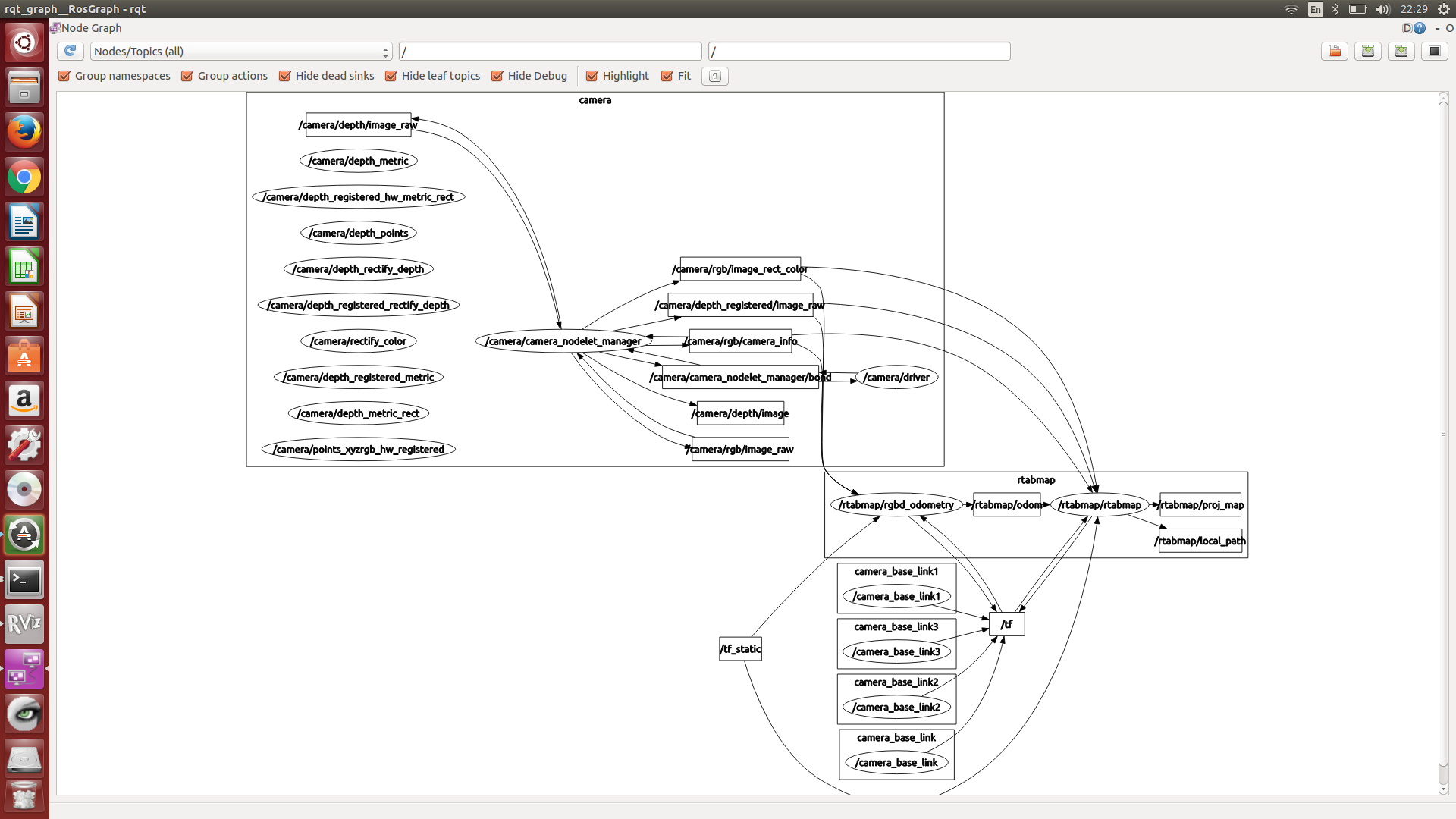

Make sure rtabmapviz is started in the same namespace as rtabmap (see above), so that topics are correctly connected. You can open rqt_graph to compare with the graph shown above. The most important thing is that the /rtabmap/mapData topic is connected and published:

    $ rostopic hz /rtabmap/mapData

If it is not published, look for warnings in the RPi terminal. For openni2, the command is similar to freenect:

    $ roslaunch openni2_launch openni2.launch depth_registration:=true

cheers
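As a first pass before running rostopic hz, it can help to confirm that the expected topics exist at all in the rtabmap namespace. The helper below is a hypothetical sketch (missing_topics is not part of ROS). It reads a topic list on stdin, one name per line as `rostopic list` prints it, and reports which required topics are absent. The sample list is illustrative input, not real output.

```shell
#!/bin/sh
# missing_topics: read a topic list on stdin (one name per line, as printed
# by `rostopic list`) and print every required topic that is absent.
missing_topics() {
    list="$(cat)"
    for topic in "$@"; do
        printf '%s\n' "$list" | grep -qxF -- "$topic" || printf '%s\n' "$topic"
    done
}

# Illustrative input only; on a real system pipe in `rostopic list` instead.
sample_list="/rtabmap/odom
/rtabmap/cloud_map"

missing="$(printf '%s\n' "$sample_list" | missing_topics /rtabmap/mapData /rtabmap/odom)"
echo "missing: $missing"
```

On a live system the call would look like `rostopic list | missing_topics /rtabmap/mapData`. A topic that is listed may still carry no messages, so `rostopic hz` remains the actual check.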

|

Hi Mathieu,

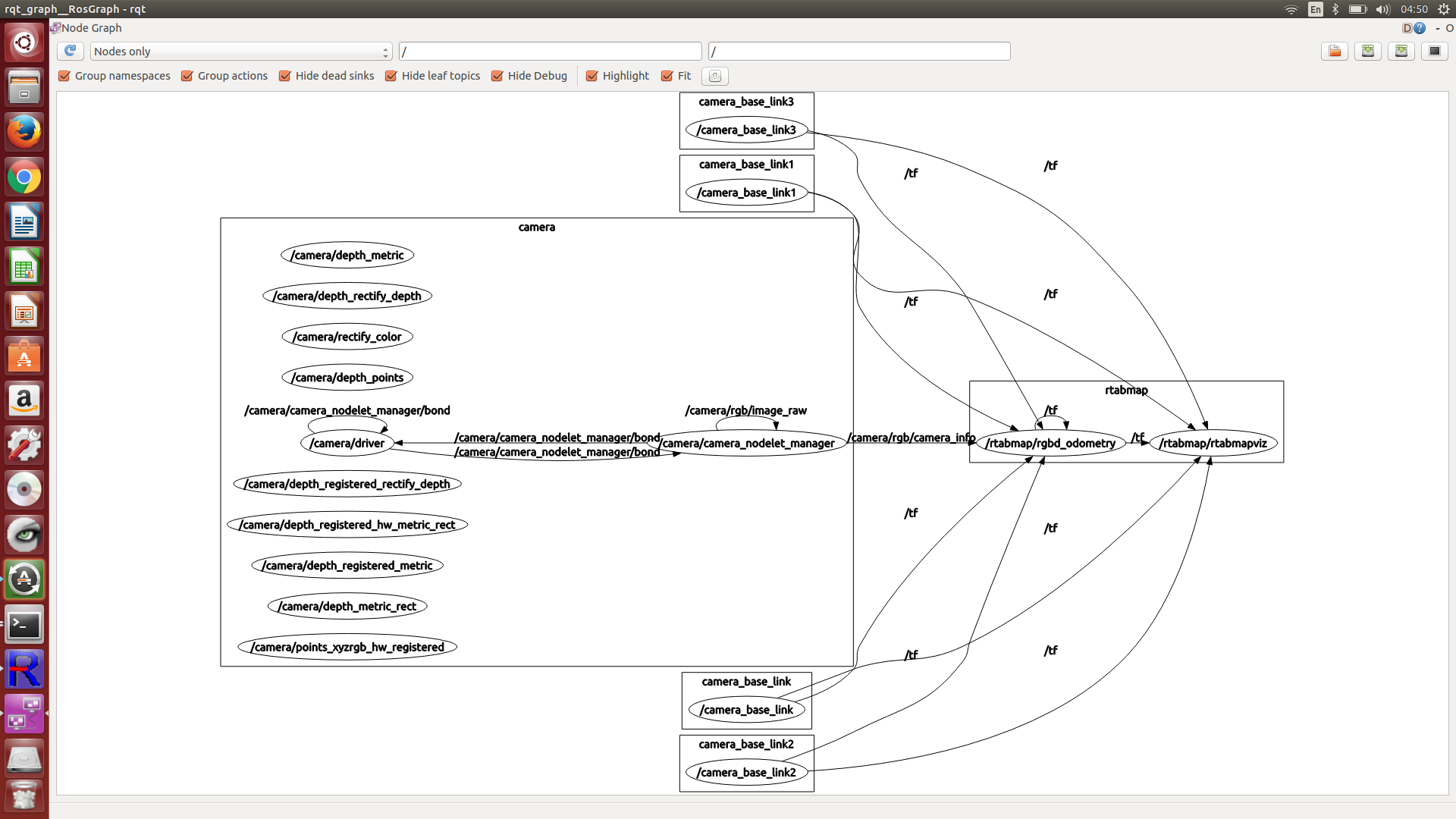

Apologies for the late reply. I've been preoccupied with some other projects. I'm still unfamiliar with some of the procedures. On the Raspberry Pi, I launched openni2 and rtabmap_ros in two separate terminal windows. A warning about a missing camera calibration file popped up in the openni2 terminal window, along with some errors about the unrecognized argument --Vis/MaxFeatures, but otherwise everything is running just fine. I used the following commands:

    $ roslaunch openni2_launch openni2.launch depth_registration:=true
    $ roslaunch rtabmap_ros rgbd_mapping.launch rtabmap_args:="--delete_db_on_start --Vis/MaxFeatures 500 --Mem/ImagePreDecimation 2 --Mem/ImagePostDecimation 2 --Kp/DetectorStrategy 6 --OdomF2M/MaxSize 1000 --Odom/ImageDecimation 2" rtabmapviz:=false

On my client computer I ran the following command:

    $ ROS_NAMESPACE=rtabmap rosrun rtabmap_ros rtabmapviz _subscribe_odom_info:=false _frame_id:=camera_link

This results in a black screen. I also received a warning saying "rtabmapviz: could not get transform from odom to camera_link after 0.1 seconds". The following is the resulting image from rqt_graph.

Ultimately, what I'm trying to do is use the Raspberry Pi on a quadrotor, create an occupancy grid of an environment, and navigate through it. For now, I'm trying to make it work as a handheld mapping system as a proof of concept. Would rviz be better than rtabmapviz in this case? If so, is it possible to run it within the ROS_NAMESPACE?

You also mentioned rostopic hz /rtabmap/mapData, which I tried to run in a separate terminal on the client computer, but this didn't seem to change anything.

Apologies for the long-winded question. I'm still trying to learn my way around this framework, and any help you can give will be much appreciated.

|

Administrator

|

Hi,

1. The "--Vis/MaxFeatures" error means that you may not have version 0.11 (the latest) of rtabmap. How did you install rtabmap?
2. In your graph, the rtabmap node is missing. It may not have started; look for errors in the terminal.
3. rtabmapviz will not show anything by default, except the map if the rtabmap node is running (which does not seem to be the case in your graph). For the transform warning, you can check whether odometry is actually published (and not null):

    $ rostopic hz /rtabmap/odom

4. Keep in mind that on the RPi, rtabmap runs at 4-5 Hz at most. Adding navigation in 3D (you may need an octomap) may not be possible on a single RPi. Another design could be to stream the data from the quadcopter to a workstation that runs mapping and navigation and sends the commands back.

cheers
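Since the unrecognized-argument error in point 1 comes down to whether the installed rtabmap is at least 0.11, a small version comparison can make that check explicit. This is a minimal sketch: version_ge and check_rtabmap_version are hypothetical helpers, the comparison relies on GNU `sort -V`, and the two version strings passed at the end are example inputs rather than anything read from a real installation.

```shell
#!/bin/sh
# version_ge A B: succeeds when version A is at least version B.
# Relies on `sort -V` (version sort), available in GNU coreutils.
version_ge() {
    lowest=$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)
    [ "$lowest" = "$2" ]
}

# Hypothetical helper: report whether "--Param value" style arguments in
# rtabmap_args should be understood by the given rtabmap version.
check_rtabmap_version() {
    if version_ge "$1" 0.11; then
        echo "rtabmap $1: parameters can be passed as arguments"
    else
        echo "rtabmap $1: too old, update before using --Vis/MaxFeatures etc."
    fi
}

# Example inputs only.
check_rtabmap_version 0.10.0
check_rtabmap_version 0.11.0
```

On a machine with ROS installed, the version string could probably be obtained with something like `rosversion rtabmap_ros` and passed to check_rtabmap_version.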

|

Hi Mathieu,

I installed it with sudo apt-get install ros-indigo-rtabmap-ros. I have since gotten rtabmap/projmap results in rviz. I ran rtabmap without "--Vis/MaxFeatures" or any of the other parameters except rtabmapviz:=false and --delete_db_on_start. It's a bit hard to see under the point cloud, but it seems to be working as intended. The bottom picture is the updated output of rqt_graph. While this does look like what I need, I'm still curious as to why this doesn't run in rtabmapviz and why the parameters aren't being recognized. Am I meant to install it from source as well as the ROS wrapper? Thanks so much for your help so far.

|

|

Administrator

|

Hi,

You can increase the billboard size in the PointCloud display in RVIZ. By default, the /rtabmap/cloud_map topic is voxelized to 0.05 m, so the billboard size could be 0.05 m. To get a denser cloud, you can reduce the "cloud_voxel_size" parameter:

    <node pkg="rtabmap_ros" type="rtabmap" name="rtabmap">
      <param name="cloud_voxel_size" value="0.01"/> <!-- default 0.05 -->
      ...
    </node>

Note that a denser cloud increases the computation load.

The parameters are not recognized because you may have version 0.10; parsing parameters from arguments was introduced in 0.11. Note that Indigo was released again 3 days ago with rtabmap 0.11. You can update your packages:

    $ sudo apt-get update
    $ sudo apt-get upgrade

Not sure why rtabmapviz is not working, but with RVIZ you can get almost the same information. In RVIZ, use the rtabmap_ros/MapCloud display to show the point cloud more efficiently.

cheers
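To get a feel for how much a smaller cloud_voxel_size can raise the load, the growth in point count can be estimated from the ratio of the two voxel sizes. The sketch below is a rough estimate under a stated assumption, not a measurement. It assumes the cloud samples surfaces, so the count scales with the inverse square of the voxel size, and it gives the inverse cube as an upper bound for a filled volume.

```shell
#!/bin/sh
default_voxel=0.05   # default cloud_voxel_size from the post, in meters
dense_voxel=0.01     # reduced value from the launch snippet, in meters

# ASSUMPTION: points lie on surfaces, so the count grows with the inverse
# square of the voxel size; a filled volume would grow with the inverse cube.
surface_factor=$(LC_ALL=C awk -v a="$default_voxel" -v b="$dense_voxel" \
    'BEGIN { printf "%.0f", (a / b) ^ 2 }')
volume_factor=$(LC_ALL=C awk -v a="$default_voxel" -v b="$dense_voxel" \
    'BEGIN { printf "%.0f", (a / b) ^ 3 }')

echo "roughly ${surface_factor}x more points (up to ${volume_factor}x)"
```

Intermediate values such as 0.02 or 0.03 may be a reasonable compromise on a Raspberry Pi.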

|

Hi Mathieu,

Thanks for the advice. Everything is running smoothly now. I'll eventually use rtabmap to make a simple navigation algorithm for a quadrotor. Instead of the point cloud, I was thinking of simply using /rtabmap/projmap to create a 2D occupancy grid of the obstacles at the quadrotor's altitude and calculate the distance to obstacles in increments. Thank you for being patient with a newcomer such as myself. Regards.

|

In reply to this post by matlabbe

Hi

I got this example working fine on an RPi3. However, we would like to navigate autonomously on the constructed map. What would be the setup to stream the data from the RPi3 to a workstation? What would be the launch files for mapping and navigation on the workstation side? Should we launch freenect_launch freenect.launch on the Pi, and rgbd_mapping.launch and rtabmapviz on the workstation? If yes, what would be the syntax for autonomous navigation? Thank you in advance for your help.

|

Administrator

|

Hi,

From the example above, the line with rgbd_mapping.launch would be started on the workstation instead of the RPi3. However, streaming raw images will take a lot of bandwidth, which could cause synchronization problems on the workstation side (in particular if you are on WiFi). See this tutorial instead: http://wiki.ros.org/rtabmap_ros/Tutorials/RemoteMapping

For navigation, I should refer you to the tutorials of the ROS navigation stack. The /map topic (from map_server) would be replaced by /rtabmap/grid_map published by rtabmap.

cheers, Mathieu
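A back-of-the-envelope estimate shows why raw images are heavy for WiFi. The numbers below are assumptions that the thread does not state: 640x480 images, 3 bytes per RGB pixel, 2 bytes per 16-bit depth pixel, and no compression. raw_mbit_per_s is a hypothetical helper.

```shell
#!/bin/sh
# ASSUMPTIONS (not from the post): 640x480 images, 3 bytes per RGB pixel,
# 2 bytes per depth pixel (16-bit), no compression.
width=640
height=480
bytes_per_pixel=$(( 3 + 2 ))

# raw_mbit_per_s HZ: uncompressed RGB + depth rate in Mbit/s (integer part).
raw_mbit_per_s() {
    echo $(( width * height * bytes_per_pixel * $1 * 8 / 1000000 ))
}

full_rate=$(raw_mbit_per_s 30)   # every frame of a 30 Hz camera
reduced=$(raw_mbit_per_s 10)     # a third of the frames

echo "raw RGB-D at 30 Hz: about ${full_rate} Mbit/s"
echo "raw RGB-D at 10 Hz: about ${reduced} Mbit/s"
```

Even at the reduced rate the figure is above what many WiFi links sustain in practice, which is why the linked tutorial's approach of limiting and compressing the stream is the safer route.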

|

Hi Mathieu,

Thank you very much for your fast reply. I managed to complete the remote mapping tutorial to limit the bandwidth. For navigation, I will take a look at those navigation tutorials; thanks for guiding me.

Just a little question concerning the mapping. Our robot is a differential-drive robot driven by stepper motors from an Arduino using the AccelStepper library, and the wheel speed commands are sent by the RPi3 over I2C. So we don't have odometry from encoders, but we have an IMU giving us only the orientation of the robot. What would be the best configuration to integrate the IMU values to improve odometry for mapping (and finally navigation) using rtabmap? Do you think that laser_scan_matcher (http://wiki.ros.org/laser_scan_matcher) could be an option? Thanks again for the help.

Re: RGB-D SLAM example on ROS and Raspberry Pi 3

|

In reply to this post by matlabbe

Hi,

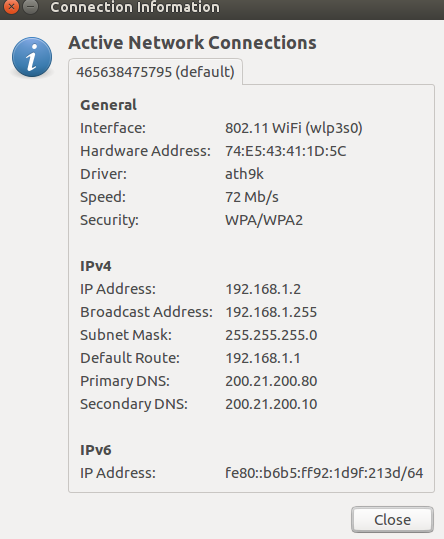

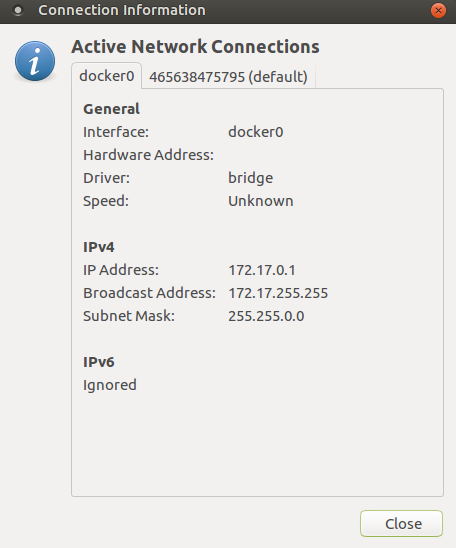

I'm using an RPi 3B and everything works fine there, but it doesn't work on my PC. I'm sure the IPs are correct, but when I use this, the PC's IP and the RPi's IP appear. What am I doing wrong? Thanks. On the RPi I use these commands: On the PC, these commands:

|

|

Hi there,

Try to ping to test connectivity. I think that you're using a docker container network, so I don't know if you can access those directly (see this issue: https://github.com/docker/for-win/issues/221). Are you running roscore? Otherwise, with connectivity checked, these commands worked well for me (do an export ROS_IP on every terminal tab):

On the RPi3:

On the Pi tab 1:

    $ export ROS_IP=172.17.01
    $ roscore

On the Pi tab 2:

    $ export ROS_IP=172.17.01
    $ roslaunch freenect_launch freenect.launch depth_registration:=true data_skip:=2

On the Pi tab 3:

    $ export ROS_IP=172.17.01
    $ roslaunch rtabmap_ros rgbd_mapping.launch rtabmap_args:="--delete_db_on_start --Vis/MaxFeatures 500 --Mem/ImagePreDecimation 2 --Mem/ImagePostDecimation 2 --Kp/DetectorStrategy 6 --OdomF2M/MaxSize 1000 --Odom/ImageDecimation 2" rtabmapviz:=false

On the client PC:

    $ export ROS_MASTER_URI=http://172.17.01:11311
    $ export ROS_IP=192.168.1.2
    $ ROS_NAMESPACE=rtabmap rosrun rtabmap_ros rtabmapviz _subscribe_odom_info:=false _frame_id:=camera_link

Cheers
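Because the exports have to be repeated in every terminal tab, one option is to wrap them in a small function and call it once per tab. The sketch below is only a suggestion. ros_net_env is a hypothetical helper, and the addresses are the ones from the original example at the top of the thread, so they need to be replaced with your own.

```shell
#!/bin/sh
# Hypothetical helper: set the ROS network variables for one terminal.
# Usage: ros_net_env <master_ip> <local_ip>
ros_net_env() {
    # The ROS master listens on port 11311 by default.
    export ROS_MASTER_URI="http://$1:11311"
    # ROS_IP is the address other machines use to reach this one.
    export ROS_IP="$2"
}

# Client computer from the original example: master on the RPi at
# 192.168.0.3, client at 192.168.0.2 (replace with your own addresses).
ros_net_env 192.168.0.3 192.168.0.2
echo "ROS_MASTER_URI=$ROS_MASTER_URI ROS_IP=$ROS_IP"
```

If the function is placed in a file that each tab sources, every tab ends up with the same settings and a forgotten export becomes less likely.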

|

Administrator

|

In reply to this post by David

Hi David,

If you have a lidar, yes, you could improve odometry a lot with a node like laser_scan_matcher. However, if you simulate a laser scan from the same RGB-D camera, odometry may be worse than visual odometry. If your environment is 2D (indoors, always on the same floor), you may try adding this parameter: "--Reg/Force3DoF true" (if you are on old Indigo binaries, also add "--Optimizer/Slam2D true"). In the remote mapping tutorial, the bandwidth is limited to 5 Hz, but to get good visual odometry, try to increase it to at least 10-15 Hz if you have enough bandwidth.

cheers, Mathieu
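One way to try the suggested parameter is to append it to the rtabmap_args string used in the original example. The sketch below only assembles and prints the resulting command so it can be reviewed before running. The variable names are made up for the example, and it assumes the extra parameter is passed through rtabmap_args like the others.

```shell
#!/bin/sh
# rtabmap arguments from the original example in this thread.
base_args="--delete_db_on_start --Vis/MaxFeatures 500 --Mem/ImagePreDecimation 2 --Mem/ImagePostDecimation 2 --Kp/DetectorStrategy 6 --OdomF2M/MaxSize 1000 --Odom/ImageDecimation 2"

# Extra argument suggested for a planar (single-floor) environment.
planar_args="--Reg/Force3DoF true"
# On old Indigo binaries the post suggests adding this one as well:
# planar_args="$planar_args --Optimizer/Slam2D true"

rtabmap_args="$base_args $planar_args"

# Print the command instead of running it, so it can be reviewed first.
echo "roslaunch rtabmap_ros rgbd_mapping.launch rtabmap_args:=\"$rtabmap_args\" rtabmapviz:=false"
```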

|

Hi all,

I'm going through the same optimization of rtabmap and Kinect on a Raspberry Pi 3B. Have you ever considered using one of these dongles to offload calculations? https://coral.ai/products/accelerator/ I'm wondering if any of you have run some tests. Thanks, Marco
