RTAB-Map on Jetson Nano

Hello, first time here.

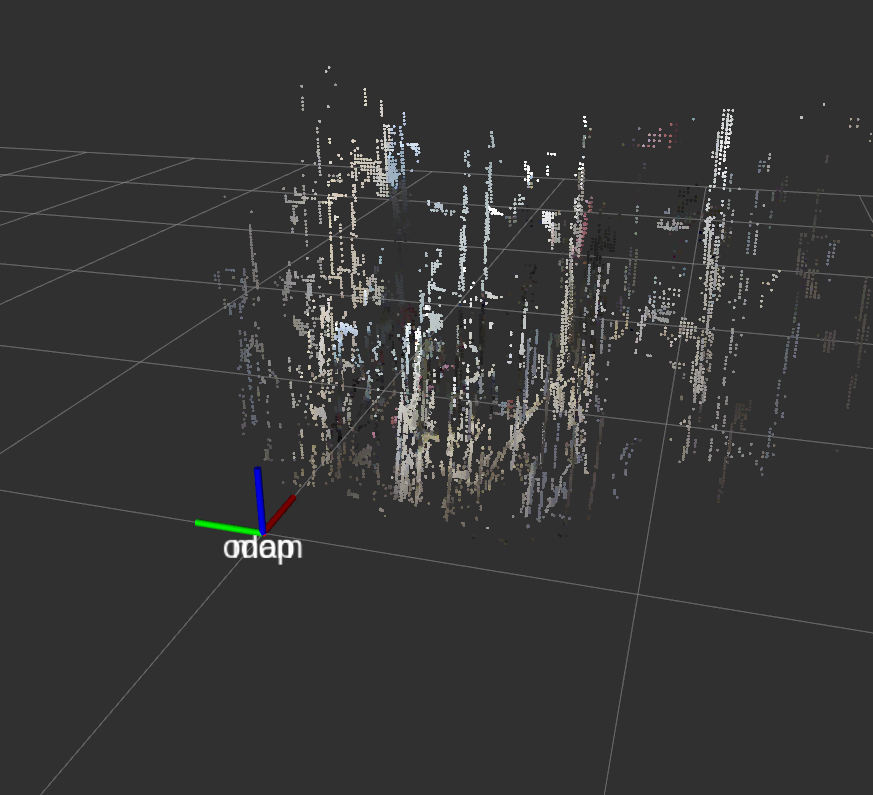

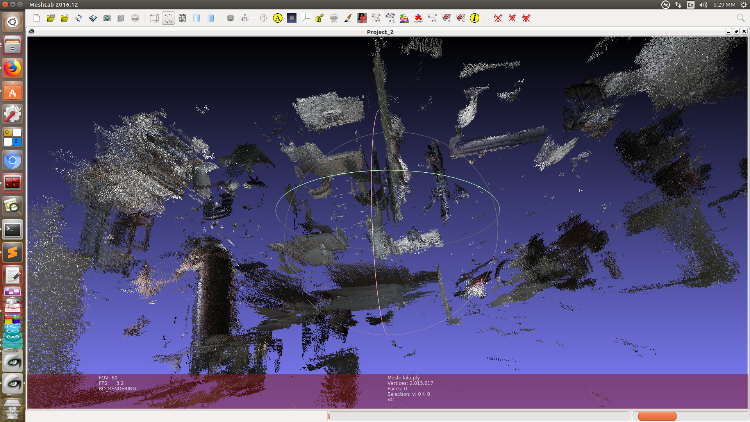

I am trying to integrate RTAB-Map on a Jetson Nano with a Kinect v1. When I run the handheld version, I lose odometry very easily and get maps like the ones above, with random points in front of the camera. What is your opinion on running RTAB-Map on the Nano with a Kinect? Should I consider buying a ZED camera, which people have successfully used for 3D mapping with RTAB-Map?

I also have a cheap $100 2D lidar (YDLIDAR X4) that I can get odometry from. I want to combine visual odometry + lidar odometry + IMU + wheel encoders with the robot_localization package. How would you suggest accomplishing that? For example: (a) use the visual and lidar (ICP) odometry from RTAB-Map and feed both to robot_localization; (b) use visual odometry from RTAB-Map plus an external lidar odometry; or (c) with a ZED camera, use its external visual odometry + lidar odometry -> robot_localization -> RTAB-Map.

I hope I am not being vague. Thanks

Administrator

Hi,

The cloud seems very sparse, which is not good for visual odometry. Are you using freenect_launch? Check with rqt_image_view whether the depth image is dense or sparse.

I would suggest getting a single odometry approach working first. If you have wheel encoders, start with them; rtabmap can refine that odometry afterwards with the lidar (without needing icp_odometry, which also saves processing power). See http://wiki.ros.org/rtabmap_ros/Tutorials/SetupOnYourRobot#Kinect_.2B-_Odometry_.2B-_2D_laser

If you are navigating indoors, visual odometry is most of the time unreliable when the field of view is limited (the camera often looks at plain white walls).

cheers, Mathieu
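Besides eyeballing the depth image in rqt_image_view, one rough way to put a number on "dense versus sparse" is the fraction of pixels carrying a valid depth reading. The sketch below is a hypothetical helper, not part of RTAB-Map or any ROS package; it assumes the depth frame has already been converted to a 2D list of metres, where 0.0 or NaN marks a missing reading (the usual convention for ROS depth images). The example frame is made up.

```python
import math


def valid_depth_ratio(depth, max_depth=None):
    """Return the fraction of pixels that hold a usable depth reading.

    depth: 2D list of floats in metres; 0.0 or NaN marks a missing reading.
    max_depth: optional cutoff in metres; farther readings count as invalid.
    """
    total = 0
    valid = 0
    for row in depth:
        for d in row:
            total += 1
            if math.isnan(d) or d <= 0.0:
                continue  # hole in the depth image
            if max_depth is not None and d > max_depth:
                continue  # beyond the range we trust
            valid += 1
    return valid / total if total else 0.0


# Hypothetical 3x4 frame: NaN and 0.0 are holes, 3.5 m lies beyond a 2 m cutoff.
frame = [
    [1.2, 1.3, float("nan"), 0.0],
    [1.1, 1.2, 1.4, 3.5],
    [0.0, 0.9, 1.0, 1.1],
]
print(valid_depth_ratio(frame))                 # prints 0.75
print(valid_depth_ratio(frame, max_depth=2.0))  # prints 0.6666666666666666
```

On a real frame you would first convert the ROS image message to an array (for example with cv_bridge); a ratio far below what the same scene gives on a desktop PC would confirm the sparse-depth symptom.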

Re: RTAB-Map on Jetson Nano

Hello,

I think the problem is the sparse clouds. I use the command roslaunch openni_launch openni.launch depth_registration:=true. I can't easily install freenect from source on ROS Melodic. On my PC running ROS Kinetic, the RGB-D handheld example works like a charm, whether using freenect or OpenNI. I used the launch file you provided with wheel encoders and limited maxDepth to 2 meters, but the results are still sparse and poor. How can I get dense depth images from OpenNI? I don't want to use SURF features, since they are non-free and I would have to build OpenCV from source. The sparsity of the clouds is baffling to me. Thank you!! Panagiotis

Administrator

Hi,

The depth image doesn't look usable on that platform. I cannot find anyone on the internet showing a working Kinect v1 on the Nano, only pages about the Kinect v2. You may switch to a RealSense instead if you cannot make it work (it seems to work on the Nano).

cheers, Mathieu

Re: RTAB-Map on Jetson Nano

Hi,

The problem is that OpenNI doesn't work well on ARM processors. I managed to get good depth images using a Kinetic Docker container with freenect installed, run with --net=host --privileged.

I know this is not an RTAB-Map-specific question, but I am totally new to Docker and somewhat confused. I just want to run freenect from a Docker container. Will I have to synchronize the clocks to use it with RTAB-Map on the host? When I re-run the image, the installations are reset; should I use a Dockerfile that installs freenect? I would like a single command that runs the freenect driver inside Docker with synchronized clocks, so I don't go crazy.

Thank you for your time. I will either study Docker in depth or buy a ZED or RealSense camera :P Thank you

Re: RTAB-Map on Jetson Nano

In reply to this post by matlabbe

Well, I found my single command. SUCCESS!

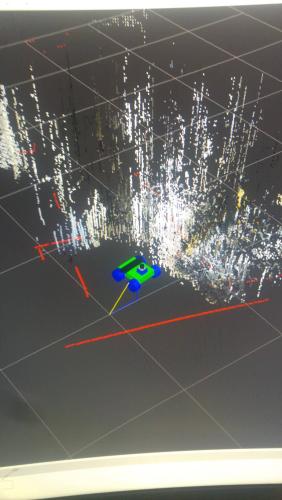

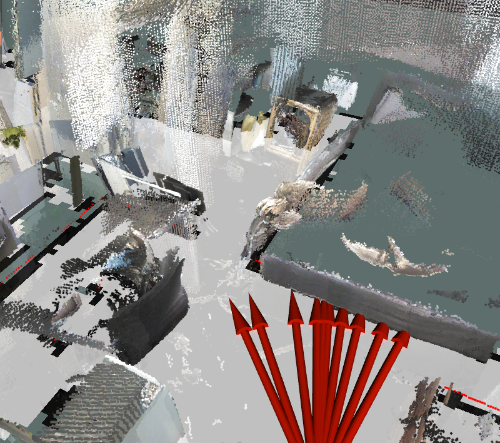

```dockerfile
FROM ros:kinetic-ros-base
# install the freenect driver (libfreenect + freenect_launch)
RUN apt-get update && apt-get install -y \
    libfreenect-dev \
    ros-kinetic-freenect-launch \
    && echo "source /opt/ros/kinetic/setup.bash" >> ~/.bashrc \
    && rm -rf /var/lib/apt/lists/*
# start the Kinect driver with depth registration when the container runs
CMD ["roslaunch", "freenect_launch", "freenect.launch", "depth_registration:=true"]
```

I used this Dockerfile and ran the container with --net=host --privileged. I don't think I need to synchronize the clocks of the container and the host, since on Linux a container shares the host kernel's clock (this might be a problem on macOS, where Docker runs inside a VM). The images above show the RGB-D handheld example.

Now, however, it breaks my rosserial communication when I try to visualize with RViz, and the robot moves erratically. I could try increasing the buffer size or lowering the rate, but I would like to use remote visualization with the compressed images instead of forwarding the whole RViz over ssh -X. Can I lower only the rate of the compressed images without affecting the rate of the Kinect?

Anyway, thank you. Cheers, Panagiotis
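Throttling only drops messages on a republished copy of a topic; the driver publishing the original topic keeps its own rate. In ROS 1, the topic_tools package provides a throttle node for this (rosrun topic_tools throttle messages &lt;intopic&gt; &lt;msgs_per_sec&gt; [outtopic]). The rate-limiting logic such a node applies can be sketched in plain Python; the RateThrottle class and the timestamps below are hypothetical and not taken from topic_tools.

```python
class RateThrottle:
    """Forward at most `max_hz` messages per second and drop the rest."""

    def __init__(self, max_hz):
        if max_hz <= 0:
            raise ValueError("max_hz must be positive")
        self.min_period = 1.0 / max_hz
        self.last_sent = None  # timestamp (s) of the last forwarded message

    def accept(self, stamp):
        """Return True if a message stamped `stamp` seconds should be republished."""
        # The 1e-9 s slack absorbs floating-point rounding in the timestamps.
        if self.last_sent is None or stamp - self.last_sent >= self.min_period - 1e-9:
            self.last_sent = stamp
            return True
        return False


# Hypothetical example: one second of a 30 Hz image stream throttled to 5 Hz.
camera_stamps = [i / 30.0 for i in range(30)]
throttle = RateThrottle(max_hz=5.0)
forwarded = [t for t in camera_stamps if throttle.accept(t)]
print(len(camera_stamps), len(forwarded))  # prints 30 5
```

The source list is never modified: the 30 original stamps remain, and only the forwarded copy is thinned, which mirrors how a throttled relay leaves the camera driver untouched.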

Re: RTAB-Map on Jetson Nano

I am having some trouble getting my PC to see the topics I need for remote visualization. Can I use rgbd_relay but have the computation done on the Jetson?

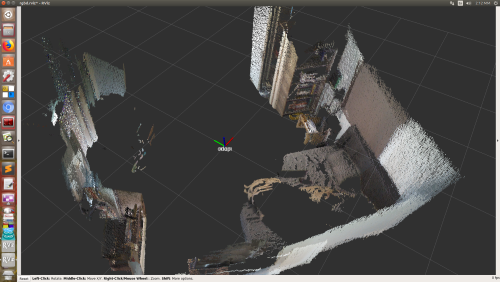

Here are the first results after mapping blind. Good enough, I guess. The Kinect is too low to the ground; should I tilt it up, and if so, should I change the TF? Thanks

Administrator

Yes, it would be better to launch RViz on the remote computer and subscribe to the topics published by the Jetson. For visualization, subscribing only to the rtabmap/mapData topic with RViz's rtabmap_ros/MapCloud plugin could be enough, as subscribing to depth images with the compressedDepth plugin can use a lot of resources on the Jetson, and the frame rate would suffer.

Can you point out which configuration on this page you are looking for? As I see it, you have a 2D robot with a lidar. Do you have wheel odometry available? It looks like you could do something similar to the first configuration: http://wiki.ros.org/rtabmap_ros/Tutorials/SetupOnYourRobot#Kinect_.2B-_Odometry_.2B-_2D_laser

cheers, Mathieu

Re: RTAB-Map on Jetson Nano

Yes, this is the configuration I am running. Actually, for odometry I fuse lidar + wheel encoders with an EKF. Is this double work with regard to ICP? Maybe not.

Can you guide me a little on how to set up the communication between the client and the robot to get the map data (ROS_MASTER_URI, ROS_IP, etc.)? Can I transfer only specific topics, or does it not matter because only topics that are actually subscribed to (e.g. echoed) get transferred? For example, should the client's ROS_MASTER_URI point to the robot, with ROS_IP=0.0.0.0? Lastly, if I tilt the Kinect to see more, do I have to change the pitch in my xacro? Thank you!
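The EKF fusion of lidar and wheel-encoder odometry mentioned above rests on weighting each source by its uncertainty. robot_localization's EKF tracks a multi-dimensional state (pose, velocities, accelerations) and also runs a prediction step; the sketch below reduces only the measurement-update idea to one scalar (a yaw rate). It is a simplification, not robot_localization's code, and the readings and variances are made up.

```python
def kalman_update(x, p, z, r):
    """One scalar Kalman measurement update.

    x, p: current estimate and its variance
    z, r: new measurement and its variance
    """
    k = p / (p + r)        # gain: close to 1 when the measurement is much more certain
    x_new = x + k * (z - x)
    p_new = (1.0 - k) * p  # fused variance is smaller than either input variance
    return x_new, p_new


# Hypothetical yaw-rate values in rad/s.
x, p = 0.10, 0.04                           # wheel-encoder estimate (noisier: wheel slip)
x, p = kalman_update(x, p, z=0.14, r=0.01)  # lidar scan-matching measurement
print(round(x, 3), round(p, 4))             # prints 0.132 0.008
```

Because the lidar variance is four times smaller here, the fused value lands much closer to the lidar reading, and the fused variance ends up below both inputs.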

Administrator

In that configuration, RGBD/NeighborLinkRefining actually refines the odometry with the laser scans, which is generally enough and doesn't use a lot of resources.
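As a generic illustration of what refining odometry with laser scans involves, here is a textbook 2D point-to-point ICP (Iterative Closest Point) in plain Python: it repeatedly pairs each point of the new scan with its nearest neighbour in the reference scan and solves the closed-form rigid alignment. It is not RTAB-Map's ICP implementation, which is considerably more elaborate; the function names, the toy scan, and the "true" motion are hypothetical.

```python
import math


def best_rigid_2d(src, dst):
    """Closed-form rotation and translation minimising sum |R*s + t - d|^2 over paired points."""
    n = len(src)
    sx = sum(p[0] for p in src) / n
    sy = sum(p[1] for p in src) / n
    dx = sum(q[0] for q in dst) / n
    dy = sum(q[1] for q in dst) / n
    s_dot = s_cross = 0.0
    for (px, py), (qx, qy) in zip(src, dst):
        ax, ay = px - sx, py - sy  # centred source point
        bx, by = qx - dx, qy - dy  # centred destination point
        s_dot += ax * bx + ay * by
        s_cross += ax * by - ay * bx
    theta = math.atan2(s_cross, s_dot)
    c, s = math.cos(theta), math.sin(theta)
    # Translation maps the rotated source centroid onto the destination centroid.
    return theta, dx - (c * sx - s * sy), dy - (s * sx + c * sy)


def transform(points, theta, tx, ty):
    """Apply rotation theta followed by translation (tx, ty) to 2D points."""
    c, s = math.cos(theta), math.sin(theta)
    return [(c * x - s * y + tx, s * x + c * y + ty) for x, y in points]


def icp_2d(scan, reference, iterations=20):
    """Point-to-point ICP aligning `scan` onto `reference`; returns (theta, tx, ty)."""
    theta, tx, ty = 0.0, 0.0, 0.0  # identity initial guess; in practice odometry supplies it
    current = list(scan)
    for _ in range(iterations):
        # Brute-force nearest neighbours (fine for a toy example, slow for real scans).
        matches = [min(reference, key=lambda r: (r[0] - x) ** 2 + (r[1] - y) ** 2)
                   for x, y in current]
        d_theta, d_tx, d_ty = best_rigid_2d(current, matches)
        current = transform(current, d_theta, d_tx, d_ty)
        # Compose the increment with the running estimate: T <- dT * T.
        c, s = math.cos(d_theta), math.sin(d_theta)
        tx, ty = c * tx - s * ty + d_tx, s * tx + c * ty + d_ty
        theta += d_theta
    return theta, tx, ty


reference = [(0.0, 0.0), (1.0, 0.2), (2.0, -0.1), (0.3, 1.5), (1.7, 1.2),
             (2.5, 2.4), (-1.0, 1.0), (-1.5, -0.8), (0.5, -1.9), (2.2, -1.6)]
# Hypothetical true motion: the new scan is the reference seen from a pose
# rotated by 0.03 rad and shifted by (0.05, -0.04).
true_theta, true_tx, true_ty = 0.03, 0.05, -0.04
c, s = math.cos(true_theta), math.sin(true_theta)
scan = [(c * (x - true_tx) + s * (y - true_ty), -s * (x - true_tx) + c * (y - true_ty))
        for x, y in reference]
theta, tx, ty = icp_2d(scan, reference)
print(round(theta, 4), round(tx, 4), round(ty, 4))  # prints 0.03 0.05 -0.04
```

The small motion relative to the point spacing is what lets the nearest-neighbour pairing be correct from the start; with a poor initial guess, ICP can lock onto wrong correspondences, which is why starting from wheel odometry helps.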

If you launched roscore on the robot (assuming its IP is 192.168.1.9), you only need to set the master URI on the remote computer:

```shell
$ export ROS_MASTER_URI=http://192.168.1.9:11311
$ rostopic list
```

If you tilt the Kinect, you should update the xacro.

cheers, Mathieu
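When the Kinect is tilted, the change goes into the rpy attribute (in radians) of the joint origin that attaches the camera to the robot in the xacro. The sketch below converts a tilt angle into that pitch value, assuming the camera link follows the REP 103 convention (x forward, y left, z up). Under that convention a positive rotation about y tips the x axis downward, so tilting the camera up needs a negative pitch. The function names and the 15 degree tilt are hypothetical.

```python
import math


def tilt_up_to_pitch(tilt_up_deg):
    """Pitch (rad) for a camera tilted upward by `tilt_up_deg` degrees.

    Assumes REP 103 axes (x forward, z up): rotating by +p about y moves the
    x axis toward -z (nose down), so looking up needs a negative pitch.
    """
    return -math.radians(tilt_up_deg)


def pitch_to_quaternion(pitch):
    """Quaternion (x, y, z, w) for a pure rotation about the y axis."""
    return (0.0, math.sin(pitch / 2.0), 0.0, math.cos(pitch / 2.0))


pitch = tilt_up_to_pitch(15.0)
print('rpy="0 %.4f 0"' % pitch)    # prints rpy="0 -0.2618 0"
print(pitch_to_quaternion(pitch))  # same rotation as a quaternion, e.g. for a static TF
```

Only the pitch changes here; if the mount also moves the camera higher, the xyz of the same joint origin would need updating as well.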

Re: RTAB-Map on Jetson Nano

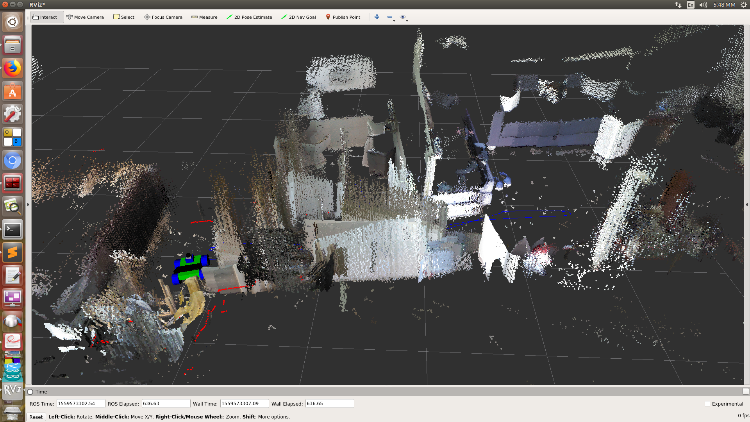

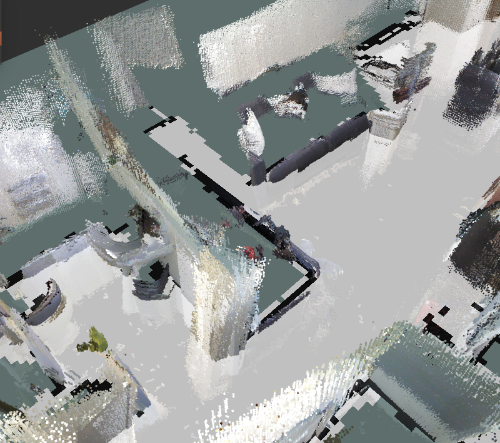

It works nicely with minimal lag; it feels good to have a responsive RViz after many days of fiddling with ssh -X.

Now I will have to fiddle more with the parameters, optimize, and try localization mode. The images above show some first results. Thank you so much for your valuable help, cheers!

Return to Official RTAB-Map Forum
