RTAB-Map stereo odometry inaccurate with ZED2i vs ZED

I have been using the ZED2i with RTAB-Map stereo odometry and have gotten consistently inaccurate results. When walking in a straight line for 3.8 meters with a handheld ZED2i, the transform from /tf reports a displacement of only 3.1 meters. The error seems to grow roughly linearly with the distance traveled.
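If the drift is a pure scale error, which the roughly linear growth suggests (an assumption, not something verified in this thread), the numbers above can be turned into a single scale ratio. A minimal sketch; `scale_error` is a hypothetical helper, not part of RTAB-Map or the ZED SDK:

```python
from typing import Tuple


def scale_error(true_distance_m: float, measured_distance_m: float) -> Tuple[float, float]:
    """Return (scale ratio, percent error) of an odometry displacement.

    Assumes the error is purely proportional to distance traveled, so the
    same ratio would apply to longer walks as well.
    """
    if true_distance_m <= 0:
        raise ValueError("true distance must be positive")
    ratio = measured_distance_m / true_distance_m
    percent_error = abs(1.0 - ratio) * 100.0
    return ratio, percent_error


ratio, pct = scale_error(3.8, 3.1)
print(f"scale ratio = {ratio:.3f}, error = {pct:.1f}%")  # scale ratio = 0.816, error = 18.4%
# Under the pure-scale assumption, a 10 m walk would be reported as about 8.16 m.
print(f"predicted report for 10 m: {10 * ratio:.2f} m")
```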

I also have access to an original ZED camera, and the odometry is much more accurate with it when I repeat the same experiment. Could you point me to any ZED2i-specific features or parameters that might explain this discrepancy? I have already tried recalibrating the ZED2i, with little to no observable improvement. Thanks in advance!

Administrator

I have also noticed some calibration issues with my own ZED2. Can you record a rosbag of the camera data (the topics used by stereo odometry)? Another possible cause is the image resolution: are the ZED and ZED2i using the same resolution?
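Both suspected causes can be related to the rectified stereo depth model Z = f·B/d (focal length f and disparity d in pixels, baseline B in meters). The sketch below is a simplified model that ignores matching noise and uses hypothetical numbers. To first order, an error in the calibrated baseline scales every depth, and therefore every stereo translation estimate, by the same factor. Downscaling the images scales f and d together, leaving Z unchanged, but each pixel of disparity error then costs more depth. The sketch also reads B from the right camera's `sensor_msgs/CameraInfo` projection matrix, where ROS stores Tx = -fx·B in `P[3]`; the function names are illustrative only.

```python
def depth_from_disparity(f_px, baseline_m, disparity_px):
    """Rectified stereo depth: Z = f * B / d (f and d in pixels, B in meters)."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return f_px * baseline_m / disparity_px


def baseline_from_right_projection(P):
    """Baseline B (meters) from the right camera's 3x4 projection matrix.

    P is the 12-value row-major matrix from a ROS 1 CameraInfo message
    (the `P` field); ROS stores Tx = -fx * B in P[3] for the right camera.
    """
    fx, tx = P[0], P[3]
    return -tx / fx


# Hypothetical numbers for illustration only, not read from the bags in this thread.
f_px, baseline_m, d_px = 700.0, 0.12, 42.0
z_true = depth_from_disparity(f_px, baseline_m, d_px)                   # 2.00 m
z_short_baseline = depth_from_disparity(f_px, 0.8 * baseline_m, d_px)  # 1.60 m: 20% baseline error -> 20% scale error
z_half_res = depth_from_disparity(f_px / 2, baseline_m, d_px / 2)      # 2.00 m: halving resolution keeps Z

# Effect of a one-pixel disparity error at full vs. half resolution.
dz_full = z_true - depth_from_disparity(f_px, baseline_m, d_px + 1)
dz_half = z_true - depth_from_disparity(f_px / 2, baseline_m, d_px / 2 + 1)
print(f"{z_true:.2f} {z_short_baseline:.2f} {z_half_res:.2f}")  # 2.00 1.60 2.00
print(f"{dz_full:.3f} {dz_half:.3f}")                            # 0.047 0.091

# Hypothetical right-camera P with fx = 700 px and Tx = -84 (i.e. B = 0.12 m).
P_right = [700.0, 0.0, 640.0, -84.0, 0.0, 700.0, 360.0, 0.0, 0.0, 0.0, 1.0, 0.0]
print(f"baseline = {baseline_from_right_projection(P_right):.3f} m")  # baseline = 0.120 m
```

Comparing the baseline recovered this way from each camera's recorded `right/camera_info` is one quick way to check whether the calibration the wrapper publishes looks plausible.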

Hi Mathieu,

Thank you for your quick reply. After further testing, it appears that the ZED does not actually perform better than the ZED2i. Its results are less consistent, so it only sometimes appears to be correct; from run to run, the error can be as large as 0.8 m. I have attached two bag files, recorded with the ZED and the ZED2i, of me walking 10 feet (3.05 meters).

zed2i_rtabmap_vo.bag

zed_rtabmap_vo.bag

The resolution for both cameras is the same.

Here are our common.yaml and zed2i.yaml:

```yaml
# params/common.yaml
# Common parameters to Stereolabs ZED and ZED mini cameras
---

# Dynamic parameters cannot have a namespace
brightness: 4                   # Dynamic
contrast: 4                     # Dynamic
hue: 0                          # Dynamic
saturation: 4                   # Dynamic
sharpness: 4                    # Dynamic
gamma: 8                        # Dynamic - Requires SDK >=v3.1
auto_exposure_gain: true        # Dynamic
gain: 100                       # Dynamic - works only if `auto_exposure_gain` is false
exposure: 100                   # Dynamic - works only if `auto_exposure_gain` is false
auto_whitebalance: true         # Dynamic
whitebalance_temperature: 42    # Dynamic - works only if `auto_whitebalance` is false
depth_confidence: 30            # Dynamic
depth_texture_conf: 100         # Dynamic
pub_frame_rate: 20.0            # Dynamic - frequency of publishing of video and depth data
point_cloud_freq: 10.0          # Dynamic - frequency of the pointcloud publishing (equal or less to `grab_frame_rate` value)

general:
    camera_name: zed            # A name for the camera (can be different from camera model and node name and can be overwritten by the launch file)
    zed_id: 0
    serial_number: 0
    resolution: 2               # '0': HD2K, '1': HD1080, '2': HD720, '3': VGA
    grab_frame_rate: 20         # Frequency of frame grabbing for internal SDK operations
    gpu_id: -1
    base_frame: 'base_link'     # must be equal to the frame_id used in the URDF file
    verbose: false              # Enable info message by the ZED SDK
    svo_compression: 2          # `0`: LOSSLESS, `1`: AVCHD, `2`: HEVC
    self_calib: true            # enable/disable self calibration at starting
    camera_flip: false

video:
    img_downsample_factor: 0.5  # Resample factor for images [0.01,1.0] The SDK works with native image sizes, but publishes rescaled image.
    extrinsic_in_camera_frame: true # if `false` extrinsic parameter in `camera_info` will use ROS native frame (X FORWARD, Z UP) instead of the camera frame (Z FORWARD, Y DOWN) [`true` use old behavior as for version < v3.1]

depth:
    quality: 2                  # '0': NONE, '1': PERFORMANCE, '2': QUALITY, '3': ULTRA, '4': NEURAL
    sensing_mode: 0             # '0': STANDARD, '1': FILL (not use FILL for robotic applications)
    depth_stabilization: 0      # `0`: disabled, `1`: enabled
    openni_depth_mode: false    # 'false': 32bit float meters, 'true': 16bit uchar millimeters
    depth_downsample_factor: 0.5 # Resample factor for depth data matrices [0.01,1.0] The SDK works with native data sizes, but publishes rescaled matrices (depth map, point cloud, ...)

pos_tracking:
    pos_tracking_enabled: true  # True to enable positional tracking from start
    publish_tf: true            # publish `visual_odom -> base_link` TF
    publish_map_tf: false       # publish `map -> visual_odom` TF
    map_frame: 'map'            # main frame
    odometry_frame: 'odom'      # odometry frame
    area_memory_db_path: 'zed_area_memory.area' # file loaded when the node starts to restore the "known visual features" map.
    save_area_memory_db_on_exit: false # save the "known visual features" map when the node is correctly closed to the path indicated by `area_memory_db_path`
    area_memory: true           # Enable to detect loop closure
    floor_alignment: false      # Enable to automatically calculate camera/floor offset
    initial_base_pose: [0.0,0.0,0.0, 0.0,0.0,0.0] # Initial position of the `base_frame` -> [X, Y, Z, R, P, Y]
    init_odom_with_first_valid_pose: true # Enable to initialize the odometry with the first valid pose
    path_pub_rate: 2.0          # Camera trajectory publishing frequency
    path_max_count: -1          # use '-1' for unlimited path size
    two_d_mode: false           # Force navigation on a plane. If true the Z value will be fixed to "fixed_z_value", roll and pitch to zero
    fixed_z_value: 0.00         # Value to be used for Z coordinate if `two_d_mode` is true

mapping:
    mapping_enabled: false      # True to enable mapping and fused point cloud publication
    resolution: 0.05            # maps resolution in meters [0.01f, 0.2f]
    max_mapping_range: -1       # maximum depth range while mapping in meters (-1 for automatic calculation) [2.0, 20.0]
    fused_pointcloud_freq: 1.0  # frequency of the publishing of the fused colored point cloud
    clicked_point_topic: '/clicked_point' # Topic published by Rviz when a point of the cloud is clicked. Used for plane detection

# params/zed2i.yaml
# Parameters for Stereolabs ZED2 camera
---

general:
    camera_model: 'zed2i'
    camera_name: 'zed2i'

depth:
    min_depth: 0.3              # Min: 0.2, Max: 3.0 - Default 0.7 - Note: reducing this value wil require more computational power and GPU memory
    max_depth: 20.0             # Max: 40.0
    quality: 3                  # '0': NONE, '1': PERFORMANCE, '2': QUALITY, '3': ULTRA, '4': NEURAL

pos_tracking:
    imu_fusion: true            # enable/disable IMU fusion. When set to false, only the optical odometry will be used.

sensors:
    sensors_timestamp_sync: false # Synchronize Sensors messages timestamp with latest received frame
    max_pub_rate: 200.          # max frequency of publishing of sensors data. MAX: 400. - MIN: grab rate
    publish_imu_tf: false       # publish `IMU -> <cam_name>_left_camera_frame` TF

object_detection:
    od_enabled: false           # True to enable Object Detection [not available for ZED]
    model: 0                    # '0': MULTI_CLASS_BOX - '1': MULTI_CLASS_BOX_ACCURATE - '2': HUMAN_BODY_FAST - '3': HUMAN_BODY_ACCURATE - '4': MULTI_CLASS_BOX_MEDIUM - '5': HUMAN_BODY_MEDIUM - '6': PERSON_HEAD_BOX
    confidence_threshold: 50    # Minimum value of the detection confidence of an object [0,100]
    max_range: 15.              # Maximum detection range
    object_tracking_enabled: true # Enable/disable the tracking of the detected objects
    body_fitting: false         # Enable/disable body fitting for 'HUMAN_BODY_X' models
    mc_people: true             # Enable/disable the detection of persons for 'MULTI_CLASS_BOX_X' models
    mc_vehicle: true            # Enable/disable the detection of vehicles for 'MULTI_CLASS_BOX_X' models
    mc_bag: true                # Enable/disable the detection of bags for 'MULTI_CLASS_BOX_X' models
    mc_animal: true             # Enable/disable the detection of animals for 'MULTI_CLASS_BOX_X' models
    mc_electronics: true        # Enable/disable the detection of electronic devices for 'MULTI_CLASS_BOX_X' models
    mc_fruit_vegetable: true    # Enable/disable the detection of fruits and vegetables for 'MULTI_CLASS_BOX_X' models
    mc_sport: true              # Enable/disable the detection of sport-related objects for 'MULTI_CLASS_BOX_X' models
```
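With `resolution: 2` (HD720) and `img_downsample_factor: 0.5` in the common.yaml above, the wrapper grabs frames at native size but publishes rescaled images, so RTAB-Map receives half-size images. A small sketch of that arithmetic; the native sizes per mode are the standard ZED ones, and truncation to whole pixels is an assumption about the wrapper's rounding:

```python
# Native ZED image sizes (width, height) per `general.resolution` mode.
NATIVE_SIZES = {
    0: (2208, 1242),  # HD2K
    1: (1920, 1080),  # HD1080
    2: (1280, 720),   # HD720
    3: (672, 376),    # VGA
}


def published_size(resolution_mode, img_downsample_factor):
    """Approximate size of the images the wrapper publishes.

    Assumes simple truncation to whole pixels; the wrapper's exact rounding may differ.
    """
    if not 0.01 <= img_downsample_factor <= 1.0:
        raise ValueError("img_downsample_factor must be in [0.01, 1.0]")
    width, height = NATIVE_SIZES[resolution_mode]
    return int(width * img_downsample_factor), int(height * img_downsample_factor)


print(published_size(2, 0.5))  # (640, 360)
```

When comparing two cameras, both `resolution` and `img_downsample_factor` determine what RTAB-Map actually sees, not `resolution` alone.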

Administrator

Hi,

I tested the two bags like this:

ZED:

```
$ rosbag info zed_rtabmap_vo.bag
path:        zed_rtabmap_vo.bag
version:     2.0
duration:    13.6s
start:       Jan 31 2023 16:45:29.30 (1675212329.30)
end:         Jan 31 2023 16:45:42.87 (1675212342.87)
size:        40.5 MB
messages:    161
compression: none [46/46 chunks]
types:       sensor_msgs/CameraInfo [c9a58c1b0b154e0e6da7578cb991d214]
             sensor_msgs/Image      [060021388200f6f0f447d0fcd9c64743]
             tf2_msgs/TFMessage     [94810edda583a504dfda3829e70d7eec]
topics:      /tf                                23 msgs : tf2_msgs/TFMessage
             /zed_node/left/camera_info         46 msgs : sensor_msgs/CameraInfo
             /zed_node/left/image_rect_color    23 msgs : sensor_msgs/Image
             /zed_node/right/camera_info        46 msgs : sensor_msgs/CameraInfo
             /zed_node/right/image_rect_color   23 msgs : sensor_msgs/Image
```

```bash
roslaunch rtabmap_ros rtabmap.launch \
    args:="-d" \
    stereo:=true \
    left_image_topic:=/zed_node/left/image_rect_color \
    left_camera_info_topic:=/zed_node/left/camera_info \
    right_image_topic:=/zed_node/right/image_rect_color \
    right_camera_info_topic:=/zed_node/right/camera_info \
    use_sim_time:=true \
    frame_id:=zed_left_camera_optical_frame

rosbag play --clock zed_rtabmap_vo.bag
```

ZED2i:

```
$ rosbag info zed2i_rtabmap_vo.bag
path:        zed2i_rtabmap_vo.bag
version:     2.0
duration:    16.4s
start:       Jan 31 2023 16:40:19.12 (1675212019.12)
end:         Jan 31 2023 16:40:35.50 (1675212035.50)
size:        140.9 MB
messages:    642
compression: none [161/161 chunks]
types:       sensor_msgs/CameraInfo [c9a58c1b0b154e0e6da7578cb991d214]
             sensor_msgs/Image      [060021388200f6f0f447d0fcd9c64743]
             tf2_msgs/TFMessage     [94810edda583a504dfda3829e70d7eec]
topics:      /tf                               162 msgs : tf2_msgs/TFMessage     (2 connections)
             /zed_node/left/camera_info        160 msgs : sensor_msgs/CameraInfo
             /zed_node/left/image_rect_color    80 msgs : sensor_msgs/Image
             /zed_node/right/camera_info       160 msgs : sensor_msgs/CameraInfo
             /zed_node/right/image_rect_color   80 msgs : sensor_msgs/Image
```
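The average image rate of each recording can be estimated directly from the `rosbag info` output above as image messages divided by bag duration. A minimal sketch; note that this is an average over the whole bag, so it can differ from the rate `rostopic hz` reports during playback if the recording has gaps or bursts:

```python
def average_rate_hz(message_count, duration_s):
    """Average publishing rate of a topic over a whole bag recording."""
    if duration_s <= 0:
        raise ValueError("duration must be positive")
    return message_count / duration_s


# Left-image counts and durations copied from the `rosbag info` output above.
bags = {
    "zed": {"images": 23, "duration_s": 13.6},
    "zed2i": {"images": 80, "duration_s": 16.4},
}
for name, info in bags.items():
    rate = average_rate_hz(info["images"], info["duration_s"])
    print(f"{name}: {rate:.1f} Hz")  # zed: 1.7 Hz, then zed2i: 4.9 Hz
```

Both averages are well below the `pub_frame_rate: 20.0` set in common.yaml, which suggests frames were dropped somewhere between the wrapper and the recorder.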

```bash
roslaunch rtabmap_ros rtabmap.launch \
    args:="-d" \
    stereo:=true \
    left_image_topic:=/zed_node/left/image_rect_color \
    left_camera_info_topic:=/zed_node/left/camera_info \
    right_image_topic:=/zed_node/right/image_rect_color \
    right_camera_info_topic:=/zed_node/right/camera_info \
    use_sim_time:=true \
    frame_id:=zed2i_left_camera_optical_frame

rosbag play --clock zed2i_rtabmap_vo.bag
```

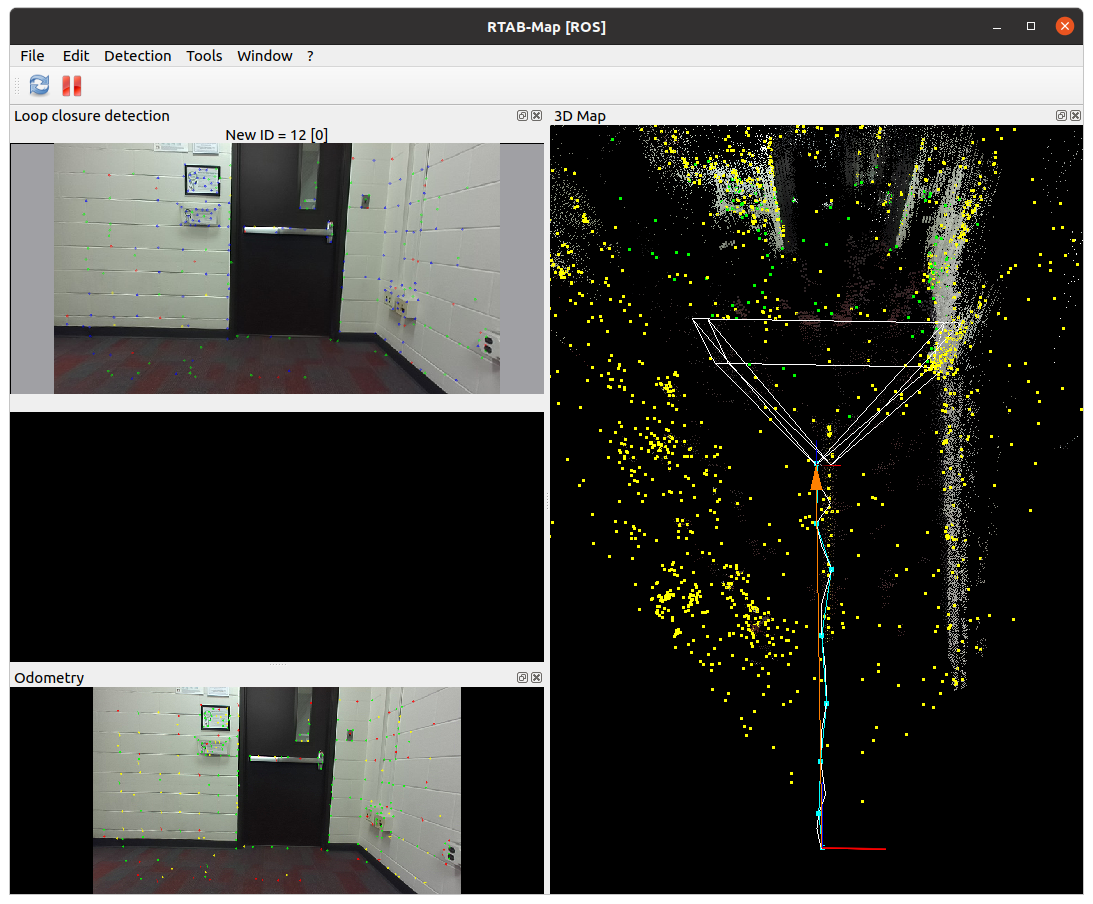

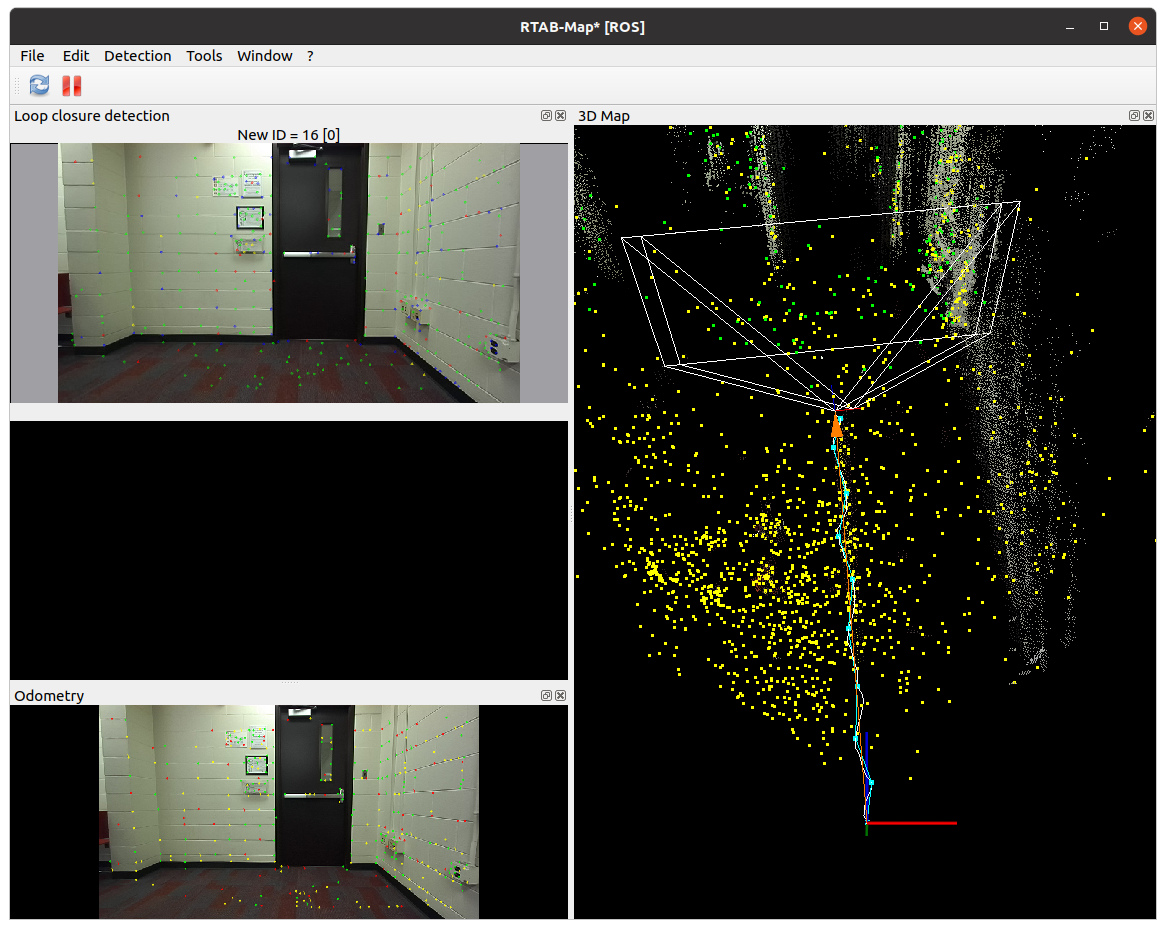

Both trajectories look similar. However, the frame rate of the ZED bag is too low for visual odometry (only 2 Hz), while the ZED2i bag is at 10 Hz (not bad, but it could be faster).

Cheers,
Mathieu
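A low frame rate hurts visual odometry because the camera moves farther between consecutive frames, so fewer features overlap and matching becomes less reliable. A rough sketch of the distance covered between frames, assuming a walking speed of about 1 m/s (an assumption, not measured in this thread):

```python
def displacement_per_frame(speed_m_s, rate_hz):
    """Distance the camera travels between two consecutive frames."""
    if rate_hz <= 0:
        raise ValueError("rate must be positive")
    return speed_m_s / rate_hz


WALKING_SPEED_M_S = 1.0  # assumed handheld walking speed
for rate in (2.0, 10.0, 20.0):  # 2 and 10 Hz as discussed above; 20 Hz is the configured pub_frame_rate
    step = displacement_per_frame(WALKING_SPEED_M_S, rate)
    print(f"{rate:4.0f} Hz -> {step:.2f} m between frames")
```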

Hello Mathieu,

Thank you for looking at this. My previous post was incorrect again (sorry): for some reason the ZED wrapper's odometry was enabled, so the wrapper, not RTAB-Map, was publishing odom -> base_link. With RTAB-Map, the ZED1 does perform better than the ZED2i. I was able to borrow a different ZED2i and ran the same tests on it. This second ZED2i is extremely reliable, performing just as well as the ZED1, which leads me to believe that our ZED2i has a hardware or firmware issue. Thanks again for your assistance; I will let you know if we continue to have issues.
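The confusion above came from two nodes publishing the same odometry TF. A minimal sketch of a sanity check on the wrapper parameters, taken as a dict (for example loaded from common.yaml with PyYAML's `yaml.safe_load`) and using the `pos_tracking` keys posted earlier in this thread. The function is hypothetical, and it assumes the wrapper publishes that TF only when both `pos_tracking_enabled` and `publish_tf` are true:

```python
def wrapper_publishes_odom_tf(zed_params):
    """True if the ZED wrapper config would publish its own odometry TF.

    Assumption: the TF is published only when positional tracking is enabled
    and `pos_tracking.publish_tf` is true (key names from the posted common.yaml).
    """
    pos = zed_params.get("pos_tracking", {})
    return bool(pos.get("pos_tracking_enabled", False) and pos.get("publish_tf", False))


# Values from the common.yaml posted in this thread.
posted = {"pos_tracking": {"pos_tracking_enabled": True, "publish_tf": True}}
print(wrapper_publishes_odom_tf(posted))  # True: would compete with RTAB-Map's odometry TF

fixed = {"pos_tracking": {"pos_tracking_enabled": True, "publish_tf": False}}
print(wrapper_publishes_odom_tf(fixed))   # False
```

Setting `publish_tf: false` is one way to leave the odometry TF to RTAB-Map; whether to keep the wrapper's positional tracking enabled for other uses is a separate choice.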

Return to Official RTAB-Map Forum
