RTAB SLAM using Wheel Encoder+imu+2d rotating Lidar

|

This post was updated on .

Hi all,

So I'm trying to build a 3d map inside a featureless pipe using wheel encoders + imu + 2d rotating laser. So considering all the t-junctions as a feature to my pipe, I was wondering whether RTAB map can be used without a stereo camera or not. |

|

Administrator

|

Hi,

RTAB-Map can be used without a stereo camera with latest version from source, though there is no official tutorial to do that. Loop closure detection doesn't work as there is no camera. However, proximity detection is still working with scans, so some loop closures can be detected as long as odometry doesn't drift too much. In your setup, the 2d laser should be converted to a 3d point cloud. For example, for each 360 rotation of the lidar, the scans could be accumulated using laser scan assembler. With latest rtabmap version, rtabmap_ros/point_cloud_assembler nodelet could be also used for that (with scans converted to PointCloud2 before). The assembled point cloud would be sent to rtabmap. The wheel encoders + IMU could give the odometry input to rtabmap. To launch rtabmap subscribing only on point cloud and odometry: <node pkg="rtabmap_ros" type="rtabmap" name="rtabmap"> <param name="subscribe_depth" type="bool" value="false"/> <param name="subscribe_rgb" type="bool" value="false"/> <param name="subscribe_scan_cloud" type="bool" value="true"/> <remap from="scan_cloud" to="assembled_scans"/> <!-- sensor_msgs/PointCloud2 --> <remap from="odom" to="odometry"/> <!-- odometry from wheel encoders + IMU fusion --> ... </node>For maximum accuracy when accumulating a point cloud, the robot may stand still. If rtabmap_ros/point_cloud_assembler is used and odometry is quite accurate on short distance, we could feed it the odometry so that the scans are transformed accordingly to odometry when moving during the rotation. cheers, Mathieu |

|

Thanks a lot @matlabbe

So, probably will try 1st with Google Cartographer, if it fails will go for R-TAB. So, I wanted to ask 1 more thing, the loop closure cannot be achieved with a monocular camera, it needs a stereo camera, right? |

|

Administrator

|

Yeah, monocular is not supported in metric slam mode (default), a stereo or RGB-D camera is required. A monocular camera is supported only for appearance-based mapping mode (without metrical estimation) like in the original paper on RTAB-Map on loop closure detection-only.

|

|

This post was updated on .

Thanks for your reply. So, if I have a Kinect as well to my system, do you think it would be sufficient to use RTAB mapping since my environment is featureless, only the junctions can be considered as features. As Kinect matches the corner+key features from the database, I was a bit skeptical about the RTAB mapping in such cases.

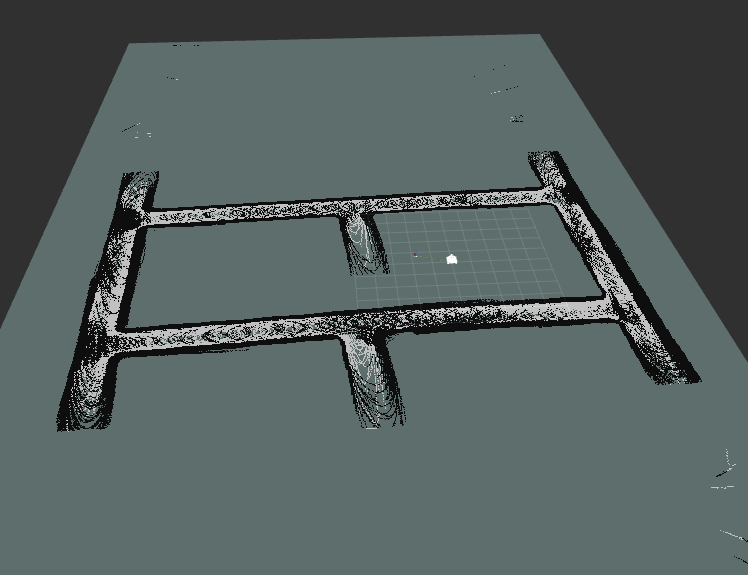

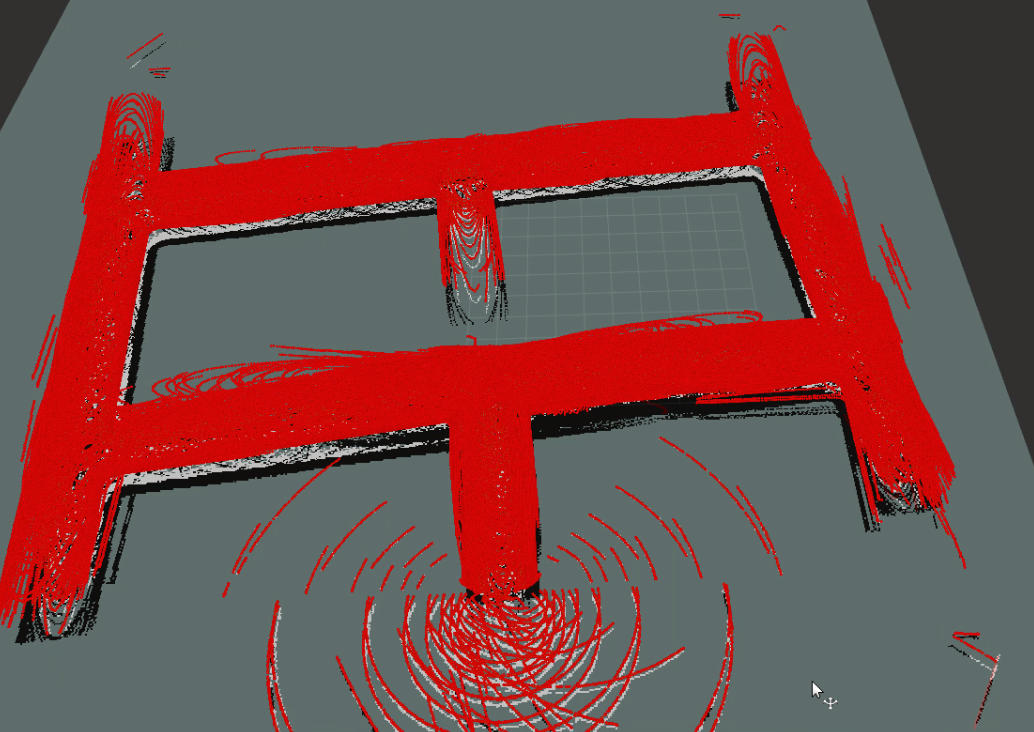

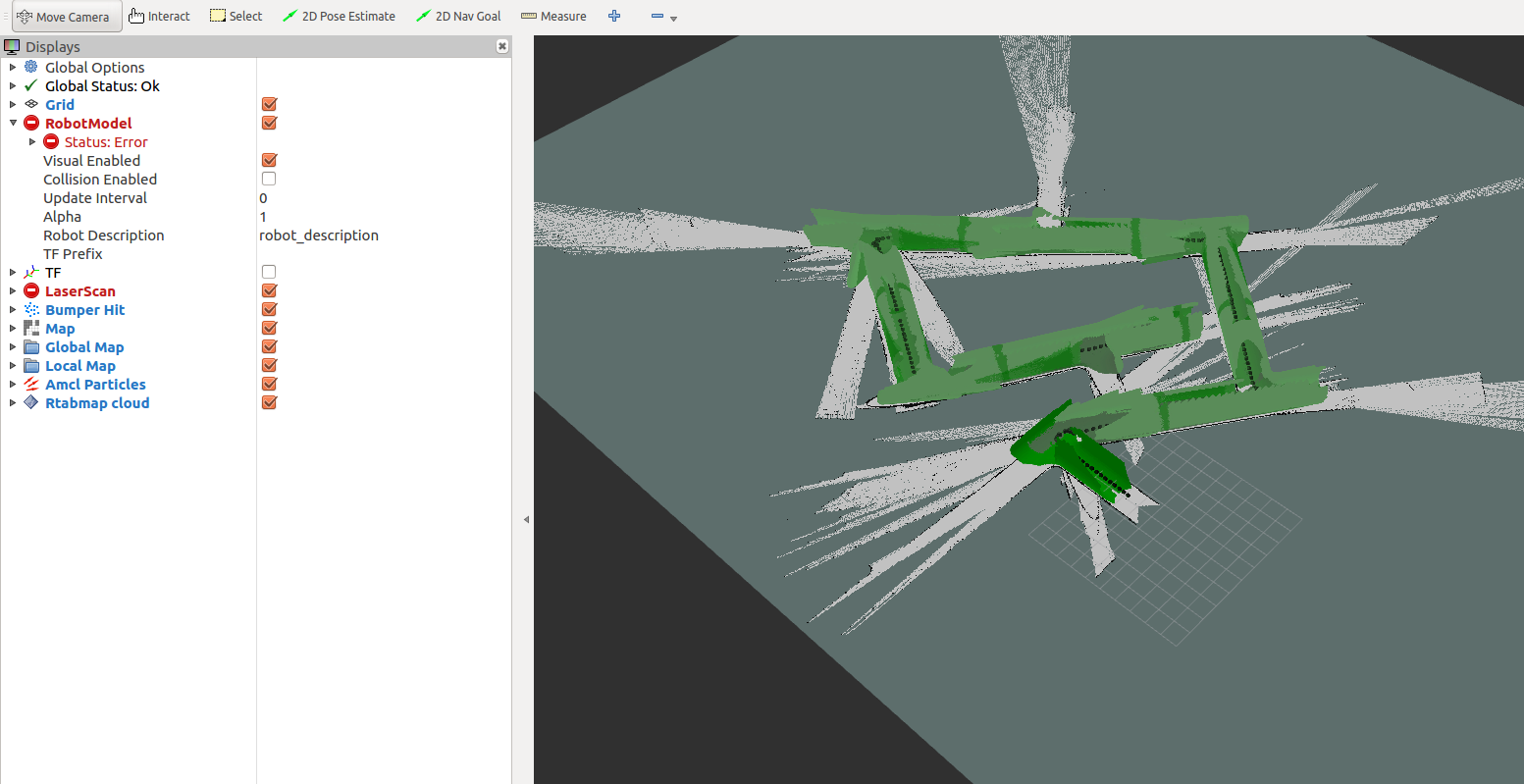

Moreover, I tried another approach. So, I added a 3d LiDAR Velodyne-16 which gives me point cloud directly. So, currently my launch file is this: <?xml version="1.0" encoding="UTF-8"?> <launch> <group ns="rtabmap"> <node pkg="rtabmap_ros" type="rtabmap" name="rtabmap"> <remap from="scan_cloud" to="/velodyne_points"/> <remap from="odom" to="/odom"/> <remap from="grid_map" to="/map"/> </node> </group> </launch> So, when I run the bag file, I'm not able to retain the point cloud when visualizing in Rviz. It disappears as the robots moves further and I end up with a 2d map instead of 3d.  But surprisingly, it is still able to close the loop. I read in one of your post where you suggested to use a monocular rgb camera and then transform the point cloud coming from velodyne to depth. But I'm afraid I couldn't figure out how to convert the point cloud data to depth image. Could you help me in this case? |

|

Administrator

|

Hi,

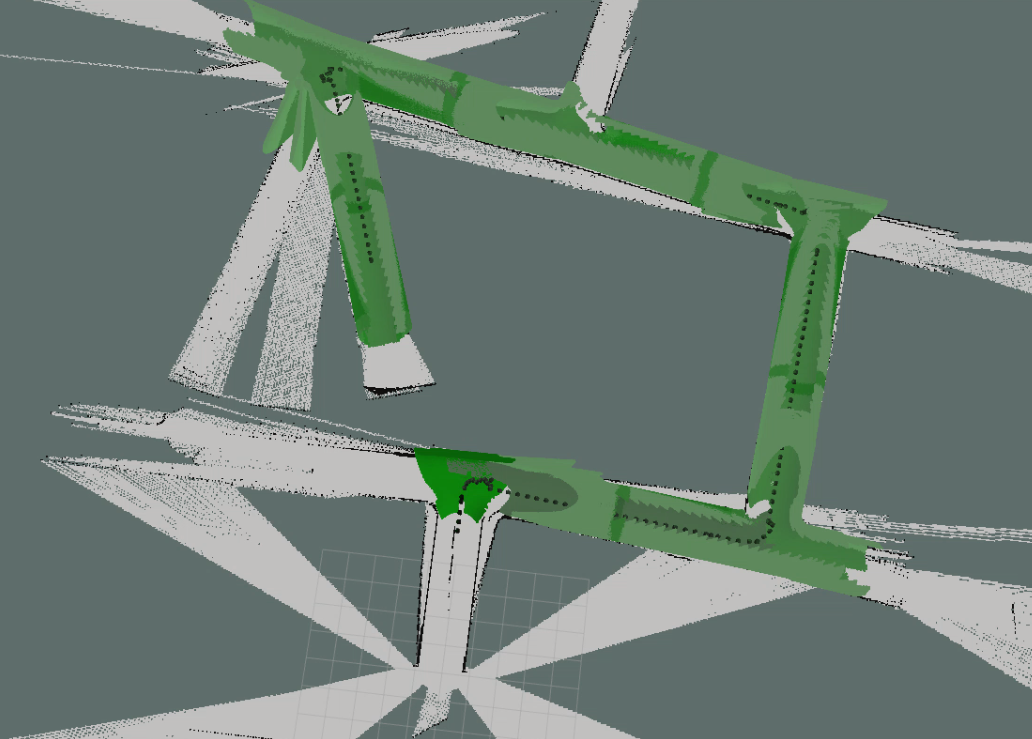

RTAB-Map can close small loop closures with only lidar (a process called "proximity detection" in RTAB-Map). To see the 3D cloud, subscribe to /rtabmap/cloud_map (which is a PointCloud2 type) topic in rviz. It could be easier to add a realsense camera instead of a single RGB camera so that you don't bother how projecting the lidar cloud in RGB camera frame to get a registered depth image. However, if your environment is visually featureless, a camera may just not be usable anyway for loop closure detection, relying only on geometry, which proximity detection is already doing in your case with the lidar alone. cheers, Mathieu |

|

This post was updated on .

Hi,

Yeah, in a small loop using only odometry and 3d Lidar is sufficient to do loop closure.

However, I tried with a Kinect, 2d Lidar and Odometry for the same map. I run the same bag each time and every time I get different results but it fails to close the loop   Is there any specific reason for this? Here is my launch file: my_rtab.launch Moreover, I think if I tune some parameters even this can work for me. |

«

Return to Official RTAB-Map Forum

|

1 view|%1 views

| Free forum by Nabble | Edit this page |