RTAB setup with a 3D LiDAR

|

Hello,

I need to scan and get a 3D model of a small building and its surroundings (trees, etc.). I plan on mounting my sensors on a small drone and fly around the building to scan it. The resolution should be good enough to see details like pipes, chimneys, conduits, etc. My main question is whether RTAB can be used to achieve it. My second questions is what is the best sensor setup to use with RTAB to achieve this goal. I have a 3D LiDAR unit (Velodyne VLP-16). Can it be used by RTAB to produce a 3D model? Do I need any additional sensors? In ROS, I can use velodyne node to get sensor_msgs/PointCloud2 point clouds. Can rtabmap be used to produce an accurate 3D model from them? Thank you for any insight. |

|

Administrator

|

Pretty much answered here: http://answers.ros.org/question/226968/use-of-rtabmap-with-3d-lidars/

RTAB-Map requires at least a camera for loop closure detection. Creating a registered depth image using the laser scans and you are good to go. My only concern is if the drone can power all these sensors and process all their data onboard (in term of power, weight and processing power). cheers, Mathieu |

|

Thank you Mathieu,

I want to make sure I understood you correctly. Are you suggesting to use a camera + RTAB to figure out the pose of the camera. Then compute the pose of the LiDAR. And then use the pose of the LiDAR to transform LiDAR's scans (point clouds) into a common coordinate frame. I'll look into power requirements. As far as processing power, I can afford to do processing offline. Thank you, I am very new to this field so any suggestions are appreciated. |

|

In reply to this post by chukcha2

Hi Chukcha,

RTABMAP can do the incremental map building you are speaking of, and definitely is capable of creating a detailed 3D model. however there are some suggestions and considerations i would offer: > consider using a Stereo Labs ZED camera [ here ] and the nVidia Jetson TX1 [ here ] > the ZED has a ROS driver which will provide 1080p HD RGB and Depth images, which RTABMAP will use to generate a detailed point cloud. > as Mathieu has said, the processing power is really something to consider. to get good performance, you will have to do a lot of tweaking. > I have heard of people using the Lidar LITE [ here ] as a LIDAR altimeter, which may be helpful. also the RPLIDAR is another useful 2D LIDAR, however for your application i dont believe it'll be very useful. best of luck |

|

Thank you @dgmiller! So if I get a camera I could use it together with RTABMAP to get a detailed point cloud. In this case, should I even bother with a lidar (I have Velodyne VLP-16)? Are lidars used to get better resolution/accuracy? If so, I guess I could start with just the camera and then add a lidar if needed.

As far as processing power goes, I have an nVidia Jetson TK1. It is pretty powerful but at this time I don't know how powerful of a system I will need. However I do not need to do all the processing in real time. I could do some/most/all processing offline (if that is something RTAB-MAP supports). Thank you for your suggestions! |

|

Administrator

|

Hi chukcha2,

If your goal is to have a precise 3D point cloud, I would use the Velodyne. RTAB-Map is not a Monocular SLAM approach, so you would need a stereo camera. As dgmiller pointed out, the ZED camera can be a good choice (I think they are supporting the Jetson). RTAB-Map can process data offline, if you are using a rosbag for example (you may record data at 10 Hz to get good odometry from RTAB-Map). Note that current RTAB-Map release (0.10) doesn't support well 3D lidars like the Velodyne (it is configured for 2D lidars). RTAB-Map can still create 3D point clouds from 3D lidars but ICP cannot be used to refine the map. The next version (0.11) will officially support 3D lidars. In the mean time, you can experiment with only a stereo camera on your drone. cheers, Mathieu |

|

Thank you dgmiller, Mathieu,

I looked at ZED and it looks very interesting. Do you have an idea of how hard it would be to add point clouds from the Velodyne to ZED's point clouds? Do you have a guess as to when version 0.11 will come out? Is registration of lidar point clouds based on some approach other than feature extraction? What is the principle behind it? Thank you. |

|

Administrator

|

Hi chukcha2,

You will be able to use a combination of features-based and/or ICP-based odometry. For example, odometry can be computed with visual features from the ZED camera and ICP (with point clouds from the Velodyne) could be done to refine the estimated transformation. RTAB-Map will assemble a XYZRGB point cloud for the ZED camera and a XYZ point cloud for the Velodyne (in ROS it is the rtabmap/cloud_map and rtabmap/scan_map topics). I am very near to make this release, probably next week :P cheers, Mathieu |

|

Thank you Mathieu, I am looking forward to 0.11 :)

In case this is helpful to you or others: after more than a week of waiting, ZED support finally partially replied to my questions regarding the ZED's specifications. They said that the depth accuracy is "1mm @1m and 1.5m @15m". I don't know if the relationship between the depth accuracy and actual distance is linear but if it is, then at 2 meters the accuracy is already ~11cm and at 6m it is over half a meter. So I am not very optimistic about using ZED by itself. But I hope that if depth data is taken from the Velodyne, the accuracy can be acceptable. Given ZED's pretty bad accuracy at larger distances, would it be better to use some other camera that gives better resolution which I am guessing would allow better feature detection? |

|

Administrator

|

Hi,

The ZED camera is a stereo camera with a standard baseline (12 cm). The error is actually exponential with the distance from the camera. To get better accuracy at longer ranges, the distance between the cameras should be higher (like ~1 meter for autonomous cars). If your drone flies close to objects (<15 meters), it would be not a big problem for rtabmap's odometry. In 0.11, to create a registered depth image from lidar point cloud, you can use util3d::projectCloudToCamera() and util3d::fillProjectedCloudHoles() functions. Create a node subscribing to PointCloud2 (from velodyne) and CameraInfo (from the camera), then republish a depth image for rgbd_odometry node. Note that in this setup, the minimum requirement is a single camera + 3d lidar (velodyne). cheers |

|

Mathieu,

If I understood you correctly you are suggesting to get RGB data (CameraInfo) from the camera and depth data (PointCloud2) from Velodyne. In other words you are suggesting not to use depth information from the stereo camera and basically use it as a monocular camera. And if that is the case, wouldn't it be a better to use, instead of ZED, a monocular camera that has greater resolution than ZED? Or are there some advantages to using ZED that I am overlooking? |

|

Administrator

|

Hi,

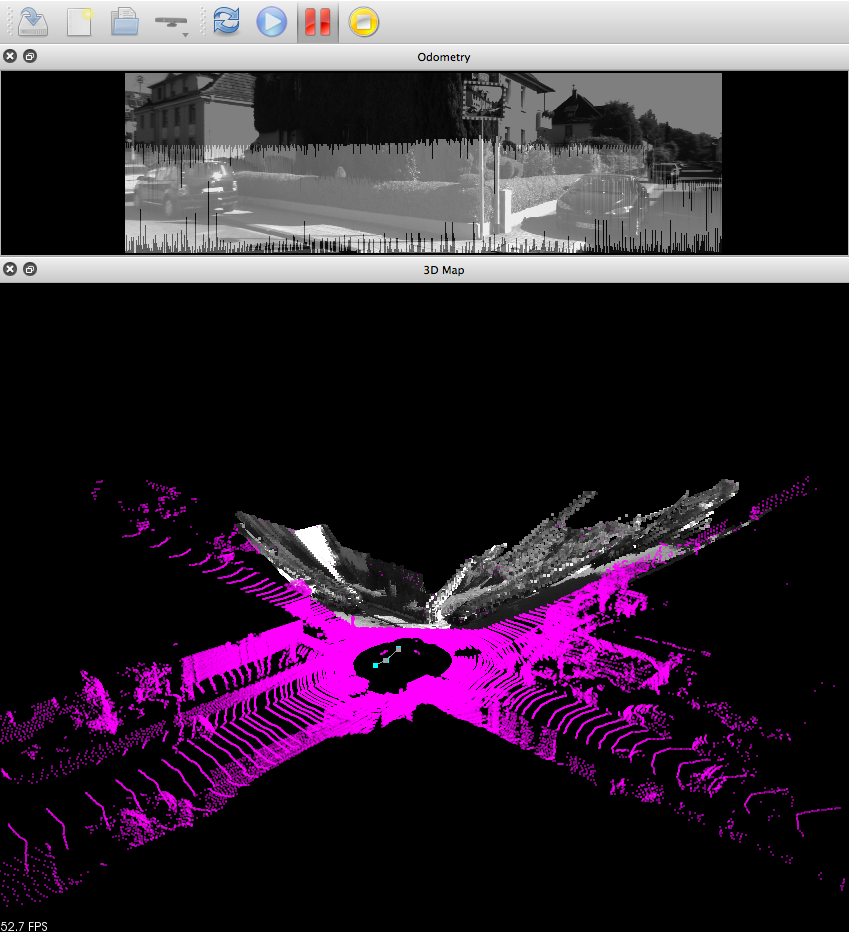

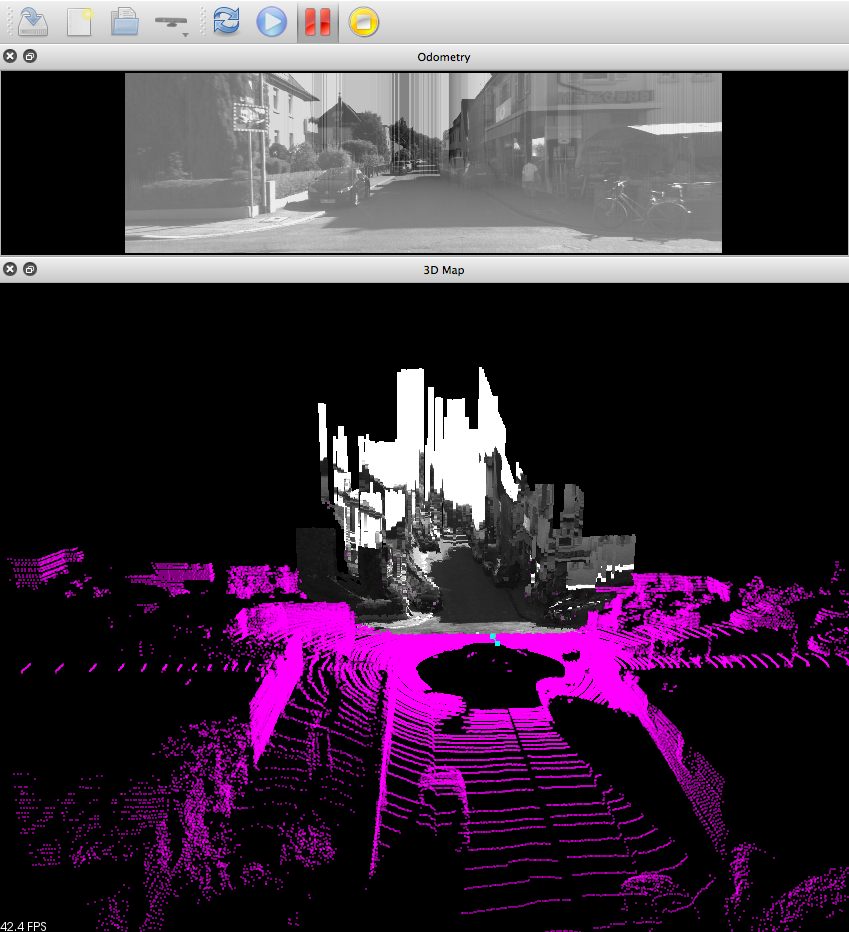

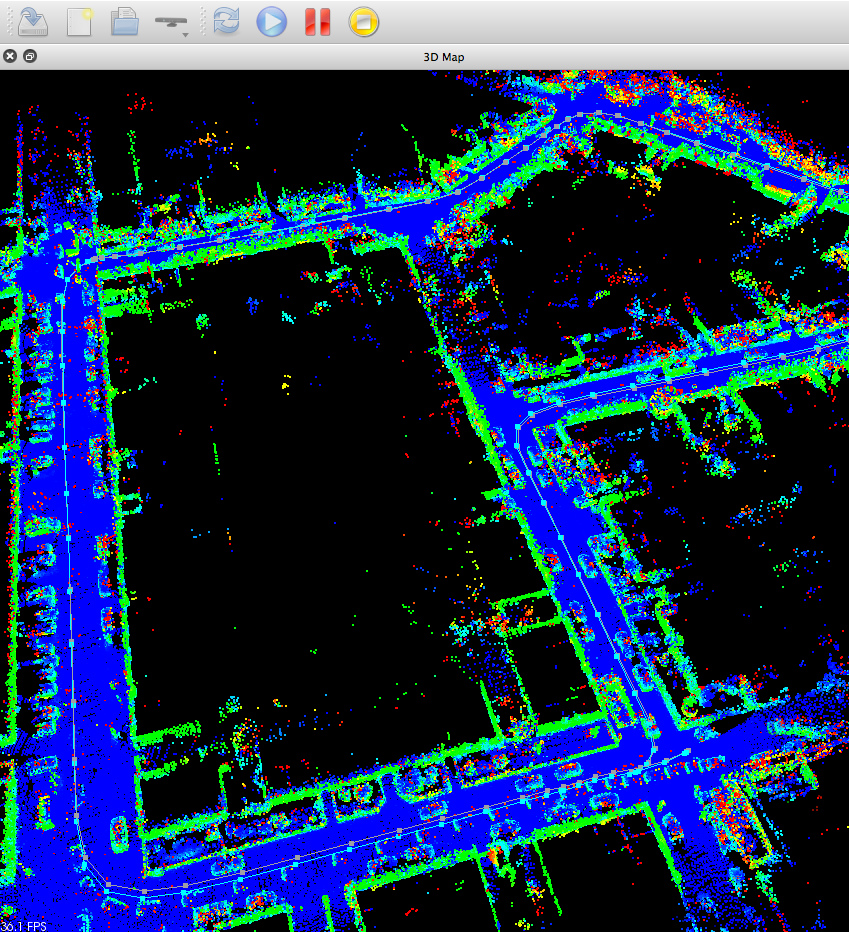

Yes, in that setup you only need a monocular camera with the Velodyne. Here are some examples of registering the point cloud of the Velodyne to a RGB camera. 1) Holes are filled between the projected points in the camera, to create a more dense depth image. Note how in that setup only the bottom of the image has valid depth values (the velodyne hits only stuff under ~3 meters, we could tilt the camera toward the ground to see more points)  2) Holes are filled up to border of the image  While the depth is interpolated, visual odometry can be done as a guess for ICP, thus giving a precise odometry. The resolution of the RGB camera doesn't need to be high (1024x768 is fine). I think that having a ZED camera could give a better guess to ICP. However, ICP with velodyne data is quite good to correct poor guesses (so many points over 360 degrees!). Here is an example of assembled scans after mapping with mono RGB+Velodyne (gray line is the ground truth, cyan line is the estimated trajectory):  cheers |

|

Wow, the screen shots look impressive! In the first example, what is the waveform-like shape in the odometry window? It is not clear in the 3D Map window of the first two examples, whether the lidar/camera was stationary (no registration of 3D point clouds). Obviously the third example shows a moving lidar/camera setup. Looks very impressive!

However from the snapshots it is not immediately clear how accurate the 3D maps are. If it is not too much trouble could you upload a 3D map so I can take a look at it in RTAB or some other software (for example in https://sketchfab.com)? Is this roughly what you are doing: 1) combine RBD from the camera and D from the lidar 2) use visual odometry to pre-register the frames 2) use ICP to finely register 3D point clouds You used a monocular camera in your setup. Do you have any pointers in choosing a camera that will do the job well? (from what I've understood so far there is no advantage to using stereo camera + velodyne vs monocular camera + velodyne) Thank you! |

|

Administrator

|

Hi,

In the first windows, it was just a comparison of the regenerated RGB-D point cloud over a single velodyne scan. I uploaded the point cloud from the assembled scans here: https://skfb.ly/LPAV The gray waveform is the projected depth (interpolated). So for each RGB pixel there is a corresponding depth value (computed from the Velodyne point cloud). This is what I am doing :P You can also add loop closure detection and graph optimization on the SLAM's back-end. A standard webcam could do the job. cheers |

|

Thank you Mathieu for taking the time to upload the map. I am guessing you down sampled the point cloud (it says there are "626.6k vertices") which might make sense for a large map but I'll have to try it with a more dense point cloud to see how accurate small details are scanned. Which Velodyne LiDAR model are you using? In your example the velodyne is mounted on a ground vehicle and hence its rotational motion is mostly limited to rotation around the vertical axis. Do you have an experiment where you moved the lidar in all 6 DoF? Or just tilt it to scan a wall of a building. I wonder how ICP works in this case. From what I understand for ICP to work the scans need to overlap but if between two scans I move the lidar in such a way that each scan line moves up slightly vertically, the two scans won't have overlapping points.

|

|

Administrator

|

Hi,

Indeed, the point cloud is downsampled x10 and a voxel grid of 30 cm is applied (130 000 pts per scan to ~5 000 pts per scan) to reduce ICP time so that Visual-ICP odometry can be done in ~100/120 ms (laptop 2010). The data used is from the KITTI dataset, the LiDAR is a Velodyne HDL-64E. Note that we can save the raw laser scans in the database and do the filtering when required (to export all points). I cannot upload to full scan to sketchfab (>50MB), so there it is: kitti07_fullscan.ply (12 846 629 pts). I don't have experimented on full 6DoF the LiDAR. If you find a dataset which contains such motion, send me the link :P The same problem can occur with a stereo camera if the drone is moving too fast so that there is no overlap between images. How fast you can go is dependent on how fast you can compute the odometry. You can also look at the project libpointmatcher, which has many examples with Velodyne sensors (working only with this sensor, no camera needed). cheers |

|

Hi Mathieu,

I used VLP-16 to scan a building wall by tilting it several times back and forth. I hope it is helpful and am very interested of the results: https://www.dropbox.com/s/0bvsgh3wmdilqya/2016-03-24-15-02-28_Velodyne-VLP-16-Data.pcap?dl=0 If the new version of rtabmap is available I can give it a try myself if there are instructions somewhere. Thank you. |

|

Hi Mathieu,

I see that version 0.11.7 is out. Does it have better support for LiDAR scans now? And have you done any more work using Lidar+Camera as an RGB-D camera? |

|

Administrator

|

Yes, in 0.11.7 the library is updated to handle 3D laser scans. However, the ROS interface has not been totally updated.

For rtabmap node, you have now a generic point cloud input (/scan_cloud) that can be enabled with "subscribe_scan_cloud=true". There are two more parameters: "scan_cloud_normal_k" (int) to compute normals of the point cloud if not 0, "scan_cloud_max_points" the maximum points of a scan. For odometry, the laser scan input is missing, so it will only estimate with RGB-D images (default). You would need a node creating a depth image from rgb camera_info and Lidar data, similar to what is done in the rtabmap library. See util3d::projectCloudToCamera() (cameraTransform is the transform from lidar frame to camera optical frame) and util3d::fillProjectedCloudHoles(). Note that a node like that would be added to rtabmap_ros in the future for convenience. So you would have these topics: Camera: RGB image -> /camera/rgb/image_rect_color Camera: RGB Camera info -> /camera/rgb/camera_info Lidar: Point cloud -> /lidar/cloud Point Cloud Projection: Depth image -> /camera/depth_registered/image_raw A minimal launch file:

<launch>

<node type="cloud_projection" ...

<!-- inputs -->

<param name="camera_info_topic" value="/camera/rgb/camera_info" />

<param name="cloud_topic" value="/lidar/cloud"/>

<!-- output -->

<param name="depth_topic" value="/camera/depth_registered/image_raw" />

</node>

<include file="$(find rtabmap_ros)/launch/rtabmap.launch">

<arg name="frame_id" value="base_link"/>

<arg name="subscribe_scan_cloud" value="true"/>

<arg name="scan_cloud_max_points" value="130000"/> <!-- Velodyne HDL-64E -->

<arg name="rgb_topic" value="/camera/rgb/image_rect_color" />

<arg name="depth_topic" value="/camera/depth_registered/image_raw" />

<arg name="camera_info_topic" value="/camera/rgb/camera_info" />

<arg name="scan_cloud_topic" value="/lidar/cloud"/>

<!-- Icp parameters based on KITTI dataset -->

<arg name="rtabmap_args" value="--delete_db_on_start --Reg/Strategy 1 --Icp/MaxTranslation 0 --Icp/VoxelSize 0.3 --Icp/DownsamplingStep 10 --Icp/MaxCorrespondenceDistance 0.3 --Icp/Iterations 10 Icp/Epsilon 0.01 --Icp/CorrespondenceRatio 0.1 --Icp/PointToPlane true"/>

<!-- Default visual-only odometry, Icp ROS interface is not yet implemented-->

<arg name="odom_args" value="--Reg/Strategy 0"/>

</include>

</launch>

For more information about Icp parameters, see: $ rosrun rtabmap_ros rtabmap --params | grep Icp Some optimizations would be to downsample/voxelize the point cloud and compute normals ("scan_cloud_normal_k" can be used for that) before sending it to rtabmap node (then these parameters would be: "--Icp/VoxelSize 0 --Icp/DownsamplingStep 1"). cheers, Mathieu |

«

Return to Official RTAB-Map Forum

|

1 view|%1 views

| Free forum by Nabble | Edit this page |