RTAB vs other scanning apps for iOS



Hi everyone,

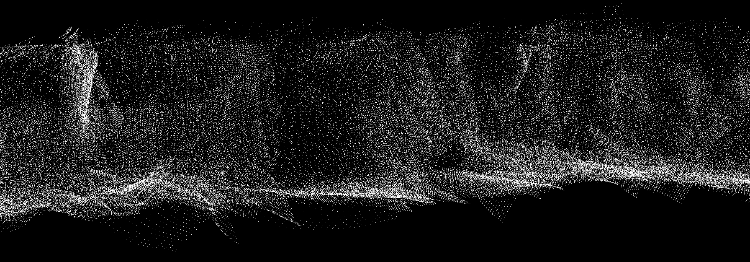

This question builds on the thread here, but I thought it would be helpful to start a new one for clarity. My goal is not to make an impractically broad feature request but rather to understand the challenges and explore whether there are ways to handle them myself.

To summarize the linked discussion: for scanning extensive galleries or corridors, ideally, we’d like to capture the ceiling, floor, and walls accurately with a single, smooth pass. To maximize coverage, I’ve been using the “low confidence” setting in the app, which discards less data but introduces substantial noise because of depth interpolation. This is especially problematic in low-texture areas. An example of this noise can be seen in the attached screenshot. Although the screenshot doesn’t fully show the extent, the noise is problematic in practice, and after extensive attempts to create a usable mesh from these clouds, I had to set the project aside.

Using “medium confidence” reduces noise slightly, but it seems the only consistently viable option is “high confidence,” as suggested by Mathieu in the original thread. However, this setting significantly extends scan time, as each pass needs to be slow and deliberate.

In contrast, it seems that other apps have found effective ways to mitigate this. For instance, I captured a quick scan using Scaniverse while walking briskly through a low-texture gallery (screenshot attached). Given the high quality achieved at such a speed, I’m curious about how Scaniverse handles this (it’s unfortunately closed-source) and whether any insights from it might make RTAB-Map more adaptable to similar scanning workflows. I would guess that there is some clever playing around with the confidence maps, though I’m not sure of the specifics.

Here are a few questions I hope might clarify a path forward:

- Are there any known algorithms or public methods relevant to handling this type of noise, or is it likely a custom solution developed by the Scaniverse team?
- Is there a way to achieve comparable results with RTAB-Map currently? Specifically, can it produce reliable scans while moving quickly through large, low-texture corridors?
- If not, are there strategies I could try using RTAB-Map’s data output to improve results? By that I mean starting from the depth images and poses (and RGB on the side) and then using external hand-made code. Any suggestions on where to start would be greatly appreciated, although I fear that it will be hard to do anything consistent and reliable without the data of the confidence maps.

Thank you in advance for any insights or suggestions!

Best,
Pierre
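As a possible starting point for the third question, here is a minimal sketch of turning one exported depth image and its pose into world-frame points. It assumes a pinhole camera model, depth values in metres, and a 4x4 camera-to-world pose that already includes the camera's optical-frame transform (x right, y down, z forward). The function name `backproject_depth` and the `max_depth` cutoff are hypothetical and not part of RTAB-Map.

```python
def backproject_depth(depth, fx, fy, cx, cy, pose, max_depth=5.0):
    """Convert a depth image into a list of world-frame (x, y, z) points.

    depth          : list of rows, each a list of depths in metres
                     (0 or NaN marks an invalid pixel)
    fx, fy, cx, cy : pinhole intrinsics in pixels
    pose           : 4x4 camera-to-world transform as nested lists
                     (assumed to map the optical frame to the world frame)
    max_depth      : hypothetical cutoff; farther pixels are dropped
    """
    points = []
    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            # NaN fails every comparison, so it is skipped here as well.
            if not (0.0 < z <= max_depth):
                continue
            # Pixel -> camera frame (pinhole model).
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            # Camera frame -> world frame.
            wx = pose[0][0] * x + pose[0][1] * y + pose[0][2] * z + pose[0][3]
            wy = pose[1][0] * x + pose[1][1] * y + pose[1][2] * z + pose[1][3]
            wz = pose[2][0] * x + pose[2][1] * y + pose[2][2] * z + pose[2][3]
            points.append((wx, wy, wz))
    return points
```

Accumulating the output of this function over all frames gives a raw cloud that external filtering or reconstruction code can work on. Plain lists are used only to keep the sketch self-contained; an array library would be much faster on full-resolution images.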


Administrator


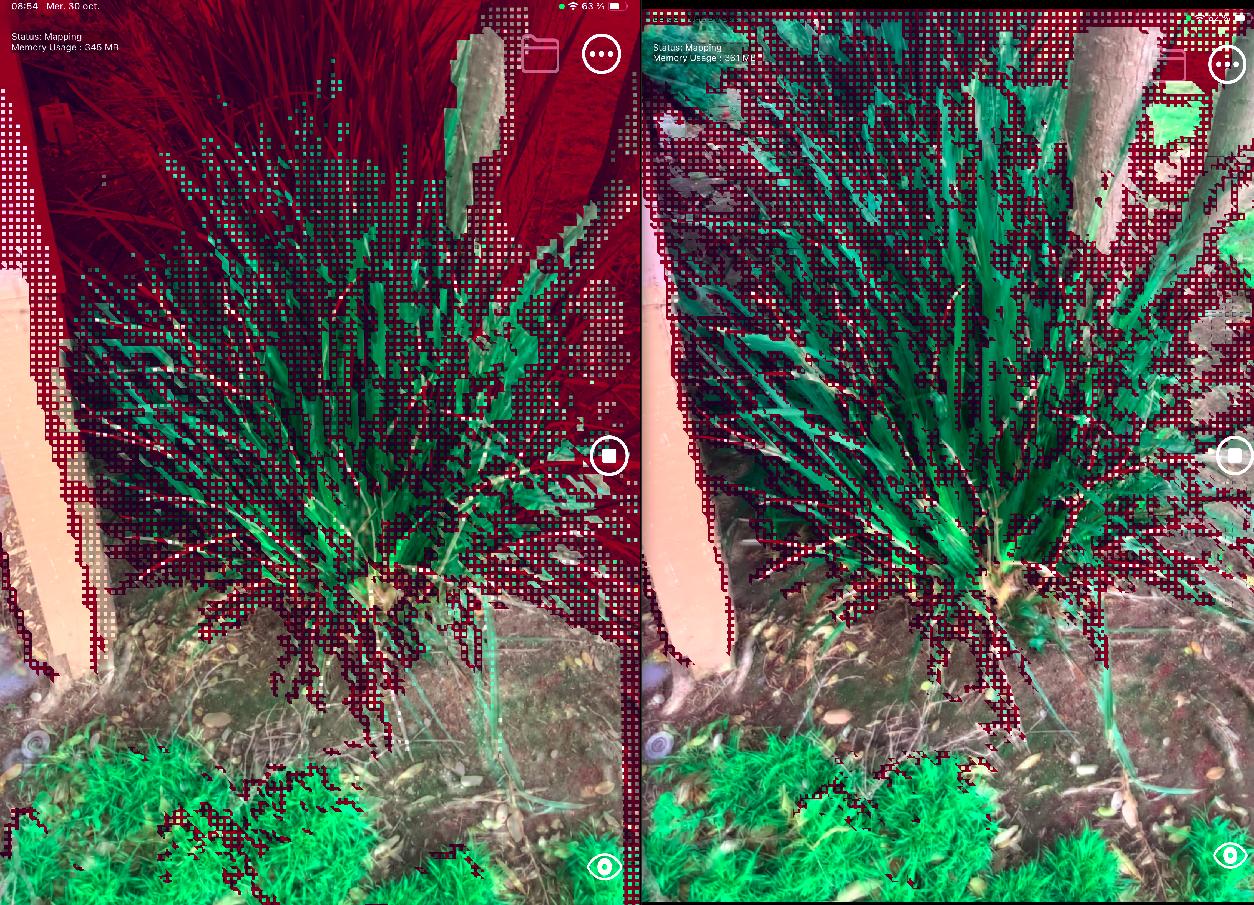

After a quick comparison between Scaniverse and RTAB-Map's depth confidence settings, it seems Scaniverse is using the high confidence setting. Here is a single frame of the same scene taken from both apps (left = Scaniverse, right = RTAB-Map with high confidence):

In comparison, here is RTAB-Map in medium and low confidence respectively:

So, the data looks the same. Not sure what you mean by "Given the high quality achieved at such a speed" if both apps use the same inputs. Their point cloud rendering does indeed look nicer; I don't know if it is just that effect that gives the impression of better results. In terms of speed, I noticed that their output file (with raw data) is 4 times larger than RTAB-Map's output file, meaning they are maybe recording point clouds faster (5 Hz or even more). In RTAB-Map's Mapping settings, you can increase the frame rate at which we add clouds to the map, so that you can scan "quicker".

In terms of meshing, they probably use a different approach than RTAB-Map, though the results look pretty similar. We have some noise filtering approaches in the Desktop App (primarily based on filters that you can find in the Point Cloud Library), but we didn't add those options to the iOS app.

As for achieving comparable results with RTAB-Map: probably, as both apps seem to be recording the same data. Note that you can set the rendering to Point Cloud to better see all points added in real time. With the online meshing approach, some polygons may not be shown (even if the points are recorded) when the angle between the surface normal and the camera point of view is large (there is a setting to adjust that angle in the Rendering options).

To try more settings, I generally load the map in the RTAB-Map desktop app, then play with all the options in the Export Clouds dialog.

cheers,
Mathieu
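To illustrate the kind of noise filtering mentioned above, here is a minimal brute-force sketch of statistical outlier removal, a filter family that also exists in the Point Cloud Library. Which filters the desktop app actually applies is not stated in this thread, so this is an illustration of the idea rather than RTAB-Map's implementation. The function name and the default values of `k` and `std_ratio` are assumptions.

```python
import math


def statistical_outlier_filter(points, k=8, std_ratio=1.0):
    """Drop points that are unusually far from their neighbours.

    For every point, compute the mean distance to its k nearest
    neighbours. Keep the point if that value does not exceed
    (global mean + std_ratio * global standard deviation).
    Brute force, O(n^2): meant for small clouds or for testing.
    """
    n = len(points)
    if n <= k:
        return list(points)  # not enough neighbours to decide anything

    mean_dists = []
    for i, p in enumerate(points):
        dists = sorted(math.dist(p, q) for j, q in enumerate(points) if j != i)
        mean_dists.append(sum(dists[:k]) / k)

    mu = sum(mean_dists) / n
    sigma = math.sqrt(sum((d - mu) ** 2 for d in mean_dists) / n)
    threshold = mu + std_ratio * sigma
    return [p for p, d in zip(points, mean_dists) if d <= threshold]
```

On interpolated low-confidence depth, a filter like this mostly removes isolated floating points; it will not remove a dense "fake" surface, because those points have close neighbours.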


Hi Mathieu,

Thank you for your response. It looks like the issue comes from a misunderstanding on my part. I had assumed Scaniverse was doing something beyond using high-confidence depth, but it seems I was wrong. I’ll focus more on testing with high confidence as you suggested.

There are still some things that are not clear to me about how Scaniverse and RTAB-Map compare for the scanning workflow I was talking about, but I will do more experimenting.

Apologies for the confusion, and thanks again.

Best,
Pierre


Hi,

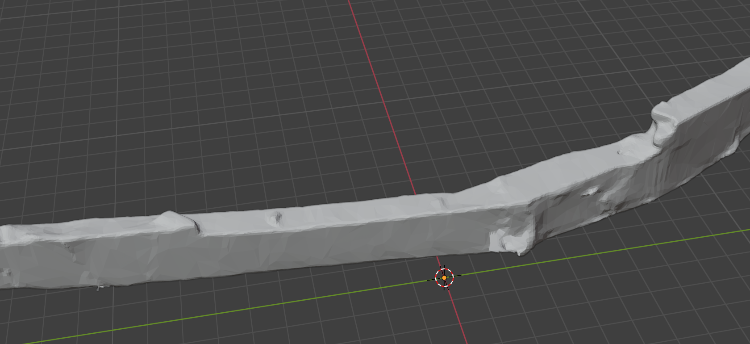

After doing more testing (and using both RTAB-Map and Scaniverse for many hours of scanning), I am almost certain that there is more going on in Scaniverse than just using high confidence. For a static image like your example, it does indeed seem to use high confidence. The difference in behaviour starts when you move the phone around. It is not so easy to show a comparison on the forum, but I'll see if I can include some videos if you find this helpful.

Here is a concrete example where the difference is obvious, although in practice it affects all my scans. I have been scanning a gallery which is fairly high and narrow. Using high confidence in RTAB-Map and walking while filming, none of the depth maps captured the ceiling or the upper walls. On the other hand, walking the exact same way with Scaniverse produced a decent mesh. First image: example of RTAB-Map's depth map in high confidence. In particular, the exported point cloud only shows the floor and a bit of the walls. The second picture is a screenshot of Scaniverse's mesh.

Where I am going with this is that I hope it gives some additional motivation to add an option in the iOS app where all points of any confidence are kept in the database, together with a grayscale image of their respective confidence, so that the confidence can be used as a weight in an implicit surface reconstruction algorithm (in post-production, not live). Right now, we have to make a fixed choice of confidence that cannot be changed within the scan, which is very inflexible for the conditions in which I'm using the app, although I understand that I might be using it in an exotic way.

A temporary solution would be to enable switching between levels of confidence within a scan (you could use low/medium and switch to high when capturing a smaller object in the scene, for example). This would already be extremely helpful for me :)

I hope my story makes a bit more sense now. Thanks a lot for your help.

Best,
Pierre
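To make the idea of using confidence as a weight more concrete, here is a minimal sketch of a confidence-weighted running average for one voxel of a truncated signed distance field (the standard weighted-average update used in volumetric fusion). It assumes per-pixel confidence levels 0, 1 and 2 for low, medium and high, as ARKit reports them. The weight table, the truncation distance and the function name are hypothetical choices, not something RTAB-Map or Scaniverse is known to use.

```python
# Hypothetical mapping from confidence level (0=low, 1=medium, 2=high)
# to a fusion weight. The values are illustrative only.
CONFIDENCE_WEIGHT = {0: 0.1, 1: 0.5, 2: 1.0}


def fuse_sample(voxel, sdf, confidence, trunc=0.05):
    """Fuse one signed-distance observation into a voxel.

    voxel      : (tsdf, weight) currently stored in the voxel
    sdf        : signed distance from the voxel to the observed surface (m)
    confidence : confidence level of the depth pixel (0, 1 or 2)
    trunc      : truncation distance in metres (assumed value)
    Returns the updated (tsdf, weight).
    """
    d = max(-1.0, min(1.0, sdf / trunc))  # truncate and normalise
    w = CONFIDENCE_WEIGHT[confidence]
    tsdf, weight = voxel
    new_weight = weight + w
    # Weighted running average: low-confidence samples move the value less.
    return ((tsdf * weight + d * w) / new_weight, new_weight)
```

For example, a voxel that already holds one high-confidence observation at the surface (`tsdf = 0.0`) moves to about 0.09 after a conflicting low-confidence sample, but to 0.5 after a conflicting high-confidence one. Low-confidence depth can therefore fill areas that nothing else covers without overriding reliable measurements.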


Administrator


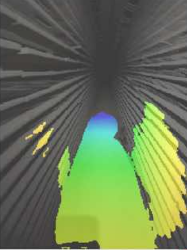

Hi Pierre,

Thank you for sharing some comparison data. I'll try to test Scaniverse more to see what is going on. Personally, I still do not trust the low confidence points that much; most of the time they are highly interpolated and create "fake" surfaces that don't exist. However, in some environments, the interpolated points may be "good enough" to reproduce the overall structure while not being mm accurate. So I understand that it could be useful to record that data. In particular for loop closure detection, it is actually better to record with low confidence in order to use features farther than the lidar range.

As stated in that feature request, recording all points along with their confidence would require significant design. Note that they could also be using something other than low confidence points, like the VIO tracked features themselves, which should be more accurate than the low confidence depth. RTAB-Map already records that data (it should already be in your databases), so we could combine the tracked-features point cloud with the projected depth points before mesh reconstruction. I can try to confirm this when testing Scaniverse.

Switching the confidence level within a scan does not sound too complicated to do, for example by adding a slider to the UI to change it while scanning. I created a feature request about that: https://github.com/introlab/rtabmap/issues/1394

Regards,
Mathieu
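One possible way to combine the two sources, sketched here under stated assumptions, is to keep every projected depth point and add tracked-feature points only where no depth point already occupies the same voxel. This is an illustration of the idea and not how RTAB-Map does or will do it; the function name `merge_clouds` and the 5 cm voxel size are hypothetical.

```python
import math


def merge_clouds(depth_points, feature_points, voxel=0.05):
    """Merge two clouds, using feature points only to fill gaps.

    depth_points   : list of (x, y, z) from projected depth images
    feature_points : list of (x, y, z) from tracked visual features
    voxel          : grid size in metres used to test occupancy
    Returns all depth points followed by the feature points that fall
    in voxels not already occupied.
    """
    def key(p):
        # Integer voxel index containing point p.
        return tuple(math.floor(c / voxel) for c in p)

    occupied = {key(p) for p in depth_points}
    merged = list(depth_points)
    for p in feature_points:
        k = key(p)
        if k not in occupied:
            occupied.add(k)  # also avoids stacking several features per voxel
            merged.append(p)
    return merged
```

In a high, narrow gallery this would let features seen on the ceiling contribute points where the high-confidence depth map is empty, while leaving well-measured areas untouched.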



Thanks a lot for your answer, Mathieu!

Yes, I noticed that the low confidence points are usually not very trustworthy. On the other hand, I have had good experience using the app with medium confidence. For the specific environments I'm scanning, I would say that it feels a bit more like Scaniverse while you scan (in the speed at which it picks up the local scene), except that it can overlook some details.

I also made some use of this "laser scan", using it as a high confidence cloud with a higher prior for implicit surface reconstruction. Results were decent, but I'm not so sure that this cloud is really "high confidence": could the ARKit features be projected badly onto the scene because of a low-confidence depth? Or does ARKit assign a specific depth to them?

Thanks for creating that feature request :) I would definitely benefit from it, and I hope others would too. Using the app with medium confidence by default and occasionally switching to high for details sounds like a great workflow.

Cheers,
Pierre
