RTABMAP in Desktop Station doesn't display point clouds

|

Hello everyone!

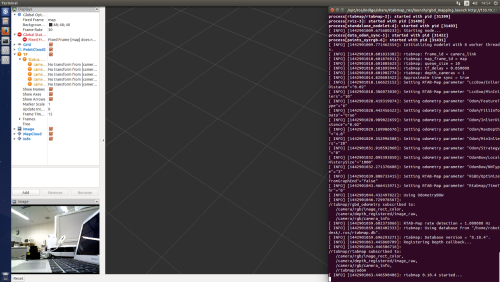

I'm using two computers for my project. The master is a laptop connected to my roomba, also, in this computer I have connected a Hokuyo sensor laser and Xtion PRO. I use the command $ export ROS_IP=XXX.XXX.XXX.XXX and $ export ROS_MASTER_URI=http://XXX.XXX.XXX.XXX:11311 for communication. After I introduce the export commands in both computers I type in my Master the next lines: $ roslaunch openni2_launch openni2.launch depth_registration:=true And then in my station computer: $ roslaunch rtabmap_ros rgbd_mapping.launch rviz:=true rtabmapviz:=false But I only got the screen for Rviz with the camera image in real time, but no point clouds, also it has an error that says that Fixed Frame [map] does not exist, and the TF shows warnings about map and the camera_depth_frame, camera_depth_optical_frame, camera_link, camera_rgb_frame and camera_rgb_optical_frame. If I run all the commands in the same computer it works without any problem.

|

|

Administrator

|

Hi,

The problem here is the high network bandwidth used. Is the laptop connected wirelessly? Openni2 publishes rgb and depth images at 30 Hz, if you subscribe to them without compression, there will be a lot of latency on the workstation, making difficult to synchronize input topics for the rtabmap or visual odometry nodes. Some information about remote mapping issue are discussed here. I would suggest to use roomba's wheel odometry or to do visual odometry on the laptop. As in the remote mapping example, the rtabmap node can then subscribe to rgb-depth-laser-odometry throttled messages at lower rate to save bandwidth. The /map frame is published only when rtabmap is mapping, which is not the case in your screenshot. The rtabmap's callback is not called because of the topics synchronization is poor (or not all input topics are received). cheers, Mathieu |

|

Thank you for your response Mathieu,

I'm learning about ROS and some packages and configurations (beginner level), so I want to ask for some tutorials to solve the issues I have. First of all I'm using wireless for the laptop, the purpose of the robot is self navigation, so it has to be able to move in a indoor place by itself, also it has to do 2D and 3D mapping, create the map of the environment and after that, it has to make autonomous navigation and obstacle avoidance. For 2D mapping I'm using hector_mapping and I think I also have to do some configurations for using it for odometry (a little difficult for me). Do you know where I can find information for change the parameters that you mentioned about Openni2's depth and rgb images and compress them? How can solve the problem with the callback and synchronization? Thank you in advance, Best Regards, Paola |

|

Administrator

|

Hi,

I suggest that you do the all the mapping/navigation stuff on the robot (i.e., the laptop), to avoid network delays. To monitor what is going on the the robot from a workstation over the network, you can open RVIZ on that computer (while setting ROS_MASTER_URI to laptop IP) and use "rviz/Map" display to show 2D map or "rtabmap_ros/MapCloud" display to show 3D map. Note that rtabmap can do 2D and 3D mapping. I would refer to this page to know which configuration would fit the best for your application. For the autonomous navigation part, see move_base package. For image compression, it is done through image_transport. I suggest to use image_transport/republish node for convenience on a remote computer. Example in a launch file: <node name="republish_rgb" type="republish" pkg="image_transport" args="theora in:=/camera/rgb/image_rect_color raw out:=/camera/rgb/image_rect_color_remote" /> <node name="republish_depth" type="republish" pkg="image_transport" args="compressedDepth in:=/camera/depth_registered/image_raw raw out:=/camera/depth_registered/image_raw_remote" /> cheers |

|

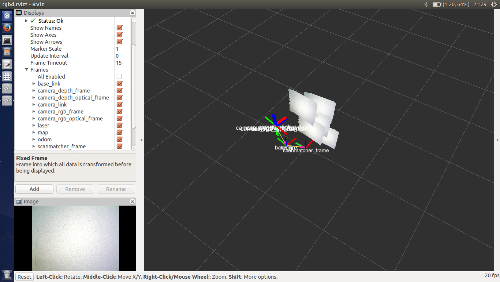

This post was updated on .

Hi Mathieu

I've been trying to use rgbd_mapping.launch and a demo for kinect and laser, I don't know how to setup odometry for roomba wheels, so I've decided to use the setup mentioned in the tutorial Set Up In Your Robot 3.3 Kinect+2D laser and using hector_mapping, but there are many configurations and parameters that I don't know if I have to change, and also I think I have problems with the TF, because when rgbd_mapping.launch is working many point clouds become to appear everywhere. This is mi launch file for devices: <launch> <!--Initializes all devices--> <!--Roomba--> <node pkg="roomba_500_series" type="roomba500_light_node" name="roomba500" output="screen"/> <!--Laser--> <!--node pkg="urg_node" type="urg_node" name="mapping_urg"/--> <node pkg="hokuyo_node" type="hokuyo_node" name="hokuyo_scan"/> <!--param name="serial_port" value="/dev/ttyACM0"/> </node--> <!--Camera--> <include file="$(find ros_cam_node)/launch/ros_cam_node.launch"/> <!--TF--> <node pkg="tf" type="static_transform_publisher" name="base_to_laser" args="0.14 0.0 0.12 0 0 0 base_link laser 100"/> <node pkg="tf" type="static_transform_publisher" name="base_to_camera" args="-0.15 0.0 0.59 0 0 0 base_link camera_link 100"/> <node pkg="roomba_tf" type="test_listener_tf" name="laser_tf" output="screen"/> <node pkg="roomba_tf" type="camtest_listener_tf" name="cam_tf" output="screen"/> <!--AMCL--> <node pkg="amcl" type="amcl" name="amcl"/> </launch> And this is for 2D mapping <launch>

<arg name="base_frame" default="base_link"/>

<arg name="odom_frame" default="base_link"/>

<arg name="pub_map_odom_transform" default="true"/>

<arg name="scan_subscriber_queue_size" default="5"/>

<arg name="scan_topic" default="scan"/>

<arg name="map_size" default="2048"/>

<node pkg="hector_mapping" type="hector_mapping" name="hector_mapping" output="screen">

<!-- Frame names -->

<param name="map_frame" value="map" />

<param name="base_frame" value="$(arg base_frame)" />

<param name="odom_frame" value="$(arg odom_frame)" />

<!-- Tf use -->

<param name="pub_map_odom_transform" value="$(arg pub_map_odom_transform)"/>

</node>

<node pkg="tf" type="static_transform_publisher" name="map_nav_broadcaster" args="0 0 0 0 0 0 base_link laser 100"/>

<node pkg="rviz" type="rviz" name="map_rviz"/>

</launch>How can I use this together with rtabmap and what params I have to change in the demo_hector_mapping.launch file or create a new launch file? Also I attach the image of rviz with the point clouds.

|

|

Administrator

|

Hi,

For a tutorial on how to generate odometry messages, see here. I've found another code related to Roomba here. Maybe your roomba launch already publishes odometry messages? You can use this to debug TF tree: $ rosrun tf view_frames $ evince frames.pdf I will suggest that you try to make work hector_mapping or rtabmap alone before mixing them. After that, if you want to mix hector_mapping with rtabmap on your specific robot, I would start with a fresh launch file. You can refer to demo_hector_mapping.launch for inspiration. To test rtabmap alone, here is an example of a minimal setup for rtabmap alone with your roomba (inspired from the Kinect + Odometry + 2D laser example): <node name="rtabmap" pkg="rtabmap_ros" type="rtabmap" output="screen" args="--delete_db_on_start"> <!-- Should be a fixed frame on the robot, generally base_link or base_footprint --> <param name="frame_id" type="string" value="base_footprint"/> <param name="subscribe_depth" type="bool" value="true"/> <param name="subscribe_laserScan" type="bool" value="true"/> <!-- Input topics, if any of them is not published, rtabmap won't work! --> <remap from="odom" to="/odom"/> <remap from="scan" to="/scan"/> <remap from="rgb/image" to="/camera/rgb/image_rect_color"/> <remap from="depth/image" to="/camera/depth_registered/image_raw"/> <remap from="rgb/camera_info" to="/camera/rgb/camera_info"/> <!-- Use laser scans to refine loop closure transformations --> <param name="LccIcp/Type" type="string" value="2"/> <!-- update the map only if the robot move --> <param name="RGBD/AngularUpdate" type="string" value="0.01"/> <param name="RGBD/LinearUpdate" type="string" value="0.01"/> </node> cheers |

«

Return to Official RTAB-Map Forum

|

1 view|%1 views

| Free forum by Nabble | Edit this page |