RTAB-Map navigation using an Xtion Pro Live only

Hi,

Just a little background on what I'm trying to do: I have an Xtion camera and an arbitrary robot (built from scratch using a robot chassis, servos, a microcontroller and a TK1 single-board computer). I'm trying to do SLAM using the rtabmap+kinect solution, and I can already see the mapping in action in rtabmapviz.

My questions are:

1) What is the best way to do navigation in this case? How do I get information like the camera's current location and the goal location, so that I can translate it into motor commands?
2) Can I still use localization mode on an arbitrary robot? Is there any difference in using rtabmap localization mode between an actual robot and an arbitrary robot?
3) What would your advice be for SLAM on an arbitrary robot? I have tried multiple SLAM solutions and rtabmap seems the most promising. Is there a general guideline for using rtabmap SLAM on an arbitrary robot?

Thank you so much in advance. I sincerely appreciate your help.

Regards,
CJ

Administrator

Hi,

Yes, rtabmap can be integrated on any robot. You should, however, make sure that TF is set up correctly. Follow this tutorial to get your robot ready for navigation. In that tutorial, rtabmap replaces the map_server and amcl modules. Your setup is similar to the TurtleBot; you can look at this rtabmap+TurtleBot tutorial for more examples. Localization mode can be activated in any configuration.

cheers,
Mathieu
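On the "where is the camera" part of question 1: once TF is set up, the camera pose in the map frame is the composition of the transforms along the chain, typically map → odom (published by rtabmap), odom → base_link (published by the odometry node) and base_link → camera_link (a static transform you provide for your mount). In ROS you would normally just look up map → camera_link through tf; the sketch below only illustrates the underlying math in 2D (x, y, yaw). The frame names follow the usual ROS REP 105 conventions and the numbers are made-up examples, not values from this setup.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Pose2D:
    """A planar rigid transform: translation (x, y) in metres, rotation yaw in radians."""
    x: float
    y: float
    yaw: float

    def compose(self, other: "Pose2D") -> "Pose2D":
        """Return self * other, i.e. `other` expressed in the parent frame of `self`."""
        c, s = math.cos(self.yaw), math.sin(self.yaw)
        yaw = self.yaw + other.yaw
        return Pose2D(
            x=self.x + c * other.x - s * other.y,
            y=self.y + s * other.x + c * other.y,
            # Wrap the summed angle back into [-pi, pi].
            yaw=math.atan2(math.sin(yaw), math.cos(yaw)),
        )


def camera_in_map(map_to_odom: Pose2D, odom_to_base: Pose2D, base_to_camera: Pose2D) -> Pose2D:
    """Chain map -> odom -> base_link -> camera_link (assumed REP 105 frame names)."""
    return map_to_odom.compose(odom_to_base).compose(base_to_camera)


if __name__ == "__main__":
    # Hypothetical values: rtabmap corrected 0.5 m of drift along x, the robot drove
    # 2 m and turned 90 degrees, and the camera is mounted 0.1 m ahead of base_link.
    pose = camera_in_map(
        Pose2D(0.5, 0.0, 0.0),
        Pose2D(2.0, 0.0, math.pi / 2),
        Pose2D(0.1, 0.0, 0.0),
    )
    print(f"camera at x={pose.x:.2f} y={pose.y:.2f} yaw={math.degrees(pose.yaw):.1f} deg")
```

The order matters: each transform is applied in the frame produced by the previous one, which is why the camera offset ends up along +y once the robot has turned 90 degrees.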

Thanks for the information.

I have a follow-up question regarding this thread: http://official-rtab-map-forum.206.s1.nabble.com/Depth-data-from-a-single-frame-td2247.html#a2253

Where is the code that computes and displays the depth of an extracted feature in the current frame, as shown in the graphics view? I need this because I want to write some code that finds the distance from the camera to the closest extracted feature, and instructs the robot to move forward if that distance is below a certain threshold.

Thanks,
Chongjin

Administrator

Hi,

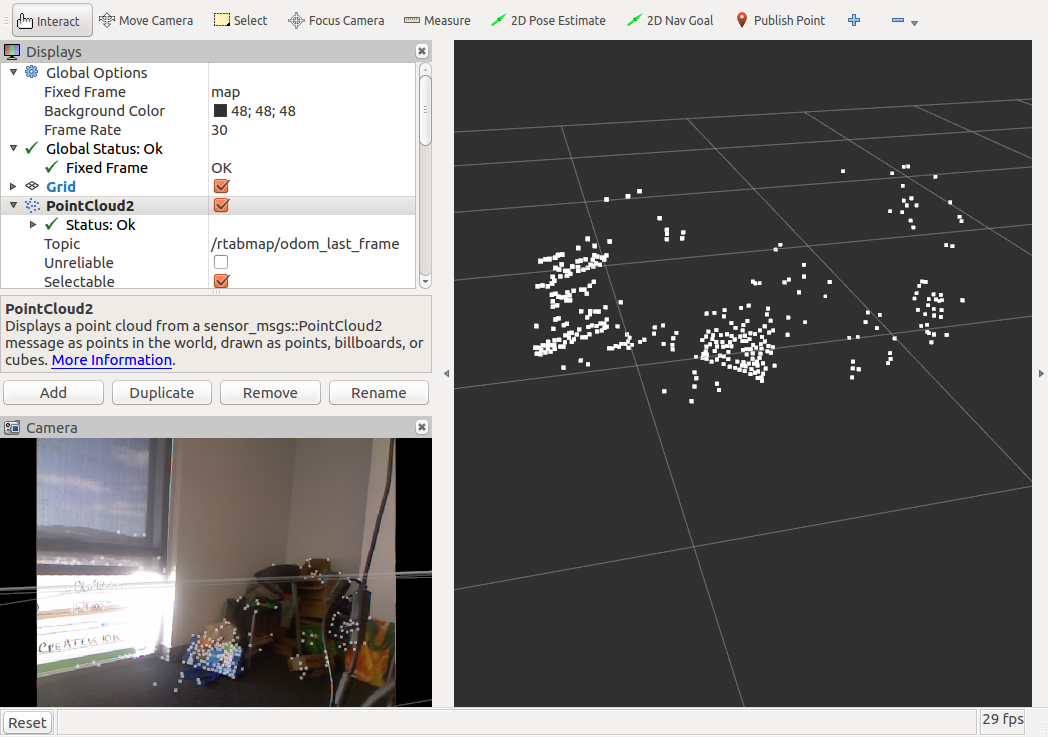

You can use the /rtabmap/odom_last_frame topic published by rgbd_odometry for convenience (it is a PointCloud2 message containing the features). If you need details on the detected features (keypoint response, size, xy pixel position in the image, etc.), you can also subscribe to /rtabmap/odom_info, which is an rtabmap_ros/OdomInfo message (wordsValues correspond to wordsKeys).

cheers,
Mathieu
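A minimal sketch of the "closest feature" check from the question, assuming the x, y, z fields of /rtabmap/odom_last_frame have already been read into tuples (in rospy, `sensor_msgs.point_cloud2.read_points(msg, field_names=("x", "y", "z"), skip_nans=True)` yields such tuples). Which frame the cloud is expressed in is an assumption here: check the message's `header.frame_id`, and if it is not the camera frame, pass the camera position in that same frame as `camera_xyz`. The function names and the threshold are hypothetical; what the robot does with the result is up to your controller.

```python
import math
from typing import Iterable, Optional, Tuple

Point = Tuple[float, float, float]


def closest_feature_distance(
    points: Iterable[Point],
    camera_xyz: Point = (0.0, 0.0, 0.0),
) -> Optional[float]:
    """Return the Euclidean distance from camera_xyz to the nearest finite point, or None."""
    best: Optional[float] = None
    for p in points:
        if not all(math.isfinite(v) for v in p):
            continue  # skip NaN/inf points (features without valid depth)
        d = math.dist(p, camera_xyz)
        if best is None or d < best:
            best = d
    return best


def closest_feature_within(
    points: Iterable[Point],
    threshold_m: float,
    camera_xyz: Point = (0.0, 0.0, 0.0),
) -> bool:
    """True if at least one valid feature lies closer than threshold_m (hypothetical helper)."""
    d = closest_feature_distance(points, camera_xyz)
    return d is not None and d < threshold_m


if __name__ == "__main__":
    # Made-up feature positions in metres; the NaN one is ignored.
    features = [(0.2, 0.1, 1.5), (float("nan"), 0.0, 0.4), (-0.3, 0.0, 0.8)]
    print(closest_feature_distance(features))
    print(closest_feature_within(features, threshold_m=1.0))
```

Visual features are sparse, so a textureless wall right in front of the camera may produce no features at all; the `None` return keeps that case distinct from "everything is far away".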

Thanks for the information. I have some follow-up questions on the navigation stack.

Does that mean that I don't need a move_base launch file if I am using the rtabmap.launch file? Also, for the odometry sensor, if I am only using visual odometry, do I have to explicitly launch the rgbd_odometry node in my configuration file, or does rtabmap.launch already take care of that? From my understanding, all I need to launch now is openni2 (sensor), rtabmap (odometry, map_server, amcl), and a base_controller that listens to odometry messages. Please enlighten me. Thanks for your patience.

CJ

Administrator

Hi,

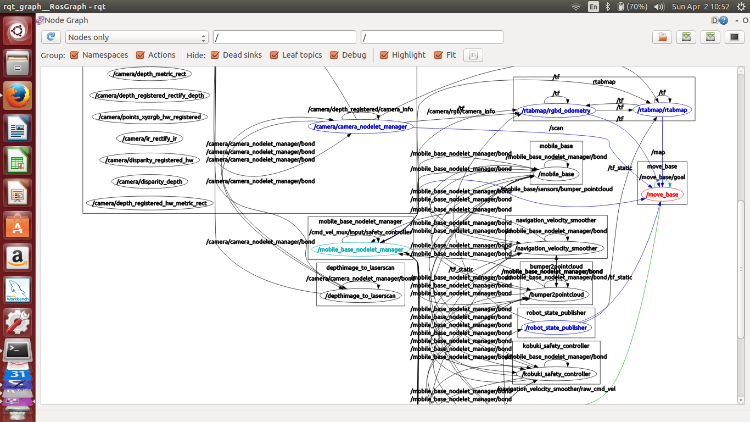

The rtabmap node provides the map and does localization on its own, so it doesn't rely on map_server (which provides the map) or amcl (localization). move_base should still be started, as in the ROS navigation tutorials; it will connect to rtabmap's output map instead of map_server's. Look at the launch files used in the rtabmap TurtleBot tutorial for a good example of navigation integration. Following your example, you would launch: openni2 (sensor), rgbd_odometry (odometry), rtabmap (map output for move_base), move_base (local costmap, global costmap, local planner, global planner), and a base_controller (subscribing to cmd_vel from move_base).

cheers,
Mathieu
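On the robot side, the base_controller's job is to turn each cmd_vel message (a geometry_msgs/Twist; a planar base only uses `linear.x` and `angular.z`) into motor commands. A minimal sketch, assuming a differential-drive chassis; the parameter names and values are hypothetical, and how the wheel speeds are sent to the servos through the microcontroller is left out. In rospy, you would call this function from a callback subscribed to cmd_vel.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class DiffDriveParams:
    wheel_separation_m: float  # distance between left and right wheel contact points
    wheel_radius_m: float
    max_wheel_rad_s: float     # actuator limit, e.g. of continuous-rotation servos


def twist_to_wheel_speeds(linear_x: float, angular_z: float, p: DiffDriveParams) -> Tuple[float, float]:
    """Convert a cmd_vel-style command (m/s, rad/s) to (left, right) wheel speeds in rad/s.

    Differential-drive kinematics: v_left = v - w*L/2, v_right = v + w*L/2.
    If either wheel would exceed the limit, both are scaled down by the same
    factor so the commanded turning radius is preserved.
    """
    half = p.wheel_separation_m / 2.0
    left = (linear_x - angular_z * half) / p.wheel_radius_m
    right = (linear_x + angular_z * half) / p.wheel_radius_m
    peak = max(abs(left), abs(right))
    if peak > p.max_wheel_rad_s:
        scale = p.max_wheel_rad_s / peak
        left, right = left * scale, right * scale
    return left, right


if __name__ == "__main__":
    # Hypothetical robot: 20 cm track, 5 cm wheels, servos limited to 10 rad/s.
    params = DiffDriveParams(wheel_separation_m=0.2, wheel_radius_m=0.05, max_wheel_rad_s=10.0)
    # 0.12 m/s forward while turning clockwise (negative angular.z) at 0.4 rad/s.
    print(twist_to_wheel_speeds(0.12, -0.4, params))
```

Scaling both wheels together, rather than clipping each one independently, keeps the robot on the arc the planner asked for, just more slowly.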

Hi,

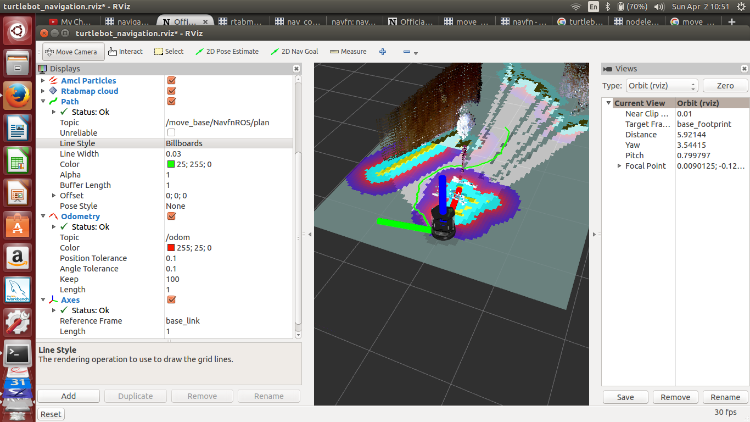

One follow-up question. When I launch the TurtleBot simulation using the following commands:

```
$ roslaunch turtlebot_bringup minimal.launch
$ roslaunch rtabmap_ros demo_turtlebot_mapping.launch args:="--delete_db_on_start" rgbd_odometry:=true
$ roslaunch rtabmap_ros demo_turtlebot_rviz.launch
```

I noticed that move_base is not publishing the cmd_vel topic. How can I get it to publish cmd_vel? Also, I noticed that the NavfnROS/plan topic publishes a decent path to the destination, as shown in the image. Do you think it's wise/efficient to write something that subscribes to NavfnROS/plan and derives the navigation commands from it? If so, could you point me to the right approach?

Thanks,
CJ

Administrator

Hi,

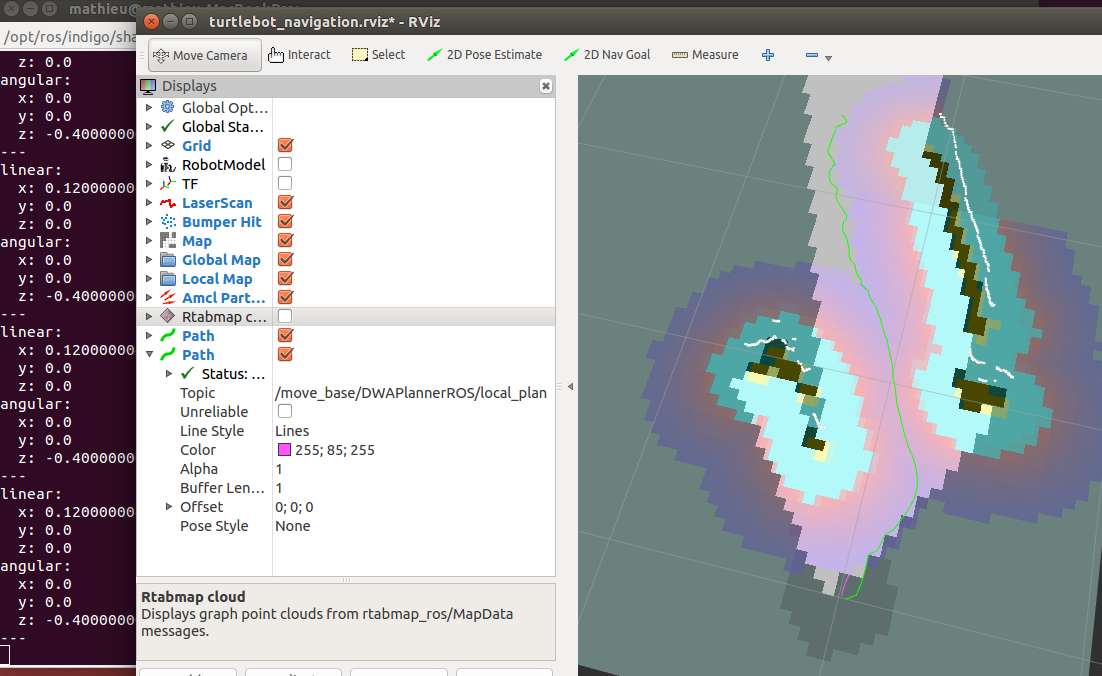

Look at the end of section 3 of the tutorial. On TurtleBot, the cmd_vel output topic is named /mobile_base/commands/velocity:

```
$ rostopic echo /mobile_base/commands/velocity
linear:
  x: 0.120000004768
  y: 0.0
  z: 0.0
angular:
  x: 0.0
  y: 0.0
  z: -0.40000000596
---
linear:
  x: 0.120000004768
  y: 0.0
  z: 0.0
angular:
  x: 0.0
  y: 0.0
  z: -0.40000000596
...
```

The small purple line represents the local plan (which would match the output velocities).
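On the NavfnROS/plan idea: move_base's local planner already turns the global plan into velocity commands while also avoiding obstacles in the local costmap, so using its cmd_vel output is usually the simpler route. If you still wanted to follow the global plan yourself, one common technique is pure pursuit, which is a different method from move_base's default local planner. A minimal sketch, assuming the plan's poses have been reduced to (x, y) points in the same frame as the robot pose, and that the plan starts near the robot's current position; the lookahead, speed and tolerance values are hypothetical, and there is no obstacle avoidance.

```python
import math
from typing import Sequence, Tuple

XY = Tuple[float, float]


def pure_pursuit_step(
    path: Sequence[XY],
    robot_xy: XY,
    robot_yaw: float,
    lookahead_m: float = 0.5,
    speed_m_s: float = 0.12,
    goal_tolerance_m: float = 0.1,
) -> Tuple[float, float]:
    """Return (linear_x, angular_z) that steers the robot toward the path.

    Picks the first path point at least `lookahead_m` from the robot (or the final
    point if none is that far) and drives along the circular arc that reaches it:
    curvature = 2 * y_local / d^2, where (x_local, y_local) is the target in the
    robot frame and d its distance.
    """
    if lookahead_m <= 0.0:
        raise ValueError("lookahead_m must be positive")
    if not path:
        return 0.0, 0.0
    rx, ry = robot_xy
    goal = path[-1]
    if math.hypot(goal[0] - rx, goal[1] - ry) < goal_tolerance_m:
        return 0.0, 0.0  # close enough to the final point: stop
    target = goal
    for px, py in path:
        if math.hypot(px - rx, py - ry) >= lookahead_m:
            target = (px, py)
            break
    # Express the target point in the robot frame (x forward, y left).
    dx, dy = target[0] - rx, target[1] - ry
    c, s = math.cos(robot_yaw), math.sin(robot_yaw)
    x_local = c * dx + s * dy
    y_local = -s * dx + c * dy
    curvature = 2.0 * y_local / (x_local ** 2 + y_local ** 2)
    return speed_m_s, speed_m_s * curvature


if __name__ == "__main__":
    # Made-up plan curving to the left of a robot at the origin facing +x.
    plan = [(0.0, 0.0), (0.25, 0.05), (0.5, 0.5), (1.0, 1.0)]
    print(pure_pursuit_step(plan, (0.0, 0.0), 0.0))
```

The returned pair maps directly onto a Twist's `linear.x` and `angular.z`, so it could be published on the same velocity topic move_base would otherwise use.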
