RTABMAP standalone for localization in xyz (Kinect Xbox 360)

12

12

Re: RTABMAP standalone for localization in xyz (Kinect Xbox 360)

#include <rtabmap/core/RegistrationVis.h>

#include <rtabmap/core/CameraRGBD.h>

#include <rtabmap/core/util3d_transforms.h>

#include <iostream>

using namespace rtabmap;

int main(int argc, char * argv[])

{

//CameraFreenect camera; // Assume Kinect for XBOX 360

//camera.setImageRate(1); // capture at 1 Hz

CameraRGBDImages camera("/home/rahul/uimages/rgb/", "/home/rahul/uimages/depth/");

//Transform opticalRotation(0,0,1,0, -1,0,0,0, 0,-1,0,0);

//camera.setLocalTransform(opticalRotation);

camera.init(".", "calibration");

/*if(camera.init(".", "calibration")) // use "calibration.yaml" in the current directory

{

}*/

//Transform opticalRotation(0,0,1,0, -1,0,0,0, 0,-1,0,0);

//camera.setLocalTransform(opticalRotation); // from camera frame to rtabmap/ros frame

if(!camera.init())

{

printf("Failed to initialize the camera!\n");

return -1;

}

RegistrationVis registration;

SensorData frame = camera.takeImage();

SensorData previousFrame = frame;

int k = 1;

Transform pose = Transform::getIdentity();

while(frame.isValid())

{

Transform t = registration.computeTransformation(previousFrame, frame);

if(t.isNull())

{

printf("Could not compute motion\n");

}

else

{

pose *= t;

printf("%d Motion: %s\n", k, pose.prettyPrint().c_str());

// previousFrame = frame;

}

previousFrame = frame;

frame = camera.takeImage();

k++;

}

return 0;

}

I have changed my position of previous frame as otherwise once it is not able to compute motion it is never able to compute it. But still the poses differ a lot from the rgbd poses taken from rtabmap application. I have attached all the files.uposesrgbd.txt |

Re: RTABMAP standalone for localization in xyz (Kinect Xbox 360)

|

I was not able to upload the data directly so i am sharing the google drive link https://drive.google.com/file/d/0Bw8yiqrgUuZ9Q0g0UDdNUEFPV2M/view?usp=sharing

Thank you :) |

|

Administrator

|

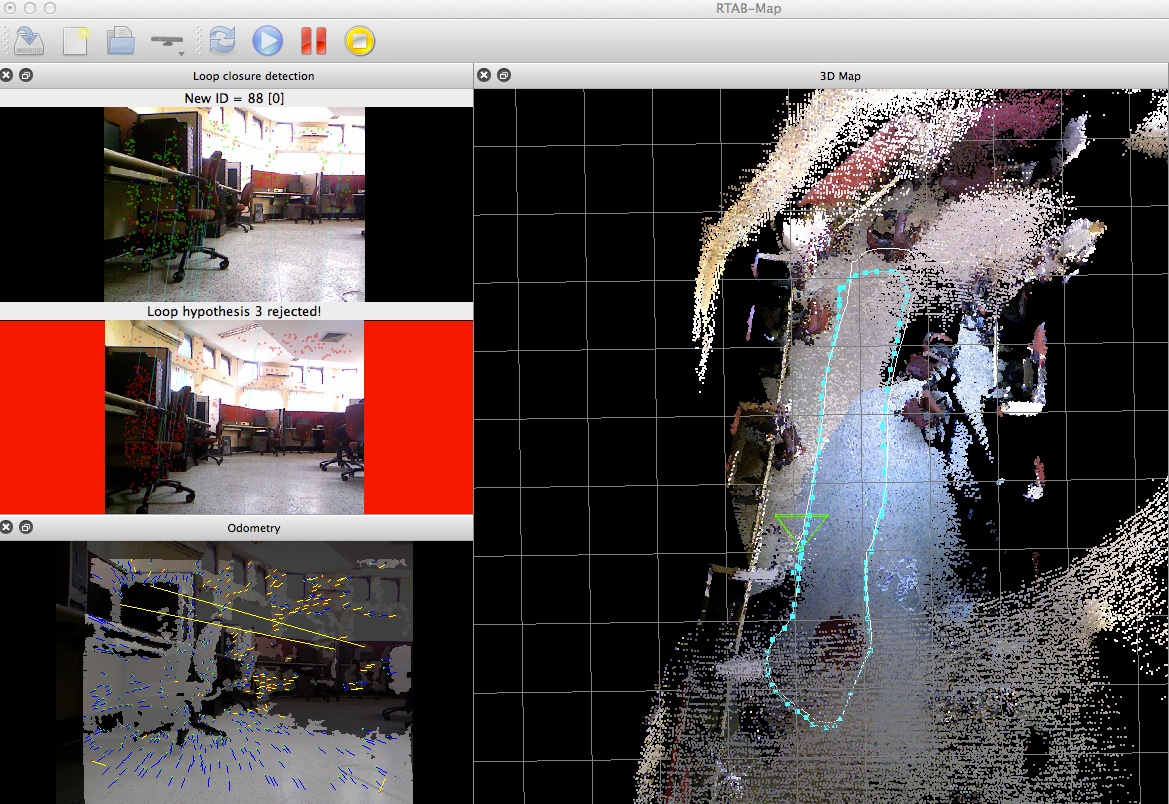

Thx for sharing some images. The inputs are correct, though the camera seems tilted, which makes the whole map tilting:

Here some modifications (EDIT call camera.init() only one time):

#include <rtabmap/core/RegistrationVis.h>

#include <rtabmap/core/CameraRGBD.h>

#include <rtabmap/core/util3d_transforms.h>

#include <rtabmap/utilite/ULogger.h>

#include <iostream>

using namespace rtabmap;

int main(int argc, char * argv[])

{

//ULogger::setType(ULogger::kTypeConsole);

//ULogger::setLevel(ULogger::kDebug);

CameraRGBDImages camera("rgb/", "depth/");

Transform opticalRotation(0,0,1,0, -1,0,0,0, 0,-1,0,0);

camera.setLocalTransform(opticalRotation);

if(!camera.init(".", "calibration"))

{

printf("Failed to initialize the camera!\n");

return -1;

}

ParametersMap parameters;

parameters.insert(ParametersPair(Parameters::kVisMaxFeatures(), "2000"));

parameters.insert(ParametersPair(Parameters::kGFTTQualityLevel(), "0.0001"));

RegistrationVis registration(parameters);

SensorData frame = camera.takeImage();

SensorData previousFrame = frame;

int k = 1;

Transform pose = Transform::getIdentity();

while(frame.isValid())

{

Transform t = registration.computeTransformation(previousFrame, frame, Transform());

if(t.isNull())

{

printf("Could not compute motion\n");

}

else

{

pose *= t;

printf("%d Motion: %s\n", k, pose.prettyPrint().c_str());

}

previousFrame = frame;

frame = camera.takeImage();

k++;

}

return 0;

}

Output: ./app 1 Motion: xyz=-0.000000,0.000000,-0.000000 rpy=-0.000000,-0.000000,-0.000000 2 Motion: xyz=0.096059,-0.041338,-0.021647 rpy=0.015822,0.041045,-0.124383 3 Motion: xyz=0.278359,-0.128642,-0.054722 rpy=0.010936,0.052426,-0.138549 4 Motion: xyz=0.451387,-0.151467,-0.089365 rpy=0.022727,0.049921,-0.106849 5 Motion: xyz=0.595555,-0.186856,-0.117298 rpy=0.023987,0.047945,-0.103965 6 Motion: xyz=0.706510,-0.207808,-0.140637 rpy=0.019156,0.049477,-0.125589 7 Motion: xyz=0.852631,-0.241526,-0.159657 rpy=0.012100,0.063451,-0.137917 8 Motion: xyz=1.117742,-0.288078,-0.212169 rpy=0.026494,0.062510,-0.041698 9 Motion: xyz=1.336713,-0.320899,-0.246170 rpy=0.024900,0.063917,-0.059680 10 Motion: xyz=1.552208,-0.357929,-0.258332 rpy=0.021405,0.073228,-0.061567 11 Motion: xyz=1.802232,-0.402473,-0.309128 rpy=0.020279,0.067973,-0.069699 12 Motion: xyz=2.000293,-0.444259,-0.331871 rpy=0.021971,0.067247,-0.053778 13 Motion: xyz=2.150059,-0.472710,-0.360049 rpy=0.028851,0.064985,-0.028782 14 Motion: xyz=2.400941,-0.485272,-0.396146 rpy=0.030966,0.067258,0.017067 15 Motion: xyz=2.683805,-0.511647,-0.421695 rpy=0.024163,0.076484,0.007113 16 Motion: xyz=2.883028,-0.552473,-0.454001 rpy=0.016965,0.076369,-0.040936 17 Motion: xyz=3.115946,-0.621864,-0.483639 rpy=0.014258,0.083660,-0.102753 18 Motion: xyz=3.341121,-0.681825,-0.518477 rpy=0.014601,0.084552,-0.143972 19 Motion: xyz=3.506264,-0.727775,-0.538368 rpy=0.010057,0.087075,-0.187762 20 Motion: xyz=3.633256,-0.775784,-0.559337 rpy=0.008609,0.089380,-0.209280 21 Motion: xyz=3.767669,-0.828677,-0.585466 rpy=0.017301,0.086890,-0.199669 22 Motion: xyz=3.962567,-0.859250,-0.608509 rpy=0.019417,0.093273,-0.138847 23 Motion: xyz=4.134307,-0.898185,-0.634246 rpy=0.035104,0.090683,-0.086766 24 Motion: xyz=4.241316,-0.899724,-0.651128 rpy=0.045254,0.086378,-0.011518 25 Motion: xyz=4.405526,-0.914692,-0.666203 rpy=0.042605,0.092379,-0.031175 26 Motion: xyz=4.424380,-0.931118,-0.666824 rpy=0.021557,0.097925,-0.147743 27 Motion: xyz=4.425740,-0.965021,-0.682306 rpy=0.018860,0.093194,-0.300056 28 Motion: xyz=4.538544,-1.076656,-0.690224 rpy=0.004845,0.103154,-0.522600 29 Motion: xyz=4.589847,-1.180597,-0.694345 rpy=-0.015647,0.106994,-0.685529 30 Motion: xyz=4.633134,-1.342991,-0.714623 rpy=-0.039513,0.096624,-0.939259 31 Motion: xyz=4.628997,-1.511508,-0.739517 rpy=-0.053218,0.086334,-1.163164 32 Motion: xyz=4.584663,-1.751958,-0.737384 rpy=-0.080232,0.077766,-1.493525 33 Motion: xyz=4.540266,-1.852043,-0.740272 rpy=-0.094258,0.063538,-1.693316 34 Motion: xyz=4.459736,-1.916675,-0.735668 rpy=-0.111722,0.044415,-1.903450 35 Motion: xyz=4.263023,-1.966075,-0.714240 rpy=-0.125430,-0.017022,-2.437568 36 Motion: xyz=4.171748,-1.970275,-0.712977 rpy=-0.117732,-0.042098,-2.614017 37 Motion: xyz=4.102336,-1.973578,-0.707408 rpy=-0.113209,-0.053249,-2.729317 38 Motion: xyz=4.044336,-1.937765,-0.701978 rpy=-0.106198,-0.073335,-2.899623 39 Motion: xyz=3.920281,-1.892625,-0.698982 rpy=-0.091354,-0.104029,-3.136053 40 Motion: xyz=3.690588,-1.821474,-0.675681 rpy=-0.093893,-0.109289,3.094732 41 Motion: xyz=3.425051,-1.786948,-0.657175 rpy=-0.084142,-0.113617,3.014163 42 Motion: xyz=3.200768,-1.734344,-0.625997 rpy=-0.084285,-0.112042,3.028543 43 Motion: xyz=2.947685,-1.649295,-0.602453 rpy=-0.086359,-0.110387,3.049866 44 Motion: xyz=2.674697,-1.566258,-0.586175 rpy=-0.089231,-0.116047,3.068491 45 Motion: xyz=2.365971,-1.518929,-0.557059 rpy=-0.086595,-0.120815,3.045329 46 Motion: xyz=2.107377,-1.488421,-0.547021 rpy=-0.094954,-0.110605,3.073463 47 Motion: xyz=1.771824,-1.470666,-0.510161 rpy=-0.093333,-0.106589,3.092340 48 Motion: xyz=1.377862,-1.464180,-0.472537 rpy=-0.087087,-0.112380,3.065004 49 Motion: xyz=1.021443,-1.417066,-0.415038 rpy=-0.079800,-0.117036,3.017883 50 Motion: xyz=0.907611,-1.402418,-0.401775 rpy=-0.077766,-0.120695,3.000803 51 Motion: xyz=0.757379,-1.375308,-0.374572 rpy=-0.076179,-0.119200,2.992942 52 Motion: xyz=0.689114,-1.352059,-0.258670 rpy=-0.066830,-0.146225,3.115226 53 Motion: xyz=0.451515,-1.278703,-0.229703 rpy=-0.099599,-0.174860,3.133827 54 Motion: xyz=0.131641,-1.196302,-0.217807 rpy=-0.088943,-0.153085,3.082522 55 Motion: xyz=0.017517,-1.122849,-0.272969 rpy=-0.072086,-0.061530,-3.101448 56 Motion: xyz=-0.085362,-1.100788,-0.267441 rpy=-0.093622,-0.066149,3.124704 57 Motion: xyz=-0.222032,-1.099719,-0.248564 rpy=-0.084748,-0.055183,-3.136980 58 Motion: xyz=-0.333326,-1.085781,-0.244746 rpy=-0.087185,-0.059659,-3.085541 59 Motion: xyz=-0.497293,-1.089485,-0.224713 rpy=-0.074582,-0.067276,3.123388 60 Motion: xyz=-0.612280,-1.143493,-0.221751 rpy=-0.074933,-0.065411,3.141501 61 Motion: xyz=-0.750184,-1.203259,-0.242996 rpy=-0.077092,-0.072257,-3.126212 62 Motion: xyz=-0.923267,-1.178586,-0.219963 rpy=-0.080052,-0.062611,-3.061483 63 Motion: xyz=-1.097892,-1.211402,-0.246906 rpy=-0.077507,-0.069383,-3.066699 64 Motion: xyz=-1.414049,-1.204854,-0.222710 rpy=-0.059959,-0.082606,3.029355 65 Motion: xyz=-1.755561,-1.111263,-0.201528 rpy=-0.058407,-0.096716,2.963845 66 Motion: xyz=-2.041065,-0.927291,-0.157231 rpy=-0.052476,-0.098531,2.915685 67 Motion: xyz=-2.389010,-0.781366,-0.066825 rpy=-0.024539,-0.085022,2.762751 68 Motion: xyz=-2.510045,-0.719000,-0.062909 rpy=-0.024608,-0.088645,2.734644 69 Motion: xyz=-2.502421,-0.691634,-0.058034 rpy=-0.007639,-0.087550,2.574683 70 Motion: xyz=-2.531069,-0.578221,-0.040782 rpy=0.042402,-0.082216,2.249119 71 Motion: xyz=-2.533519,-0.530021,-0.043176 rpy=0.052937,-0.078749,2.130080 72 Motion: xyz=-2.485994,-0.368159,-0.023304 rpy=0.088683,-0.048460,1.780049 73 Motion: xyz=-2.394643,-0.265200,-0.052038 rpy=0.093074,-0.044136,1.584650 74 Motion: xyz=-2.310431,-0.125172,-0.061045 rpy=0.105625,-0.033043,1.413940 75 Motion: xyz=-2.211387,0.013865,-0.056205 rpy=0.114431,-0.008362,1.251628 76 Motion: xyz=-2.145555,0.093966,-0.054600 rpy=0.114249,0.004937,1.179260 77 Motion: xyz=-2.030848,0.192291,-0.069386 rpy=0.113402,0.015595,1.030139 78 Motion: xyz=-1.926394,0.265144,-0.079011 rpy=0.110677,0.026915,0.907220 79 Motion: xyz=-1.782592,0.326718,-0.081548 rpy=0.106432,0.056997,0.682670 80 Motion: xyz=-1.732408,0.321083,-0.087660 rpy=0.103864,0.081336,0.450130 81 Motion: xyz=-1.632886,0.358122,-0.101295 rpy=0.099525,0.081531,0.413110 82 Motion: xyz=-1.491041,0.350980,-0.119422 rpy=0.097138,0.088882,0.321774 83 Motion: xyz=-1.304873,0.266462,-0.147837 rpy=0.073848,0.108097,0.105418 84 Motion: xyz=-1.139552,0.107630,-0.190501 rpy=0.057086,0.117578,-0.035892 85 Motion: xyz=-0.963399,0.018552,-0.218584 rpy=0.043514,0.118230,-0.060713 86 Motion: xyz=-0.789719,-0.004511,-0.236432 rpy=0.042893,0.116601,0.011953 87 Motion: xyz=-0.703167,-0.013234,-0.242476 rpy=0.043792,0.117537,0.015745 88 Motion: xyz=-0.578075,-0.025921,-0.255993 rpy=0.045874,0.116701,0.022209 89 Motion: xyz=-0.507436,-0.031650,-0.264976 rpy=0.045827,0.114893,0.028139 90 Motion: xyz=-0.402011,-0.040877,-0.274266 rpy=0.045476,0.115988,0.038743 91 Motion: xyz=-0.265405,-0.045922,-0.290712 rpy=0.051396,0.116841,0.053817 92 Motion: xyz=-0.094995,-0.048198,-0.315468 rpy=0.060937,0.117777,0.078537 93 Motion: xyz=0.036817,-0.061311,-0.330150 rpy=0.062870,0.122325,0.063041 The poses matches the map above. Note that I set a null guess transform when calling the registration. Note also that without "ParametersMap", some transformations cannot be computed: ./app 1 Motion: xyz=-0.000000,-0.000000,0.000000 rpy=-0.000000,0.000000,0.000000 2 Motion: xyz=0.093835,-0.043521,-0.014649 rpy=0.016219,0.042720,-0.123604 3 Motion: xyz=0.278296,-0.130547,-0.057380 rpy=0.013485,0.052050,-0.138210 4 Motion: xyz=0.458152,-0.150802,-0.096051 rpy=0.024611,0.048676,-0.107369 5 Motion: xyz=0.607526,-0.187239,-0.121622 rpy=0.025820,0.047073,-0.104421 6 Motion: xyz=0.717482,-0.211763,-0.148789 rpy=0.021731,0.047861,-0.125243 7 Motion: xyz=0.864966,-0.247773,-0.165117 rpy=0.015303,0.062870,-0.137419 8 Motion: xyz=1.125768,-0.306659,-0.227506 rpy=0.033047,0.059175,-0.038731 9 Motion: xyz=1.342262,-0.339010,-0.265503 rpy=0.033217,0.059713,-0.056528 10 Motion: xyz=1.554240,-0.377817,-0.281039 rpy=0.030538,0.068116,-0.057604 11 Motion: xyz=1.791579,-0.424144,-0.340903 rpy=0.029842,0.061099,-0.063911 12 Motion: xyz=1.989575,-0.461871,-0.364379 rpy=0.030213,0.060132,-0.048799 13 Motion: xyz=2.141289,-0.481732,-0.380411 rpy=0.034027,0.061182,-0.025724 14 Motion: xyz=2.387484,-0.493657,-0.409577 rpy=0.036027,0.065288,0.019927 15 Motion: xyz=2.673488,-0.522375,-0.434666 rpy=0.031471,0.073722,0.010550 16 Motion: xyz=2.877146,-0.565637,-0.469583 rpy=0.025879,0.072878,-0.036817 17 Motion: xyz=3.112961,-0.633412,-0.502472 rpy=0.025164,0.079206,-0.098418 18 Motion: xyz=3.337329,-0.693424,-0.537651 rpy=0.027731,0.079807,-0.138571 19 Motion: xyz=3.502747,-0.738925,-0.563329 rpy=0.024140,0.080739,-0.182408 20 Motion: xyz=3.632988,-0.782474,-0.576391 rpy=0.021269,0.086761,-0.205746 21 Motion: xyz=3.768572,-0.832576,-0.599995 rpy=0.028889,0.085557,-0.197895 22 Motion: xyz=3.968936,-0.869622,-0.622194 rpy=0.031934,0.092430,-0.134583 23 Motion: xyz=4.143216,-0.900301,-0.643558 rpy=0.042645,0.091738,-0.087239 24 Motion: xyz=4.250652,-0.900981,-0.659110 rpy=0.053080,0.087973,-0.013683 25 Motion: xyz=4.413375,-0.916959,-0.681079 rpy=0.050052,0.090884,-0.032976 26 Motion: xyz=4.431487,-0.948970,-0.679705 rpy=0.032389,0.098131,-0.141502 27 Motion: xyz=4.445034,-0.967496,-0.681771 rpy=0.024481,0.102104,-0.303862 28 Motion: xyz=4.562570,-1.088627,-0.690147 rpy=0.009381,0.113178,-0.523854 29 Motion: xyz=4.614085,-1.192818,-0.699670 rpy=-0.016265,0.117261,-0.687841 Could not compute motion 31 Motion: xyz=4.663776,-1.360347,-0.734480 rpy=-0.039569,0.110420,-0.914331 32 Motion: xyz=4.684916,-1.594798,-0.755196 rpy=-0.083997,0.099045,-1.249427 33 Motion: xyz=4.685333,-1.704406,-0.768035 rpy=-0.106662,0.080635,-1.452670 34 Motion: xyz=4.619014,-1.787708,-0.761248 rpy=-0.125135,0.060072,-1.660322 Could not compute motion 36 Motion: xyz=4.552051,-1.852464,-0.774501 rpy=-0.130629,0.029798,-1.834309 37 Motion: xyz=4.509122,-1.901975,-0.770979 rpy=-0.135072,0.017902,-1.951441 38 Motion: xyz=4.434370,-1.914486,-0.767720 rpy=-0.137430,-0.006939,-2.116749 39 Motion: xyz=4.313663,-1.977502,-0.762757 rpy=-0.137524,-0.041458,-2.354386 Could not compute motion 41 Motion: xyz=4.100012,-2.141349,-0.755857 rpy=-0.133216,-0.048205,-2.432417 42 Motion: xyz=3.924580,-2.272524,-0.734004 rpy=-0.131795,-0.044663,-2.419754 43 Motion: xyz=3.672859,-2.418859,-0.760695 rpy=-0.131520,-0.050037,-2.397425 44 Motion: xyz=3.423733,-2.589204,-0.787492 rpy=-0.132814,-0.062261,-2.379974 45 Motion: xyz=3.193967,-2.770907,-0.779393 rpy=-0.131610,-0.068126,-2.402620 46 Motion: xyz=2.987067,-2.959003,-0.779883 rpy=-0.137778,-0.056922,-2.375507 47 Motion: xyz=2.753707,-3.182793,-0.754832 rpy=-0.136415,-0.049499,-2.355603 48 Motion: xyz=2.487159,-3.457180,-0.739534 rpy=-0.132223,-0.054979,-2.380369 49 Motion: xyz=2.280102,-3.693788,-0.735136 rpy=-0.129339,-0.064366,-2.432939 50 Motion: xyz=2.191157,-3.770051,-0.730865 rpy=-0.128933,-0.069392,-2.449812 51 Motion: xyz=2.076499,-3.862442,-0.710548 rpy=-0.128385,-0.067506,-2.458284 52 Motion: xyz=2.039201,-3.919051,-0.585311 rpy=-0.112749,-0.085911,-2.340548 53 Motion: xyz=1.777290,-4.052454,-0.555332 rpy=-0.144724,-0.113098,-2.314582 54 Motion: xyz=1.510162,-4.217020,-0.562039 rpy=-0.136093,-0.093365,-2.365023 Could not compute motion 56 Motion: xyz=1.410619,-4.282617,-0.541190 rpy=-0.153534,-0.098806,-2.420758 57 Motion: xyz=1.304674,-4.355351,-0.532488 rpy=-0.152151,-0.091489,-2.396867 58 Motion: xyz=1.208814,-4.403300,-0.501802 rpy=-0.154414,-0.087222,-2.342787 59 Motion: xyz=1.093679,-4.524405,-0.492817 rpy=-0.140905,-0.103080,-2.418694 60 Motion: xyz=0.999353,-4.633669,-0.459326 rpy=-0.142245,-0.095375,-2.391687 61 Motion: xyz=0.908260,-4.741258,-0.477357 rpy=-0.144497,-0.099850,-2.367750 62 Motion: xyz=0.749232,-4.822415,-0.444715 rpy=-0.149683,-0.084814,-2.298748 63 Motion: xyz=0.589574,-4.961695,-0.591278 rpy=-0.154309,-0.121520,-2.294576 64 Motion: xyz=0.228284,-5.131255,-0.827960 rpy=-0.135961,-0.220442,-2.451741 65 Motion: xyz=-0.093178,-5.291726,-0.786157 rpy=-0.129652,-0.247189,-2.509778 66 Motion: xyz=-0.403239,-5.384898,-0.714005 rpy=-0.118078,-0.258338,-2.564317 Could not compute motion 68 Motion: xyz=-0.520614,-5.462965,-0.713131 rpy=-0.110524,-0.273356,-2.596588 Could not compute motion Could not compute motion 71 Motion: xyz=-0.560832,-5.466260,-0.705357 rpy=-0.080527,-0.286855,-2.725792 72 Motion: xyz=-0.691870,-5.399815,-0.629957 rpy=0.037156,-0.276618,-3.095704 73 Motion: xyz=-0.777447,-5.306406,-0.627716 rpy=0.085387,-0.272828,2.979055 Could not compute motion Could not compute motion 76 Motion: xyz=-0.864662,-5.252893,-0.601736 rpy=0.108457,-0.263027,2.903584 Could not compute motion 78 Motion: xyz=-0.963237,-5.171313,-0.581905 rpy=0.147490,-0.252853,2.782036 Could not compute motion 80 Motion: xyz=-0.988098,-5.118247,-0.593786 rpy=0.220855,-0.222808,2.549279 81 Motion: xyz=-1.068257,-5.049090,-0.573083 rpy=0.231034,-0.217253,2.514055 82 Motion: xyz=-1.139112,-4.918522,-0.557319 rpy=0.252816,-0.194621,2.421437 Could not compute motion Could not compute motion 85 Motion: xyz=-1.218940,-4.734974,-0.544844 rpy=0.247042,-0.189093,2.398124 86 Motion: xyz=-1.339320,-4.607048,-0.509829 rpy=0.224948,-0.204391,2.470206 87 Motion: xyz=-1.402613,-4.544164,-0.484029 rpy=0.222996,-0.202645,2.474748 88 Motion: xyz=-1.491202,-4.448613,-0.461829 rpy=0.224237,-0.204537,2.482826 89 Motion: xyz=-1.543892,-4.398868,-0.445062 rpy=0.221544,-0.205832,2.489028 90 Motion: xyz=-1.623066,-4.321157,-0.424558 rpy=0.219160,-0.207633,2.500906 91 Motion: xyz=-1.726630,-4.232123,-0.399987 rpy=0.217572,-0.210533,2.516319 92 Motion: xyz=-1.859165,-4.123621,-0.367149 rpy=0.217740,-0.212510,2.540902 93 Motion: xyz=-1.952675,-4.035099,-0.339640 rpy=0.223592,-0.204784,2.525388 cheers, Mathieu |

Re: RTABMAP standalone for localization in xyz (Kinect Xbox 360)

|

This post was updated on .

I know i am bothering you a lot and i cannot express my thanks.

Can you answer my following queries: 1. How is the quality of a feature decided for these 2 lines parameters.insert(ParametersPair(Parameters::kVisMaxFeatures(), "2000")); parameters.insert(ParametersPair(Parameters::kGFTTQualityLevel(), "0.0001"));2. How the transformation between pixels correspond to movement of the robot?(like how to to project image coordinates to world and translation and rotation between them into the world coordinates) 3. I have a parallel visual place recognition algorithm running which will be using machine learning for predicting the pose.Can i apply a kalman filter between the two outputs? 4. How to write a ros node which publishes the depth and color images to a directory? 5. What is a null guess transform? 6. For the same code my system is giving a slightly different set of poses does the program depend on system configuration? 1 Motion: xyz=-0.000000,0.000000,-0.000000 rpy=-0.000000,-0.000000,-0.000000 2 Motion: xyz=0.094567,-0.039635,-0.021750 rpy=0.015348,0.040988,-0.124709 3 Motion: xyz=0.276223,-0.125610,-0.056799 rpy=0.011484,0.051641,-0.139223 4 Motion: xyz=0.449915,-0.145747,-0.091855 rpy=0.023380,0.048954,-0.108235 5 Motion: xyz=0.597031,-0.179449,-0.121761 rpy=0.023902,0.046507,-0.105871 6 Motion: xyz=0.712889,-0.207357,-0.146660 rpy=0.020396,0.047609,-0.125965 7 Motion: xyz=0.858302,-0.240838,-0.163101 rpy=0.013236,0.062303,-0.138359 8 Motion: xyz=1.125163,-0.289140,-0.213232 rpy=0.026985,0.061862,-0.042156 9 Motion: xyz=1.342943,-0.320010,-0.244187 rpy=0.024632,0.064283,-0.060728 10 Motion: xyz=1.554901,-0.361857,-0.258968 rpy=0.021726,0.072951,-0.061355 11 Motion: xyz=1.805471,-0.408930,-0.311055 rpy=0.020092,0.067443,-0.068733 12 Motion: xyz=2.002221,-0.450421,-0.332694 rpy=0.021336,0.066921,-0.052801 13 Motion: xyz=2.152773,-0.476476,-0.359784 rpy=0.028110,0.064995,-0.028356 14 Motion: xyz=2.403729,-0.489945,-0.391022 rpy=0.029160,0.068802,0.017379 15 Motion: xyz=2.687101,-0.518808,-0.416418 rpy=0.022507,0.078184,0.007968 16 Motion: xyz=2.886377,-0.559438,-0.448798 rpy=0.015049,0.078092,-0.040079 17 Motion: xyz=3.119622,-0.631030,-0.476324 rpy=0.012803,0.085778,-0.101208 18 Motion: xyz=3.343428,-0.689908,-0.512343 rpy=0.012875,0.086095,-0.142508 19 Motion: xyz=3.507787,-0.738178,-0.532946 rpy=0.008566,0.088307,-0.185299 20 Motion: xyz=3.634570,-0.782258,-0.553033 rpy=0.005550,0.091159,-0.208121 21 Motion: xyz=3.769480,-0.834550,-0.578773 rpy=0.013820,0.088996,-0.198818 22 Motion: xyz=3.963507,-0.862272,-0.602887 rpy=0.014824,0.094996,-0.138831 23 Motion: xyz=4.136519,-0.901263,-0.626288 rpy=0.026514,0.093997,-0.086410 24 Motion: xyz=4.243340,-0.902200,-0.645369 rpy=0.038330,0.088989,-0.011953 25 Motion: xyz=4.407354,-0.917301,-0.661834 rpy=0.035946,0.093957,-0.031783 26 Motion: xyz=4.427501,-0.934978,-0.661718 rpy=0.014213,0.099100,-0.147766 27 Motion: xyz=4.430011,-0.970667,-0.676190 rpy=0.012273,0.094028,-0.299622 28 Motion: xyz=4.544741,-1.078755,-0.690079 rpy=-0.004814,0.098798,-0.524771 29 Motion: xyz=4.592621,-1.180603,-0.698974 rpy=-0.025119,0.098195,-0.686650 30 Motion: xyz=4.635608,-1.344861,-0.714315 rpy=-0.047919,0.087284,-0.940405 31 Motion: xyz=4.626229,-1.510515,-0.738168 rpy=-0.057444,0.074965,-1.162767 32 Motion: xyz=4.575504,-1.749716,-0.737496 rpy=-0.081113,0.064056,-1.489948 33 Motion: xyz=4.531546,-1.850391,-0.741546 rpy=-0.093403,0.048766,-1.689447 34 Motion: xyz=4.449580,-1.916235,-0.735552 rpy=-0.106973,0.030548,-1.899068 35 Motion: xyz=4.240814,-1.958253,-0.709927 rpy=-0.115162,-0.024779,-2.427241 36 Motion: xyz=4.149100,-1.960975,-0.708945 rpy=-0.107103,-0.047910,-2.602525 37 Motion: xyz=4.078785,-1.965204,-0.700826 rpy=-0.101291,-0.056059,-2.717927 38 Motion: xyz=4.021066,-1.931695,-0.695629 rpy=-0.095530,-0.073673,-2.889308 39 Motion: xyz=3.898484,-1.886093,-0.697804 rpy=-0.079280,-0.104162,-3.125196 40 Motion: xyz=3.669677,-1.816881,-0.674717 rpy=-0.081396,-0.108737,3.105732 41 Motion: xyz=3.394392,-1.785281,-0.657008 rpy=-0.072606,-0.112197,3.024935 42 Motion: xyz=3.176237,-1.722829,-0.619595 rpy=-0.072279,-0.109198,3.040151 43 Motion: xyz=2.916579,-1.654518,-0.597510 rpy=-0.074091,-0.107877,3.058830 44 Motion: xyz=2.594076,-1.592900,-0.569439 rpy=-0.079027,-0.113362,3.073779 45 Motion: xyz=2.274418,-1.556119,-0.541483 rpy=-0.076209,-0.118176,3.049190 46 Motion: xyz=2.012665,-1.526656,-0.532471 rpy=-0.083965,-0.108493,3.077469 47 Motion: xyz=1.680278,-1.511958,-0.500032 rpy=-0.081271,-0.105392,3.096306 48 Motion: xyz=1.281849,-1.507207,-0.469266 rpy=-0.077182,-0.111771,3.069146 49 Motion: xyz=0.946780,-1.526729,-0.457583 rpy=-0.068748,-0.122229,3.011751 50 Motion: xyz=0.833157,-1.512142,-0.444206 rpy=-0.066625,-0.125816,2.994952 51 Motion: xyz=0.685911,-1.484201,-0.419735 rpy=-0.065758,-0.124288,2.987408 52 Motion: xyz=0.600114,-1.475899,-0.318554 rpy=-0.060507,-0.155404,3.105712 53 Motion: xyz=0.372894,-1.404108,-0.283748 rpy=-0.089450,-0.181819,3.123125 54 Motion: xyz=0.055515,-1.311231,-0.268791 rpy=-0.078277,-0.159223,3.075319 55 Motion: xyz=-0.047917,-1.247353,-0.315963 rpy=-0.060716,-0.066090,-3.108874 56 Motion: xyz=-0.154235,-1.225818,-0.307577 rpy=-0.080992,-0.069181,3.117300 57 Motion: xyz=-0.289315,-1.223378,-0.289731 rpy=-0.074000,-0.059520,3.138863 58 Motion: xyz=-0.400243,-1.213795,-0.282568 rpy=-0.076087,-0.063973,-3.093606 59 Motion: xyz=-0.570695,-1.213708,-0.258991 rpy=-0.059662,-0.069914,3.115623 60 Motion: xyz=-0.705373,-1.223144,-0.252233 rpy=-0.058428,-0.067564,-3.140104 61 Motion: xyz=-0.842482,-1.278891,-0.267796 rpy=-0.060460,-0.073788,-3.123787 62 Motion: xyz=-1.014715,-1.249598,-0.227656 rpy=-0.063569,-0.061237,-3.058153 63 Motion: xyz=-1.188965,-1.282659,-0.249589 rpy=-0.061453,-0.067121,-3.063144 64 Motion: xyz=-1.519954,-1.241400,-0.279969 rpy=-0.046254,-0.091748,3.040942 65 Motion: xyz=-1.859399,-1.146060,-0.266581 rpy=-0.043046,-0.107485,2.976997 66 Motion: xyz=-2.145198,-0.954926,-0.257309 rpy=-0.036888,-0.119282,2.931842 67 Motion: xyz=-2.500942,-0.813956,-0.162848 rpy=-0.005115,-0.105332,2.778253 68 Motion: xyz=-2.622015,-0.749648,-0.159939 rpy=-0.003413,-0.110200,2.751458 69 Motion: xyz=-2.610068,-0.719480,-0.153328 rpy=0.017458,-0.104843,2.592235 70 Motion: xyz=-2.639444,-0.602416,-0.136338 rpy=0.071247,-0.090734,2.266977 71 Motion: xyz=-2.640756,-0.556520,-0.137371 rpy=0.081972,-0.083168,2.147684 72 Motion: xyz=-2.588440,-0.392825,-0.115702 rpy=0.115032,-0.039592,1.801814 73 Motion: xyz=-2.497997,-0.289143,-0.145764 rpy=0.118540,-0.028370,1.608228 74 Motion: xyz=-2.420120,-0.147862,-0.159080 rpy=0.124427,-0.013241,1.431804 75 Motion: xyz=-2.326939,-0.006521,-0.156246 rpy=0.133256,0.016054,1.267372 76 Motion: xyz=-2.261998,0.076038,-0.160165 rpy=0.129676,0.028318,1.193399 77 Motion: xyz=-2.164385,0.183587,-0.178985 rpy=0.133109,0.039198,1.034214 78 Motion: xyz=-2.056496,0.254444,-0.191080 rpy=0.132771,0.052438,0.915238 79 Motion: xyz=-1.914543,0.316562,-0.197418 rpy=0.123366,0.089043,0.691068 80 Motion: xyz=-1.857053,0.306166,-0.210366 rpy=0.113693,0.112325,0.464141 81 Motion: xyz=-1.758212,0.343598,-0.224786 rpy=0.107909,0.114054,0.427976 82 Motion: xyz=-1.615641,0.337305,-0.248441 rpy=0.104490,0.121405,0.336600 83 Motion: xyz=-1.426022,0.248571,-0.287814 rpy=0.073455,0.140094,0.120724 84 Motion: xyz=-1.259295,0.093324,-0.333425 rpy=0.051702,0.150054,-0.021576 85 Motion: xyz=-1.082695,0.003364,-0.367969 rpy=0.037643,0.150196,-0.045839 86 Motion: xyz=-0.908900,-0.015400,-0.391281 rpy=0.038872,0.148918,0.026804 87 Motion: xyz=-0.821368,-0.023405,-0.399124 rpy=0.040382,0.150327,0.030933 88 Motion: xyz=-0.696187,-0.033903,-0.417123 rpy=0.042821,0.149540,0.037514 89 Motion: xyz=-0.625320,-0.036507,-0.428577 rpy=0.042466,0.147488,0.042721 90 Motion: xyz=-0.518787,-0.043019,-0.441278 rpy=0.041992,0.148687,0.053281 91 Motion: xyz=-0.383541,-0.044359,-0.460874 rpy=0.047964,0.150000,0.068088 92 Motion: xyz=-0.214269,-0.043820,-0.491175 rpy=0.058064,0.151140,0.092639 93 Motion: xyz=-0.084446,-0.054460,-0.510355 rpy=0.059359,0.155497,0.077108 Thank you :) |

|

Administrator

|

Hi,

1. 2000 is to get more points to matches per image. The GFTT quality level defines the lowest response of features to keep in comparison to feature with highest response. Example, if the highest response is 1000 and quality level is 0.01, then all features under response 10 are ignored. Setting it low makes detection of keypoints in images having texture with low contrast/edges. However, these features are generally not good to match, but in case of your environment, in particular when the camera is only seeing the floor (not so textured) we have to set it low, otherwise no features are extracted and transformation cannot be computed. Note that using low quality features give worst transformations. 2. You may read this: http://docs.opencv.org/3.2.0/dc/d2c/tutorial_real_time_pose.html. 3. For Registration::computeTransform(), the third argument is a guess transform. If you predict the next pose, you may set it there. Merging different poses with a Kalman filter could be also done, though covariance should be carefully chosen. 4. Subscribe to rgb and depth topics and save them (save the depth in PNG). Also save the calibration somewhere. 5. A null guess transform is "no guess". This tells the registration algorithm to not assume that images are overlapping. When a guess is set, the registration can look for visual correspondences only in some parts of the images, instead of comparing all features together. This can be also useful when there are repetitive textures in the images, to good correspondences can be found. However, if the guess is poor, transformation estimation may not be possible. 6. The registration algorithm uses a RANSAC approach, so not always the features are matched the same between two runs. The version of the code have an effect too (bug fixes or default parameters have changed). Note that Odometry class can be used for estimating the pose instead of using directly Registration class. cheers, Mathieu |

Re: RTABMAP standalone for localization in xyz (Kinect Xbox 360)

|

This post was updated on .

If i have a program supplying the initial coordinate of the robot along with its orientation in angle and the axis are y up and x horizontal how do i add the transformations given by rtabmap visual odometry code?

What are the axis in case of RTAB-MAP? y-axis ^ | | .(Position of robot x,y,and making angle theta with x axis) | |---------------->x-axis up facing is the global y axis and right is global x axis and i need the position with reference to global axis |

|

Administrator

|

Hi,

rtabmap is x=foward, y=left, z=up. See ROS standard: http://www.ros.org/reps/rep-0103.html cheers Mathieu |

Re: RTABMAP standalone for localization in xyz (Kinect Xbox 360)

|

Is the x and y given with respect to global frame or the Kinect takes the line of sight as x and it's left as y (local)?

|

|

Administrator

|

In camera frame, images are:

Otherwise all transforms (local/global) are:

|

Re: RTABMAP standalone for localization in xyz (Kinect Xbox 360)

#include <rtabmap/core/RegistrationVis.h>

#include <rtabmap/core/CameraRGBD.h>

#include <rtabmap/core/util3d_transforms.h>

#include <rtabmap/utilite/ULogger.h>

#include <iostream>

#include <string>

#include <math.h>

#include <stdio.h>

#include <bits/stdc++.h>

using namespace rtabmap;

static float d2r(float d) {

return (d / 180.0) * ((float) M_PI);

}

float hx[6],hy[6];

float hdis[6];

std::ofstream xxx;

std::ifstream myfile;

int main(int argc, char * argv[])

{

CameraRGBDImages camera("/home/rahul/dataset6sem/classroom_testrun2/mono/", "/home/rahul/dataset6sem/classroom_testrun2/depth/");

Transform opticalRotation(0,0,1,0, -1,0,0,0, 0,-1,0,0);

camera.setLocalTransform(opticalRotation);

if(!camera.init(".", "calibration"))

{

printf("Failed to initialize the camera!\n");

return -1;

}

ParametersMap parameters;

parameters.insert(ParametersPair(Parameters::kVisMaxFeatures(), "2000"));

parameters.insert(ParametersPair(Parameters::kGFTTQualityLevel(), "0.0001"));

RegistrationVis registration(parameters);

SensorData frame ;

/*for(int i=0;i<6; ){

char ch;

std::cin>>ch;

if(ch=='y'){

frame = camera.takeImage();

i++;

cv::Mat rgb = frame.imageRaw();

/*std::string s = "/home/rahul/immono.jpg";

cv::imwrite( s, rgb );

cv::waitKey(1);

cv::Mat depth = frame.depthRaw();

s = "/home/rahul/imdepth.jpg";

cv::imwrite( s, depth );

cv::waitKey(1);

std::string filename = "/home/rahul/rtabmap_project/StartPoint.py";

std::string command = "python ";

command += filename;

system(command.c_str());

myfile.open("Start.txt");

myfile>>hx[i-1]>>hy[i-1];

std::cout<<hx[i-1]<<" "<<hy[i-1]<<endl;

myfile.close();

}

}*/

/*

for(int i=0;i<6;i++){

hdis[i]=0;

for(int j=0;j<6;j++){

hdis[i] += (hx[i]-hx[j])*(hx[i]-hx[j])+(hy[i]-hy[j])*(hy[i]-hy[j]);

}

}

float hmi=10000;

int hind=0;

for(int i=0;i<6;i++){

//std::cout<<hdis[i]<<" ";

if(hmi>hdis[i]){

hmi=hdis[i];

hind=i;

}

}

xxx.open("Start.txt");

xxx<<hx[hind]<<"_"<<hy[hind]<<"_0_.jpg";

xxx.close();*/

// frame = camera.takeImage();

/* cv::Mat rgb = frame.imageRaw();

std::string s = "/home/rahul/immono.png";

cv::imwrite( s, rgb );

cv::waitKey(1);

cv::Mat depth = frame.depthRaw();

s = "/home/rahul/imdepth.png";

cv::imwrite( s, depth );

cv::waitKey(1);

std::string filename = "/home/rahul/rtabmap_project/TestRum.py";

std::string command = "python ";

command += filename;

system(command.c_str());

myfile.open("Coord.txt");

myfile>>inx>>iny>>ang;

ang = ang;

inx = inx/100;

iny = iny/100;

ang = 90-ang;

*/

frame = camera.takeImage();

float inx,iny,ang;

std::cin>>inx>>iny>>ang;

ang = d2r(ang);

std::cout<<inx<<" "<<iny<<" "<<ang<<"\n";

myfile.close();

SensorData previousFrame = frame;

int k = 1;

Transform pose = Transform::getIdentity();

/* pose.data()[3] = inx;

pose.data()[7] = iny;

pose.data()[0] = cos(ang);

pose.data()[1] = -1*sin(ang);

pose.data()[2] = 0;

pose.data()[4] = sin(ang);

pose.data()[5] = cos(ang);

pose.data()[6] = 0;

pose.data()[8] = 0;

pose.data()[9] = 0;

pose.data()[10] = 1;

*/

while(frame.isValid())

{

Transform t = registration.computeTransformation(previousFrame, frame, Transform());

if(t.isNull())

{

printf("Could not compute motion\n");

}

else

{

pose *= t;

float x,y,z,roll,pitch,yaw,temp,tx,ty;

pose.getTranslationAndEulerAngles(x, y, z, roll, pitch, yaw);

temp = y;

// y = x;

// x = -1*temp;

printf("%d Motion orig: x = %f y = %f z = %f roll=%f pitch = %f yaw = %f\n",x,y,z,roll,pitch,yaw);

tx = x;

ty = y;

// x = (tx*cos(ang))-(ty*sin(ang));

// y = (ty*cos(ang))+(tx*sin(ang));

// printf("rahul %d Motion: x = %f y = %f \n",k,inx+tx,iny+ty);

// std::cout<<x<<y<<"Hi";

}

previousFrame = frame;

k++;

frame = camera.takeImage();

/*cv::Mat rgb = frame.imageRaw();

cv::Mat rgb1;

//frame1 = &frame;

//rgb = frame1->rgb;

//int id = frame;

std::string s = "/home/rahul/immono.png";

cv::imwrite( s, rgb );

//rgb1 = cv::imread("/home/rahul/im.jpg");

rgb.convertTo(rgb,CV_8UC3);

cv::imshow("Image",rgb);

cv::waitKey(1);

cv::waitKey(1);

cv::Mat depth = frame.depthRaw();

s = "/home/rahul/imdepth.png";

cv::imwrite( s, depth );

std::string filename = "/home/rahul/rtabmap_project/TestRum.py";

std::string command = "python ";

command += filename;

system(command.c_str());

myfile.open("Coord.txt");

float hinx,hiny,hang;

myfile>>hinx>>hiny>>hang;

std::cout<<hinx<<" "<<hiny<<" "<<hang<<"\n";

myfile.close();

// std::cout<<rgb1;

*/

}

return 0;

}

How should i modify this code if i want y as forward x as right and z as up? Initial input is starting point in x and y and angle in degree ( 5 5 180) 5 is x 5 is y and 180 is the angle with y axis clockwise Here is the dataset https://drive.google.com/file/d/0Bw8yiqrgUuZ9WlhRcnNsM21BQTg/view?usp=sharing |

|

Administrator

|

Hi,

Try this constructor of Transform: // Transform(float x, float y, float theta) Transform pose(inx, iny, ang); You will get for the first frame (instead of identity): 867941678 Motion orig: x = 5.000000 y = 5.000000 z = -0.000000 roll=0.000000 pitch = -0.000000 yaw = -3.141593 The ground plane is xy, with z up. So here the rotation is 180 degrees around z axis. To get y as forward x as right and z as up, we would have to rotate pose 90 over z axis. Example: Transform pose; // in rtabmap referential, x forward, y left, z up Transform rotation(0, 0, 0, 0, 0, PI/2); Transform poseNew = rotation * pose; // in y forward, x right, z up referentialFor example if you have pose(x=1,y=0), you wll get poseNew(x=0, y=1); Note that images should overlap to be able to compute transformations. cheers, Mathieu |

Re: RTABMAP standalone for localization in xyz (Kinect Xbox 360)

|

This post was updated on .

Hi,

I am still having a problem combining the original orientation of the robot with the rotation of axis what is the problem with this code? This code is reporting x as negative and the transformations are also not taking properly. Could you please try this code?

#include <rtabmap/core/RegistrationVis.h>

#include <rtabmap/core/CameraRGBD.h>

#include <rtabmap/core/util3d_transforms.h>

#include <rtabmap/utilite/ULogger.h>

#include <iostream>

#include <string>

#include <math.h>

#include <stdio.h>

#include <bits/stdc++.h>

using namespace rtabmap;

/*

4.125 7.125 180

3.375 3.375

5.625 1.875 60

*/

static float d2r(float d) {

return (d / 180.0) * ((float) M_PI);

}

float hx[6],hy[6];

float hdis[6];

std::ofstream xxx;

std::ifstream myfile;

int main(int argc, char * argv[])

{

CameraRGBDImages camera("/home/rahul/dataset6sem/classroom_testrun2/mono/", "/home/rahul/dataset6sem/classroom_testrun2/depth/");

Transform opticalRotation(0,0,1,0, -1,0,0,0, 0,-1,0,0);

camera.setLocalTransform(opticalRotation);

if(!camera.init(".", "calibration"))

{

printf("Failed to initialize the camera!\n");

return -1;

}

ParametersMap parameters;

parameters.insert(ParametersPair(Parameters::kVisMaxFeatures(), "2000"));

parameters.insert(ParametersPair(Parameters::kGFTTQualityLevel(), "0.0001"));

RegistrationVis registration(parameters);

SensorData frame ;

/*for(int i=0;i<6; ){

char ch;

std::cin>>ch;

if(ch=='y'){

frame = camera.takeImage();

i++;

cv::Mat rgb = frame.imageRaw();

/*std::string s = "/home/rahul/immono.jpg";

cv::imwrite( s, rgb );

cv::waitKey(1);

cv::Mat depth = frame.depthRaw();

s = "/home/rahul/imdepth.jpg";

cv::imwrite( s, depth );

cv::waitKey(1);

std::string filename = "/home/rahul/rtabmap_project/StartPoint.py";

std::string command = "python ";

command += filename;

system(command.c_str());

myfile.open("Start.txt");

myfile>>hx[i-1]>>hy[i-1];

std::cout<<hx[i-1]<<" "<<hy[i-1]<<endl;

myfile.close();

}

}*/

/*

for(int i=0;i<6;i++){

hdis[i]=0;

for(int j=0;j<6;j++){

hdis[i] += (hx[i]-hx[j])*(hx[i]-hx[j])+(hy[i]-hy[j])*(hy[i]-hy[j]);

}

}

float hmi=10000;

int hind=0;

for(int i=0;i<6;i++){

//std::cout<<hdis[i]<<" ";

if(hmi>hdis[i]){

hmi=hdis[i];

hind=i;

}

}

xxx.open("Start.txt");

xxx<<hx[hind]<<"_"<<hy[hind]<<"_0_.jpg";

xxx.close();*/

// frame = camera.takeImage();

/* cv::Mat rgb = frame.imageRaw();

std::string s = "/home/rahul/immono.png";

cv::imwrite( s, rgb );

cv::waitKey(1);

cv::Mat depth = frame.depthRaw();

s = "/home/rahul/imdepth.png";

cv::imwrite( s, depth );

cv::waitKey(1);

std::string filename = "/home/rahul/rtabmap_project/TestRum.py";

std::string command = "python ";

command += filename;

system(command.c_str());

myfile.open("Coord.txt");

myfile>>inx>>iny>>ang;

ang = ang;

inx = inx/100;

iny = iny/100;

ang = 90-ang;

*/

frame = camera.takeImage();

float inx,iny,ang;

std::cin>>inx>>iny>>ang;

ang = 90-ang;

ang = d2r(ang);

std::cout<<inx<<" "<<iny<<" "<<ang<<"\n";

myfile.close();

SensorData previousFrame = frame;

int k = 1;

Transform pose(inx,iny,ang);

Transform rotation(0,0,0,0,0,(float)M_PI/2);

Transform posenew ;

//pose = posenew;

/* pose.data()[3] = inx;

pose.data()[7] = iny;

pose.data()[0] = cos(ang);

pose.data()[1] = -1*sin(ang);

pose.data()[2] = 0;

pose.data()[4] = sin(ang);

pose.data()[5] = cos(ang);

pose.data()[6] = 0;

pose.data()[8] = 0;

pose.data()[9] = 0;

pose.data()[10] = 1;

*/

while(frame.isValid())

{

Transform t = registration.computeTransformation(previousFrame, frame, Transform());

if(t.isNull())

{

printf("Could not compute motion\n");

}

else

{

pose *= t;

posenew = rotation*pose;

float x,y,z,roll,pitch,yaw,temp,tx,ty;

posenew.getTranslationAndEulerAngles(x, y, z, roll, pitch, yaw);

temp = y;

// y = x;

// x = -1*temp;

printf("%d Motion orig: x = %f y = %f z = %f roll=%f pitch = %f yaw = %f\n",x,y,z,roll,pitch,yaw);

tx = x;

ty = y;

// x = (tx*cos(ang))-(ty*sin(ang));

// y = (ty*cos(ang))+(tx*sin(ang));

// printf("rahul %d Motion: x = %f y = %f \n",k,inx+tx,iny+ty);

// std::cout<<x<<y<<"Hi";

}

previousFrame = frame;

k++;

frame = camera.takeImage();

/*cv::Mat rgb = frame.imageRaw();

cv::Mat rgb1;

//frame1 = &frame;

//rgb = frame1->rgb;

//int id = frame;

std::string s = "/home/rahul/immono.png";

cv::imwrite( s, rgb );

//rgb1 = cv::imread("/home/rahul/im.jpg");

rgb.convertTo(rgb,CV_8UC3);

cv::imshow("Image",rgb);

cv::waitKey(1);

cv::waitKey(1);

cv::Mat depth = frame.depthRaw();

s = "/home/rahul/imdepth.png";

cv::imwrite( s, depth );

std::string filename = "/home/rahul/rtabmap_project/TestRum.py";

std::string command = "python ";

command += filename;

system(command.c_str());

myfile.open("Coord.txt");

float hinx,hiny,hang;

myfile>>hinx>>hiny>>hang;

std::cout<<hinx<<" "<<hiny<<" "<<hang<<"\n";

myfile.close();

// std::cout<<rgb1;

*/

}

return 0;

}

Thanks :) |

|

Administrator

|

Hi,

can you show a small example with transforms that you can manually set? Example:

Transform t1(1,0,0);

printf("%s\n", t1.prettyPrint.c_str());

Transform t2(0,0,PI/2);

printf("%s\n", t2.prettyPrint.c_str());

Transform t3 = t2 * t1;

printf("%s\n", t3.prettyPrint.c_str());

//Then describe what you want to have for t3 ?

cheers |

Re: RTABMAP standalone for localization in xyz (Kinect Xbox 360)

|

Sorry but I don't understand could you please explain further

|

Re: RTABMAP standalone for localization in xyz (Kinect Xbox 360)

|

Administrator

|

This doesn't work? Transform rotation(0, 0, 0, 0, 0, PI/2); Transform poseNew = rotation * pose; // in y forward, x right, z up referential You have to rotate the pose 90 degrees to put in your global frame. |

|

Administrator

|

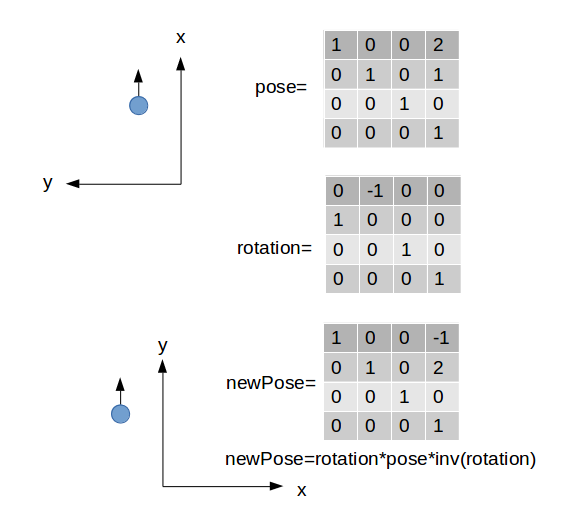

EDIT Missing final rotation to get theta right

Transform rotation(0, 0, 0, 0, 0, PI/2); Transform poseNew = rotation * pose * rotation.inverse(); // in y forward, x right, z up referential |

Re: RTABMAP standalone for localization in xyz (Kinect Xbox 360)

|

How do I integrate theta and initial coordinates into it where theta is the angle in making with global y also could please edit in the code I sent to you?

|

Re: RTABMAP standalone for localization in xyz (Kinect Xbox 360)

|

Hey Mattieu,

How can I transform my exported mesh/map to local/global frame using the GUI? I mean without using camera reference system. In addition, what is reference system of the mesh and the camera pose during tracking? Best, Bruno |

|

Administrator

|

Hi,

@brunoeducsantos, RTAB-Map uses same coordinate system than ROS, see REP103. If you export a mesh, you can change orientation/position in a 3D modeling software. By default the origin of the map is the first pose of odometry received. If the first pose has wrong orientation (e.g., ignoring gravity), then the whole map would be tilted. @rit2014006, did you try the "posenew = rotation * pose * rotation.inverse();"? Example here of transformation from RTAB-Map coordinate system to your coordinate system:  cheers, Mathieu |

«

Return to Official RTAB-Map Forum

|

1 view|%1 views

| Free forum by Nabble | Edit this page |