Raspberry stereo cams, ROS nav, RTAB

|

Hi M. Labbe - first post so I just want to say this project is amazing, by far the most complete and accessible I've seen!

I'm trying to add SLAM to an existing legged robot. I just about managed to get it all working (RTAB stereo odometry, RTAB-MAP, ROS navigation stack) on a Raspberry Pi Compute Module CM3 with the standard breakout board, with two standard Raspberry Pi cameras. ROS is Kinetic, RTAB is built from latest source a few weeks ago. It struggled to do even 5 frames per sec so I moved most of the nodes to a laptop, all except the camera publishers and the base controller. It starts out ok but quickly loses pose when the robot moves. I built a 3-axis gimbal for the camera head but it still gets some blurring from vibration. Even if I move it slowly by hand it quickly loses it with errors like: [ WARN] [1525461571.204964055]: Could not get robot pose, cancelling reconfiguration [ WARN] [1525461571.804925142]: Costmap2DROS transform timeout. Current time: 1525461571.8049, global_pose stamp: 1525461440.3336, tolerance: 1.0000 [ERROR] [1525461571.904596715]: Extrapolation Error looking up robot pose: Lookup would require extrapolation into the past. Requested time 1525461440.333629049 but the earliest data is at time 1525461561.984878136, when looking up transform from frame [base_link] to frame [map] Could you please have a look over my main launch file and see if I'm doing anything obviously stupid? <launch> <!-- navigation stack with RTAB visual odometry and mapping and stereo cams based on http://wiki.ros.org/rtabmap_ros/Tutorials/StereoOutdoorNavigation and http://wiki.ros.org/rtabmap_ros#rtabmap https://github.com/introlab/rtabmap_ros/blob/master/launch/azimut3/ az3_mapping_robot_stereo_nav.launch --> <!-- ROS navigation stack move_base --> <group ns="planner"> <remap from="openni_points" to="/planner_cloud"/> <remap from="base_scan" to="/base_scan"/> <!--<remap from="map" to="/rtabmap/proj_map"/> runtime tells me this is deprecated--> <remap from="map" to="/rtabmap/grid_map"/> <remap from="move_base_simple/goal" to="/planner_goal"/> <node pkg="move_base" type="move_base" respawn="false" name="move_base" output="screen"> <rosparam file="$(find mmnavigate)/launch/costmap_common_params.yaml" command="load" ns="global_costmap" /> <rosparam file="$(find mmnavigate)/launch/costmap_common_params.yaml" command="load" ns="local_costmap" /> <rosparam file="$(find mmnavigate)/launch/local_costmap_params.yaml" command="load" /> <rosparam file="$(find mmnavigate)/launch/global_costmap_params.yaml" command="load" /> <rosparam file="$(find mmnavigate)/launch/base_local_planner_params.yaml" command="load" /> </node> </group> <!-- stereo cameras --> <group ns="/stereo_camera"> <!-- TF: usb_cam should declare its TF frame as stereo_camera for RTAB. set up static transform for RTAB: static_transform_publisher x y z qx qy qz qw frame_id child_frame_id period_in_ms --> <arg name="pi/2" value="1.5707963267948966" /> <arg name="optical_rotate" value="0 0 0.2 -$(arg pi/2) 0 -$(arg pi/2)" /> <!-- added z offset of 0.2m, hope that's right --> <node pkg="tf" type="static_transform_publisher" name="camera_base_link" args="$(arg optical_rotate) base_link stereo_camera 100" /> <!-- increased speed from 100 to 50ms --> <!-- bring compressed images over wifi and uncompress for stereo_image_proc --> <node name="republish_left" type="republish" pkg="image_transport" args="compressed in:=left/image_raw raw out:=left/image_raw_relay" /> <node name="republish_right" type="republish" pkg="image_transport" args="compressed in:=right/image_raw raw out:=right/image_raw_relay" /> <!-- what's stereo_nodelet for? pointcloud nodelets use it? --> <node pkg="nodelet" type="nodelet" name="stereo_nodelet" args="manager"/> <!-- ROS image pipeline: stereo_image_proc for image rectification --> <node pkg="stereo_image_proc" type="stereo_image_proc" name="stereo_image_proc"> <param name="disparity_range" value="128"/> <!-- default 64; 128 set by RTAB --> <param name="approximate_sync" value="true" /> <!-- set by me as I think it'll be needed, it seemed to be for calibration --> <remap from="left/image_raw" to="left/image_raw_relay"/> <remap from="right/image_raw" to="right/image_raw_relay"/> <remap from="left/camera_info" to="left/camera_info"/> <remap from="right/camera_info" to="right/camera_info"/> <!--remap works: from=expected, to=actual--> </node> <!-- Generate a point cloud from the disparity image --> <!-- RTAB point_cloud_xyz --> <node pkg="nodelet" type="nodelet" name="disparity2cloud" args="load rtabmap_ros/point_cloud_xyz stereo_nodelet"> <!-- 4 subs - supplied by depth/image (sensor_msgs/Image) - ? depth/camera_info (sensor_msgs/CameraInfo) - ? "Construct a point cloud from a depth or disparity image." disparity/image (stereo_msgs/DisparityImage) - stereo_proc disparity/camera_info (sensor_msgs/CameraInfo) - stereo_cam --> <remap from="disparity/image" to="disparity"/> <remap from="disparity/camera_info" to="right/camera_info"/> <!-- 1 Pub: cloud (sensor_msgs/PointCloud2) --> <remap from="cloud" to="cloudXYZ"/> <!-- 8 Params --> <!--<param name="queue_size" type="int" value="10"/>def=10--> <param name="approx_sync" type="bool" value="true"/><!--def=true--> <param name="decimation" type="int" value="4"/><!--def=1(off)--> <param name="voxel_size" type="double" value="0.05"/><!--def=0.0(off)--> <!--<param name="min_depth" type="double" value="0.0"/>def=0.0--> <param name="max_depth" type="double" value="4"/><!--def=0.0(off)--> <!--<param name="noise_filter_radius" type="double" value="0.0"/>def=0.0(off)--> <!--<param name="noise_filter_min_neighbors" type="int" value="5"/>def=5--> </node> <!-- Create point cloud for the local planner --> <!-- RTAB obstacles_detection --> <node pkg="nodelet" type="nodelet" name="obstacles_detection" args="load rtabmap_ros/obstacles_detection stereo_nodelet"> <!-- 1 Sub - supplied by cloud (sensor_msgs/PointCloud2) - point_cloud_xyz --> <remap from="cloud" to="cloudXYZ"/> <!-- 2 Pubs: ground (sensor_msgs/PointCloud2) obstacles (sensor_msgs/PointCloud2) --> <remap from="obstacles" to="/planner_cloud"/> <!-- 6 Params --> <param name="frame_id" type="string" value="base_link"/><!--def=base_link--> <!--<param name="queue_size" type="int" value="10"/>def=10--> <!--<param name="normal_estimation_radius" type="double" value="0.05"/>def=0.05--> <!--<param name="ground_normal_angle" type="double" value="PI/4"/>def=PI/4--> <!-- extra params from example launch: --> <param name="map_frame_id" type="string" value="map"/> <param name="wait_for_transform" type="bool" value="true"/> </node> <!-- RTAB stereo_odometry --> <node pkg="rtabmap_ros" type="stereo_odometry" name="stereo_odometry" output="screen"> <!-- 4 subs - produced by left/image_rect - stereo_proc left/camera_info - stereo_cam right/image_rect - stereo_proc right/camera_info - stereo_cam --> <!--<remap from="left/image_rect" to="/stereo_camera/left/image_rect"/> <remap from="right/image_rect" to="/stereo_camera/right/image_rect"/> <remap from="left/camera_info" to="/stereo_camera/left/camera_info"/> <remap from="right/camera_info" to="/stereo_camera/right/camera_info"/>--> <!-- no pubs listed in wiki? just does a TF? yet this looks like an output remap: --> <!--<remap from="odom" to="/odometry"/>--> <param name="frame_id" type="string" value="base_link"/> <param name="odom_frame_id" type="string" value="odom"/> <param name="approx_sync" type="bool" value="true" /><!-- changed this --> <param name="queue_size" type="int" value="5"/> <!-- runtime told me to change Odom to Vis in these params from example--> <param name="Vis/InlierDistance" type="string" value="0.1"/> <param name="Vis/MinInliers" type="string" value="10"/> <param name="Vis/RoiRatios" type="string" value="0.03 0.03 0.04 0.04"/> <param name="Vis/MaxDepth" type="string" value="10"/> </node> </group> <!-- end group stereo_camera --> <group ns="rtabmap"> <!-- RTAB Mapping: args: "delete_db_on_start" and "udebug" --> <node name="rtabmap" pkg="rtabmap_ros" type="rtabmap" output="screen" args="--delete_db_on_start"> <!-- 11 subs - supplied by odom - RTAB /stereo_odometry left/image_rect - /stereo_camera left/camera_info - /stereo_camera right/image_rect - /stereo_camera right/camera_info - /stereo_camera unused: rgb/image - only for subscribe_depth rgb/camera_info - Not needed for subscribe_stereo depth/image - only for subscribe_depth scan - only for subscribe_scan scan_cloud - only for subscribe_scan_cloud goal (geometry_msgs/PoseStamped) - can do path planning intenally? --> <remap from="left/image_rect" to="/stereo_camera/left/image_rect_color"/> <!-- one side colour and the other mono? ok --> <remap from="right/image_rect" to="/stereo_camera/right/image_rect"/> <remap from="left/camera_info" to="/stereo_camera/left/camera_info"/> <remap from="right/camera_info" to="/stereo_camera/right/camera_info"/> <remap from="odom" to="/stereo_camera/odom"/> <!-- 12 Pubs: info mapData mapGraph grid_map proj_map cloud_map scan_map labels global_path local_path goal_reached goal_out --> <param name="frame_id" type="string" value="base_link"/> <param name="subscribe_stereo" type="bool" value="true"/> <param name="subscribe_depth" type="bool" value="false"/> <param name="approx_sync" type="bool" value="true"/><!-- changed this--> <remap from="left/image_rect" to="/stereo_camera/left/image_rect_color"/> <remap from="right/image_rect" to="/stereo_camera/right/image_rect"/> <remap from="left/camera_info" to="/stereo_camera/left/camera_info"/> <remap from="right/camera_info" to="/stereo_camera/right/camera_info"/> <remap from="odom" to="/stereo_camera/odom"/> <param name="queue_size" type="int" value="30"/> <!-- RTAB-Map lib parameters --> <param name="Rtabmap/TimeThr" type="string" value="700"/> <param name="Rtabmap/DetectionRate" type="string" value="1"/> <param name="Vis/MinInliers" type="string" value="10"/> <param name="Vis/InlierDistance" type="string" value="0.1"/> </node> </group> </launch> |

|

Administrator

|

Hi,

Computing stereo disparity and stereo odometry on the RPI is quite a lot! Odometry should run at least at 10 Hz to not have to move very slowly. You can check with "rostopic hz /stereo_camera/odom" what is the rate you can achieve with your current setup. If your robot has already a decent odometry (from wheel odometry/IMU), you may use it directly instead of using stereo_odometry to save a lot of computing resources. Next issue is how did you calibrate the cameras? After calibration, you can inspect the disparity image to see if stereo rectification is good (if so, the disparity should be dense on textured surfaces). Last issue is that you set "approx_sync:=true". With dealing with stereo cameras, it is very important that left and right images are hardware synchronized. Without cameras exactly synchronized, it is likely that the disparity of the stereo images will change when the robot is moving (giving wrong distance of objects form the camera). There is a topic here about synchronization of PI cameras that seems not possible: https://www.raspberrypi.org/forums/viewtopic.php?f=43&t=48238&p=377084&hilit=%2bsync#p377084 cheers, Mathieu |

|

I moved most of the nodes to my laptop, much faster. The camera nodes on the Pi can run at 20 FPS now.

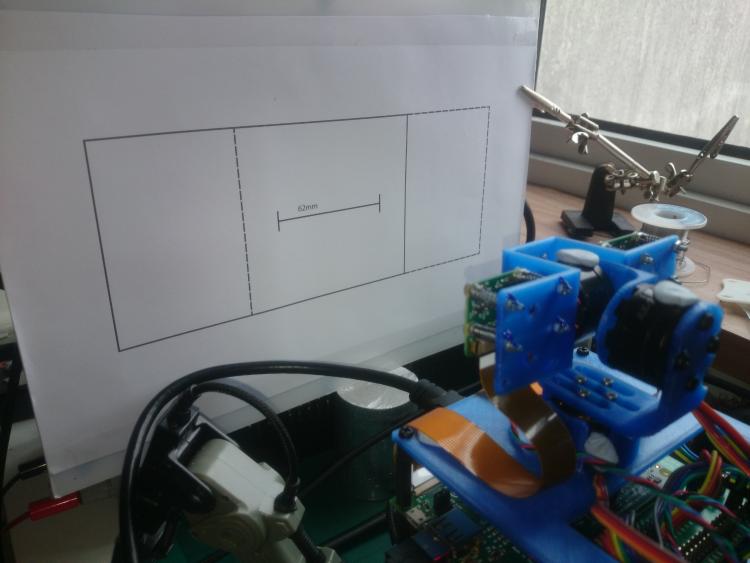

edit: one of the issues was time sync between laptop and Pi but I beleive I've fixed that with a local NTP server. I align the cameras using a homemade template as in the pics here:   Far from perfect. and then use ROS's own stereo calibration tool as per the wiki. I wasn't able to view the disparity images, though I didn't try very hard. That's a really good hint. I'll try again. I'm using a Pi Compute board with the 2 camera inputs, which should allow decent sync... I think, but then I'm using 2 separate usb_cam nodes which probably ruins the sync. I probably need to find or make a node that can use raspicam or raspivid at a high framerate to improve sync. I think the standard cameras are basically terrible for this, but I really want to do it with cheap standard parts if possible, not just for me but for other low budget hobbyists. I've ordered slightly better pi-compatible cameras with wide angle lenses, I'll see how much that helps. I have IMUs on the robot. I wonder if I could do some sort of Kalman filter sensor fusion to improve the confidence of the visual odometry? Not sure where to start with that though. Thanks for the advice Mathieu, I'll get back to you again once i've spent time trying to remedy some of these issues. |

|

Hi Mathieu,

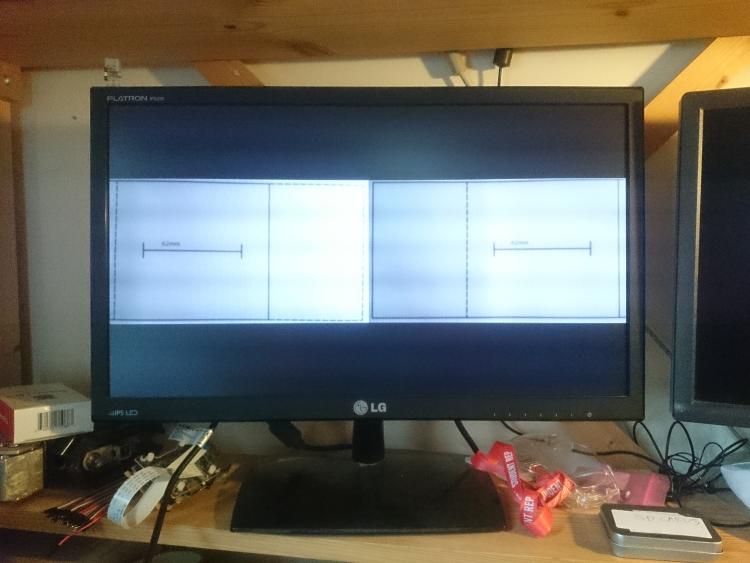

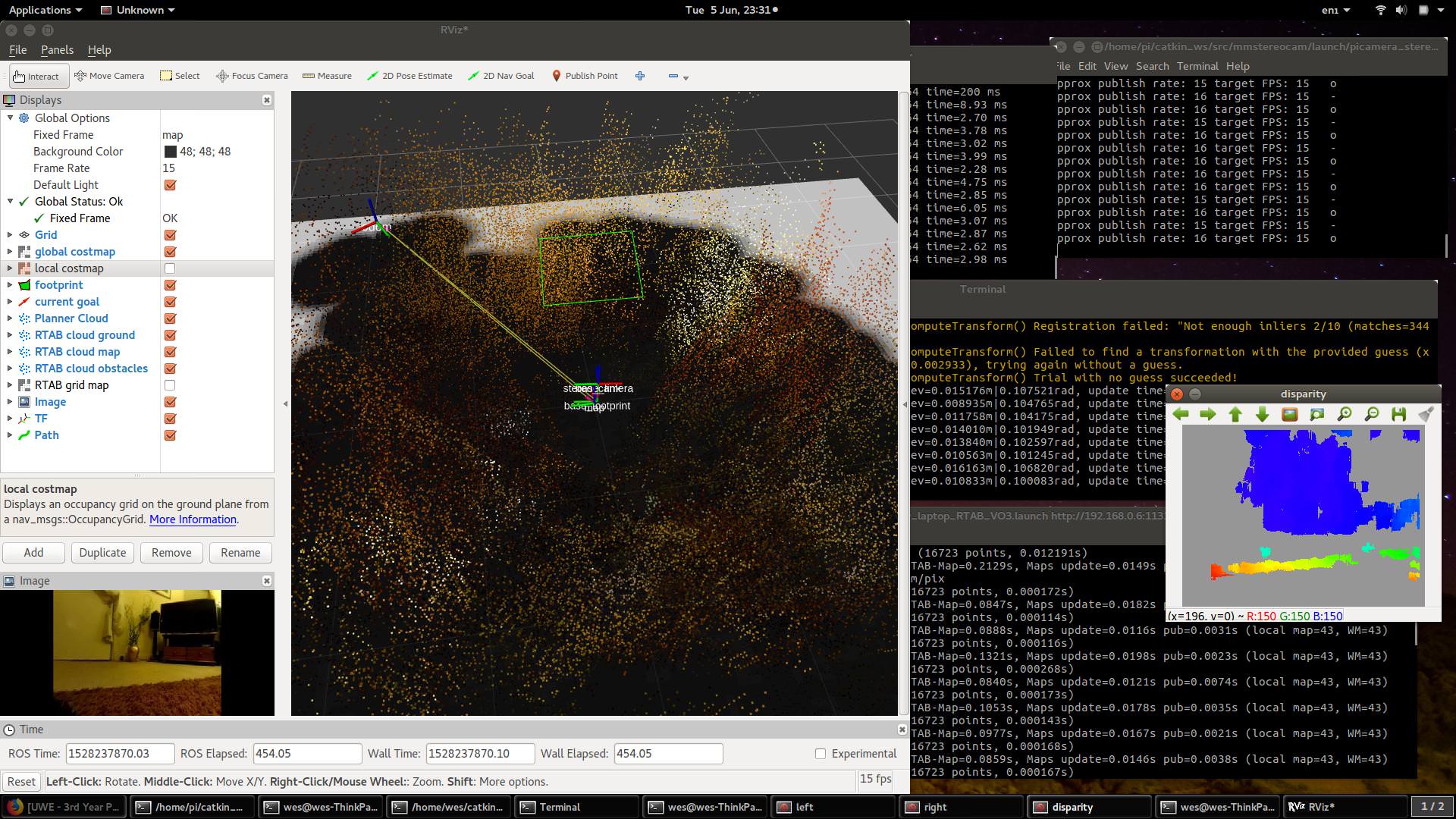

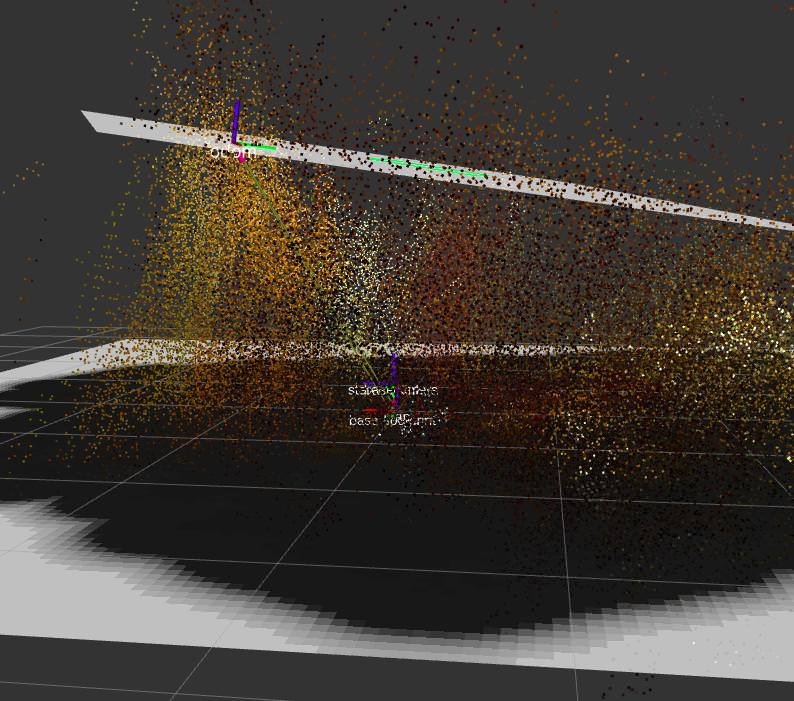

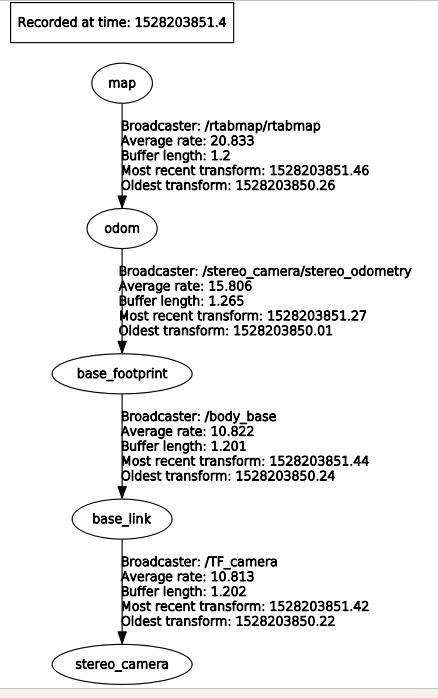

I rebuilt the camera head and wrote a custom camera node. It now has wide angle cameras, better aligned, and the sync is good, better than 1/60 sec at least, as measured by taking screenshots of a millisec stopwatch on my phone, which has a 60Hz screen. The disparity looks pretty good as shown in the right of the first image below. Everything runs at 15Hz comfortably, with 320x240 images. The odometry generally works really well now, I can roam around the room even when the light is bad and it will throw very few errors, and the TF shows the location correctly when I take it back to the start. But, the point cloud and occupancy grid map are messed up. The second image below shows how the odom frame and the local costmap plane have drifted badly, and I suspect the 'smearing' of the pointcloud is linked to that? Clearly I'm still doing something wrong. i have pasted the relevant files below. Could you please have a look?

Launchfile on the Pi: <launch> <group ns="/stereo_camera"> <node name="stereo_pub" pkg="mmstereocam" type="picamera_node.py" output="screen" > <!-- no params --> </node> <node name="repub_RGB_as_JPG_left" type="republish" pkg="image_transport" args="raw in:=left/image_raw compressed out:=left/image_raw" /> <node name="repub_RBG_as_JPG_right" type="republish" pkg="image_transport" args="raw in:=right/image_raw compressed out:=right/image_raw" /> </group> <!-- TF: camera should declare it's TF frame as stereo_camera for RTAB. set up static transform for RTAB: --> <arg name="pi/2" value="1.5707963267948966" /> <arg name="optical_rotate" value="0 0 0 -$(arg pi/2) 0 -$(arg pi/2)" /> <node pkg="tf" type="static_transform_publisher" name="TF_camera" args="$(arg optical_rotate) base_link stereo_camera 100" /> </launch> Laptop launch file: <launch> <!-- navigation stack with RTAB visual odometry and mapping and stereo cams based on http://wiki.ros.org/rtabmap_ros/Tutorials/StereoOutdoorNavigation and http://wiki.ros.org/rtabmap_ros#rtabmap https://github.com/introlab/rtabmap_ros/blob/master/launch/azimut3/ az3_mapping_robot_stereo_nav.launch --> <!--remap works: from=expected, to=actual--> <!-- ROS navigation stack move_base --> <group ns="planner"> <!-- sub remaps --> <remap from="openni_points" to="/planner_cloud"/> <!--<remap from="base_scan" to="/base_scan"/> --> <remap from="map" to="/rtabmap/grid_map"/> <!--<remap from="map" to="/rtabmap/proj_map"/> deprecated --> <remap from="move_base_simple/goal" to="/planner_goal"/> <node pkg="move_base" type="move_base" respawn="false" name="move_base" output="screen"> <rosparam file="$(find mmnavigate)/launch/costmap_common_params.yaml" command="load" ns="global_costmap" /> <rosparam file="$(find mmnavigate)/launch/costmap_common_params.yaml" command="load" ns="local_costmap" /> <rosparam file="$(find mmnavigate)/launch/local_costmap_params.yaml" command="load" /> <rosparam file="$(find mmnavigate)/launch/global_costmap_params.yaml" command="load" /> <rosparam file="$(find mmnavigate)/launch/base_local_planner_params.yaml" command="load" /> </node> </group> <!-- TF: base_footprint to base_link; floor to body centre --> <node pkg="tf" type="static_transform_publisher" name="body_base" args="0 0 0.2 0 0 0 base_footprint base_link 100" /> <group ns="/stereo_camera"> <!-- bring compressed images over wifi and uncompress for stereo_image_proc --> <node name="republish_left" type="republish" pkg="image_transport" args="compressed in:=left/image_raw raw out:=left/image_raw_relay" /> <node name="republish_right" type="republish" pkg="image_transport" args="compressed in:=right/image_raw raw out:=right/image_raw_relay" /> <!-- what's stereo_nodelet for? pointcloud nodelets use it? --> <node pkg="nodelet" type="nodelet" name="stereo_nodelet" args="manager"/> <!-- ROS image pipeline: stereo_image_proc for image rectification --> <node pkg="stereo_image_proc" type="stereo_image_proc" name="stereo_image_proc"> <!-- sub remaps --> <remap from="left/image_raw" to="left/image_raw_relay"/> <remap from="right/image_raw" to="right/image_raw_relay"/> <param name="disparity_range" value="32"/> <param name="approximate_sync" value="false" /> </node> <!-- RTAB point_cloud_xyz - Generate a point cloud from the disparity image --> <node pkg="nodelet" type="nodelet" name="disparity2cloud" args="load rtabmap_ros/point_cloud_xyz stereo_nodelet"> <!-- sub remaps --> <remap from="disparity/image" to="disparity"/> <remap from="disparity/camera_info" to="right/camera_info"/> <!-- Pub remaps --> <!-- cloud (sensor_msgs/PointCloud2) --> <remap from="cloud" to="cloudXYZ"/> <param name="approx_sync" type="bool" value="false"/><!--def=true--> <param name="decimation" type="int" value="2"/><!--def=1(off) only needed for high res feeds?--> <param name="voxel_size" type="double" value="0.05"/><!--def=0.0(off) metres--> <param name="max_depth" type="double" value="4"/><!--def=0.0(off) in metres --> </node> <!-- RTAB obstacles_detection - extract obstacles from point cloud for nav stack used as fake laser scan? --> <node pkg="nodelet" type="nodelet" name="obstacles_detection" args="load rtabmap_ros/obstacles_detection stereo_nodelet"> <!-- Sub remaps --> <remap from="cloud" to="cloudXYZ"/> <!-- 2 pubs: ground (sensor_msgs/PointCloud2) obstacles (sensor_msgs/PointCloud2) --> <!--<remap from="obstacles" to="/planner_cloud"/>--> <param name="frame_id" type="string" value="base_footprint"/><!--def=base_link--> </node> <!-- RTAB stereo_odometry --> <node pkg="rtabmap_ros" type="stereo_odometry" name="stereo_odometry" output="screen" launch-prefix="gnome-terminal --command"><!-- to second window as it's chatty --> <param name="frame_id" type="string" value="base_footprint"/> <param name="odom_frame_id" type="string" value="odom"/> <param name="approx_sync" type="bool" value="false" /> <param name="queue_size" type="int" value="5"/> <param name="Vis/InlierDistance" type="string" value="0.1"/> <param name="Vis/MinInliers" type="string" value="10"/> <param name="Vis/RoiRatios" type="string" value="0.03 0.03 0.04 0.04"/> <param name="Vis/MaxDepth" type="string" value="10"/> </node> </group><!-- stereo camera group --> <group ns="rtabmap"> <!-- RTAB Mapping: args: "delete_db_on_start" and "udebug" --> <node name="rtabmap" pkg="rtabmap_ros" type="rtabmap" output="screen" args="--delete_db_on_start"> <!-- sub remaps --> <remap from="left/image_rect" to="/stereo_camera/left/image_rect_color"/> <remap from="right/image_rect" to="/stereo_camera/right/image_rect"/> <remap from="left/camera_info" to="/stereo_camera/left/camera_info"/> <remap from="right/camera_info" to="/stereo_camera/right/camera_info"/> <remap from="odom" to="/stereo_camera/odom"/> <param name="frame_id" type="string" value="base_footprint"/> <param name="subscribe_stereo" type="bool" value="true"/> <param name="subscribe_depth" type="bool" value="false"/> <param name="approx_sync" type="bool" value="false"/> <param name="queue_size" type="int" value="30"/> <!-- RTAB-Map lib parameters --> <param name="Rtabmap/TimeThr" type="string" value="700"/> <param name="Rtabmap/DetectionRate" type="string" value="1"/> <param name="Vis/MinInliers" type="string" value="10"/> <param name="Vis/InlierDistance" type="string" value="0.1"/> </node> </group> </launch> costmap common params: obstacle_range: 2.5 raytrace_range: 3.0 footprint: [[ 0.3, 0.3], [-0.3, 0.3], [-0.3, -0.3], [ 0.3, -0.3]] #RTAB footprint_padding: 0.03 #robot_radius: 0.25 # in normal walking stances it isn't bigger than this inflation_radius: 0.55 # the radius to which the cost scaling function is applied transform_tolerance: 1 controller_patience: 2.0 NavfnROS: allow_unknown: true recovery_behaviors: [ {name: conservative_clear, type: clear_costmap_recovery/ClearCostmapRecovery}, {name: aggressive_clear, type: clear_costmap_recovery/ClearCostmapRecovery} ] conservative_clear: reset_distance: 3.00 aggressive_clear: reset_distance: 1.84 global costmap params: global_costmap: global_frame: map robot_base_frame: base_footprint update_frequency: 0.5 publish_frequency: 0.5 static_map: true local costmap params: local_costmap: global_frame: odom robot_base_frame: base_footprint update_frequency: 2.0 publish_frequency: 2.0 static_map: false rolling_window: true width: 4.0 height: 4.0 resolution: 0.05 origin_x: -2.0 origin_y: -2.0 observation_sources: point_cloud_sensor # assuming receiving a cloud from rtabmap_ros/obstacles_detection node point_cloud_sensor: { sensor_frame: base_footprint, data_type: PointCloud2, topic: openni_points, expected_update_rate: 0.5, marking: true, clearing: true, min_obstacle_height: -99999.0, max_obstacle_height: 99999.0} base local planner params: TrajectoryPlannerROS: max_vel_x: 0.45 min_vel_x: 0.1 max_vel_theta: 1.0 min_in_place_vel_theta: 0.4 acc_lim_theta: 3.2 acc_lim_x: 2.5 acc_lim_y: 2.5 holonomic_robot: true |

|

Administrator

|

Hi,

Can you share the resulting database? Note that by default parameters for creating the occupancy grid use decimation of 4 (parameter "Grid/DepthDecimation") for rtabmap node. With your small images, you may decrease to 2 or 1. If your robot is moving on the ground, you may also set "Reg/Force3DoF" to true for both stereo odometry and rtabmap nodes. This will filter position errors in z, as well as angular errors in pitch and roll. cheers, Mathieu |

«

Return to Official RTAB-Map Forum

|

1 view|%1 views

| Free forum by Nabble | Edit this page |