Reduce drift with 3× RealSense D455 with no FOV overlap

|

Hi Mathieu and RTAB-Map community,

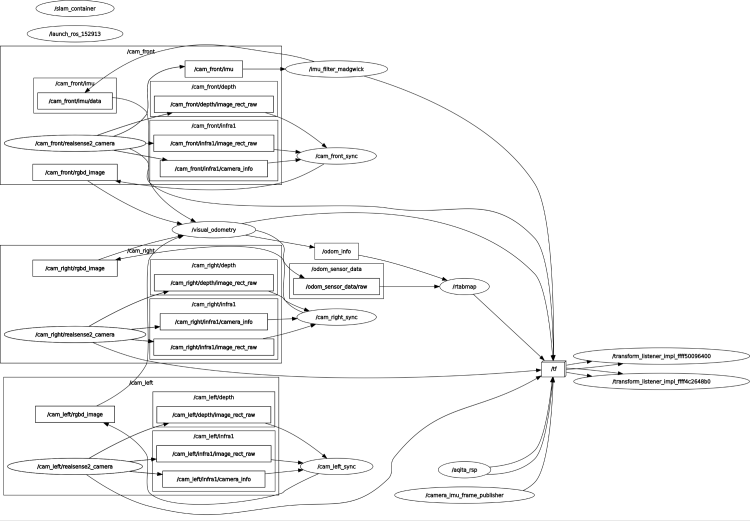

First of all, thanks for the great work and your involvement in helping people like me! I've spent a couple of months trying to use the RtabMap algorithm for a rig with 3 RealSense D455 cameras (non-overlapping FOV, triangular pattern - front/left/right at 90° spacing). No matter what I try, I still get drift that I think is more than it should be. I've also evaluated some of the parameters on the EuRoC dataset, and it seems that the performance is much better there. On Machine Hall Easy, I get <2cm RMSE at 48 meters, and with my setup, I manage to see ~50+cm or more when doing a 10-20 meter walk with it. I can't measure exact values as I don't have a GT, but rough measurements confirm this. As my use case is a drone, I really can't afford such drift, especially along the Z axis. Until now, I've tested with different feature extractors, and I thought SuperPoint may solve the issues, but although it was a little bit more robust, I don't see a huge benefit in using this feature type. At this point, my prime suspect is the camera's parameters or calibration of its extrinsics. Some questions: Are my parameters below appropriate for this setup? How can I optimize them? How can I use most of my GPU? How critical is TF tree accuracy for non-overlapping cameras? What precision is needed? I have a machined frame with, I believe, accurate measurements to the left eye. My best odom parameters are these: "Vis/FeatureType": "11" "SuperPoint/ModelPath": "/path/superpoint_v1.pt" "SuperPoint/Cuda": "true" "SuperPoint/Threshold": "0.010" "SuperPoint/NMS": "true" "SuperPoint/NMSRadius": "4" "Vis/MaxFeatures": "2500" "Kp/MaxFeatures": "1250" "SIFT/Gpu": "true" "ORB/Gpu": "true" "FAST/Gpu": "true" "GFTT/Gpu": "true" "Vis/CorNNType": "1" "Vis/CorNNDR": "0.7" "Vis/CorFlowGpu": "false" "Vis/CorGuessMatchToProjection": "false" "Kp/Parallelized": "true" "Kp/NNStrategy": "1" "Vis/EstimationType": "0" "Vis/MinInliers": "10" "Vis/InlierDistance": "0.1" "Vis/RefineIterations": "10" "OdomF2M/BundleAdjustment": "1" "OdomF2M/BundleAdjustmentMaxFrames": "15" "OdomF2M/MaxSize": "5000" "OdomF2M/MaxNewFeatures": "500" "OdomF2M/ValidDepthRatio": "0.85" "OdomF2M/ScanSubtractRadius": "0.05" "Odom/Strategy": "0" "Odom/KeyFrameThr": "0.2" "Odom/ImageBufferSize": "3" "Odom/ImageDecimation": "1" "Odom/ScanKeyFrameThr": "0.2" "Odom/VisKeyFrameThr": "100" "Optimizer/Strategy": "1" "Optimizer/Robust": "false" "g2o/Optimizer": "0" "g2o/Solver": "3" "g2o/PixelVariance": "1.0" subscribe_rgbd: true rgbd_cameras: 3 approx_sync: false wait_imu_to_init: true  RealSense D455 Configuration (3 cameras): Resolution: 424x240@30fps (depth + RGB/infra1) Hardware sync: Front camera = MASTER (mode 1), Left/Right = SLAVE (mode 2) Auto exposure enabled on all cameras for consistent lighting Filters: Spatial (mag=2, alpha=0.5, delta=20), Temporal (alpha=0.4,delta=20) Depth range: 0.3m to 20m (threshold filter) Visual preset: 3 or 4 IMU: Front camera only @ 200Hz, Madgwick filter (maybe a complementary filter would be better) Any guidance on debugging this multi-camera setup or recommendations for parameter tuning would be greatly appreciated. I'm particularly interested in understanding if the extrinsic calibration accuracy is the likely culprit and what methods are recommended for calibrating non-overlapping multi-camera systems. Thanks in advance for any help! Best regards |

|

Administrator

|

Hi,

Is approx_sync set to false to sync nodes too? So everything should have the same stamp? For odometry, using SuperPoint won't help that much. Is rtabmap build with OpenGV? It should if you want to do multi-camera VO. I assume it is also built with g2o based on the EuRoC results you get. If there is an extrinsics issue, you could launch rtabmap_viz with subscribe_odom, subscribe_odom_info and subscribe_rgbd to true, then open Odometry View and check if there are inliers in all cameras all the time. If one or two cameras don't get any inliers, then there is something wrong with the extrinsics. For odometry node, you may temporary use Odom/Strategy=1 with Vis/CorType=1 (F2F optical flow) to better see in the Odometry View if features from all cameras are tracked equally. Doublecheck that the depth image is correctly aligned with the RGB frame used. Note that you may debug using infra1+depth without IR emitter enabled to remove the any depth registration error or time sync error between RGB and IR form the testing system. The static TF between the camera should be as accurate as possible, but may still work if not perfectly set, though there will be more drift. For how to calibrate non-overlapping cameras, this post seems giving good insights about it. (github of the first answer) cheers, Mathieu |

|

Hi,

Yes, RtabMap is built with many deps. I created a custom Docker image for that. Seems like there are features in all of the cameras. Although I observe a saw-like graph when plotting the inliners from the odometry. I think these are due to new keyframes. I'm still trying to calibrate all three RealSense cameras using one of the IMUs as a bridge, but I'm not seeing great results. During testing, I noticed a behavior that I cannot fully explain, but basically, I lift the prototype and walk for ~25-30 meters forward while trying to keep it at the same height. The strange thing is that if I move forward, I accumulate 20-30cm of drift, and if I move backward ( the front-facing camera looking back and I walk backward), the Z drift is much smaller at around 5-10cm. This is more or less the same regardless of if im using the IMU or not. I am monitoring the TF position in RViz for these tests. Seems like either the initial map estimation is a bit tilted or something else is happening. Do you have an idea what might cause such behavior, and how this can be minimized? I'm thinking of adding a rangefinder pointing towards the ground. Not sure what the best way to integrate it is yet. Also, if visual odometry is the problem, will ICP odometry from a lidar be better, with less drift? RTAB-Map: 0.23.2 PCL: 1.12.1 With VTK: 9.1.0 OpenCV: 4.10.0 With OpenCV xfeatures2d: true With OpenCV nonfree: true With ORB OcTree: true With SuperPoint Torch: true With Python3: true With FastCV: false With OpenGV: true With Madgwick: true With PDAL: true With libLAS: false With CudaSift: true With TORO: true With g2o: true With GTSAM: true With Vertigo: true With CVSBA: false With Ceres: false With OpenNI: true With OpenNI2: true With Freenect: false With Freenect2: false With K4W2: false With K4A: false With DC1394: true With FlyCapture2: false With ZED: false With ZED Open Capture: false With RealSense: false With RealSense SLAM: false With RealSense2: true With MYNT EYE S: false With DepthAI: true With XVisio SDK: false With libpointmatcher: true With CCCoreLib: false With Open3D: true With OctoMap: true With GridMap: false With cpu-tsdf: false With open chisel: false With Alice Vision: false With LOAM: false With FLOAM: false With FOVIS: false With Viso2: false With DVO: false With ORB_SLAM: false With OKVIS: false With MSCKF_VIO: false With VINS-Fusion: false With OpenVINS: false |

|

Administrator

|

Can you share the database of a mapping session showing the issue?

Without IMU, if the camera is tilted on start, the z would increase or decrease, making the map looks a slope. With IMU, if the TF transform between the camera and IMU is wrong, that could give the same effect. Lidar Odometry/SLAM can suffer from similar drift than visual odometry. Note that using a different visual odometry apporach could also increase or decrease the drift. Is visual odometry done using all 3 cameras or just one? If vo is using 3 cameras, the extrinsics between the cameras should be accurate and ideally all hardware time synchronized. |

«

Return to Official RTAB-Map Forum

|

1 view|%1 views

| Free forum by Nabble | Edit this page |