RtabMap on a drone Problem

|

Hi, I built a drone with rtabmap running on a jetson tx1.

I fuse the visual odometry data with the imu of the drone. Now I've got this type of performance moving the drone very slowly. https://www.dropbox.com/s/x8j62ckc6jnunwz/rtabmap_11Apr.db?dl=0 Is there a way to improve the online results? i want to use the model for planning purpose.

|

|

Administrator

|

Hi,

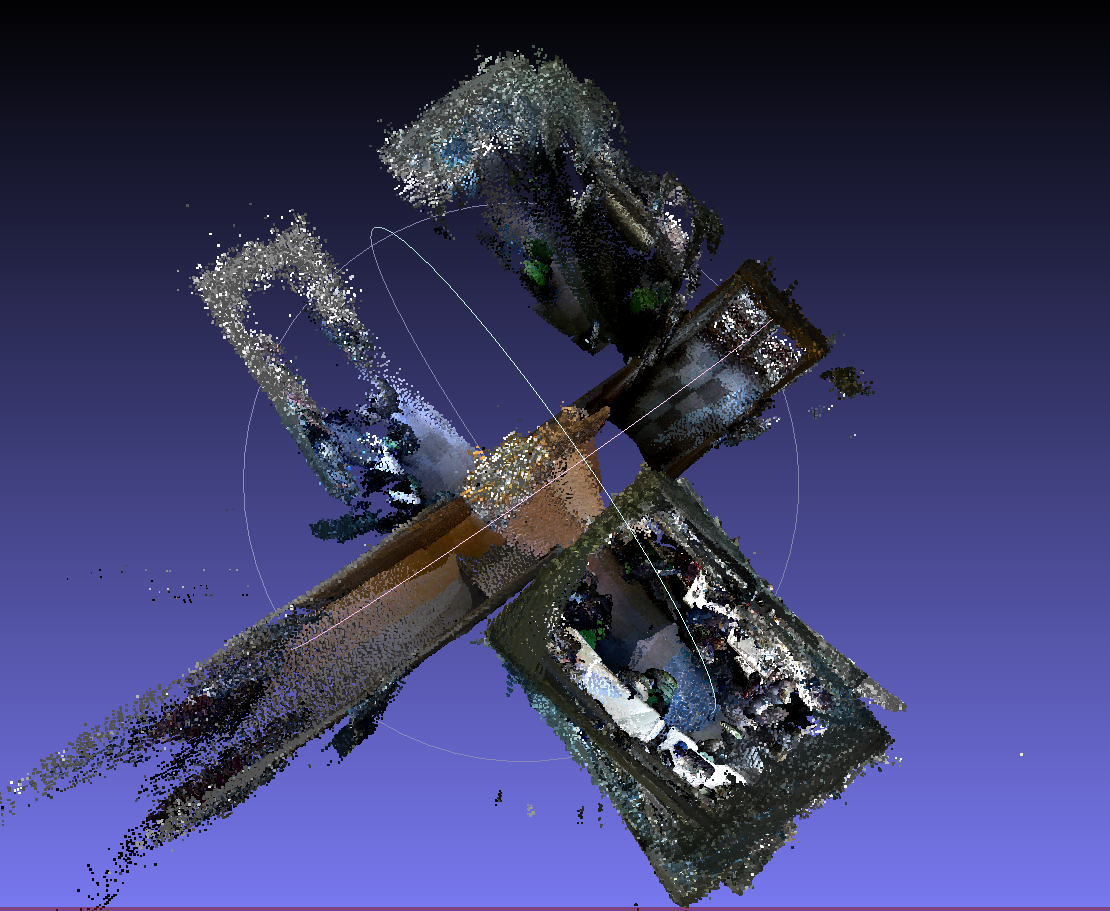

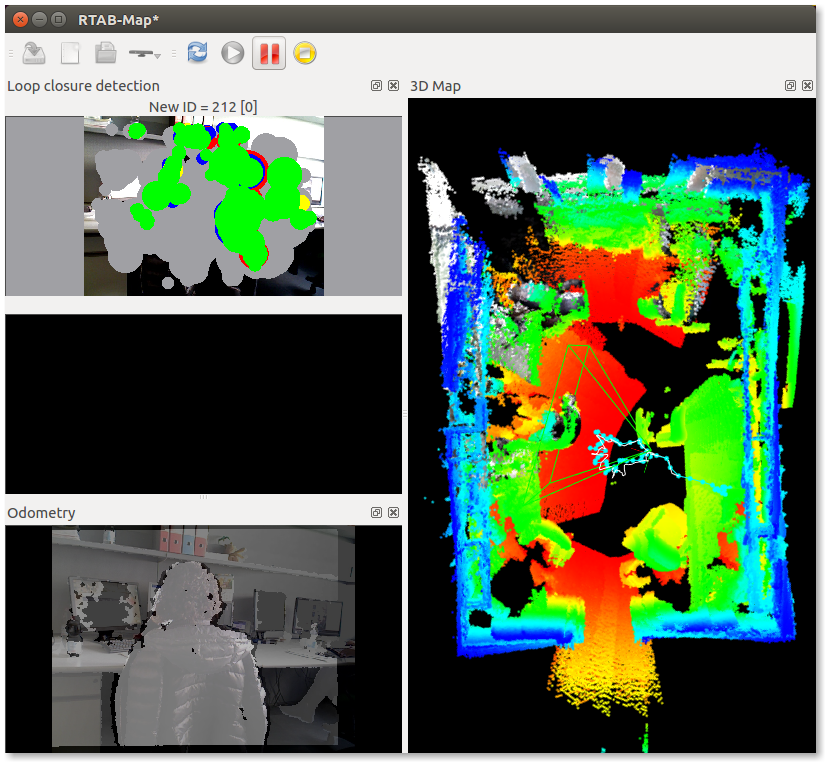

Looking at the database, it is mostly the odometry drift that is causing the most errors. Loop closure detection helps to reduce a little (as show below), but some movements/rotations seem to create more drift. Also, set "RGBD/ProximityPathMaxNeighbors" to 0 to avoid using the laser scan for proximity detections (which not very good with low FOV sensors). Before Loop:  After Loop:  So the main focus would be to decrease odometry drift. cheers, Mathieu |

|

This post was updated on .

Thank you for the rapid answer. I'm going to focus on odometry. I added the imu just to have better performance on rooms with poor features. Do you think the problem is due to this fusing? or is this a poor features environment? I don't understand if it is a problem of robot_localization misconfiguration or a limit of rtabmap. Thanks again

edit: I don't have the parameter you say, maybe because am I using 0.11.8? |

|

Administrator

|

Hi,

In 0.11.8, don't subscribe to laser scans generated from the camera's depth (either /scan or /scan_cloud). I don't think it is a problem from rtabmap node, as it just accumulates odometry poses and try to find loop closures to decrease odometry drift. Are you using rgbd_odometry or another approach for visual odometry? What is the launch file fusing IMU +VO (robot_localization config)? cheers, Mathieu |

|

Actually I don't subscribe to it. I'm extending your rtabmap.launch but scan and scan_cloud are setted to false. I'm using rgbd_odometry and imu data as input for robot_localization. Here's my launch file.

https://www.dropbox.com/s/2ey4sw44mf70i6x/RDBest.launch?dl=0 |

|

Administrator

|

Hi,

Sorry, you can ignore "RGBD/ProximityPathMaxNeighbors". When I replayed the database, my Preferences->Source parameters were set (from a previous test...) so that a laser scan was generated from depth image. For your launch file, you will have to split rtabmap.launch to integrate correctly with robot_localization as we need TF /odom->/base_link from robot_localization and not rgbd_odometry (see publish_tf parameter), similar to this example launch file: sensor_fusion.launch:

<arg name="rgb_topic" default="/camera/rgb/image_rect_color" />

<arg name="depth_topic" default="/camera/depth_registered/image_raw" />

<arg name="camera_info_topic" default="/camera/rgb/camera_info" />

<group ns="rtabmap">

<node pkg="rtabmap_ros" type="rgbd_odometry" name="rgbd_odometry" output="screen">

<remap from="rgb/image" to="$(arg rgb_topic)"/>

<remap from="depth/image" to="$(arg depth_topic)"/>

<remap from="rgb/camera_info" to="$(arg camera_info_topic)"/>

<remap from="odom" to="/vo"/>

<param name="publish_tf" type="string" value="false"/>

<param name="frame_id" type="string" value="$(arg frame_id)"/>

</node>

<node name="rtabmap" pkg="rtabmap_ros" type="rtabmap" output="screen" args="--delete_db_on_start">

<param name="frame_id" type="string" value="$(arg frame_id)"/>

<remap from="rgb/image" to="$(arg rgb_topic)"/>

<remap from="depth/image" to="$(arg depth_topic)"/>

<remap from="rgb/camera_info" to="$(arg camera_info_topic)"/>

<remap from="odom" to="/odometry/filtered"/>

</node>

</group>

<node pkg="robot_localization" type="ekf_localization_node" name="ekf_localization" clear_params="true" output="screen">

...

<param name="publish_tf" value="true"/>

<param name="odom0" value="/vo"/>

...

</node>

For robot_localization's parameters for each sensor, I would refer you to ROS Answers (questions about robot_localization). cheers, Mathieu |

|

Sorry for the delay. My launch is splitted as that, if I understand correctly what you said.

I changed the tf publisher to robot localization and I see a little improvement. There is still a big error in some areas.. Is there anything else I could try? This is my last db. https://www.dropbox.com/s/aqrefceay8zonf0/rtabmap_hope_16maggio.db?dl=0 |

|

Administrator

|

Hi,

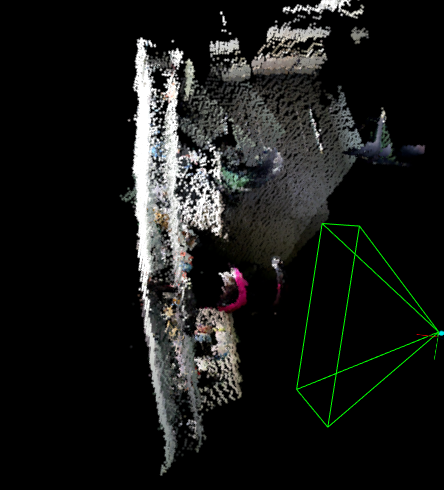

Odometry is indeed drifting quite a lot:  Did you try using rgbd_odometry alone (without robot_localization) while avoiding areas where visual odometry could get lost? You can try also with PnP transformation estimation "Vis/EstimationType=1" as there are some distortions in depth images, see the dome effect on the left wall:  You may try depth calibration tutorial to fix these distortions: https://github.com/introlab/rtabmap/wiki/Depth-Calibration cheers, Mathieu |

«

Return to Official RTAB-Map Forum

|

1 view|%1 views

| Free forum by Nabble | Edit this page |