Run standaone rtabmap with two RGBD cameras

|

This post was updated on .

I want to run standalone Linux app with two RGBD cameras. I followed this post - link.

First I confirmed that I can create valid SensorData for a single camera and post event to Rtabmap, and I get valid 3D pointlcoud and poses when the camera moves. All good. Then to start with two cameras code, I stitched two same rgb images horizontally and two same depth images horizontally. Captured from same depth camera (realsense), just duplicated. In the camera model localTransform is rtabmap::CameraModel::opticalRotation() for both models. so this is as if the two cameras are located in exactly same spot. After running this, I see immediately from the log that the tracking is lost. [2024-10-23 13:11:05.207192] [info] [framework/realsensegrabber.cpp] [144] [] [] Camera 153122070967 sent to SLAM. [ INFO] (2024-10-23 13:11:05.242) OdometryF2M.cpp:1423::computeTransform() Odom update time = 0.034782s lost=false features=947 inliers=0/0 variance:lin=9999.000000, ang=9999.000000 local_map=947 local_scan_map=0 [2024-10-23 13:11:05.272345] [info] [framework/realsensegrabber.cpp] [144] [] [] Camera 153122070967 sent to SLAM. [ INFO] (2024-10-23 13:11:05.304) RegistrationVis.cpp:1677::computeTransformationImpl() Not enough inliers 0/20 (matches=11) between -1 and 2 [ INFO] (2024-10-23 13:11:05.304) OdometryF2M.cpp:1423::computeTransform() Odom update time = 0.032439s lost=true features=897 inliers=0/11 variance:lin=1.000000, ang=1.000000 local_map=947 local_scan_map=0 [2024-10-23 13:11:05.435172] [info] [framework/realsensegrabber.cpp] [144] [] [] Camera 153122070967 sent to SLAM. [ INFO] (2024-10-23 13:11:05.464) RegistrationVis.cpp:1677::computeTransformationImpl() Not enough inliers 0/20 (matches=12) between -1 and 3 [ INFO] (2024-10-23 13:11:05.465) OdometryF2M.cpp:1423::computeTransform() Odom update time = 0.031977s lost=true features=906 inliers=0/12 variance:lin=1.000000, ang=1.000000 local_map=947 local_scan_map=0 Also I did not modify anything in how the odometry, rtabmap thread created/run (do I need to?) Whats the correct way of running two cameras? Is it possible to get it running with toy example of duplicating same data? I'm looking for the simplest way to run the two cameras SLAM code and build up from there (to real 2nd camera data and real transform). Will appreciate any advice Thanks, Jenna |

|

Administrator

|

Using two times exactly the same image may confuse odometry as it won't know (without any doubt) the correspondence between features of consecutive frames (they can either correspond to first or second camera). The feature matching would fail.

To help feature matching, you may try to rotate 90 deg a camera, by setting localTransform: "rtabmap::Transform(0,0,0,0,0,M_PI/2) * rtabmap::CameraModel::opticalRotation()". You can also decrease Vis/MinInliers parameter to 6. |

|

Thank you for the reply.

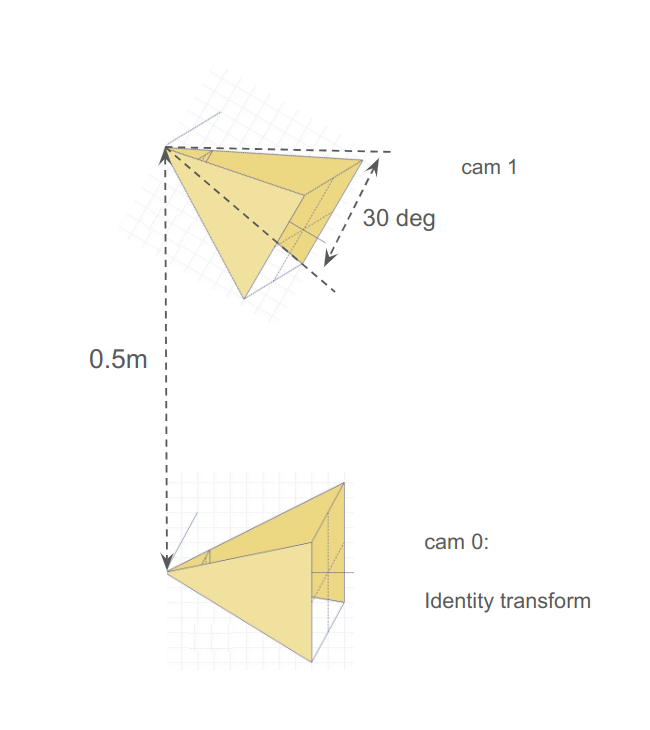

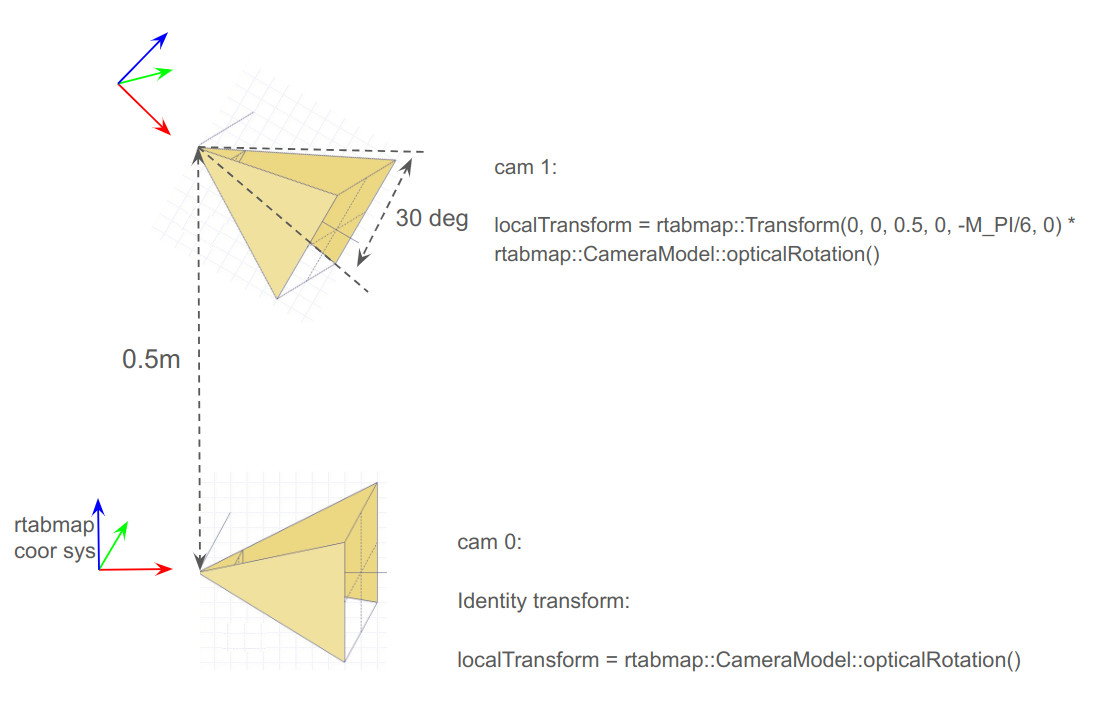

Now I think it will be better for be to go to real data instead of toy example that causes more problems because of how I setup it. Could you please advise, if my thinking is correct of how the transforms should be defined given this setup?   |

|

Administrator

|

I think the pitch should be positive, otherwise it looks good.

|

|

Thank you for checking!

I will keep this thread open for now, while implementing the change and trying out two cameras |

|

Thanks a lot for help! The two cameras setup work! Pitch was positive, you were right!

I have two follow-up questions: 1. If the cameras field of views have overlap, is there alignment/registration of both point clouds? My camera calibration was pretty rough (measured angle and distance by hand) and I was surprised that I dont see "stitching" artifacts on the 3D points 2. Previously before doing two cameras setup (and constructing SensorData myself) I really benefited from IMU filtering in camera thread. Now with two cameras, is the IMU filtering still supported? Can I just call SensorData::setIMU() and pass lower camera IMU data for example? |

|

Administrator

|

No, there is no correction between the two cameras. We assume extrinsics are accurate. You can set a localTransform on the IMU data. That would be the transform from the base frame to IMU. I think you've set one camera frame as the base frame, the imu frame could be relative to that frame. |

«

Return to Official RTAB-Map Forum

|

1 view|%1 views

| Free forum by Nabble | Edit this page |