Setup requirements


|

Hi Mathieu

I'm brand new to the 3D mapping world, so please excuse my newbie questions. My discipline is generating as-built floor plans of large-scale buildings, and I'm currently using the Leica 3D Disto, which makes gathering data on site very time-consuming. Smaller areas with details I still do by hand, which is also time-consuming and sometimes full of errors. I've been watching a lot of videos of RTAB-Map and the workflows people use, and I really think it can assist me a lot in the field! I've been playing around with a Kinect and Faro's software to start understanding the process, but the damn Kinect and its tethering are a nuisance! Would a sensor like the Intel RealSense SR200 or Asus Xtion 2 be compatible with RTAB-Map, and would a dummy like me be able to use your system combined with the sensor? Which of the two sensors would you recommend? Would appreciate your assistance on this... |

|

Hi!

First of all, are you sure you're not talking about the R200 or SR300? In addition, I looked here to check which ROS packages support the cameras (because RTAB-Map just wants the topics associated with RGB-D cameras, as you can see on the package wiki page, in the subscribed topics section). Looking at the wiki pages of the openni_camera, realsense_camera and openni2_launch packages, I don't see any SR200 or Xtion 2 being supported. Still, if you manage to get the right ROS topics out of your sensors, I don't see why RTAB-Map wouldn't work with them (although I can't say I'm totally sure). Hope this was helpful for you and your question! |

|

Thanks Blupon!

Yes, apologies, it's the R200 camera. I'll try to figure out what all the information you hyperlinked means. I'm not a developer, so all of it is like reading Latin to me! Urgh! My brother is a developer, so he might be able to help me understand. I apologize to everyone reading this if I'm in the wrong place asking dumb questions because I'm not proficient in development. I think one should really call me the end user! I would love to learn and understand how these systems work. |

|

Administrator

|

Hi,

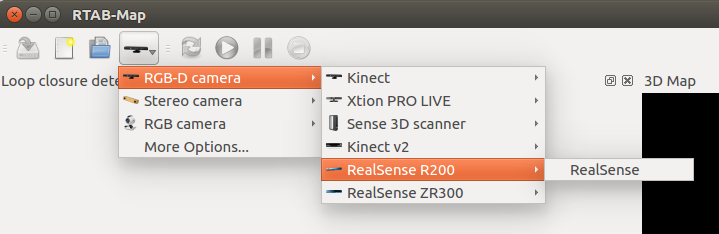

For an end user, ROS may be intimidating. You could try the standalone version of RTAB-Map (there are binaries here for Windows, basic tutorial here). Some common drivers are built-in (RealSense and Asus Xtion are supported):  Between RealSense and Xtion for indoor scanning, I would go for Xtion. cheers, Mathieu |

|

Thanks Mathieu

Despite my limited abilities, I got the program running on my laptop. The OpenCL.dll was a problem, but I managed to get it up and running. The program's interface looks good! Now I need to start testing with my Kinect and see what is what. To follow up on my requirements question: I can't seem to purchase the Asus Xtion Pro Live camera anymore. Discontinued! Hence my question about Asus Xtion 2 compatibility. Can one manage to link the drivers of the Xtion 2 to RTAB-Map? Blupon mentioned something about this, but he's not sure. Seeing that I'm in the purchase phase for a camera, which of the already compatible devices would be best for creating the most accurate point clouds? I've come across the Basler ToF camera, which is more industrial grade than the rest, but it appears that their drivers don't allow for tracking, if I understand them correctly. I was researching their camera because of its high specifications, and I thought that maybe I could get it running with available third-party software. |

|

How would the ZED stereo camera compare as well??

I really like the concept, and also being able to work outdoors, as I do survey exteriors of buildings and properties. I can't find any specs on accuracy, though. Does anyone know how accurate it is at, let's say, 5 to 6 m? |

|


Administrator

|

In reply to this post by Tertius

Hi,

The Xtion 2 would be compatible using the same driver as the Xtion 1 (OpenNI2), though I don't have this camera to test it myself. The ZED camera is a stereo camera, so accuracy will be good only if the images have a lot of texture. Indoors, you will not get good 3D reconstruction results, in particular on texture-less walls and objects. The Basler ToF camera is interesting, but it cannot be used as is with the standalone version because it would require a specific driver. An RGB camera is also required to work with RTAB-Map. cheers, Mathieu |

|

Thanks Mathieu!

Well, I suppose this means that I can only benefit if I have both, let's say the Xtion for internal work and then switch to the ZED for external surveying/mapping?? Has anyone maybe tried the Xtion externally and had success? I understand the structured-light principle and that these sensors struggle in sunlight, but what if one does the mapping around sunset or sunrise? Won't this improve things? Also, another thing: would a Windows Surface Pro 3 be sufficient for mapping at large scale? I mean specifically on the RAM side of things and, of course, storage capacity. Regards, Tertius Kruger |

|

Administrator

|

Hi Tertius,

Yes, if you map outside without direct sunlight, the Xtion could work as it does indoors. I don't think there is any problem with the Windows Surface Pro 3. cheers, Mathieu |

|

Thanks once again Mathieu! You're helping a lot more than the mainstream suppliers and the supposed tech support people!

I managed to source a second-hand Xtion Pro Live camera, which will be delivered next week, so I'll start running some tests with it then and report back. On another note, and please excuse my incompetence again: can one integrate an IMU with RTAB-Map and use it for odometry instead of the tracking points used by the current method? Basically, what I'm after is to be able to increase the walking/moving speed of the scanner. In my first tests with the Kinect, Faro's software and also Skanect, the frustration was losing the tracking numerous times. What do you think, possible?? |

|

Administrator

|

Hi Tertius,

RTAB-Map standalone doesn't support IMU. Note that the speed at which we can scan depends on 1) the frame rate at which the computer can process the frames, 2) the actual maximum frame rate of the camera, and 3) the maximum speed before images start to show motion blur. See also this Lost Odometry section about other events that can cause tracking loss. Google Tango and Apple ARKit use visual-inertial odometry approaches, which can be quite accurate when not moving too fast. With fast movements, the system loses the ability to correctly track the visual features and then relies only on the IMU for motion estimation, which is bad as the system will drift a lot more. cheers, Mathieu |
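To get a rough feel for how the three limits listed above interact, here is a small back-of-the-envelope sketch in Python. It is not part of RTAB-Map: the function names, the pinhole approximation (sideways translation only, rotation ignored) and all example numbers (30 Hz camera, 10 Hz processing, 525 px focal length, 1/60 s exposure) are assumptions chosen for illustration.

```python
def effective_frame_rate(camera_fps, processing_fps):
    """Frames are consumed at the slower of the camera and the computer."""
    return min(camera_fps, processing_fps)


def travel_per_frame(walking_speed_m_s, camera_fps, processing_fps):
    """Distance the camera moves between two processed frames, in meters."""
    return walking_speed_m_s / effective_frame_rate(camera_fps, processing_fps)


def blur_limited_speed(max_blur_px, exposure_s, focal_px, scene_depth_m):
    """Largest sideways speed (m/s) that keeps motion blur under max_blur_px.

    Pinhole approximation: a point at depth Z seen by a camera translating
    sideways at speed v moves at focal_px * v / Z pixels per second, so the
    blur over one exposure is focal_px * v * exposure_s / Z pixels.
    """
    return max_blur_px * scene_depth_m / (focal_px * exposure_s)


if __name__ == "__main__":
    # Hypothetical setup: 30 Hz camera, laptop that only processes 10 Hz.
    step = travel_per_frame(0.5, camera_fps=30, processing_fps=10)
    print(f"Camera moves {step:.3f} m between processed frames")

    # Hypothetical optics: 525 px focal length, 1/60 s exposure, wall at 2 m.
    v_max = blur_limited_speed(1.0, 1 / 60, 525, 2.0)
    print(f"Blur stays under 1 px below roughly {v_max:.2f} m/s")
```

The larger the distance travelled between processed frames, the less the consecutive images overlap, which is one reason a slow computer can lose tracking even when the camera itself is fast enough.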

|

Thanks Mathieu.

I quickly started playing around with the system and the Asus Xtion today. Wow! I love how fast it can scan compared to Faro Scene Capture! Just a few questions: How do I align the floor level with my X and Y axes? I found that when I export the cloud and import it into Pointcab, my top view is skewed, meaning my Z axis is not perpendicular to my floor level. I also found that exporting the cloud to .ply takes a very long time when I apply the smoothing function under the settings, and I assume that my laptop's 4 GB of RAM may be the problem? Does loop closure play a significant role in the accuracy of the generated map? I'm thinking in terms of large areas with many rooms, for instance: if I start in one room and end the scan in another room in an opposite part of the building, will this affect the accuracy? Thanks! |

|

Administrator

|

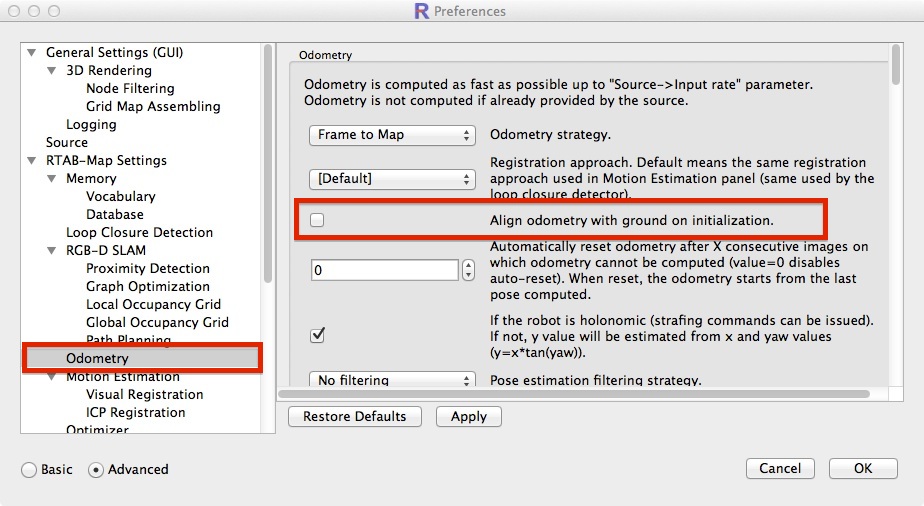

Hi Tertius,

To align the camera at start with the ground, activate this setting ("Odom/AlignWithGround"):  The camera should see the ground on start with ~45 degrees angle. Without this setting, the origin of the map is dependent on the camera orientation at start. Moving Least Square can be indeed very long to do (the approach is roughly explained here). Increasing the voxel size (e.g., 0.01 m or more) can reduce smoothing time. Another approach to smooth the cloud is to do meshing with Poisson surface reconstruction, though you may lose cloud density. Loop closures are very important to correct the accumulated odometry drift. See the video on this page, you will see how I regularly come back to previous locations to find loop closures and correct the map. So, if you scan on a straight line and never come back to start (no loop closures), the map will bend (the severity of bending will depend on the odometry quality). cheers, Mathieu |
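To illustrate why a larger voxel size cuts down the work for smoothing and export, here is a minimal voxel-grid downsampling sketch in Python with NumPy. It is a generic filter written for this explanation, not RTAB-Map's own export code; the function name and the example values are assumptions.

```python
import numpy as np


def voxel_downsample(points, voxel_size):
    """Replace all points that fall in the same cubic voxel by their centroid.

    points: (N, 3) array of XYZ coordinates in meters.
    voxel_size: edge length of the voxels in meters (e.g. 0.01 for 1 cm).
    """
    points = np.asarray(points, dtype=float)
    # Integer voxel index of every point along each axis.
    keys = np.floor(points / voxel_size).astype(np.int64)
    # Group identical voxel indices; `inverse` maps each point to its voxel.
    _, inverse, counts = np.unique(
        keys, axis=0, return_inverse=True, return_counts=True
    )
    inverse = inverse.ravel()
    # Sum the points of each voxel, then divide by the count for the centroid.
    sums = np.zeros((counts.size, 3))
    np.add.at(sums, inverse, points)
    return sums / counts[:, None]


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    cloud = rng.uniform(0.0, 1.0, size=(200_000, 3))  # synthetic 1 m cube
    for size in (0.01, 0.02, 0.05):
        reduced = voxel_downsample(cloud, size)
        print(f"voxel {size:.2f} m: {len(cloud)} -> {len(reduced)} points")
```

Every later step (smoothing, meshing, writing the .ply file) then works on the reduced cloud, at the cost of detail finer than the voxel size.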

|

Mathieu

Okay, so I've got the ground detection setting going. It worked, but only to some extent. It aligns only one axis and not the other (the horizontal one at 90° to it). What I mean is that the Z axis is not perpendicular to the floor level. Can it be set so that both X and Y are leveled to the ground from the initial startup recording? Or must I maybe add a mini bubble level to the scanner to keep it level in my hand? Also, on this, if I'm scanning a space where the floor is at an angle but the walls are theoretically parallel to the Z axis, can the software be set to align a wall to the Z axis? But then again, come to think of it, there is no additional axis on that plane to align again at 90° on the opposite face so that all walls end up parallel to the Z axis. Darn it! Guess a little bubble level may be the only solution?? Can't one align the point cloud in Meshlab, maybe, like this? I haven't had time to learn Meshlab sufficiently yet, so maybe one can??

As for processing and even scanning large areas, I do believe my laptop's specifications are too low. I took the system to site today to test it and maybe have it assist me in my parking garage survey. It struggled tremendously to keep up! It started off well, but a quarter of the way through, the map building and tracking screens were far behind my actual position. This, I think, caused numerous tracking losses without me noticing, because when it finally caught up after I came around to my loop closure, the final point cloud had three layers of the same images over each other, which was weird! As for the processing, the laptop eventually decided to conk out while still busy trying to process and export the .ply file. It was just too much for the old-timer! I'll get some RAM and try to turbocharge the old man and see if I can't improve on the process. It's only an i5 with 4 GB of RAM. Hopefully I can have it juiced up to 16 GB.

I catch what you mean about loop closure, yes. Interesting though: in my head I had the process as quite accurate with no drift, because of the multiple overlapping tracking points while moving forward. I'll thus make sure to plan my path before initializing the start point. Guess I need some more practice with the process anyway, and I should also try to better understand the various parameter settings for different scenarios and details. |

|

Administrator

|

Hi,

Can you give a screenshot of the wrong alignment with the ground? If wrong transformations happen, there could be superposed point clouds for the same objects. Odometry drift without appropriate loop closures could create this effect too. You can add a screenshot of this if you want. If you can also share the database (Dropbox/Google Drive link), this can help to see the problem better. When you export the PLY, set the voxel size to 1 or 2 cm to greatly reduce the RAM needed. cheers, Mathieu |

|

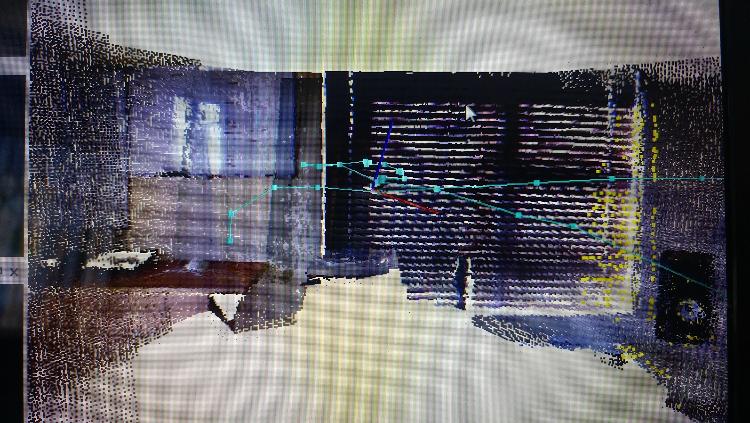

Hi Mathieu

I tried making a screenshot of the interface but it removed the 3d Map when I created the screen shot. So I took a normal photo. Note the Horizontal blinds in the back ground. The green Axis was aligned with the ground but the red was not thus leaving the z axis not perpendicular to the ground.  I did some more scanning here in the office and home today and I struggled a lot! I scanned a few areas and rooms in my home and for some reason the different areas was completely out of alignment with each other, both horizontally and vertically. I had no lost odometry with the last scan, so what the heck am I doing wrong?? The Dropbox/Google Drive link, where can I find this, I want to drop you the dataset of this particular scan. You reckon by upping my Ram on my Laptop will improve on things?? |

|

Hi Mathieu

Did some more scans on site today to try again. I had a fairly successful external scan of a small area, as it was a cloudy day. The internal scan of the building was, however, not too good; I think I'm experiencing odometry drift. I came back to my starting point and it picked up the loop closure/match ID, but the result was not aligned with the starting frame. Do you think it could be calibration or something?? I would appreciate it if I could share my scans/dataset with you, so that you can have a look at some point and see if your experienced eyes can spot what the heck I'm doing wrong... So do you have a cloud link I can upload it to? Or should I create one on my side? Thanks! |

|

Hi Mathieu, you still there?? I'm really stuck on this and really want this to work!

|

|

Administrator

|

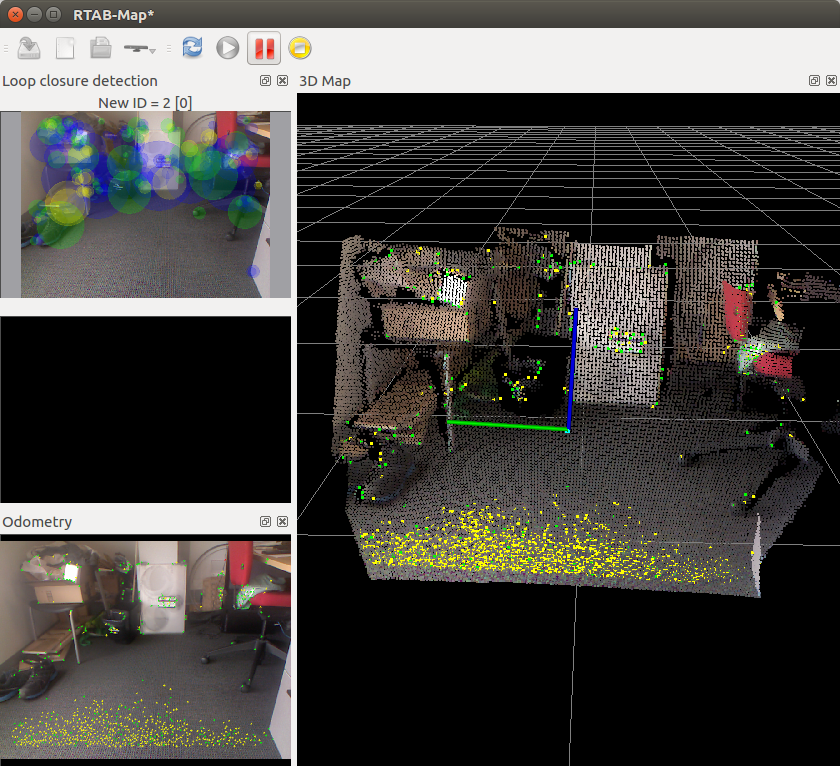

Hi,

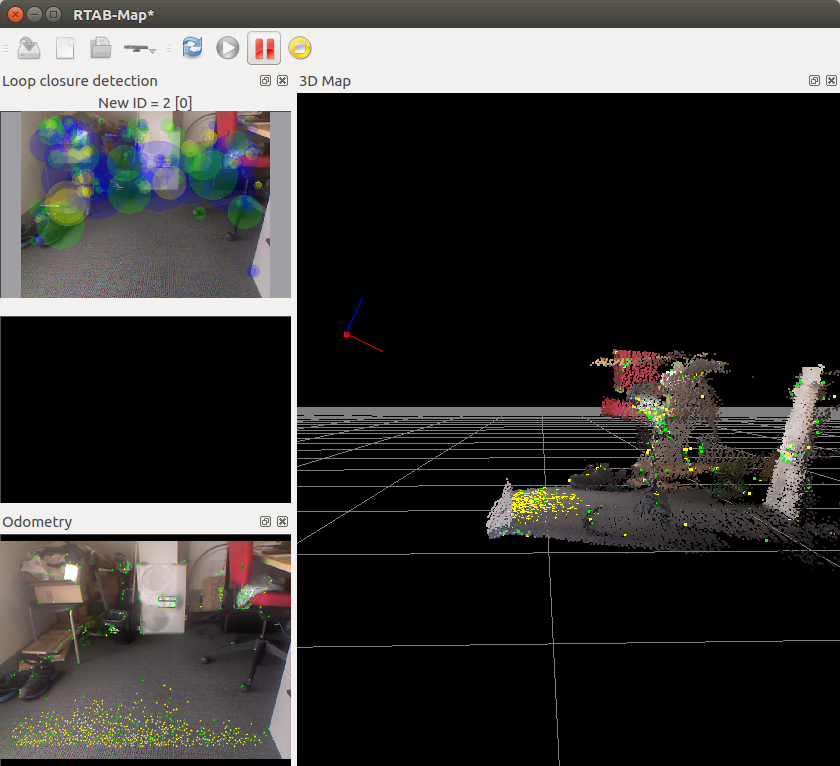

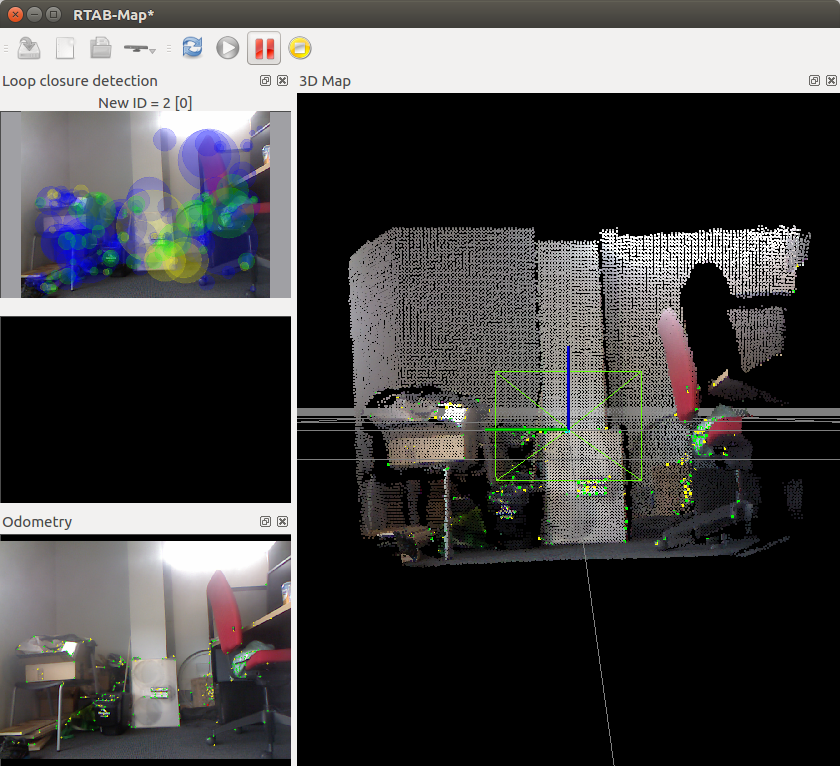

The axes shown in the 3D map view are those of the camera, not of the map, so it is normal that they are not aligned with the map when the camera rotates. To get the map correctly aligned with the ground for the first frame, make sure the camera is seeing the ground like in this screenshot:

View from the side (the ground cloud is exactly on the xy plane of the map):

After mapping a little:

Note that with odometry drift, the ground/walls may not be aligned with gravity unless a loop closure is detected with the first frame (which is correctly aligned with the ground). If you cannot start on even ground, uncheck the "Align with ground" odometry option and start with the camera looking straight ahead. Example:

Note that it can be difficult to start exactly perpendicular to the ground; there is a little roll angle here. The height of the camera from the ground is also 0, as we don't detect the ground. To share a database, people upload it to their own Dropbox/Google Drive and share the link here. cheers, Mathieu |
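For a cloud that has already been exported and still looks tilted, one possible workaround is to level it afterwards. The sketch below is a generic NumPy illustration, not something RTAB-Map or Meshlab is known to do in this way: it fits a plane to points that you have selected as ground, then rotates the whole cloud so that the plane normal becomes the Z axis. The function names are hypothetical, and the ground points are assumed to be picked by hand.

```python
import numpy as np


def fit_ground_normal(ground_points):
    """Least-squares plane fit; returns (unit normal pointing up, centroid)."""
    pts = np.asarray(ground_points, dtype=float)
    centroid = pts.mean(axis=0)
    # The right singular vector with the smallest singular value is the
    # direction in which the centered points vary least: the plane normal.
    _, _, vt = np.linalg.svd(pts - centroid, full_matrices=False)
    normal = vt[-1]
    if normal[2] < 0:  # make the normal point towards +Z
        normal = -normal
    return normal, centroid


def rotation_onto_z(normal):
    """Rotation matrix turning `normal` onto +Z (Rodrigues' formula).

    Assumes the normal is not pointing straight down, which
    fit_ground_normal guarantees by flipping it upwards.
    """
    a = normal / np.linalg.norm(normal)
    b = np.array([0.0, 0.0, 1.0])
    v = np.cross(a, b)
    c = float(np.dot(a, b))
    vx = np.array([[0.0, -v[2], v[1]],
                   [v[2], 0.0, -v[0]],
                   [-v[1], v[0], 0.0]])
    return np.eye(3) + vx + vx @ vx / (1.0 + c)


def level_cloud(points, ground_points):
    """Rotate and shift `points` so the fitted ground lies on the z = 0 plane."""
    normal, centroid = fit_ground_normal(ground_points)
    rotation = rotation_onto_z(normal)
    return (np.asarray(points, dtype=float) - centroid) @ rotation.T
```

This only fixes the tilt (roll and pitch) of the whole cloud. It cannot repair bending caused by odometry drift inside the map, which still needs loop closures, and it does not choose a heading around the Z axis.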
