The calibration problem.

|

Hello We met two problem.

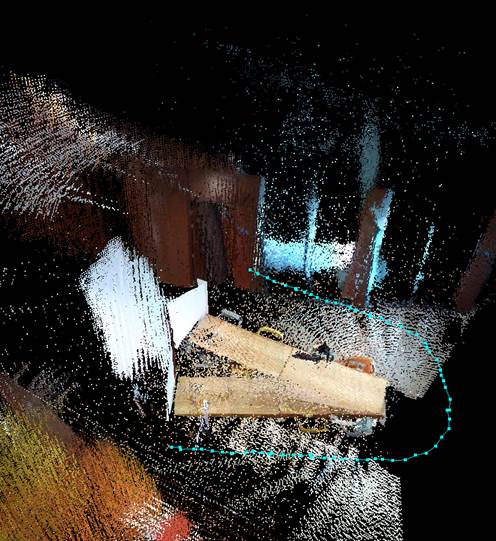

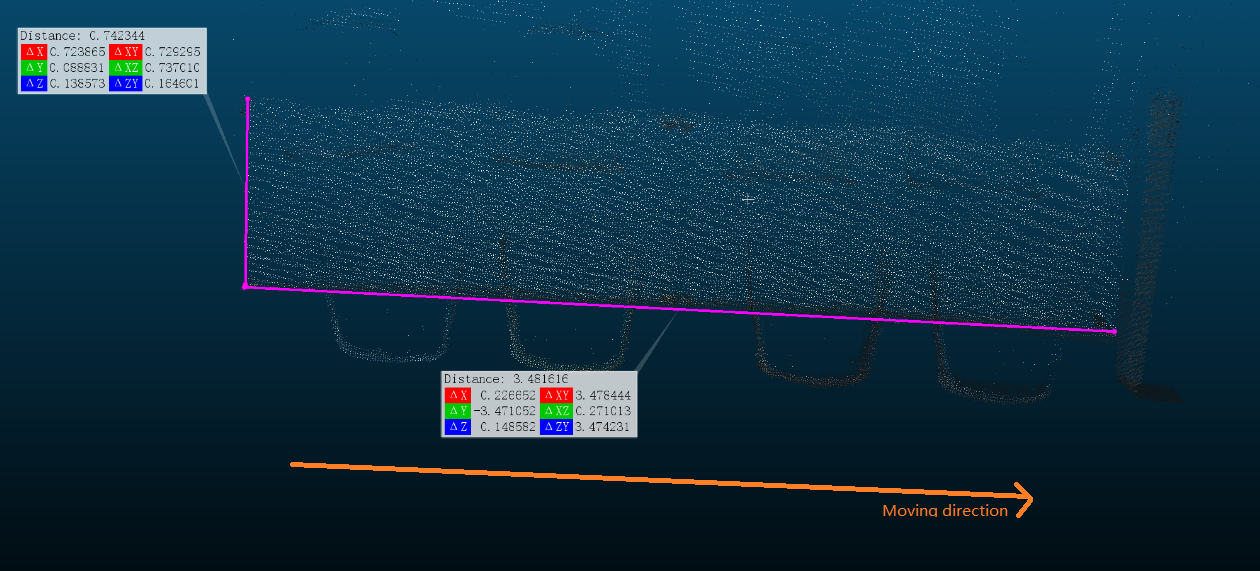

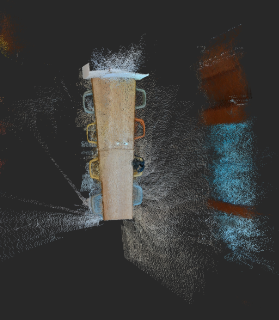

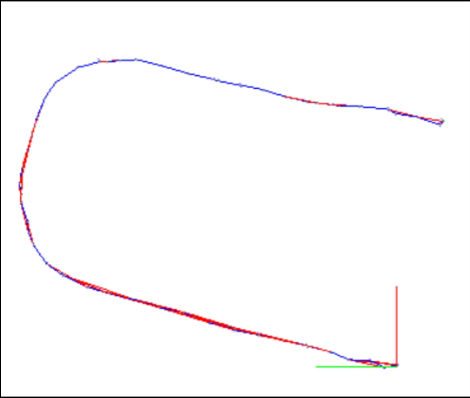

We use an Orbbec Deeps camera to scan the cloud points. First Problem I always found that the angle of POS rotation is always less than real value. The clouds can’t be merged corrected. Here is the an example. This picture shows that result with Orbbec Camera.  The same scene in tango like that.  Second Problem After splicing, the width is smaller than the actual value. I move horizontally camera. Collect the clouds of the table. And measure the size of cloud.  The result of distance is 3.481616 and 0.742344 But the real value is 4.02 and 0.76 So I think it may a calibration problem? Could you help us to found which parameter should I edit to fix this problem? Thanks a lot. |

|

Administrator

|

Hi,

RTAB-Map's visual odometry drifts more than Tango's visual odometry. This may be caused by bad depth/RGB image synchronization and/or bad depth registration. Can you share the resulting database with orbbec? For the scale problem, it is related to camera calibration (in particular fx anf fy parameters). You may recalibrate the RGB camera to compare de K matrix. You may also evaluate if the depth values make sense too. cheers, Mathieu |

|

Thank you for your reply,

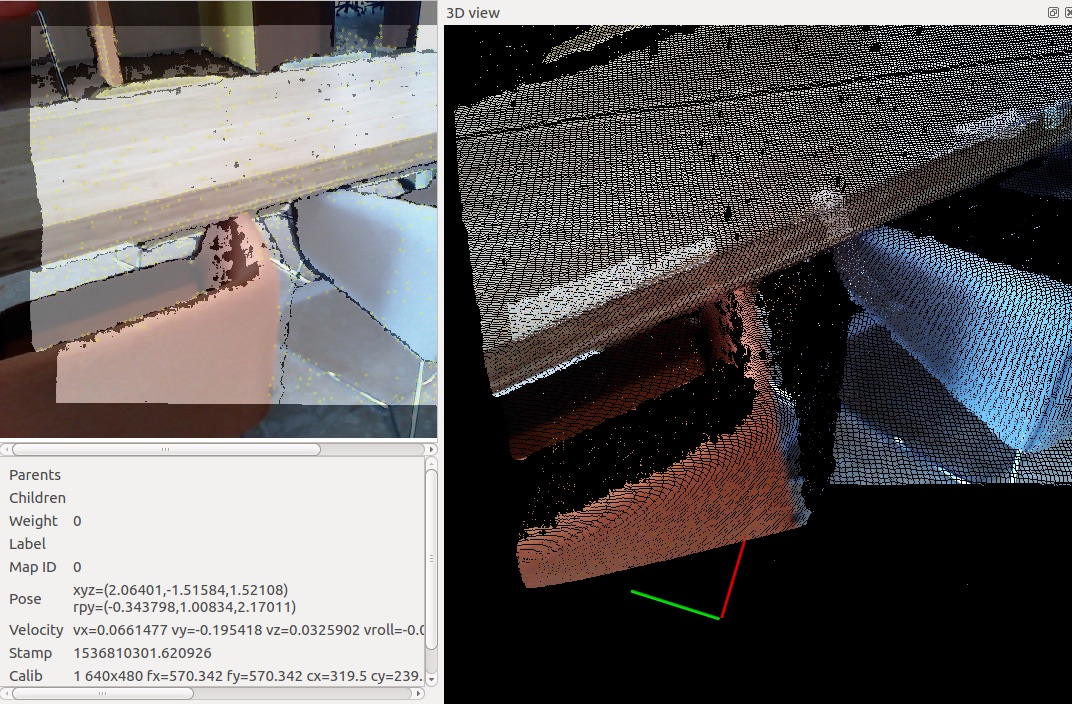

From db view the RGB/depth image synchronization looks well  And I share the DB in the path https://drive.google.com/open?id=1eCtaHJRAxDizrHrfkfM7kYegHVukFJ0Y And from my test . The intel realscense Camera has also this problem. Though the result much better than obbrec,the visual odometry drifts also more than Tango's .  Could you give some suggestion? Thanks a lot. |

|

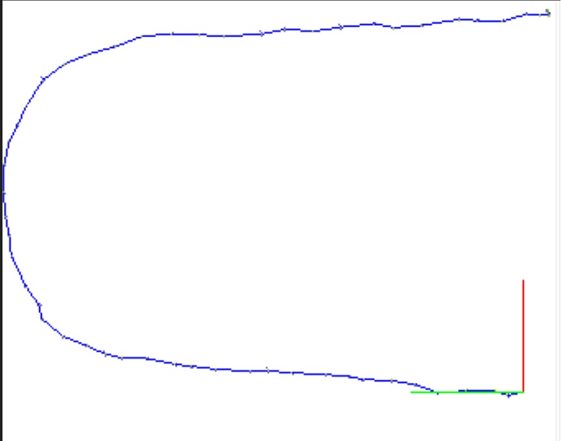

By the way

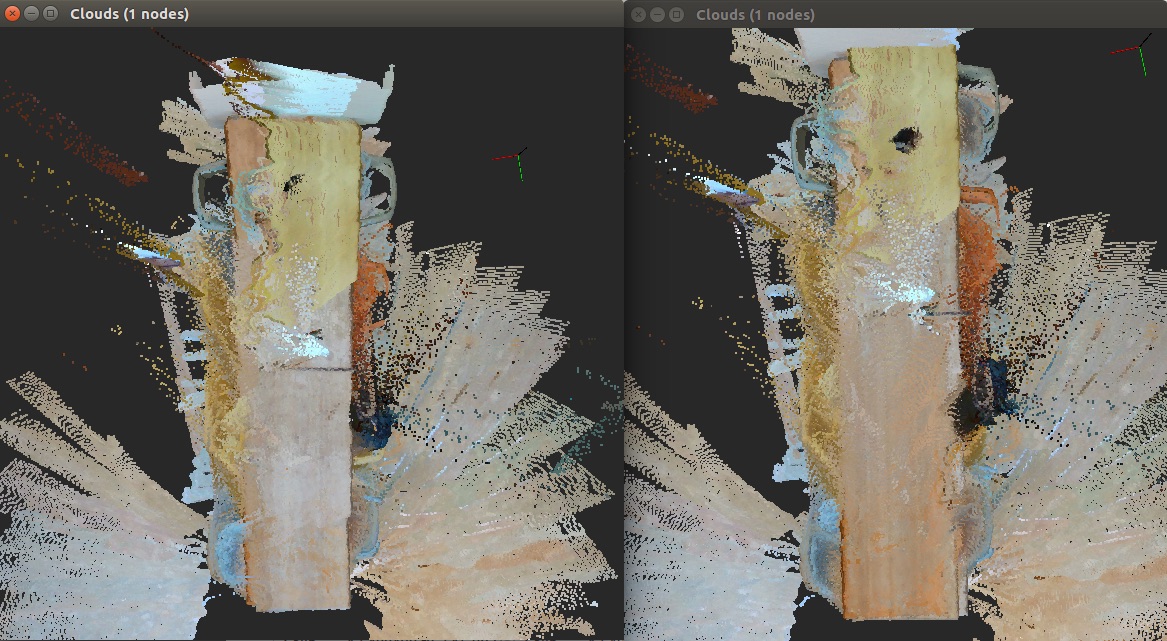

This database is base on the realsense D435 . it also has a little drift.  The database path: https://drive.google.com/open?id=1eCtaHJRAxDizrHrfkfM7kYegHVukFJ0Y But after use "detect more loop closures" or "refine all loop closures links" the result is looks well.  The database after edit path : https://drive.google.com/open?id=11F2BQ4rf2Ijl_xUdAVWi_Fq87FOGOUsu From our expection, we won't like any manual process after scan. So how can I add more loop closures in the process real time ? |

|

Administrator

|

Hi,

For the orbbec database, there are some registration errors, the depth image is not exactly matching the color image (look at the red chair):  For the realsense database, adding more local (between consecutive nodes) loop closures would not add really more accuracy than the odometry provide. Here is a comparison without and with loop closures respectively:    Having a real loop closure between the beginning and the end would help more to correct the odometry drift. For this kind of path (no real loop closure), I would try to tune OdomF2M parameters: $ rtabmap --params | grep OdomF2M Param: OdomF2M/BundleAdjustment = "1" [Local bundle adjustment: 0=disabled, 1=g2o, 2=cvsba.] Param: OdomF2M/BundleAdjustmentMaxFrames = "10" [Maximum frames used for bundle Param: OdomF2M/MaxSize = "2000" [[Visual] Local map size: If > 0 (example 5000), the odometry will maintain a local map of X maximum words.] As odometry F2M does local bunble adjustment between local frames, it should give better optimized transforms locally. If you still want to add loop closures on consecutive nodes in real-time, set RGBD/ProximityDetectionByTime to true. $ rtabmap --params | grep ProximityByTime Param: RGBD/ProximityByTime = "false" [Detection over all locations in STM.] There is indeed no ROS service to call "Detect more loop closures", though it would be possible to add the interface, I opened an issue about that. Finally, RTAB-Map on Google Tango uses the visual-inertial odometry approach from Google, which gives better odometry than RTAB-Map's odometry approach, explaining why you can get better results on Tango than with the cameras alone. cheers, Mathieu |

«

Return to Official RTAB-Map Forum

|

1 view|%1 views

| Free forum by Nabble | Edit this page |