Tips needed to improve on RTAB Mapping for legged Robots

Tips needed to improve on RTAB Mapping for legged Robots

|

This post was updated on .

Hi Matlabbe,

I have been spending the past few weeks reading up and experimenting on your RTAB implementation for ROS. Really appreciate the work that you have done in making such an amazing piece of technology open-sourced. I managed to come up with a rough map of an office environment, but I am still unsatisfied with the results. I have mounted a intel realsense d435i, along with a 2d lidar sensor on my legged robot. The RGB image and depth information, along with laserscan and odometry values are recorded into a ROSbag. I did not use the IMU within the Realsense for odometry, rather, my odometry came from another IMU within the robot. The odometry value is corrected by the RTABMAP node by setting `Reg/Strategy` to 1. My starter code is based off of this ROS tutorial. The frequency of topics the recording is limited due to recording large volume of information at once, but I thought it was sufficient given that the main RTAB node is running at 1Hz: Laserscan: ~15Hz Aligned depth cloud: ~12Hz RBG Image: ~4Hz Here is a video of the results: https://youtu.be/FC21MHkqcUs Here is a video of the 3d point cloud after post-processing the database: https://youtu.be/83EHctnZ39E Here is database of the results: RTAB DB FILE I have a few questions regarding how I can improve the map: 1) It seems like there are still lingering points along the walkway, as though the loop closure did not do a good job of stitching the depth cloud properly. I tried out almost all keypoint detectors and descriptors possible, but this is already the best possible configuration. Any idea on how I can improve this?  2) By the end of the simulation, there were only 5 loop closures detected, with 30 rejected. Is this normal? I attempted to use Vertigo using your tutorial on loop closure detection, but the results were worse off than this current configuration. It seems like the optimization resulted in an even more distorted map, even though there were significantly more loop closures and less rejected ones. Any insights on why this is so? 3) This question is more conceptual in nature. What is the purpose of the feeding in the depth cloud into the RTABmap node? Is it solely to construct a 3d map of the environment, stored as an octmap? Is this map used for Lidar ICP odometry correction, where the laserscan at each time step is compared against the Octmap to give a good estimation of the robot's odometry? Or is the odometry correction done by comparing the scan from previous timestep and the one at the current time step? Could I disable to construction of the 3D map if I wanted to? (p.s. I am asking this question because I hope to eventually swap out the 2d lidar for a 3d Lidar as an input for more accurate scan matching. Ideally, I would like to use the 3d Lidar's input to construct the 3d map, especially since a 3d lidar have longer range than a typical RGBD camera. Would it be as simple as specifying the ''depth_topic" to be the 3d point cloud's topic? Or would another tool like Google's cartographer be more suitable for this? For outdoor applications, I would like to have 3D point cloud for ICP odometry correction and for map construction, and also at the same time using RGB input for global loop closure). I welcome any other tips you might have! Here is my rtab mapping code. Thank you very much for your help! rtab_mapping.launch: <?xml version="1.0" encoding="UTF-8"?> <launch> <arg name="database_path" default="/home/$(env NAME)/.ros/rtabmap.db"/> <arg name="rgb_topic" default="/camera/color/image_raw"/> <arg name="depth_topic" default="/camera/aligned_depth_to_color/image_raw"/> <arg name="camera_info_topic" default="/camera/color/camera_info"/> <arg name="scan_topic" default="/scan_filtered"/> <group ns="rtabmap"> <!-- Use RGBD synchronization --> <!-- Here is a general example using a standalone nodelet, but it is recommended to attach this nodelet to nodelet manager of the camera to avoid topic serialization --> <node pkg="nodelet" type="nodelet" name="rgbd_sync" args="standalone rtabmap_ros/rgbd_sync" output="screen"> <remap from="rgb/image" to="$(arg rgb_topic)"/> <remap from="depth/image" to="$(arg depth_topic)"/> <remap from="rgb/camera_info" to="$(arg camera_info_topic)"/> <remap from="rgbd_image" to="rgbd_image"/> <!-- output --> <!-- Should be true for not synchronized camera topics (e.g., false for kinectv2, zed, realsense, true for xtion, kinect360)--> <param name="approx_sync" value="true"/> </node> <node name="rtabmap" pkg="rtabmap_ros" type="rtabmap" output="screen" args="--delete_db_on_start"> <!-- Sensor Input Parameters --> <param name="frame_id" type="string" value="camera_link"/> <param name="subscribe_depth" type="bool" value="false"/> <param name="subscribe_rgbd" type="bool" value="true"/> <param name="subscribe_scan" type="bool" value="true"/> <remap from="scan" to="$(arg scan_topic)"/> <remap from="rgbd_image" to="rgbd_image"/> <!-- If odom_frame_id is empty, use odom message instead of tf --> <param name="odom_frame_id" type="string" value=""/> <remap from="odom" to="/odom"/> <!-- Output Params --> <param name="queue_size" type="int" value="10"/> <remap from="grid_map" to="/map"/> <!-- Rate (Hz) at which new nodes are added to map --> <param name="Rtabmap/DetectionRate" type="string" value="1"/> <!-- RTAB-Map's parameters --> <param name="RGBD/NeighborLinkRefining" type="string" value="true"/> <!-- Correct odometry using the input lidar topic using ICP --> <param name="RGBD/ProximityBySpace" type="string" value="true"/> <!-- Find local loop closures based on the robot position in the map. --> <param name="RGBD/AngularUpdate" type="string" value="0.01"/> <!-- Map update Rate (Angular) --> <param name="RGBD/LinearUpdate" type="string" value="0.01"/> <!-- Map update Rate (Linear) --> <param name="RGBD/OptimizeFromGraphEnd" type="string" value="false"/> <!-- FALSE: on loop closures the graph will be optimized from the first pose in the map --> <param name="RGBD/ProximityPathMaxNeighbors" type="string" value="10"/> <!-- Use 10 if Reg/Strategy is using ICP--> <param name="Grid/FromDepth" type="string" value="false"/> <!-- FALSE: the occupancy grid is created from the laser scans --> <param name="Reg/Force3DoF" type="string" value="true"/> <!-- roll, pitch and z won't be estimated. --> <param name="Reg/Strategy" type="string" value="1"/> <!-- 0=Visual, 1=ICP, 2=Visual+ICP --> <!-- ICP Loop Closure Detection (NOT IN USED) --> <param name="Optimizer/Strategy" type="string" value="2"/> <!-- g2o=1, GTSAM=2 --> <param name="Optimizer/Robust" type="string" value="false"/> <param name="RGBD/OptimizeMaxError" type="string" value="6"/> <!-- 0=SURF 1=SIFT 2=ORB 3=FAST/FREAK 4=FAST/BRIEF 5=GFTT/FREAK 6=GFTT/BRIEF 7=BRISK 8=GFTT/ORB 9=KAZE --> <param name="Kp/DetectorStrategy" type="string" value="2"/> <!-- KEYPOINT DETECTOR --> <param name="Vis/FeatureType" type="string" value="2"/> <!-- KEYPOINT DESCRIPTOR --> <param name="Vis/MinInliers" type="string" value="15"/> <!-- Minimum visual inliers to accept loop closure --> <param name="Kp/MaxDepth" type="string" value="0"/> <!-- unlimited distance for the vocabulary --> <!-- ICP parameters --> <param name="Icp/VoxelSize" type="string" value="0.05"/> <!-- (DEFAULT: 0.0) --> <param name="Icp/MaxCorrespondenceDistance" type="string" value="0.1"/> <!-- (DEFAULT: 0.1) --> <param name="Icp/MaxTranslation" type="string" value="0.5"/> <!-- (DEFAULT: 0.2) --> <param name="Icp/MaxRotation" type="string" value="0.78"/> <!-- (DEFAULT: 0.78) --> <param name="Icp/Iterations" type="int" value="50"/> <!-- (DEFAULT: 30) --> <!-- Set to false to avoid saving data when robot is not moving --> <param name="Mem/NotLinkedNodesKept" type="string" value="false"/> </node> </group> </launch> <?xml version="1.0" encoding="UTF-8"?> <launch> <!-- Arguments for launch file with defaults provided --> <arg name="database_path" default="/home/$(env NAME)/.ros/rtabmap.db"/> <arg name="rgb_topic" default="/camera/color/image_raw"/> <arg name="depth_topic" default="/camera/aligned_depth_to_color/image_raw"/> <arg name="camera_info_topic" default="/camera/color/camera_info"/> <arg name="scan_topic" default="/scan_filtered"/> <!-- Mapping Node --> <group ns="rtabmap"> <!-- visualization with rtabmapviz --> <node pkg="rtabmap_ros" type="rtabmapviz" name="rtabmapviz" args="-d $(find rtabmap_ros)/launch/config/rgbd_gui.ini" output="screen"> <param name="subscribe_depth" type="bool" value="false"/> <param name="subscribe_rgbd" type="bool" value="true"/> <param name="subscribe_rgb" type="bool" value="false"/> <param name="subscribe_scan" type="bool" value="true"/> <param name="frame_id" type="string" value="base_link"/> <remap from="rgb/image" to="$(arg rgb_topic)"/> <remap from="depth/image" to="$(arg depth_topic)"/> <remap from="rgb/camera_info" to="$(arg camera_info_topic)"/> <remap from="scan" to="$(arg scan_topic)"/> <remap from="odom" to="/odom"/> </node> </group> </launch> |

|

Administrator

|

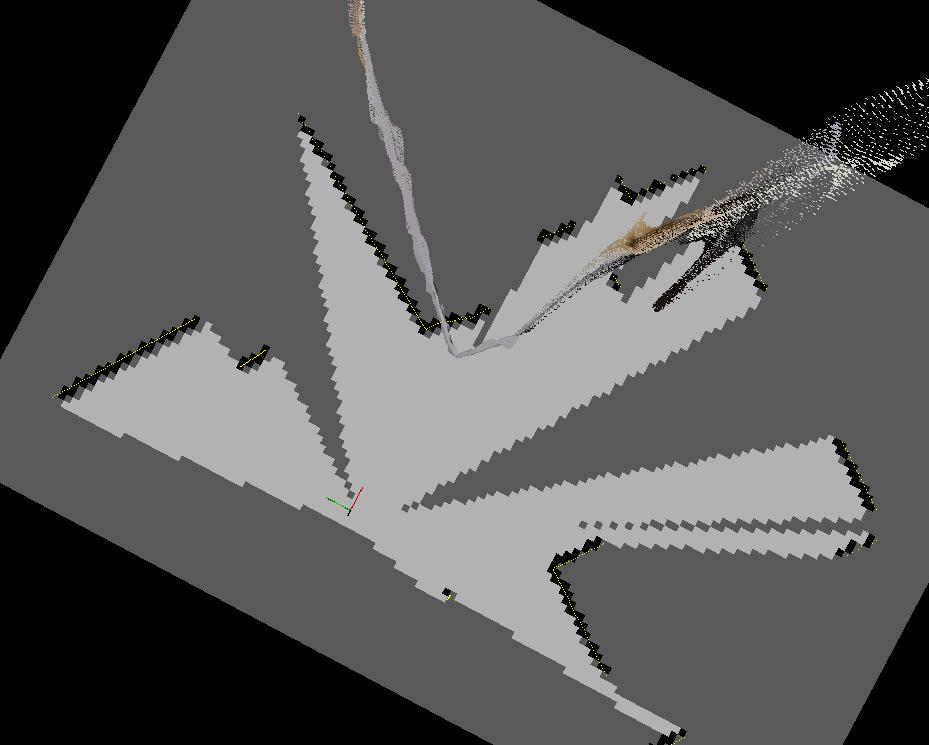

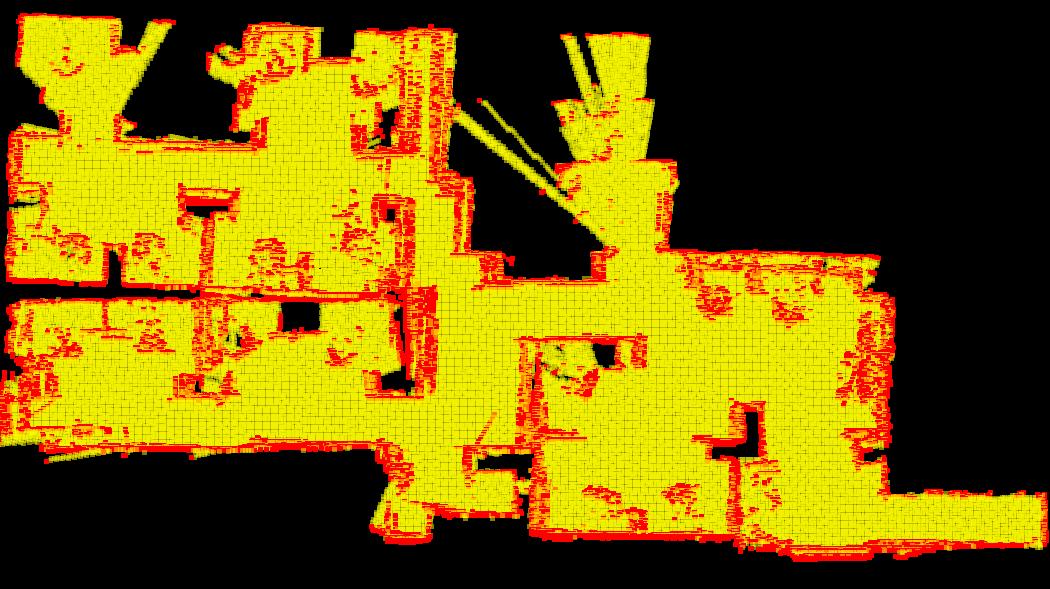

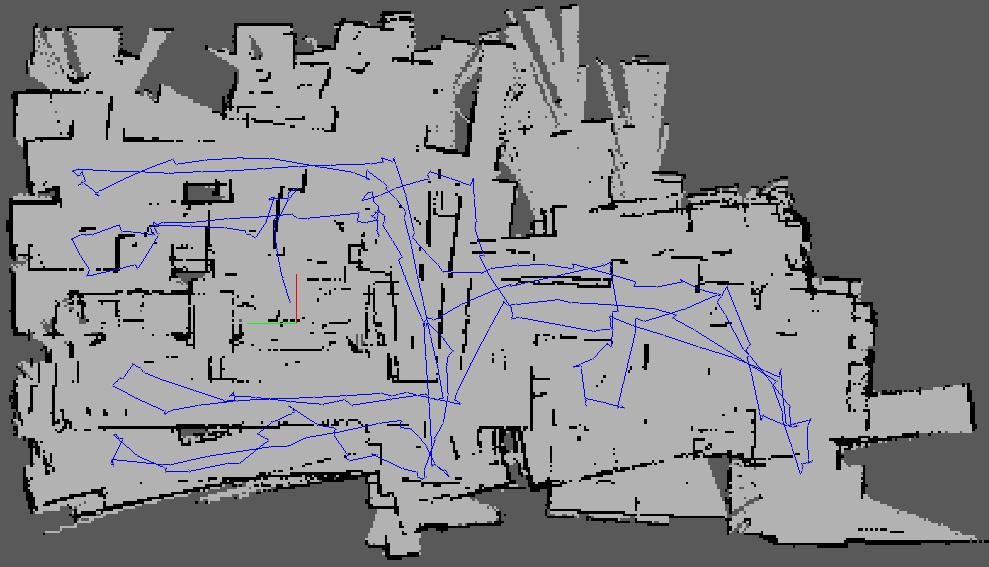

Hi,

thank you for sharing the data. 1) You coudl try setting "odom_sensor_sync" to true. The transform between lidar and camera is often wrong, not sure if it is a problem with the static transform between camera and lidar, or because of time synchronization. Setting odom_sensor_sync could help with time syncrhonization. With databaseViewer, we can can see that the RGB-D cloud doesn't match the scan:  2) With Reg/Strategy=1, you should look at the map created from scans, not the RGB-D point cloud:   The overall map, looking the scans, looks not too bad. However, some places where to robot rotated or moved faster, ICP was not able to correct the odometry (RGBD/NeighborLinkRefining is true). If rtabmap is built with libpointmatcher, you could try those parameters: <param name="Icp/PM" type="string" value="true"/> <param name="Icp/PMOutlierRatio" type="string" value="0.85"/> <param name="Icp/MaxCorrespondenceDistance" type="string" value="0.5"/>Note that many proximity loop closures have been already added to map (yellow links above). Without them and the loop closures (red links):  3) The depth map is used to get 3D positions of the visual features extracted from the RGB image. This is used mainly for visual loop closure detection. In rtabmapviz/Preferences/3D rendering, you hide the 3D map and show only the scan map. Based on your parameters, the current map (grid_map and cloud_ma topics) is created from the lidar and is 2D. With Grid/FromDepth=true, the map would be created using the depth images. You could indeed feed a 3D scan with subscribe_scan_cloud, this may help to do 6DoF mapping instead 2D mapping has the lidar is not always horizontal to ground when the robot is walking. cheers, Mathieu |

Re: Tips needed to improve on RTAB Mapping for legged Robots

|

This post was updated on .

Hi Mathieu,

Thanks the your reply. A few other follow-up questions: 1) I don't see any paramters on the rtabmap node using rtabmap --param. I assume you are referring to 'rtabmap_ros/data_odom_sync' node? From the launch file I posted above, I am currently already using the 'rtabmap_ros/rgbd_sync' node. It seems like the rgbd_sync node outputs the topic 'rgbd_image', yet the 'data_odom_sync' node takes in the 'rgb/image_in' and 'depth/image_in' topics individually. How should we reconcile the differences between the output and inputs of the different nodes? 2) Ok sounds good! I have set my 'MaxCorrespondenceDistance' parameter to 1.5 instead of 0.5, since that yields a better overall map. Nodes will have proximity links (shown in yellow) to other nodes that are further away, allowing for more map shaping over longer distances. Is my analysis accurate? 3a) It seems like the construction of the 3d depth map will happen regardless of whether I choose to hide it or not, since the keypoints extracted from the image uses these 3d position information. Lets say for each 1920x1080 image (~2mil pixels) that I am processing, I am only extracting at most 1000 keypoints. However, it seems like all depth pixels are used for the construction of this 3d visualization, rather than just the 1000 keypoints. I wonder if this will result in excessive memory consumption during mapping mode, since all we need are the positions of the 1000 keypoints for loop closure detection? I intend to use this visual loop closure feature, while constructing a 2d lidar map, over a long period of time and long distances. 3b) Will using scan_cloud (3d point cloud) instead of scan (2d lidar scans) provide me with more accurate localization 2d pose? It seems that there will be more features presented in a 3d point cloud for more accurate localization, at the expense of computation speed. In addition, I read from another of your forum post that lidar scan matching (libpointmatcher) uses scan-to-scan odometry correction. I wonder if it is possible to opt for scan-to-map odometry correction instead? It's really fascinating to see what this software is capable of. Thanks for your help! Take Care, Derek |

|

Administrator

|

This post was updated on .

Hi Derek,

This is a parameter not documented, odom_sensor_sync is a ros parameter for rtabmap node. Parameters shown by --params are those from RTAB-Map library. Icp/MaxCorrespondenceDistance is more an ICP parameters to find correspondences between the scans. If it is high, it can indeed close loops were drift between two scans is higher. Making it too high could close wrongly some loop closures. If you don't show or subscribe to 3D map, it won't be constructed. To save memory, you can also disable saving binary data in rtabmap. To do this, set Mem/NotLinkedNodesKept and Mem/BinDataKept to false. Yes, using a 3D lidar gives more data, so probably better loop closures, even if Reg/Force3DoF is true. In the case of that post, it does scan to map for proximity detections. Instead of doing a scan to grid map for localization (like AMCL), it does scan to map's scans for localization. cheers, Mathieu |

Re: Tips needed to improve on RTAB Mapping for legged Robots

|

Hi Mathieu,

Appreciate your reply. After performing extensive testing, it turns out setting odom_sensor_sync to true did not affect the results much. The 3d map became much better after I performed localization using a 3d point cloud instead of 2d laser scan. Nevertheless, I will keep the `odom_sensor_sync` parameter to true. I have a few follow-up question: 1) What is the difference between scan-to-gridmap vs scan-to-mapscan that you have mentioned? I understand that for AMCL, particle's laserscan measurements are compared to the robot's real laserscan measurements, such that particles' laserscan with low similarity to robot's laserscan will be more likely removed during the resampling process. How is this different from RTABMAP's scan-to-mapscan? 2) Can a rtabmap database obtained from a robot with a 2d lidar be shared with a robot with 3d lidar? I experimented with this, but got an error that says the following? I wonder if this issue is linked to (1)? [FATAL] LaserScan.cpp:241::LaserScan() Condition (data.channels() != 3 || (data.channels() == 3 && (format == kXYZ || format kXYI))) not met! [format=XY] 3) When running rtabmap that subscribes to scan_cloud, it appears that I have the following warning at each timestep RTABMAP adds a node to the posegraph (i.e. Rtabmap/DetectionRate). The 0 0 0 0 message is rather undescriptive. I wonder what this means? [WARN] [TIMESTAMP] 0 0 0 0 Thanks alot, Derek |

|

Administrator

|

Hi Derek,

If the timestamps between sensors and/or odometry are taken from different clocks not synchronized, odom_sensor_sync won't really help. However, this parameter is false by default as it has not be extensively tested in many scenarios, so it may not work as expected in all setups. 1) AMCL will localize against the occupancy grid map (a map from map_server for example), not the raw scans. RTAB-Map does it against the raw scans, by doing ICP between the current scan and the closest scans in the map. 2) Which rtabmap version are you using? I may test this case as it should not do a FATAL error. I had problems differencing between XYZ and XYI formats, but it should have been fixed now. However, I don't think ICP will work correctly when mixing 2D and 3D scans. 3) This has been fixed in January: https://github.com/introlab/rtabmap_ros/commit/21090899d423fd7795fd36c9d33d8efde28de7dc#diff-6a3ab41a835ba9b64ad85e420a4b9f270d8a4def12845afc786bc2c21f438eb6 cheers, Mathieu |

Re: Tips needed to improve on RTAB Mapping for legged Robots

|

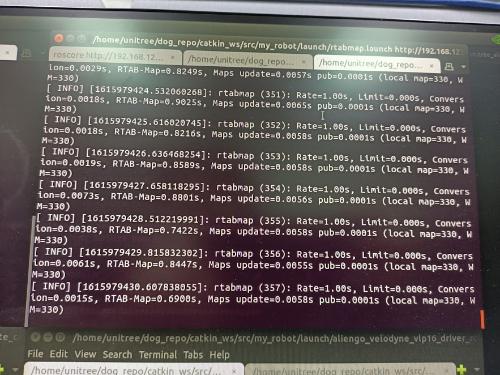

This post was updated on .

Hi Mathieu,

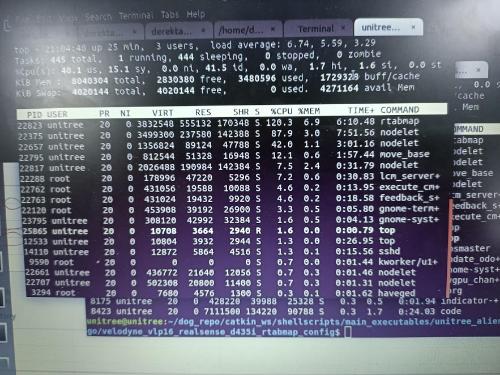

Thank you very much for your reply. I think I now have a good understand on how localization is performed via ICP. I have also incorporated the odom_sensor_sync parameter into my rtabmap config file. Regarding your question about the rtabmap version, I was using the one installed a month ago. I have recently pulled the newest changes but have yet to attempt to reproduce this problem yet. Interestingly as I proceed on with finetuning my parameters for localization mode, I realized that I have been encountering a persistent issue. Even though my RTABMap update_frequency was set at 1Hz, my localized pose is published at 1Hz at the beginning when the RTABMap node is being run, as seen from the terminal outputs every second. After awhile, it will hang and no more terminal outputs are produced, and I would stop receiving localization pose from RTABMap. Here is an image to show that (Even though it claims that the iteration is performed within 0.9 to 1-ish seconds, rtabmap output still freezes for more than 10 seconds).  I would like to share 2 further observations: 1) RTABMap overloading my CPU. As you can see from this snapshot from my 'top' command line tool, RTABMap is loading more than 100% of one of my CPU. When I opened Ubuntu's system monitor, I noticed that one of the CPU cores is consistently loaded to 100% (usually CPU 2 or 3), while the other CPU cores are relatively free below 50% loading. I wonder if RTABMap's core mapping and localization operations are supposed to utilize more than 1 CPU core, if some operations are multi-threaded in nature? By extension, what aspects of RTABMap utilizes the GPU?  2) There are also times when my realsense driver shoots up to around 110% CPU loading as well on the 'top' command line tool. I investigated further and found that while the realsense driver uses minimal amount of CPU, the addition of a RGBD_Sync node within the same nodlet (to avoid topic serialization) causes CPU loading of that nodlet to shoot up to more than 100%. I referenced this guide, with approx_sync set to true. I hope these observations could provide you with some insight to this issue. I am not entirely sure if these 2 issues are related either. I am rather stuck on what I could do about this, as it makes realtime operations impossible. For you information, my current set-up is Ubuntu 18.04, ROS Melodic, on a Nvidia NX Xavier, with a VLP16 Velodyne Lidar and an Intel Realsense d435. I am currently launching the robot's nodes via SSH, via gnome terminals. Here is my parameter file if that helps: rtabmap.yaml Looking forward to hearing from you, as always. Much Thanks, Derek |

|

Administrator

|

Hi Derek,

The localization_pose is published only when rtabmap localizes on the map. If the rtabmap is logging at 1 Hz, it is not frozen. 1) Some parts of rtabmap are multi-threaded, like computing normals of point clouds. I see that you are using ICP registration with velodyne point clouds and Icp/VoxelSize=0.05. This is quite small for a jetson. For velodyne point clouds, I generally use Icp/VoxelSize=0.2 (indoor) to 0.5 (outdoor). To get details about cpu usage: rtabmap-report \ Timing/Total/ms \ Timing/Proximity_by_space/ms \ Timing/Posterior_computation/ms \ Timing/Memory_update/ms \ Timing/Neighbor_link_refining/ms \ Timing/Likelihood_computation/ms \ Timing/Add_loop_closure_link/ms \ Timing/Map_optimization/ms \ rtabmap.db 2) When using rgbd_sync, it subscribes to realsense topic to force streaming them. You coudl compare with starting only realsense node, then open 2 terminals and subscribe (rostopic hz "topic name") to RGB and Depth topics to make the node working, and see wthat is CPU usage then. cheers, Mathieu |

«

Return to Official RTAB-Map Forum

|

1 view|%1 views

| Free forum by Nabble | Edit this page |