Trying to reproduce Odometry

|

Hello,

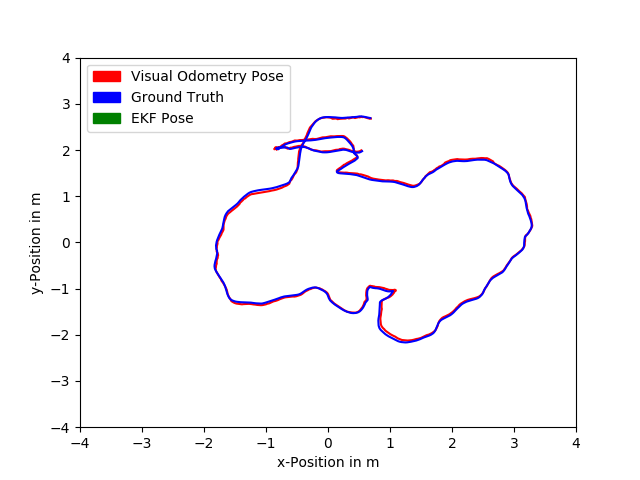

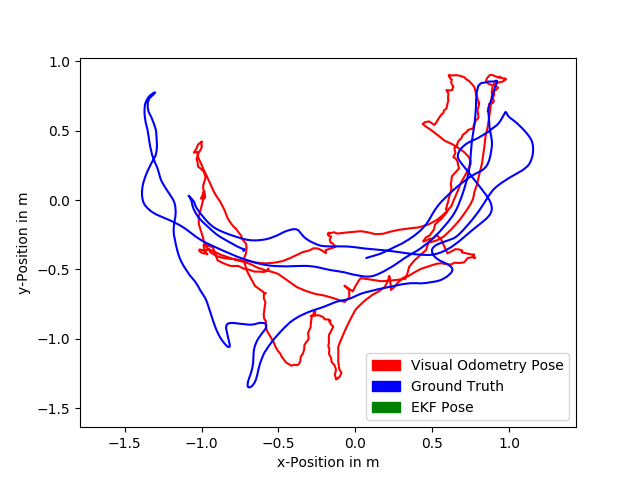

we are currently trying to compare different VO-Algorithms and want to reproduce the results from https://introlab.3it.usherbrooke.ca/mediawiki-introlab/images/7/7a/Labbe18JFR_preprint.pdf page 20 figure 10(a). We are using the TUM Dataset fr1-room. We decompressed the ROS bag file and also ran the python script to rename the transforms as stated here: https://github.com/introlab/rtabmap_ros/blob/master/launch/tests/rgbdslam_datasets.launch Additionally we included <param name="GFTT/MinDistance" type="string" value="5"/> <param name="Odom/MinInliers" type="string" value="10"/> We launch rosbag play rgbd_dataset_freiburg1_room.bag --clock and roslaunch rtabmap_ros rgbdslam_datasets.launch In parallel we have a python script running, which stores the /rtabmap/odom topic information in a separate file, which we will then plot against the ground truth provided by TUM. Our results are far from good and we have already changed a lot of parameters without any real success. Our results:  Our launch file:

<launch>

<!-- Example to run rgbd datasets:

$ wget http://vision.in.tum.de/rgbd/dataset/freiburg3/rgbd_dataset_freiburg3_long_office_household.bag

$ rosbag decompress rgbd_dataset_freiburg3_long_office_household.bag

$ wget https://gist.githubusercontent.com/matlabbe/897b775c38836ed8069a1397485ab024/raw/6287ce3def8231945326efead0c8a7730bf6a3d5/tum_rename_world_kinect_frame.py

$ python tum_rename_world_kinect_frame.py rgbd_dataset_freiburg3_long_office_household.bag

$ roslaunch rtabmap_ros rgbdslam_datasets.launch

$ rosbag play -.-clock rgbd_dataset_freiburg3_long_office_household.bag

-->

<param name="use_sim_time" type="bool" value="True"/>

<!-- Choose visualization -->

<arg name="rviz" default="true" />

<arg name="rtabmapviz" default="false" />

<!-- TF FRAMES -->

<node pkg="tf" type="static_transform_publisher" name="world_to_map"

args="0.0 0.0 0.0 0.0 0.0 0.0 /world /map 100" />

<group ns="rtabmap">

<!-- Odometry -->

<node pkg="rtabmap_ros" type="rgbd_odometry" name="rgbd_odometry" output="screen">

<remap from="rgb/image" to="/camera/rgb/image_color"/>

<remap from="depth/image" to="/camera/depth/image"/>

<remap from="rgb/camera_info" to="/camera/rgb/camera_info"/>

<param name="Reg/Force3DoF" value="false" />

<param name="Odom/Strategy" type="string" value="0"/> <!-- 0=Frame-to-Map, 1=Frame-to-KeyFrame -->

<param name="Odom/ResetCountdown" type="string" value="15"/>

<param name="Odom/GuessSmoothingDelay" type="string" value="0"/>

<param name="GFTT/MinDistance" type="string" value="5"/>

<param name="Odom/MinInliers" type="string" value="10"/>

<param name="frame_id" type="string" value="kinect"/>

<param name="queue_size" type="int" value="10"/>

<param name="wait_for_transform" type="bool" value="true"/>

<param name="ground_truth_frame_id" type="string" value="world"/>

<param name="ground_truth_base_frame_id" type="string" value="kinect_gt"/>

</node>

<!-- Visual SLAM -->

<!-- args: "delete_db_on_start" and "udebug" -->

<node name="rtabmap" pkg="rtabmap_ros" type="rtabmap" output="screen" args="--delete_db_on_start">

<param name="subscribe_depth" type="bool" value="true"/>

<param name="Rtabmap/StartNewMapOnLoopClosure" type="string" value="true"/>

<param name="RGBD/CreateOccupancyGrid" type="string" value="false"/>

<param name="Rtabmap/CreateIntermediateNodes" type="string" value="true"/>

<param name="frame_id" type="string" value="kinect"/>

<param name="ground_truth_frame_id" type="string" value="world"/>

<param name="ground_truth_base_frame_id" type="string" value="kinect_gt"/>

<remap from="rgb/image" to="/camera/rgb/image_color"/>

<remap from="depth/image" to="/camera/depth/image"/>

<remap from="rgb/camera_info" to="/camera/rgb/camera_info"/>

<param name="Reg/Force3DoF" value="false" />

<param name="Optimizer/Slam2D" value="false" />

<param name="queue_size" type="int" value="10"/>

</node>

</group>

</launch>What can we do in order to achieve a better VO-estimate? Thanks in advance, fugashu |

|

Administrator

|

Hi,

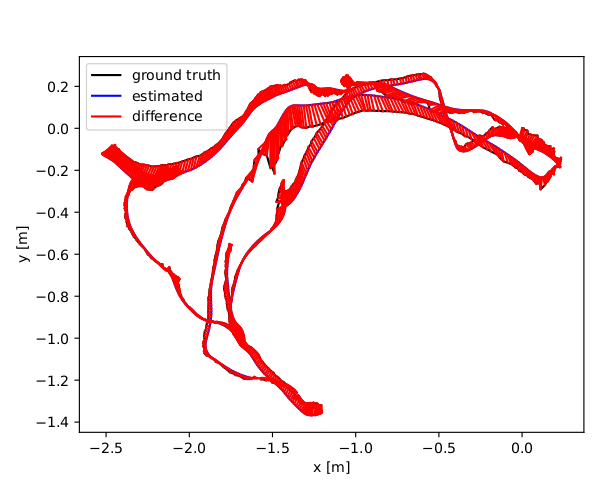

I don't recommend the usage of the tum's rosbag for benchmarking, because of some synchronization issues, not all images are processed. This sequence is also fast, so we should not miss any frames. I just tried with the bag and even if the odometry processing time is around 40-50 ms, the max odometry rate is 8-9 hz, which means a lot of frames are discarded on synchronization (at 40 ms, we should see 20 Hz odometry). In the paper, this offline tool rtabmap-rgbd_dataset was used in order to process all frames using the image dataset instead of the bag. Example: $ rtabmap-rgbd_dataset \ --Rtabmap/DetectionRate 2 \ --RGBD/LinearUpdate 0 \ --RGBD/AngularUpdate 0 \ --Mem/STMSize 30 \ --Rtabmap/CreateIntermediateNodes true \ rgbd_dataset_freiburg1_room $ rtabmap-report --scale --poses rgbd_dataset_freiburg1_room/rtabmap.db $ python evaluate_ate.py \ rgbd_dataset_freiburg1_room/rtabmap_poses.txt \ rgbd_dataset_freiburg1_room/rtabmap_gt.txt \ --plot output.pdf $ evince output.pdf  See the tool's usage to generate the sync folders: Usage:

rtabmap-rgbd_dataset [options] path

path Folder of the sequence (e.g., "~/rgbd_dataset_freiburg3_long_office_household")

containing least rgb_sync and depth_sync folders. These folders contain

synchronized images using associate.py tool (use tool version from

https://gist.github.com/matlabbe/484134a2d9da8ad425362c6669824798). If

"groundtruth.txt" is found in the sequence folder, they will be saved in the database.evaluate_ate.py was downloaded from here: https://vision.in.tum.de/data/datasets/rgbd-dataset/toolscheers, Mathieu |

|

Hey,

thanks for the quick reply. We generated the sync folders and successfully created all the required files Unfortunately $ python evaluate_ate.py \ rgbd_dataset_freiburg1_room/rtabmap_poses.txt \ rgbd_dataset_freiburg1_room/rtabmap_gt.txt \ --plot output.pdf throws and error Couldn't find matching timestamp pairs between groundtruth and estimated trajectory! Did you choose the correct sequence? The files rtabmap_gt.txt and rtabmap_poses.txt somehow don't provide timestamps, although we used every script as stated by you. EDIT: We just opened the .db file and managed to visualize all the data we needed. One more question: Could you recommend any public available rosbag files where the rtabmap algorithm performs well in real time situations? Thanks in advance, fugashu |

|

Administrator

|

Hi,

The exported format has been corrected recently to be compatible directly with evaluate_ate.py like in my post. If you build rtabmap from source, the exported poses should be compatible. The rgbd_dataset_freiburg3_long_office_household.bag sequence works ok, as the camera is not moving very fast. You can also try the EuRoC dataset, in particular the Machine Hall sequences: https://projects.asl.ethz.ch/datasets/doku.php?id=kmavvisualinertialdatasets, see https://github.com/introlab/rtabmap_ros/blob/master/launch/tests/euroc_datasets.launch for some examples of usage. cheers, Mathieu |

«

Return to Official RTAB-Map Forum

|

1 view|%1 views

| Free forum by Nabble | Edit this page |