Using Kinect2 and LiDAR to Map and Navigate

|

Hi Matlabbe,

I am new to ROS and I am trying to run mapping and navigating with RTAB-Map. My sensors are Kinect V2 and RPLIDAR A1, and I have a robot with internal odometry. I am now having some troubles integrate them with RTAB-map to realize better mapping and navigation

1. What I have done:

1.1 Using the RTAB-Map to run mapping with Kinect+Odom / Kinect+Laser+Odom.

1.2 Using the gmapping to map with my LiDAR.

1.3 Navigate using navigation stack within the map constructed by gampping (LiDAR only).

My Problem: The LiDAR is placed at a high position, so it cannot detect low obstacles (like this figure).

2. I tried many approaches to solve this problem:

2.1 Using the projected 2D map and re-run the navigation with LiDAR only (map server+AMCL). Although the projected map can detect the obstacles, during navigation stage, the robot will hit on it (I guess it is because LiDAR cannot find the obstacles and remove it during navigation).

2.2 I carefully look through the Turtlebot tutorial, but found the costmap only uses the laser, so I guess the same problem may still exists.

2.3 I followed the demo of az3 and modify it to try to run it on my own robot but failed (cannot send goal, seems no obstacle cloud and ground cloud). It seems there is something wrong with the obstacle detection node, and communication between the RTAB-Map and move_base. I will post some codes. I am really new so I guess there may be many mistakes.

3. My question:

3.1 What do you think is the best implementation to solve my question?

3.2 If it is possible to use the RTAB-Map obstacle detection node or just the pointcloud from Kinect to integrate into navigation stack to achieve avoiding low obstacles? If so, may I have your help on giving some detailed instructions?

3.3 If there is some thing I misunderstood with your tutorial on turtlebot mapping and navigation. Can it solve my problem? And how does RTAB-Map localize the robot during navigation (by using the VSLAM sensor of LiDAR?)?

Sorry for so many questions. I am new to ROS and want to develop a robot that can do SLAM and collect a database of Wi-Fi signal. This is my final year project and if you are interested in it we could together do some development (time is tight so I am desperate for your help).

Thanks again!

2.3

My launch file:

My Problem: The LiDAR is placed at a high position, so it cannot detect low obstacles (like this figure).

2. I tried many approaches to solve this problem:

2.1 Using the projected 2D map and re-run the navigation with LiDAR only (map server+AMCL). Although the projected map can detect the obstacles, during navigation stage, the robot will hit on it (I guess it is because LiDAR cannot find the obstacles and remove it during navigation).

2.2 I carefully look through the Turtlebot tutorial, but found the costmap only uses the laser, so I guess the same problem may still exists.

2.3 I followed the demo of az3 and modify it to try to run it on my own robot but failed (cannot send goal, seems no obstacle cloud and ground cloud). It seems there is something wrong with the obstacle detection node, and communication between the RTAB-Map and move_base. I will post some codes. I am really new so I guess there may be many mistakes.

3. My question:

3.1 What do you think is the best implementation to solve my question?

3.2 If it is possible to use the RTAB-Map obstacle detection node or just the pointcloud from Kinect to integrate into navigation stack to achieve avoiding low obstacles? If so, may I have your help on giving some detailed instructions?

3.3 If there is some thing I misunderstood with your tutorial on turtlebot mapping and navigation. Can it solve my problem? And how does RTAB-Map localize the robot during navigation (by using the VSLAM sensor of LiDAR?)?

Sorry for so many questions. I am new to ROS and want to develop a robot that can do SLAM and collect a database of Wi-Fi signal. This is my final year project and if you are interested in it we could together do some development (time is tight so I am desperate for your help).

Thanks again!

2.3

My launch file:

<launch> <!-- Kinect 2 Install Kinect2 : Follow ALL directives at https://github.com/code-iai/iai_kinect2 Make sure it is calibrated! Run: $ roslaunch kinect2_bridge kinect2_bridge.launch publish_tf:=true $ roslaunch rtabmap_ros rgbd_mapping_kinect2.launch --> <!-- config param --> <arg name="pub_imu" default="False" /> <arg name="sub_ackermann" default="False" /> <arg name="lidar_frame" default="base_laser_link"/> <arg name="rgbd_odometry" default="false"/> <arg name="localization" default="false" /> <!-- Which image resolution to process in rtabmap: sd, qhd, hd --> <arg name="resolution" default="qhd" /> <!-- Choose visualization --> <arg name="rviz" default="true" /> <arg name="rtabmapviz" default="false" /> <!-- Fixed frame id, you may set "base_link" or "base_footprint" if they are published --> <arg name="frame_id" default="base_footprint"/> <include file="$(find base_control)/launch/base_control.launch"> <arg name="pub_imu" value="$(arg pub_imu)"/> <arg name="sub_ackermann" value="$(arg sub_ackermann)"/> </include> <include file="$(find robot_navigation)/launch/lidar.launch"> <arg name="lidar_frame" value="$(arg lidar_frame)"/> </include> <!-- Rotate the camera --> <arg name="pi/2" value="1.5707963267948966"/> <arg name="optical_rotate" value="0 0 0 -$(arg pi/2) 0 -$(arg pi/2)" /> <node pkg="tf" type="static_transform_publisher" name="kinect2_link" args="$(arg optical_rotate) kinect2_base_link kinect2_link 100" /> <node pkg="tf" type="static_transform_publisher" name="kinect2_base_link" args="0.1 0.0 0.4 0.0 0.2618 0.0 base_footprint kinect2_base_link 20" /> <!-- Corresponding config files --> <arg name="rtabmapviz_cfg" default="-d $(find rtabmap_ros)/launch/config/rgbd_gui.ini" /> <!--<arg name="rviz_cfg" default="-d $(find rtabmap_ros)/launch/config/rgbd.rviz" /> --> <!-- slightly increase default parameters for larger images (qhd=720p) --> <arg name="gftt_block_size" default="5" /> <arg name="gftt_min_distance" default="5" /> <group ns="rtabmap"> <!-- use rgb-d sync --> <node pkg="nodelet" type="nodelet" name="rgbd_sync" args="standalone rtabmap_ros/rgbd_sync" output="screen"> <remap from="rgb/image" to="/kinect2/$(arg resolution)/image_color_rect"/> <remap from="depth/image" to="/kinect2/$(arg resolution)/image_depth_rect"/> <remap from="rgb/camera_info" to="/kinect2/$(arg resolution)/camera_info"/> <remap from="rgbd_image" to="rgbd_image"/> <!-- output --> <!-- Should be true for not synchronized camera topics (e.g., false for kinectv2, zed, realsense, true for xtion, kinect360)--> <param name="approx_sync" value="false"/> </node> <!-- Odometry --> <node if="$(arg rgbd_odometry)" pkg="rtabmap_ros" type="rgbd_odometry" name="rgbd_odometry" output="screen"> <remap from="rgb/image" to="/kinect2/$(arg resolution)/image_color_rect"/> <remap from="depth/image" to="/kinect2/$(arg resolution)/image_depth_rect"/> <remap from="rgb/camera_info" to="/kinect2/$(arg resolution)/camera_info"/> <param name="frame_id" type="string" value="$(arg frame_id)"/> <param name="approx_sync" type="bool" value="false"/> <param name="GFTT/BlockSize" type="string" value="$(arg gftt_block_size)"/> <param name="GFTT/MinDistance" type="string" value="$(arg gftt_min_distance)"/> </node> <param unless="$(arg localization)" name="Rtabmap/TimeThr" type="string" value="500"/> <param if="$(arg localization)" name="Mem/IncrementalMemory" type="string" value="false"/> <param if="$(arg localization)" name="Mem/InitWMWithAllNodes" type="string" value="true"/> <!-- Visual SLAM (robot side) --> <!-- args: "delete_db_on_start" and "udebug" --> <node name="rtabmap" pkg="rtabmap_ros" type="rtabmap" output="screen" args= "--delete_db_on_start"> <param name="subscribe_depth" type="bool" value="false"/> <param name="subscribe_rgbd" type="bool" value="true"/> <param name="subscribe_scan" type="bool" value="true"/> <param name="Grid/FromDepth" type="bool" value="false"/> <param name="frame_id" type="string" value="$(arg frame_id)"/> <param name="rgbd_odometry" type="bool" value="false"/> <param name="visual_odometry" type="bool" value="false"/> <param name="odom_frame_id" type="string" value="odom"/> <remap from="odom" to="/odom"/> <remap from="rgbd_image" to="rgbd_image"/> <remap from="scan" to="/scan"/> <param name="approx_sync" type="bool" value="true"/> <param name="use_action_for_goal" type="bool" value="true"/> <remap from="move_base" to="/move_base"/> <!-- RTAB-Map's parameters --> <param name="RGBD/NeighborLinkRefining" type="string" value="true"/> <param name="RGBD/ProximityBySpace" type="string" value="true"/> <param name="RGBD/AngularUpdate" type="string" value="0.01"/> <param name="RGBD/LinearUpdate" type="string" value="0.01"/> <param name="RGBD/OptimizeFromGraphEnd" type="string" value="false"/> <param name="Reg/Force3DoF" type="string" value="true"/> <param name="Reg/Strategy" type="string" value="1"/> <!-- 1=ICP --> <param name="GridGlobal/MinSize" type="string" value="20"/> <!-- ICP parameters --> <param name="Icp/VoxelSize" type="string" value="0.05"/> <param name="Icp/MaxCorrespondenceDistance" type="string" value="0.1"/> <param name="GFTT/BlockSize" type="string" value="$(arg gftt_block_size)"/> <param name="GFTT/MinDistance" type="string" value="$(arg gftt_min_distance)"/> </node> <node pkg="nodelet" type="nodelet" name="obstacle_nodelet_manager" args="manager" output="screen"/> <node pkg="nodelet" type="nodelet" name="points_xyz_planner" args="load rtabmap_ros/point_cloud_xyz obstacle_nodelet_manager"> <remap from="depth/image" to="/kinect2/$(arg resolution)/image_depth_rect"/> <remap from="depth/camera_info" to="/kinect2/$(arg resolution)/camera_info"/> <remap from="cloud" to="cloudXYZ" /> <param name="decimation" type="int" value="4"/> <param name="max_depth" type="double" value="4.0"/> <param name="voxel_size" type="double" value="0.02"/> </node> <node pkg="nodelet" type="nodelet" name="obstacles_detection" args="load rtabmap_ros/obstacles_detection obstacle_nodelet_manager"> <remap from="cloud" to="cloudXYZ"/> <remap from="obstacles" to="/obstacles_cloud"/> <remap from="ground" to="/ground_cloud"/> <param name="frame_id" type="string" value="base_footprint"/> <param name="map_frame_id" type="string" value="map"/> <param name="wait_for_transform" type="bool" value="true"/> <param name="Grid/MinClusterSize" type="int" value="20"/> <param name="Grid/MaxObstacleHeight" type="double" value="0.5"/> <param name="Grid/MaxGroundAngle" type="double" value="0.1"/> </node> <!-- Visualisation RTAB-Map --> <node if="$(arg rtabmapviz)" pkg="rtabmap_ros" type="rtabmapviz" name="rtabmapviz" args="$(arg rtabmapviz_cfg)" output="screen"> <param name="subscribe_depth" type="bool" value="true"/> <param name="subscribe_scan" type="bool" value="true"/> <param name="approx_sync" type="bool" value="true"/> <param name="frame_id" type="string" value="$(arg frame_id)"/> <remap from="rgb/image" to="/kinect2/$(arg resolution)/image_color_rect"/> <remap from="depth/image" to="/kinect2/$(arg resolution)/image_depth_rect"/> <remap from="rgb/camera_info" to="/kinect2/$(arg resolution)/camera_info"/> <remap from="scan" to="/scan"/> </node> </group> <group ns="planner"> <arg name="cmd_vel_topic" default="cmd_vel" /> <arg name="odom_topic" default="odom" /> <arg name="planner" default="dwa" doc="opt: dwa, teb"/> <arg name="simulation" default= "false"/> <arg name="base_type" default= "$(env BASE_TYPE)"/> <remap from="scan" to="/scan"/> <remap from="obstacles_cloud" to="/obstacles_cloud"/> <remap from="ground_cloud" to="/ground_cloud"/> <remap from="map" to="/rtabmap/grid_map"/> <node pkg="move_base" type="move_base" respawn="false" name="move_base" output="screen"> <param name="base_local_planner" value="dwa_local_planner/DWAPlannerROS" /> <rosparam file="$(find robot_navigation)/param/$(env BASE_TYPE)/costmap_common_params(new).yaml" command="load" ns="global_costmap" /> <rosparam file="$(find robot_navigation)/param/$(env BASE_TYPE)/costmap_common_params(new).yaml" command="load" ns="local_costmap" /> <rosparam file="$(find robot_navigation)/param/$(env BASE_TYPE)/local_costmap_params(new).yaml" command="load" /> <rosparam file="$(find robot_navigation)/param/$(env BASE_TYPE)/global_costmap_params(new).yaml" command="load" /> <rosparam file="$(find robot_navigation)/param/$(env BASE_TYPE)/move_base_params.yaml" command="load" /> <rosparam file="$(find robot_navigation)/param/$(env BASE_TYPE)/dwa_local_planner_params(new).yaml" command="load" /> <remap from="cmd_vel" to="$(arg cmd_vel_topic)"/> <remap from="odom" to="$(arg odom_topic)"/> </node> </group> <!-- Visualization RVIZ --> <node if="$(arg rviz)" pkg="rviz" type="rviz" name="rviz" args="-d $(find robot_navigation)/rviz/new_navigation.rviz" output="screen"/> <!-- sync cloud with odometry and voxelize the point cloud (for fast visualization in rviz) --> <node if="$(arg rviz)" pkg="nodelet" type="nodelet" name="standalone_nodelet" args="manager" output="screen"/> <node if="$(arg rviz)" pkg="nodelet" type="nodelet" name="data_odom_sync" args="load rtabmap_ros/data_odom_sync standalone_nodelet"> <remap from="rgb/image_in" to="/kinect2/$(arg resolution)/image_color_rect"/> <remap from="depth/image_in" to="/kinect2/$(arg resolution)/image_depth_rect"/> <remap from="rgb/camera_info_in" to="/kinect2/$(arg resolution)/camera_info"/> <remap from="odom_in" to="rtabmap/odom"/> <param name="approx_sync" type="bool" value="true"/> <remap from="rgb/image_out" to="data_odom_sync/image"/> <remap from="depth/image_out" to="data_odom_sync/depth"/> <remap from="rgb/camera_info_out" to="data_odom_sync/camera_info"/> <remap from="odom_out" to="odom_sync"/> </node> </launch> |

|

Sorry I don't know why this happens to be no spacing.

|

|

In reply to this post by jingyu

Sorry to bother. I think I did run successfully using the demo of turtlebot3 navigation, and finishes the obstacle avoidance using projected map (if use the grid map from the lidar, the problem still exists)

If you have any advice on how to do fusion of kinect and lidar just feel free to comment. I think I may work on verifying the performance of using this demo. So please just ignore my code posted above. I think my current problem is on improve the accuracy and performance of navigation, if there is any advice on tuning RTAB-Map parameters? |

|

Administrator

|

Hi,

This is a common problem when using only lidar, in particular if it cannot be located at the bottom of the robot to see small obstacles. The lidar is quite useful for mapping and localization, so I would still feed the lidar to RTAB-Map for better localization. For the map rtabmap is creating, you have two choices: 1) Use lidar (default), and the map will have only obstacles at the height of the robot 2) Use depth image projection (Grid/FromDepth=true), and the map will have the small obstacles but more limited to field of view of the camera If the small objects are not static, I would go for the first approach, and configure the local costmap from move_base to use both lidar and depth camera cloud to avoid small obstacles. See this config for example. clearing for the lidar could be set to off if you really want to make sure small objects getting off sight of the camera are not cleared. You seem following the similar example. Make sure obstacles_cloud and ground_cloud are connected to move_base node (rqt_graph), there could be a remapping issue. Make sure those topics used in the local costmap are published. You parameters with lidar are somewhat standard. It will be easier to help if you have more specific problems. cheers, Mathieu |

|

Thank you so much for your quick response!

1. Now I am trying to add another laser using the pointcloud_to_laserscan into the costmap (I fed with kinect2/qhd/points) but it does not work well (I put the kinect at an angle about 15 degrees to look at the ground so I don't use the depthimage_to_laserscan). I think the node works but it cannot detect the obstacles very accurately. Sometimes the obstacles it shows on rviz don't match its real location. I guess if it is due to the tf transform problem. I noticed a tf transform to do optical rotate of kinect2. I chose the goal frame of pointcloud_to_laserscan to be my kinect_link or Kinect_base_link, but still not works. Could you please tell if there is anything wrong or just if it is possible to do so. 2. I am using the second approach with only lidar as observation source during navigation. It works because the small obstacles are static at my experimental site. I will try later using the obstacle_cloud you mentioned and update soon. 3. My goal is to make the robot navigate within the constructed map to collect Wi-Fi RSSI fingerprint. The problem I have is that if it is possible to publish a goal to RTABmap and repeat when the previous goal is reached. Another problem is how to find the map's size and how to check if a point is obstacle? I proposed to use a loop to generate an array of points (based on map's size) to reach to collect data. I think I should be able to check if the current goal is at obstacle. Will the planner tell? 4. Can I complete my goal just at the mapping stage? I noticed a Wifi Signal Strength Mapping (User Data Usage) tutorial. Can it detect signal strength to multiple APs? When the map is corrected, will the coordinates of the Wi-Fi signal strength be updated? Can I export the data to other usage?? The format I wish is like [x1 y1 MAC1 dBm1 MAC2 dBm2 ... x2 y2 MAC1 dBm1 MAC2 dBm2 ...] Sorry for so many questions... I really appreciate your reply! |

|

In reply to this post by matlabbe

Hi Matlabbe,

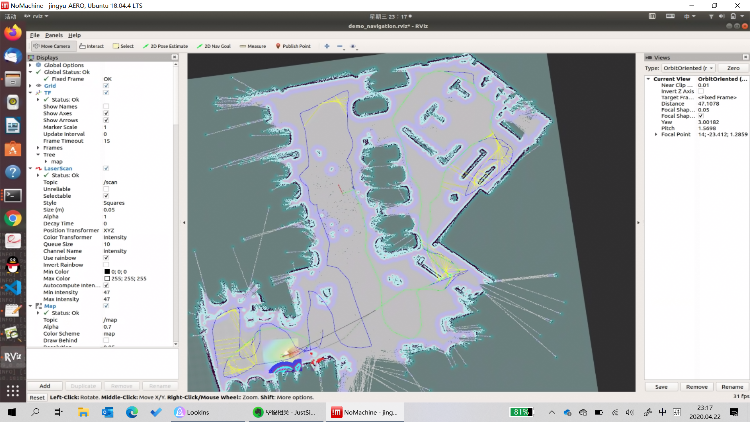

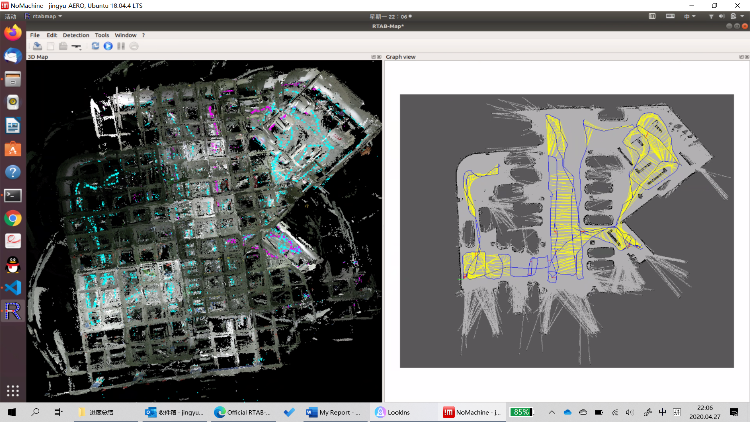

I want to add some updates of my recent progress. I used the obstacles_cloud and ground_cloud in the costmap and it works. But I still have some problems. 1. When the obstacles are small, the camera can recognize it at a considerably large distance, and it will soon turned to avoid the obstacles. But when the obstacles is outside the camera's range, the planned path will turn to the obstacle's position. At this time, even the camera heads the obstacle's direction, it cannot see the obstacles since they are closer. When I tilt the camera at a larger angle (20 degrees), its performance is better at avoiding obstacles but can only see very little visual information (the height of 3D map is low). I tried to set clearing of LiDAR to false but can still have the problem. The obstacles I want to avoid are common in underground parking lot.  2. Sometimes the RTABmap will plan a path that is not reasonable (the green path is the RTABMap global path). Why this happens and how can I fix it?  |

|

Administrator

|

When the robot is approaching and the obstacles enter the camera range, doesn't it detect the obstacles? I see that if the obstacles "appear" too close to robot, the camera won't see them. But if the obstacles are out of range, then the robot approaches them, it should detected them. Just make sure they are not cleared when the robot continues to approach them. For the path planning, if there are no loop closures or proximity links between the end and beginning, rtabmap will likely plan a path to return to all past nodes. The shortcuts would be where there are loop closures and proximity links (the red and yellow links in the graph). Maybe the question is more why it didn't detect some loop closures. Do you have the database to share? The image has been also scaled, it is difficult to see the beginning (it seems at the bottom left) and the end. cheers, Mathieu |

|

Hi Matlabbe,

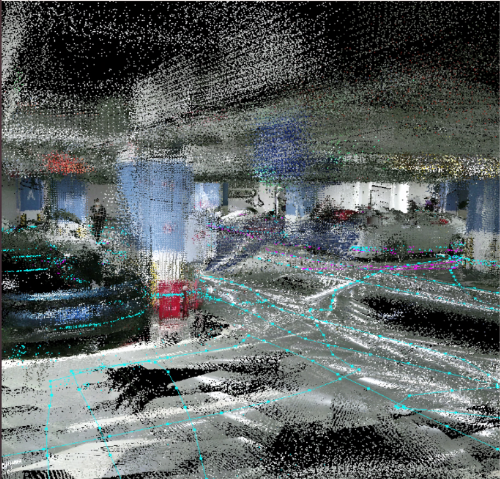

I think I solved the plan problem. What you suggest is exactly true, and I follow the tutorial of SetupOnYourRobot (change the parameters as recommended). The result is good! Thanks a lot! Another question I have is that how to obtain a good top view of the 3D point cloud map. The experimental site is an underground parking lot about 600m2. When I use rtabmapviz to view the database, I found there are many tiny metrices on the top. I think they are the captured roofs of the parking lot. May I have your help on explaining what are these metrices and how to obtain a better top-view picture of the 3D map.  I think it would be better if we can directly see the different vehicles from the top-view side.

|

|

Administrator

|

This post was updated on .

Hi,

You can filter the ceiling for visualization with option "Ceiling filtering height (0=Disabled). This is done in /map frame." in Preferences->3D Rendering. If you set to 2 meters for example, do Edit->Clear the cache then Edit->Download all clouds to refresh the 3D clouds. It is also possible to export the assembled clouds (File -> Export 3D clouds) to a PLY file, open it in CloudCompare in which you can use a tool to crop the ceiling. An EDL shader can be also enabled to better visualize the point cloud. cheers, Mathieu |

«

Return to Official RTAB-Map Forum

|

1 view|%1 views

| Free forum by Nabble | Edit this page |