We are receiving imu data (buffer=37), but cannot interpolate imu transform at time. IMU won't be added to graph.

Reply |

Threaded

Open this post in threaded view

|

More ♦

♦

| Loading... |

| Reply to author |

| Edit post |

| Move post |

| Delete this post |

| Delete this post and replies |

| Change post date |

| Print post |

| Permalink |

| Raw mail |

We are receiving imu data (buffer=37), but cannot interpolate imu transform at time. IMU won't be added to graph.

|

Dear Sir or Madame,

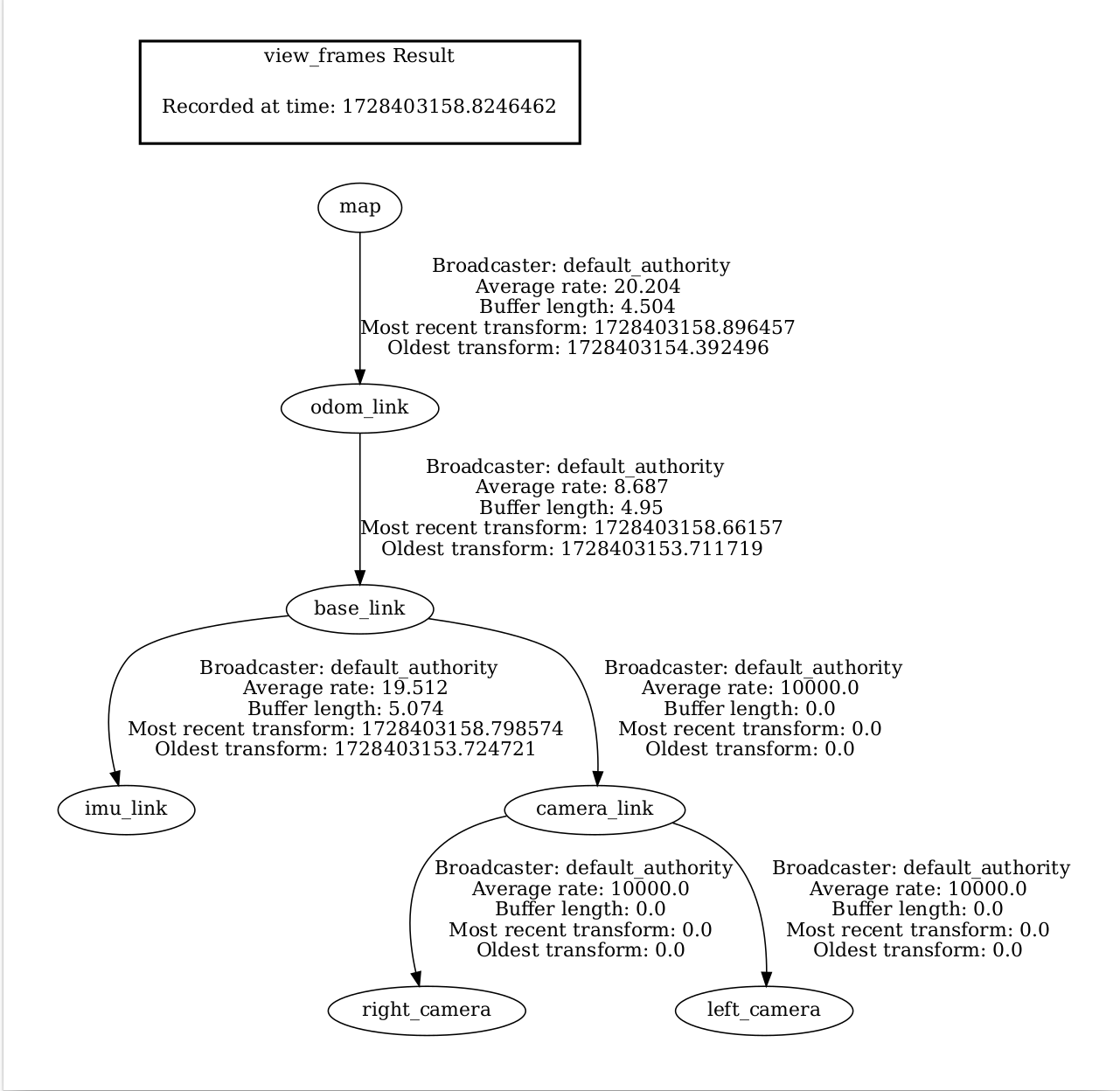

I am aware of other threads in this topic, however those are kind of no longer alive, so I decided to write my own. I am receiving a message: `We are receiving imu data (buffer=37), but cannot interpolate imu transform at time 1728403692.106505. IMU won't be added to graph.` I already learned that it comes from: https://github.com/introlab/rtabmap_ros/blob/23c06875b9369988a2815edb915cd1eade85031a/src/CoreWrapper.cpp#L2017-L2045 However I am not sure what may cause the issue. I am using stereo cameras and IMU and for now I have following tf tree:  Currently, both images and IMU are published with a frequency of 15 Hz. Please help if possible. |

Reply |

Threaded

Open this post in threaded view

|

More ♦

♦

| Loading... |

| Reply to author |

| Edit post |

| Move post |

| Delete this post |

| Delete this post and replies |

| Change post date |

| Print post |

| Permalink |

| Raw mail |

Re: We are receiving imu data (buffer=37), but cannot interpolate imu transform at time. IMU won't be added to graph.

|

Administrator

|

Hi,

what is the latency on those topics? For ros1: rostopic delay /my/image/topic rostopic delay /my/imu/topic For ros2: ros2 topic delay /my/image/topic ros2 topic delay /my/imu/topic |

Reply |

Threaded

Open this post in threaded view

|

More ♦

♦

| Loading... |

| Reply to author |

| Edit post |

| Move post |

| Delete this post |

| Delete this post and replies |

| Change post date |

| Print post |

| Permalink |

| Raw mail |

Re: We are receiving imu data (buffer=37), but cannot interpolate imu transform at time. IMU won't be added to graph.

|

Hi,

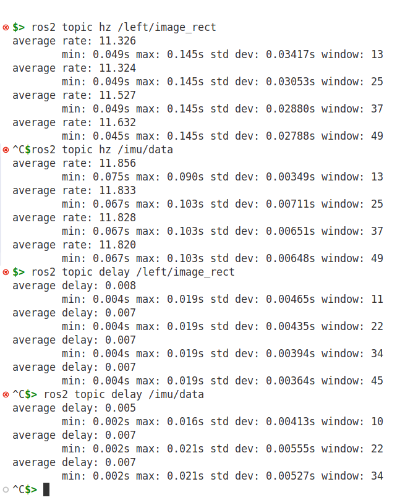

thank you for the response. Currently I have changed publication frequency for both IMU and images to 12 Hz.

|

Reply |

Threaded

Open this post in threaded view

|

More ♦

♦

| Loading... |

| Reply to author |

| Edit post |

| Move post |

| Delete this post |

| Delete this post and replies |

| Change post date |

| Print post |

| Permalink |

| Raw mail |

Re: We are receiving imu data (buffer=37), but cannot interpolate imu transform at time. IMU won't be added to graph.

|

In reply to this post by matlabbe

To give a whole picture:

I have two cameras with the distance between them 100mm and with camera_link exactly between them. Camera_link is +77mm on X and +12 mm on Z from base_link. Base_link is located exactly on the middle of IMU. Transformations map -> odom_link and odom_link -> base_link are provided by rtabmap_odom and rtabmap_slam. I try different combinations of transformation base_link -> imu_link. In the graph from the fist message I try with zero tf from base_link -> imu_link and also with tf turned off. For both imu_filter_madgwick has publish_tf: false. Also I tried with IMU posting messages at the frequency of cameras and 2x (30Hz) - both results in the "cannot interpolate imu transform" message. |

Reply |

Threaded

Open this post in threaded view

|

More ♦

♦

| Loading... |

| Reply to author |

| Edit post |

| Move post |

| Delete this post |

| Delete this post and replies |

| Change post date |

| Print post |

| Permalink |

| Raw mail |

Re: We are receiving imu data (buffer=37), but cannot interpolate imu transform at time. IMU won't be added to graph.

|

Administrator

|

Based on the high IMU delay (which look very similar to image delay), it looks like the camera driver publishes IMU at the "same time" than image topic. Do they have exactly the same stamp? If so, make sure that the camera driver publishes IMU topic and corresponding TF before publishing the image topic, so that rtabmap can receive the IMU (with stamp >= image stamp) before the image (filling its imu buffer and then be able to interpolate the imu pose when receiving the image).

|

Reply |

Threaded

Open this post in threaded view

|

More ♦

♦

| Loading... |

| Reply to author |

| Edit post |

| Move post |

| Delete this post |

| Delete this post and replies |

| Change post date |

| Print post |

| Permalink |

| Raw mail |

Re: We are receiving imu data (buffer=37), but cannot interpolate imu transform at time. IMU won't be added to graph.

|

IMU and images are published separately.

I try to synchronize them, but it doesn't seem to work. How much before should be enough? I tried different values and the situation repeats. Is the TF tree from the first message correct? |

Reply |

Threaded

Open this post in threaded view

|

More ♦

♦

| Loading... |

| Reply to author |

| Edit post |

| Move post |

| Delete this post |

| Delete this post and replies |

| Change post date |

| Print post |

| Permalink |

| Raw mail |

Re: We are receiving imu data (buffer=37), but cannot interpolate imu transform at time. IMU won't be added to graph.

|

Administrator

|

If they are published separately, why is IMU frame rate so low? and has so much delay?

In normal situation, the IMU would publish > 100 Hz with <1 ms delay, and images would be published at 30 Hz with maybe ~50 ms delay, meaning that the warning would never happen (that would be impossible that we didn't receive yet the IMU when we receive the image 50 ms later than the IMU stamp). |

Reply |

Threaded

Open this post in threaded view

|

More ♦

♦

| Loading... |

| Reply to author |

| Edit post |

| Move post |

| Delete this post |

| Delete this post and replies |

| Change post date |

| Print post |

| Permalink |

| Raw mail |

Re: We are receiving imu data (buffer=37), but cannot interpolate imu transform at time. IMU won't be added to graph.

|

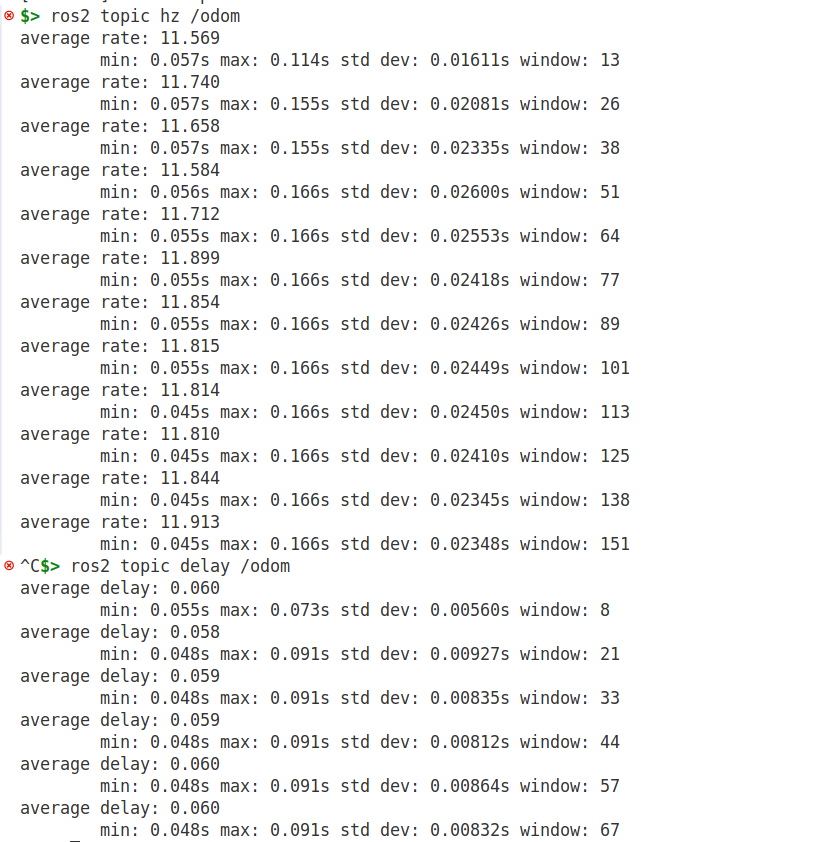

I am trying to launch it all together on raspberry pi 5: um7 imu driver, madgwick filter, driver for 2 rpi module 3 cameras, rtabmap stereo odometry and rtabmap slam.

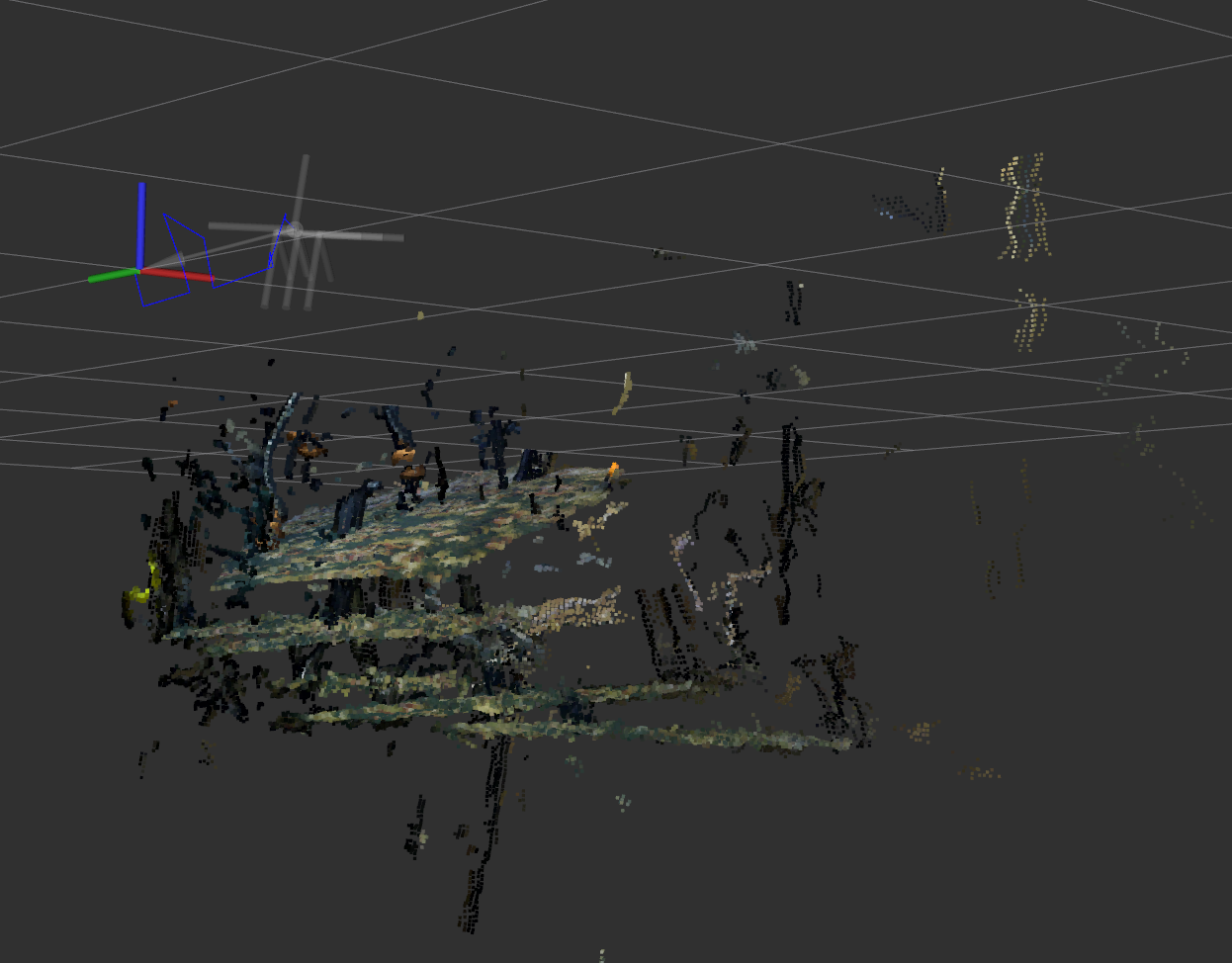

Probably this delay is somehow related to system throughput. For now, I have cameras at 30Hz and IMU at 60 Hz (unfortunately this is max I could achieve for IMU). For now I did a synchronization between IMU and cameras, and indeed the warning isn't happening! Thank you for that! Nevertheless, there is still a problem with slow localization estimation and map is scattered, and one object from real world appears in multiple places, like on the photo:  I followed the advanced tuning tutorial (https://wiki.ros.org/rtabmap_ros/Tutorials/Advanced%20Parameter%20Tuning) to increase a speed and reduce a pointcloud, and also a tutorial for stereo handheld mapping, but the problem still occurs. |

Reply |

Threaded

Open this post in threaded view

|

More ♦

♦

| Loading... |

| Reply to author |

| Edit post |

| Move post |

| Delete this post |

| Delete this post and replies |

| Change post date |

| Print post |

| Permalink |

| Raw mail |

Re: We are receiving imu data (buffer=37), but cannot interpolate imu transform at time. IMU won't be added to graph.

|

Also I have odometry run at 12Hz. Isn't it too slow?

|

Reply |

Threaded

Open this post in threaded view

|

More ♦

♦

| Loading... |

| Reply to author |

| Edit post |

| Move post |

| Delete this post |

| Delete this post and replies |

| Change post date |

| Print post |

| Permalink |

| Raw mail |

Re: We are receiving imu data (buffer=37), but cannot interpolate imu transform at time. IMU won't be added to graph.

|

BTW. Image resolution is already reduced: 640x480.

|

Reply |

Threaded

Open this post in threaded view

|

More ♦

♦

| Loading... |

| Reply to author |

| Edit post |

| Move post |

| Delete this post |

| Delete this post and replies |

| Change post date |

| Print post |

| Permalink |

| Raw mail |

Re: We are receiving imu data (buffer=37), but cannot interpolate imu transform at time. IMU won't be added to graph.

|

Administrator

|

In reply to this post by grzegorz

Can you share a rosbag of /tf, /tf_static and the imu/image topics?

In your screenshot, it seems there is maybe an optical rotation missing between base frame and camera frame. |

Reply |

Threaded

Open this post in threaded view

|

More ♦

♦

| Loading... |

| Reply to author |

| Edit post |

| Move post |

| Delete this post |

| Delete this post and replies |

| Change post date |

| Print post |

| Permalink |

| Raw mail |

Re: We are receiving imu data (buffer=37), but cannot interpolate imu transform at time. IMU won't be added to graph.

|

Link to rosbag:

https://drive.google.com/file/d/10JGdYOdbb8WnHJZ0KXDOTzpr_0PbZzxt/view?usp=sharing IMU topic here is the one that is synchronized - it's at lower HZ. |

Reply |

Threaded

Open this post in threaded view

|

More ♦

♦

| Loading... |

| Reply to author |

| Edit post |

| Move post |

| Delete this post |

| Delete this post and replies |

| Change post date |

| Print post |

| Permalink |

| Raw mail |

Re: We are receiving imu data (buffer=37), but cannot interpolate imu transform at time. IMU won't be added to graph.

|

Administrator

|

You don't need to manually synchronize the IMU with the camera, unless you mean synchronizing time between the clocks of the IMU and the camera (if one or the other is not using system clock). If the IMU publishes the data as soon it generates it, the error "Make sure IMU is published faster than data rate!" will never happen.

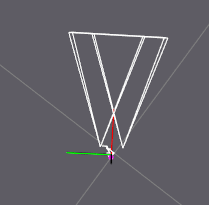

Without IMU, the visual odometry gets lost easily in that sequence. There are a couple of reasons: 1. the camera images look blurry or out of focus:  2. it seems the stereo calibration is not good (I see some vertical disparity):  3. the field of view is small (36 deg horizontal):  Note that the optical rotation looks okay, not sure what happened in your screenshot. cheers, Mathieu |

Reply |

Threaded

Open this post in threaded view

|

More ♦

♦

| Loading... |

| Reply to author |

| Edit post |

| Move post |

| Delete this post |

| Delete this post and replies |

| Change post date |

| Print post |

| Permalink |

| Raw mail |

Re: We are receiving imu data (buffer=37), but cannot interpolate imu transform at time. IMU won't be added to graph.

|

Thank you very much for the reply.

I set the focus in the camera for further objects and extend the view - as much I could without changing the lenses, and also re-calibrated. Now odometry seems to work better, thank you! I recorded rviz output to show what is going on in the pointcloud and TF during mapping. The video: https://drive.google.com/file/d/1DWj7_HBaG6-x7ns5acTxFS90yzqQnJoV/view?usp=sharing When an update of tf between map and odom_link comes, then it creates a large shift, which doesn't seem to be correct. Before that, during the first phase when only odometry and imu was affecting the tf it seems reasonable. |

Reply |

Threaded

Open this post in threaded view

|

More ♦

♦

| Loading... |

| Reply to author |

| Edit post |

| Move post |

| Delete this post |

| Delete this post and replies |

| Change post date |

| Print post |

| Permalink |

| Raw mail |

Re: We are receiving imu data (buffer=37), but cannot interpolate imu transform at time. IMU won't be added to graph.

|

Administrator

|

It looks like there was an odometry jump, then a loop closure causing the deformation of the map. What kind of stereo is this? It felt the jump was caused by unsync left and right camera frames. You may share a rosbag if you want. |

Reply |

Threaded

Open this post in threaded view

|

More ♦

♦

| Loading... |

| Reply to author |

| Edit post |

| Move post |

| Delete this post |

| Delete this post and replies |

| Change post date |

| Print post |

| Permalink |

| Raw mail |

Re: We are receiving imu data (buffer=37), but cannot interpolate imu transform at time. IMU won't be added to graph.

|

Yes, I am eager to make it work and appreciate any help here. : )

video: https://drive.google.com/file/d/1gQouPDyBnPS7ln_D9fjWvPjvkbvAcbJp/view?usp=sharing rosbag: https://drive.google.com/file/d/1XxuafTIQJqMl89ViWZGcRrNRwIvLPM9T/view?usp=sharing Usually, when I test, I don't have as much problem with odometry itself as with loop closure. Odometry sometimes does a small shift, but it is not that bad as when a loop closure comes. I also tried with default parameters with rtabmap_launch: https://drive.google.com/file/d/1pQfnO5XzccsEvWdGytUBu1BhrkyshhLb/view?usp=sharing At general, I publish images from both cameras with the same timestamp, because those are also captured at the same moment. The cameras are two raspberry pi module 3 cameras with a distance of 100 mm between them. Maybe there is a problem with loop closure parameters, but I am just exploring that. |

Reply |

Threaded

Open this post in threaded view

|

More ♦

♦

| Loading... |

| Reply to author |

| Edit post |

| Move post |

| Delete this post |

| Delete this post and replies |

| Change post date |

| Print post |

| Permalink |

| Raw mail |

Re: We are receiving imu data (buffer=37), but cannot interpolate imu transform at time. IMU won't be added to graph.

|

Administrator

|

Are you sure? In the bag and in the second video we can see in odometry view that left and right images are shaking against each other when the camera is moving. Ideally, the images should be taken with same hardware signal on both cameras to capture exactly at the same time.  tested with: ros2 launch rtabmap_launch rtabmap.launch.py \ args:="-d" \ stereo:=true \ use_sim_time:=true \ left_image_topic:=/left/image_rect_color \ left_camera_info_topic:=/left/camera_info \ right_camera_info_topic:=/right/camera_info \ right_image_topic:=/right/image_rect ros2 bag play rosbag2_2024_10_21-10_42_19_0.db3 --clock --remap tf:=tf_old |

«

Return to Official RTAB-Map Forum

|

1 view|%1 views

| Free forum by Nabble | Edit this page |