point_cloud_aggregator

|

Hi, I would like to map with ICP odometry using a fusion of an ouster lidar and realsense L515. To do this I would like to use the rtabmap_ros/point_cloud_aggregator nodelet, and have rtabmap main node subscribe to the combined cloud as input. When I try using the aggregator, I get a "Did not receive data since 5 seconds! " error despite topics being published, and a working TF as judged by rviz. I'm asking for help in case I am missing something obvious.

Additional details: I launch the aggregator nodelet and lidar nodes with the folowing launch file: <launch> <include file="os1record.launch" /> <include file="l515.launch" /> <node pkg="tf" type="static_transform_publisher" name="l515ostf" args="0.04 0.033 -0.145 0 -1.5308 1.5708 camera_depth_optical_frame os_sensor 100" /> <node pkg="nodelet" type="nodelet" name="aggregator" args="standalone rtabmap_ros/point_cloud_aggregator> <remap from="/cloud1" to="/camera/depth/color/points" /> <remap from="/cloud2" to="/os_cloud_node/points" /> </node> </launch> Once all nodes are up, the aggregator prints the following error: [ WARN] [1644195882.024546038]: /aggregator: Did not receive data since 5 seconds! Make sure the input topics are published ("$ rostopic hz my_topic") and the timestamps in their header are set. /aggregator subscribed to (approx sync): /camera/depth/color/points, /os_cloud_node/points rostopic hz indicates the topics are being published: $ rostopic hz /camera/depth/color/points subscribed to [/camera/depth/color/points] average rate: 29.950 min: 0.008s max: 0.064s std dev: 0.01595s window: 30 average rate: 29.939 min: 0.008s max: 0.067s std dev: 0.01669s window: 60 average rate: 29.941 min: 0.008s max: 0.067s std dev: 0.01549s window: 90 average rate: 29.957 min: 0.008s max: 0.067s std dev: 0.01517s window: 120 ^Caverage rate: 29.847 min: 0.008s max: 0.067s std dev: 0.01533s window: 125 $ rostopic hz /os_cloud_node/points subscribed to [/os_cloud_node/points] average rate: 10.296 min: 0.078s max: 0.122s std dev: 0.01510s window: 10 average rate: 10.064 min: 0.069s max: 0.128s std dev: 0.01641s window: 20 average rate: 10.107 I can also see both clouds projected from the same frame_id (os_sensor in this case)  |

|

Administrator

|

You could try to increase the queue_size (default 5) of point_cloud_aggregator, though with the default 5, it should have been able to synchronize the topic.

I've seen some people having difficulty to synchronize L515 cameras with other sensors, because of how frames are stamped (sensor clock versus system clock). How is the ouster's point cloud stamped? You may try to compare the timestamps: rostopic echo /camera/depth/color/points/header/stamp rostopic echo /os_cloud_node/points/header/stamp If it seems to have a large delay, increasing queue_size could help to synchronize, but if they are not stamped with same clock, there would be time drift over time and point_cloud_aggregator may stop synchronizing after some time. cheers, Mathieu |

|

Hi Mathieu, thanks for your quick reply (and for teaching me some ROS tricks

) the timestamps were indeed at fault . ) the timestamps were indeed at fault .

L5 header: stamp: secs: 1644296679 nsecs: 479192257 frame_id: "camera_depth_optical_frame" Ouster header: seq: 363 stamp: secs: 540 nsecs: 154357870 frame_id: "os_sensor" In this case the ouster timestamps were at fault, as the sensor was simply counting the time since it had powered up. That issue was resolved by following the steps in the comments of your launch file here: https://github.com/introlab/rtabmap_ros/blob/master/launch/tests/test_ouster_gen2.launch I'm now getting a seeminly illogical PCL error. [pcl::PCLPointCloud2::concatenate] Number of fields to copy in cloud1 (3) != Number of fields to copy in cloud2 (3) Best, Alan |

|

Turns out the PCL error message was not displaying correctly. The problem had to do with the ouster cloud messages containing 9 fields ( not 3 as indicated by the error).

After consulting with fellow RTABMap enthusiast Paul D (and Google), I was able to get an aggregate cloud adding these few lines of code just before the PCL version evaluation and cloud concatenation:

if (cloud2->fields.size() != output->fields.size()) { pcl::PointCloud<pcl::PointXYZ> cloudxyz; pcl::fromPCLPointCloud2(*cloud2, cloudxyz); pcl::toPCLPointCloud2(cloudxyz, *cloud2); }

This fix is specific to my case: cloud1 (L515 clouds) happen to have three fields and converting the ouster clouds from PCL::PointCloud2 to PCL::PointXYZ and back gives the ouster scans with three fields only. The same operation could be applied to both clouds to make it more flexible, but it has the effect of dropping reflectivity, intensity, etc. For my case this is fine as I will retain the original clouds and only use the aggregate cloud for odometry. |

|

Administrator

|

Thx, it is the way to go. However, as you said, if we want to keep intensity field (or any kind of fields), we lose it during the conversion. I am not sure how to make it general, unless documenting that the node expects same point cloud type for all inputs. All point clouds would have at least a minimum of XYZ fields, so an option could be added to convert the clouds to XYZ by default, at the cost of losing the other fields (it is why I would not set that option enabled by default, if people wanted to keep the lidar intensity field). In this commit, I added xyz_output option to force the conversion to XYZ. If you can test it, that would be nice, I don't have the setup to test it right now.

cheers, Mathieu |

|

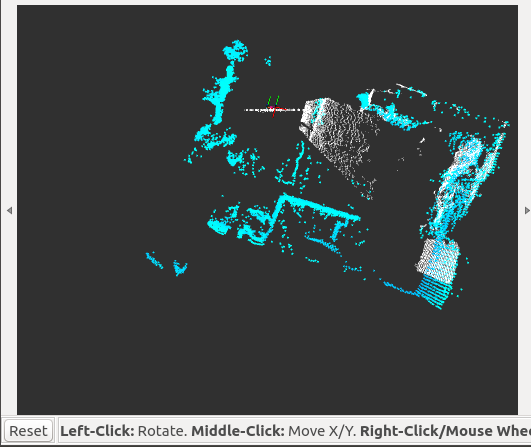

I was able to test the new commit and it seems to work well. The xyz_output option works as expected. When it is set to false I get the PCL error and the new field number warning message. Setting xyz_output to true publishes the combined cloud. My pi seems slow today compared to the last test, but with the new commit I am getting 7.4 hz, up from 7.2 hz using my implementation just prior to recompile. I will do a more thorough mapping test soon once I have my data processing computer set up properly.

|

| Free forum by Nabble | Edit this page |