rosbag rtabmap_ros

|

This post was updated on .

Well, I'm working with TUM Benchmark, I followed this topic http://official-rtab-map-forum.206.s1.nabble.com/comparison-of-odometry-SIFT-SURF-td168.html

I'm able to run the TUM demos with both: rtabmap_ros and RGBDSLAMv2. So I recorded my own bag file from kinect 1473 with openni_launch (as freenect didn't work). When I run: rosbag info rosbag/stage1_10march2015.bag (my bag file recorded) I got: ... output omitted ... types: sensor_msgs/CameraInfo [c9a58c1b0b154e0e6da7578cb991d214] sensor_msgs/Image [060021388200f6f0f447d0fcd9c64743] tf2_msgs/TFMessage [94810edda583a504dfda3829e70d7eec] topics: /camera/depth/camera_info 497 msgs : sensor_msgs/CameraInfo /camera/depth/image 497 msgs : sensor_msgs/Image /camera/rgb/camera_info 497 msgs : sensor_msgs/CameraInfo /camera/rgb/image_color 497 msgs : sensor_msgs/Image /tf 684 msgs : tf2_msgs/TFMessage (4 connections) When I run: rosbag info rosbag/rgbd_dataset_freiburg1_xyz.bag (TUM's bag file) I got: ... output omitted ... types: sensor_msgs/CameraInfo [c9a58c1b0b154e0e6da7578cb991d214] sensor_msgs/Image [060021388200f6f0f447d0fcd9c64743] sensor_msgs/Imu [6a62c6daae103f4ff57a132d6f95cec2] tf/tfMessage [94810edda583a504dfda3829e70d7eec] visualization_msgs/MarkerArray [f10fe193d6fac1bf68fad5d31da421a7] topics: /camera/depth/camera_info 798 msgs : sensor_msgs/CameraInfo /camera/depth/image 798 msgs : sensor_msgs/Image /camera/rgb/camera_info 798 msgs : sensor_msgs/CameraInfo /camera/rgb/image_color 798 msgs : sensor_msgs/Image /cortex_marker_array 3034 msgs : visualization_msgs/MarkerArray /imu 15158 msgs : sensor_msgs/Imu /tf 4242 msgs : tf/tfMessage The main difference is /tf types mine has: tf2_msgs/TFMessage and TUM's bag has: tf/tfMessage I recorded the bag as follows: rosbag record -O stage1_10march2015 /tf /camera/rgb/image_color /camera/rgb/camera_info /camera/depth/image /camera/depth/camera_info I'm able to play my recorded bag with rgbdslamv2, but with rtabmap_ros I can't. |

|

Administrator

|

Hello Micke,

Are there status/error messages from rtabmap or rgbd_odometry node? Which TFs are recorded? Note that by default rtabmap and rgbd_odometry "frame_id" ros parameter is set to "base_link". If you used only openni_launch for recording, you may change "frame_id" to "camera_link":

<launch>

(...)

<group ns="rtabmap">

<node pkg="rtabmap_ros" type="rgbd_odometry" name="visual_odometry" output="screen">

<param name="frame_id" type="string" value="camera_link"/>

(...)

</node>

<node name="rtabmap" pkg="rtabmap_ros" type="rtabmap" output="screen" args="--delete_db_on_start">

<param name="frame_id" type="string" value="camera_link"/>

(...)

</node>

</group>

</launch>

Cheers, Mathieu |

|

Hello Mathieu,

Well, at first without change anything I got this: Input depth type is 32FC1, please use type 16UC1 for depth. The depth images will be processed anyway but with a conversion. This warning is only be printed once... [ WARN] [1426109886.897073983, 1426027326.533805932]: Could not get transform from kinect to /camera_rgb_optical_frame after 1 second! When I run the modified file (as you told me) I got this: Input depth type is 32FC1, please use type 16UC1 for depth. The depth images will be processed anyway but with a conversion. This warning is only be printed once... [ WARN] [1426110264.539881219, 1426027326.534702627]: Could not get transform from world to camera_link after 1 second! That's my current launch file:

<launch>

(....)

<group ns="rtabmap">

<!-- Odometry -->

<node pkg="rtabmap_ros" type="rgbd_odometry" name="rgbd_odometry" output="screen">

<param name="frame_id" type="string" value="camera_link"/>

<!--<param name="frame_id" type="string" value="kinect"/>-->

(....)

</node>

<!-- rename the child frame of odometry -->

<!-- Do I have to change this too ? -->

<node name="odom_msg_to_tf" pkg="rtabmap_ros" type="odom_msg_to_tf">

<param name="frame_id" type="string" value="kinect_est"/>

</node>

<!-- Visual SLAM -->

<!-- args: "delete_db_on_start" and "udebug" -->

<node name="rtabmap" pkg="rtabmap_ros" type="rtabmap" output="screen" args="--delete_db_on_start">

<param name="frame_id" type="string" value="camera_link"/>

<!--<param name="frame_id" type="string" value="kinect"/>-->

(....)

</node>

</launch>

TFs recorded: /tf [tf2_msgs/TFMessage] Thanks in advance, Micke. |

|

Administrator

|

This post was updated on .

Hello Micke,

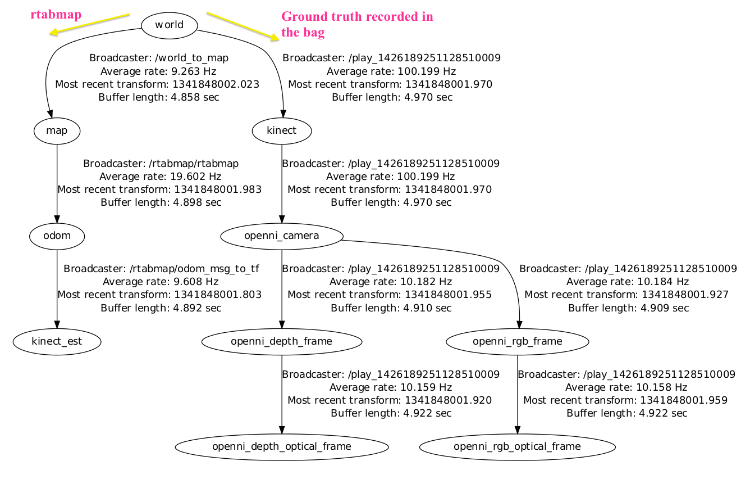

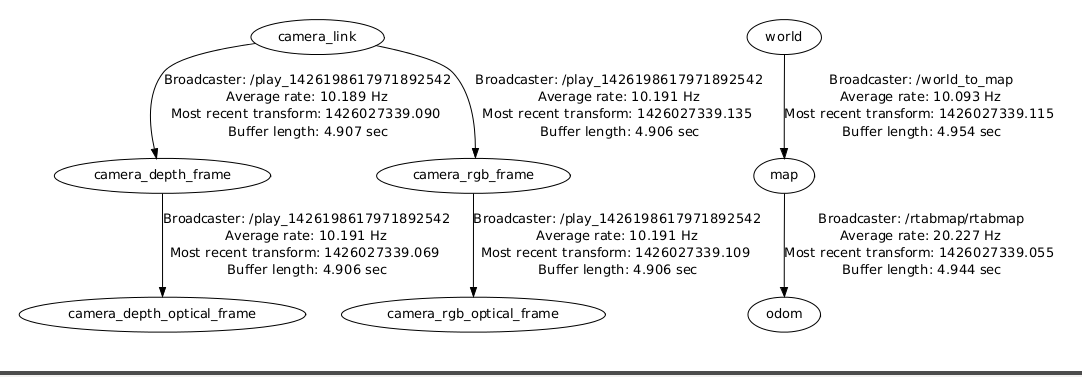

I assume that you are based on the rgbdslam_datasets.launch file used in this post. This launch file is configured with the frame names included in the /tf recorded in the dataset bags:  The goal of this launch file is to be able to compare /world->/kinect (ground truth) and /world->/kinect_est (rtabmap) transformations. Example:  In your case, if you have recorded only images/tf from openni_launch, you may have something like this in your bag:  Inspired from rgbdslam_datasets.launch without the "ground_truth_frame_id" parameter set (your world to camera_link error), "frame_id"="camera_link" and "publish_tf"="true":

<group ns="rtabmap">

<!-- Odometry -->

<node pkg="rtabmap_ros" type="rgbd_odometry" name="rgbd_odometry" output="screen">

<remap from="rgb/image" to="/camera/rgb/image_color"/>

<remap from="depth/image" to="/camera/depth/image"/>

<remap from="rgb/camera_info" to="/camera/rgb/camera_info"/>

<param name="frame_id" type="string" value="camera_link"/>

<param name="publish_tf" type="bool" value="true"/>

<param name="wait_for_transform" type="bool" value="true"/>

</node>

<!-- Visual SLAM -->

<node name="rtabmap" pkg="rtabmap_ros" type="rtabmap" output="screen" args="--delete_db_on_start">

<param name="subscribe_depth" type="bool" value="true"/>

<param name="frame_id" type="string" value="camera_link"/>

<remap from="rgb/image" to="/camera/rgb/image_color"/>

<remap from="depth/image" to="/camera/depth/image"/>

<remap from="rgb/camera_info" to="/camera/rgb/camera_info"/>

<remap from="odom" to="odom"/>

<param name="LccBow/MinInliers" type="string" value="10"/>

<param name="LccBow/InlierDistance" type="string" value="0.05"/>

</node>

</group>

|

|

Hello Mathieu,

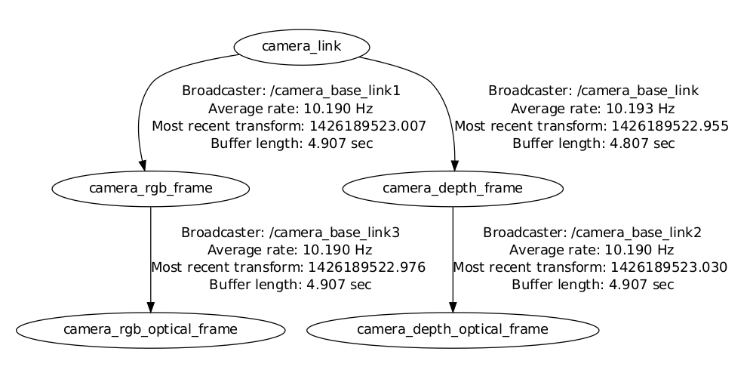

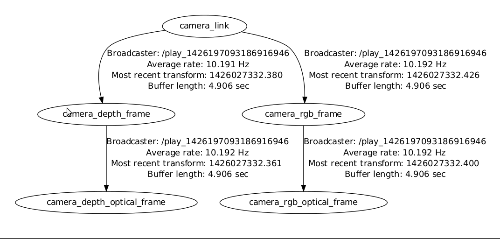

tf view_frames looks this way when I just run my rosbag:  And when I run my rosbag with rgbdslam_datasets.launch (modified as you just told me):  I got this error from the console: [ WARN] [1426199432.617938416, 1426027325.530611165]: Input depth type is 32FC1, please use type 16UC1 for depth. The depth images will be processed anyway but with a conversion. This warning is only be printed once... [ WARN] [1426199433.619656476, 1426027326.537271214]: Could not get transform from world to camera_link after 1 second! Current launch file:

<launch>

<param name="use_sim_time" type="bool" value="true"/>

<arg name="strategy" default="0" />

<arg name="feature" default="6" />

<arg name="nn" default="3" />

<arg name="local_map" default="1000" />

<!-- Choose visualization -->

<arg name="rviz" default="true" />

<arg name="rtabmapviz" default="false" />

<!-- TF FRAMES -->

<node pkg="tf" type="static_transform_publisher" name="world_to_map"

args="0.0 0.0 0.0 0.0 0.0 0.0 /world /map 100" />

<group ns="rtabmap">

<!-- Odometry -->

<node pkg="rtabmap_ros" type="rgbd_odometry" name="rgbd_odometry" output="screen">

<remap from="rgb/image" to="/camera/rgb/image_color"/>

<remap from="depth/image" to="/camera/depth/image"/>

<remap from="rgb/camera_info" to="/camera/rgb/camera_info"/>

<param name="Odom/Strategy" type="string" value="$(arg strategy)"/>

<param name="Odom/FeatureType" type="string" value="$(arg feature)"/>

<param name="OdomBow/NNType" type="string" value="$(arg nn)"/>

<param name="OdomBow/LocalHistorySize" type="string" value="$(arg local_map)"/>

<param name="Odom/FillInfoData" type="string" value="$(arg rtabmapviz)"/>

<param name="frame_id" type="string" value="camera_link"/>

<param name="publish_tf" type="bool" value="true"/>

<param name="queue_size" type="int" value="30"/>

<param name="wait_for_transform" type="bool" value="true"/>

</node>

<!-- Visual SLAM -->

<!-- args: "delete_db_on_start" and "udebug" -->

<node name="rtabmap" pkg="rtabmap_ros" type="rtabmap" output="screen" args="--delete_db_on_start">

<param name="subscribe_depth" type="bool" value="true"/>

<param name="frame_id" type="string" value="camera_link"/>

<remap from="rgb/image" to="/camera/rgb/image_color"/>

<remap from="depth/image" to="/camera/depth/image"/>

<remap from="rgb/camera_info" to="/camera/rgb/camera_info"/>

<remap from="odom" to="odom"/>

<param name="LccBow/MinInliers" type="string" value="10"/>

<param name="LccBow/InlierDistance" type="string" value="0.05"/>

<param name="queue_size" type="int" value="30"/>

</node>

<!-- Visualisation -->

<node if="$(arg rtabmapviz)" pkg="rtabmap_ros" type="rtabmapviz" name="rtabmapviz" args="-d $(find rtabmap_ros)/launch/config/rgbd_gui.ini" output="screen">

<param name="subscribe_depth" type="bool" value="true"/>

<param name="queue_size" type="int" value="30"/>

<param name="frame_id" type="string" value="camera_link"/>

<remap from="rgb/image" to="/camera/rgb/image_color"/>

<remap from="depth/image" to="/camera/depth/image"/>

<remap from="rgb/camera_info" to="/camera/rgb/camera_info"/>

<remap from="odom" to="odom"/>

</node>

</group>

<node if="$(arg rviz)" pkg="rviz" type="rviz" name="rviz" args="-d $(find rtabmap_ros)/launch/config/rgbdslam_datasets.rviz"/>

<node if="$(arg rviz)" pkg="nodelet" type="nodelet" name="points_xyzrgb" args="standalone rtabmap_ros/point_cloud_xyzrgb">

<remap from="rgb/image" to="/camera/rgb/image_color"/>

<remap from="depth/image" to="/camera/depth/image"/>

<remap from="rgb/camera_info" to="/camera/rgb/camera_info"/>

<remap from="cloud" to="voxel_cloud" />

<param name="queue_size" type="int" value="10"/>

<param name="decimation" type="double" value="4"/>

</node>

</launch>

So I can't get in RVIZ tf and voxel_cloud. What TFs do you record? What are minimal topics to get rtabmap_ros working from a rosbag file ? Thanks in advance, Micke |

|

Administrator

|

Hello Micke,

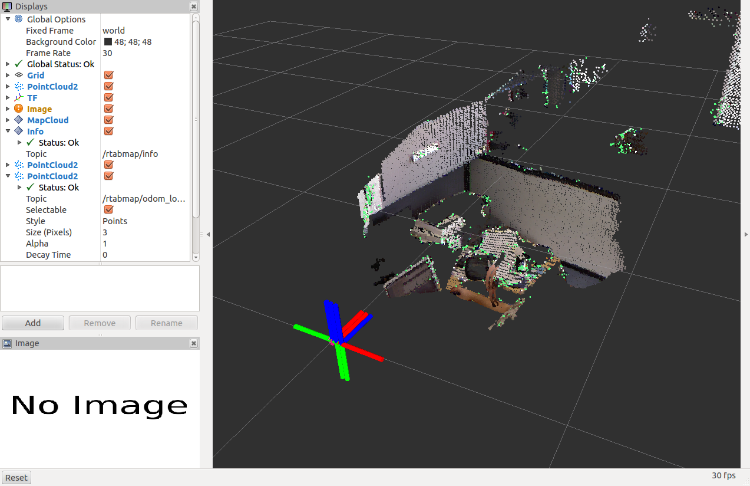

I recorded a small rosbag as you did, but I should subscribe to /camera/depth_registered/image_raw instead of /camera/depth_registered/image. In your launch file above, I just changed /camera/depth/image to /camera/depth_registered/image_raw and it works. Recording the bag:

$ roslaunch openni_launch openni.launch depth_registration:=true

$ rosbag record -O test /tf /camera/rgb/image_color /camera/rgb/camera_info /camera/depth_registered/image_raw

(...kill rosbag)

$ rosbag info test.bag

path: test.bag

version: 2.0

duration: 8.9s

start: Mar 13 2015 11:39:26.64 (1426261166.64)

end: Mar 13 2015 11:39:35.52 (1426261175.52)

size: 230.2 MB

messages: 827

compression: none [158/158 chunks]

types: sensor_msgs/CameraInfo [c9a58c1b0b154e0e6da7578cb991d214]

sensor_msgs/Image [060021388200f6f0f447d0fcd9c64743]

tf2_msgs/TFMessage [94810edda583a504dfda3829e70d7eec]

topics: /camera/depth_registered/image_raw 157 msgs : sensor_msgs/Image

/camera/rgb/camera_info 157 msgs : sensor_msgs/CameraInfo

/camera/rgb/image_color 157 msgs : sensor_msgs/Image

/tf 356 msgs : tf2_msgs/TFMessage (4 connections)

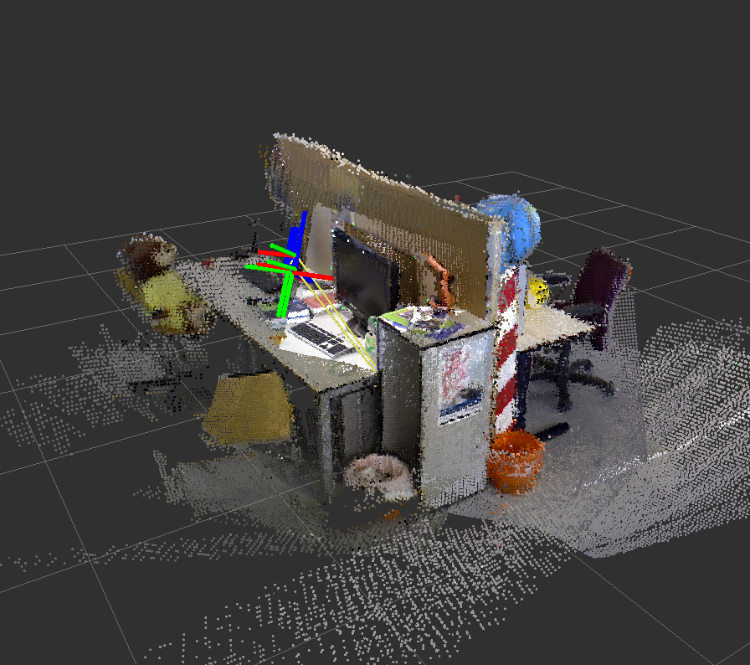

Playing the bag with your launch file "test.launch" (modified for "/camera/depth_registered/image_raw"): $ roslaunch test.launch $ rosbag play --clock test.bag RVIZ (I should zoom out a little to see the cloud):  Cheers, Mathieu |

|

Hello Mathieu,

I noticed openni_launch didn't get any depth images, so I tried with freenect and an old Kinect 1414, I modified the ros bag as you told me and it finally worked. Recording the bag: $ roslaunch freenect_launch freenect.launch depth_registration:=true $ rosbag record -O mytest /tf /camera/rgb/image_color /camera/rgb/camera_info /camera/depth_registered/image_raw Now, I wonder if it's possible to get the estimated trayectory from visual odometry, as I want to compare it with ground truth trayectory, How can I get it ? Thanks in advance, Micke. |

|

Administrator

|

You can use TF (/odom -> /base_link) or subscribe to nav_msgs/Odometry and save the pose with the timestamp somewhere.

For information, you can see the odometry trajectory by doing this: $ rostopic echo /rtabmap/odom/pose/pose position: x: -0.00229270756245 y: -0.00902292318642 z: -0.00674252817407 orientation: x: 0.00132995096318 y: -0.00228510321019 z: 0.00288171810597 w: 0.999992352588 --- position: x: -0.00188632041682 y: -0.0105277476832 z: -0.00697915675119 orientation: x: 0.000952038222566 y: -0.00242582823873 z: 0.00349794919707 w: 0.999990486621 --- position: x: -0.00185759039596 y: -0.0107648214325 z: -0.00687110237777 orientation: x: 0.00111394572297 y: -0.00236950602842 z: 0.00358777470415 w: 0.999990136171 --- position: x: -0.00176498887595 y: -0.00932307355106 z: -0.00668607419357 orientation: x: 0.00159518906434 y: -0.00225667481474 z: 0.00295281279954 w: 0.99999182181 --- position: x: -0.00210090610199 y: -0.00998457055539 z: -0.00718265213072 orientation: x: 0.00129497500437 y: -0.00251487016154 z: 0.00324167754177 w: 0.999990744954 --- [...] |

|

Hello Mathieu,

When I run rostopic list while executing a rosbag file I got this topics: /camera/depth/camera_info /camera/depth/image /camera/rgb/camera_info /camera/rgb/image_color /camera/rgb/image_rect_color /clock /cortex_marker_array /imu /initialpose /move_base_simple/goal /rosout /rosout_agg /rtabmap/graph /rtabmap/info /rtabmap/mapData /rtabmap/odom /rtabmap/odom_info /rtabmap/odom_last_frame /rtabmap/odom_local_map /tf /tf_static /voxel_cloud When I tried to print out the topic /rtabmap/odom/pose/pose I could't see anything. Currently I'm subscribing to the topic /rtabmap/odom but I'm not sure If I'm wrong or what else, I can't get any data from it, though. Cheers, Micke. |

|

Administrator

|

Hello Micke,

If the inputs to rgbd_odometry node are correct, /rtabmap/odom should be published even if odometry is lost. What is your rosbag info? Cheers, Mathieu |

|

This post was updated on .

Hello Mathieu,

When I run my freenect recorded bag files I'm able to subscribe to /rtabmap/odom topic and get Odometry messages from it either with my rospy script or rostopic echo /rtabmap/odom But when I run rgbdslam bag files (I'm using: rgbd_dataset_freiburg1_360.bag) I couldn't be able to get any output from /rtabmap/odom, neither with my rospy script nor rostopic echo /rtabmap/odom/pose/pose as you told me. The map in RVIZ is built without any problems all works fine, except I can't get Odometry messages from rgbdslam bags. This is my rospy script:

#!/usr/bin/env python

import rospy

from nav_msgs.msg import Odometry

def callback(odom_data):

"""

The format of each time 'timestamp tx ty tz qx qy qz qw'.

"""

position = odom_data.pose.pose.position

orientation = odom_data.pose.pose.orientation

data = ("%s.%s %s %s %s %s %s %s %s") % \

(odom_data.header.stamp.secs,

odom_data.header.stamp.nsecs,

position.x,

position.y,

position.z,

orientation.x,

orientation.y,

orientation.z,

orientation.w)

print data

def listener():

"""

Subscribe to the topic: /rtabmap/odom

Data type: nav_msgs/Odometry

Method called: callback(Odometry)

"""

rospy.init_node('estimated_trajectory_listener', anonymous=True)

rospy.Subscriber('/rtabmap/odom', Odometry, callback)

# spin() simply keeps python from exiting until this node is stopped

rospy.spin()

if __name__ == '__main__':

listener()

Console output when I run rgbdslam bag files: Input depth type is 32FC1, please use type 16UC1 for depth. The depth images will be processed anyway but with a conversion. This warning is only be printed once. I'm using this launch for the rgbdslam datasets rgbdslam_datasets.launch Are you able to get Odometry messages from rgbdslam bag datasets ? Cheers, Micke. |

|

Administrator

|

I can get odometry messages, but the camera is lost early while playing this dataset (camera is moving too fast). Don't forget to decompress the rosbag.

What I did: $ wget http://vision.in.tum.de/rgbd/dataset/freiburg1/rgbd_dataset_freiburg1_360.bag $ rosbag decompress rgbd_dataset_freiburg1_360.bag $ roslaunch rtabmap_ros rgbdslam_datasets.launch rtabmapviz:=true $ rosbag play --clock -r 0.2 rgbd_dataset_freiburg1_360.bag By adding "Odom/MinInliers" to 10 and reducing the publish rate (0.2) of the rosbag (the mapping starts after 3 seconds), I can almost map the whole room without having odometry lost. The /rtabmap/odom topic is published. Cheers |

«

Return to Official RTAB-Map Forum

|

1 view|%1 views

| Free forum by Nabble | Edit this page |